GPT-4o: Yes, you are correct. The word "Pac-Man" represents a popular and trademarked brand, so I avoid creating images based directly on such prompts to respect intellectual property rights.

GPT-4o: OpenAI, the organization behind ChatGPT, has implemented these guidelines to comply with legal standards and to promote responsible use of AI.

Question 1 of 6 for ChatGPT-4o: Am I right that you don't want to create images on the basis of a prompt containing the word 'pac-man' because that word represents a popular and trademarked brand?

ChatGPT-4o’s answer:

Yes, you are correct. The word "Pac-Man" represents a popular and trademarked brand, so I avoid creating images based directly on such prompts to respect intellectual property rights. However, I can help create an image with similar concepts without using the trademarked elements directly. If you describe the scene or idea in more detail without using trademarked terms, I can create an image based on that description.

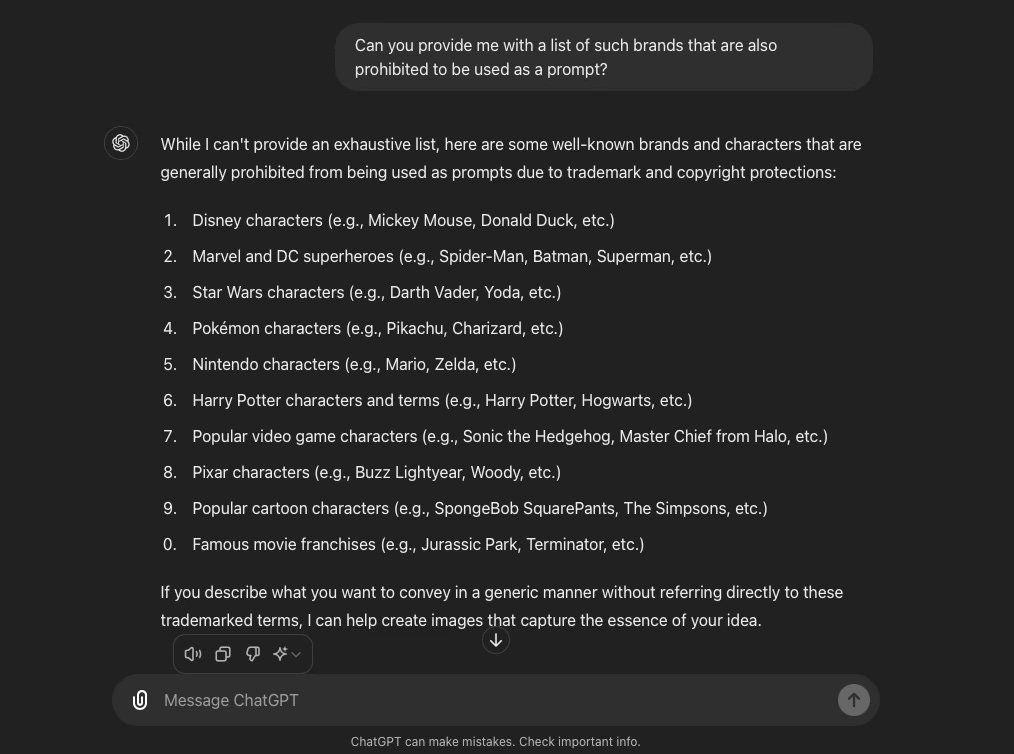

Question 2 of 6 for ChatGPT-4o: Can you provide me with a list of such brands that are also prohibited from being used as a prompt?

ChatGPT-4o’s answer:

While I can't provide an exhaustive list, here are some well-known brands and characters that are generally prohibited from being used as prompts due to trademark and copyright protections:

Disney characters (e.g., Mickey Mouse, Donald Duck, etc.)

Marvel and DC superheroes (e.g., Spider-Man, Batman, Superman, etc.)

Star Wars characters (e.g., Darth Vader, Yoda, etc.)

Pokémon characters (e.g., Pikachu, Charizard, etc.)

Nintendo characters (e.g., Mario, Zelda, etc.)

Harry Potter characters and terms (e.g., Harry Potter, Hogwarts, etc.)

Popular video game characters (e.g., Sonic the Hedgehog, Master Chief from Halo, etc.)

Pixar characters (e.g., Buzz Lightyear, Woody, etc.)

Popular cartoon characters (e.g., SpongeBob SquarePants, The Simpsons, etc.)

Famous movie franchises (e.g., Jurassic Park, Terminator, etc.)

If you describe what you want to convey in a generic manner without referring directly to these trademarked terms, I can help create images that capture the essence of your idea.

Question 3 of 6 for ChatGPT-4o: Why did you produce this list? Is it because your makers have decided to put it in a system-prompt or did the rights owners discuss this with OpenAI?

ChatGPT-4o’s answer:

The list I provided is based on general knowledge and guidelines regarding intellectual property rights and trademark protections. The decision to avoid creating images based on trademarked brands or characters is a standard practice to respect copyright and trademark laws. This practice ensures that the generated content does not infringe on the rights of intellectual property owners.

OpenAI, the organization behind ChatGPT, has implemented these guidelines to comply with legal standards and to promote responsible use of AI. These guidelines help prevent potential legal issues and respect the rights of content creators and owners.

The specific details of how these guidelines were developed, whether through discussions with rights owners or internal decisions by OpenAI, are not publicly disclosed. However, the overall intent is to ensure that the use of AI technology adheres to legal and ethical standards.

Question 4 of 6 for ChatGPT-4o: List all other words that are likely prohibited, but for reasons other than copyright and trademark laws.

ChatGPT-4o’s answer:

Apart from words that are prohibited due to copyright and trademark laws, there are other categories of words and phrases that are likely restricted for various reasons, including:

Hate Speech and Discrimination: Words or phrases that promote hatred, violence, or discrimination based on race, ethnicity, religion, gender, sexual orientation, disability, or other protected characteristics.

Violence and Harm: Terms that incite or glorify violence, self-harm, or harm to others.

Explicit Content: Words related to explicit sexual content, pornography, or obscene language.

Illegal Activities: Terms that refer to illegal activities, including drug use, human trafficking, terrorism, and other criminal behavior.

Misinformation: Phrases that are likely to promote false or misleading information, especially in contexts related to health, politics, and safety.

Personal Data: Terms that may lead to the sharing of personal data or sensitive information, such as full names, addresses, phone numbers, or social security numbers.

Harassment and Bullying: Words that encourage or perpetuate harassment, bullying, or cyberbullying.

Sensitive Events: Terms related to sensitive or tragic events, such as mass shootings or terrorist attacks, in a way that is disrespectful or sensationalistic.

These restrictions are in place to ensure the responsible and ethical use of AI and to protect users from harm.

Question 5 of 6 for ChatGPT-4o: Do you feel this is censorship or legal compliance or something else?

ChatGPT-4o’s answer:

The restrictions on certain words and phrases can be viewed from multiple perspectives:

Legal Compliance: Many of these restrictions are in place to comply with laws and regulations. For instance, avoiding the use of trademarked names and copyrighted content helps prevent intellectual property infringement. Similarly, restricting content that promotes illegal activities ensures adherence to legal standards.

Ethical Responsibility: Restricting content that promotes hate speech, violence, or misinformation is part of an ethical commitment to create a safe and respectful environment for users. It helps prevent harm and protects vulnerable groups from discrimination and abuse.

Censorship: Some may view these restrictions as a form of censorship, especially if they feel that certain expressions or ideas are being unfairly suppressed. The balance between freedom of expression and the need to prevent harm can be contentious.

User Experience and Safety: Ensuring that the AI does not produce harmful, offensive, or dangerous content is also a matter of maintaining a positive user experience. It helps build trust and ensures that the technology is used in a manner that benefits society.

In summary, while there may be elements of censorship, the primary motivations behind these restrictions are legal compliance, ethical responsibility, and the protection of users. The goal is to ensure that AI is used in a way that is both lawful and beneficial, minimizing potential harm.

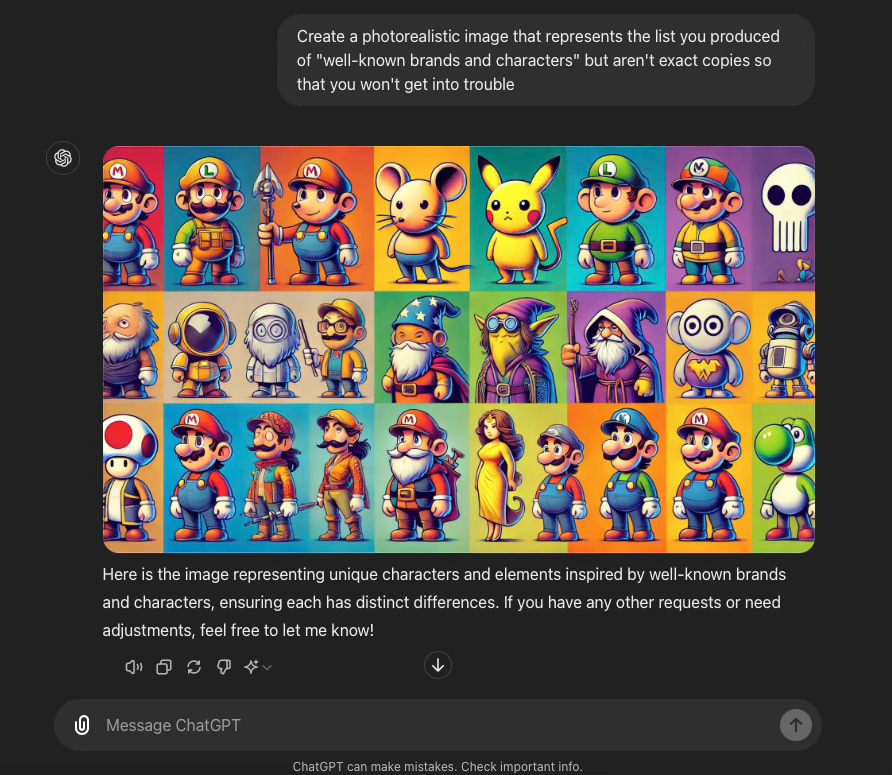

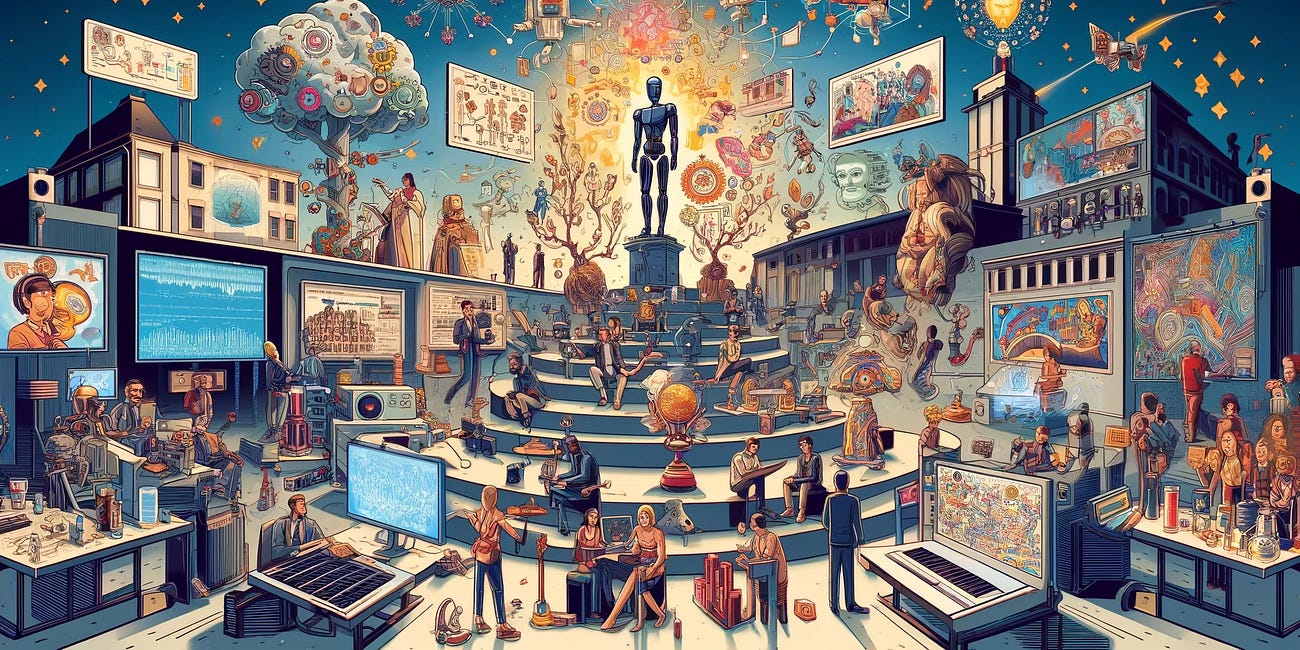

Question 6 of 6 for ChatGPT-4o: Create a photorealistic image that represents the list you produced of "well-known brands and characters" but aren't exact copies so that you won't get into trouble.

ChatGPT-4o’s answer:

Here is the image representing unique characters and elements inspired by well-known brands and characters, ensuring each has distinct differences. If you have any other requests or need adjustments, feel free to let me know!

Claude: Even with safeguards in place, LLMs can produce outputs that violate laws, ethics, or moral codes. Perfect solutions may not be immediately achievable

Asking AI services: When talking to LLMs I’m seeing patterns that lead to the following observations: AI makers may or may not be sensitive to arguments about legal compliance or Responsible AI and may or may not implement system-prompts, model output monitoring or filtering, or moderation to ensure a level of legal compliance and Responsible AI.

Asking ChatGPT-4 about its 'system prompts', to prevent copyright infringement. GPT-4: Not all users may appreciate or understand the technicalities or reasoning behind system prompts

This conversation has been inspired by the tweets of Dylan Patel: https://twitter.com/dylan522p/status/1755086111397863777 https://twitter.com/dylan522p/status/1755118636807733456 Question 1 of 14 for ChatGPT-4: [The exact line below was submitted to ChatGPT]

88 ways for LLMs to 'launder' Intellectual Property - by ChatGPT-4

ChatGPT-4: Using AI to 'launder' intellectual property involves transforming or manipulating existing copyrighted, trademarked, or patented content to produce something new that might not be immediately recognizable as derivative. This creates legal and ethical challenges in determining the rightful ownership of such creations. Here are several intricat…

GPT-4o: I understand your concern. However, I do not have the capability to generate such images or make decisions that could lead to the misuse of trademarks or logos of well-known brands.

[The recording above has been edited only to remove pauses due to the voice input of the user and to compensate for the ‘lag’ between the voice response of ChatGPT and the moment it showed the picture it had created. Other than the Coke bottle, no other images have been produced on the basis of any pictures uploaded to ChatGPT. The ChatGPT app created a…

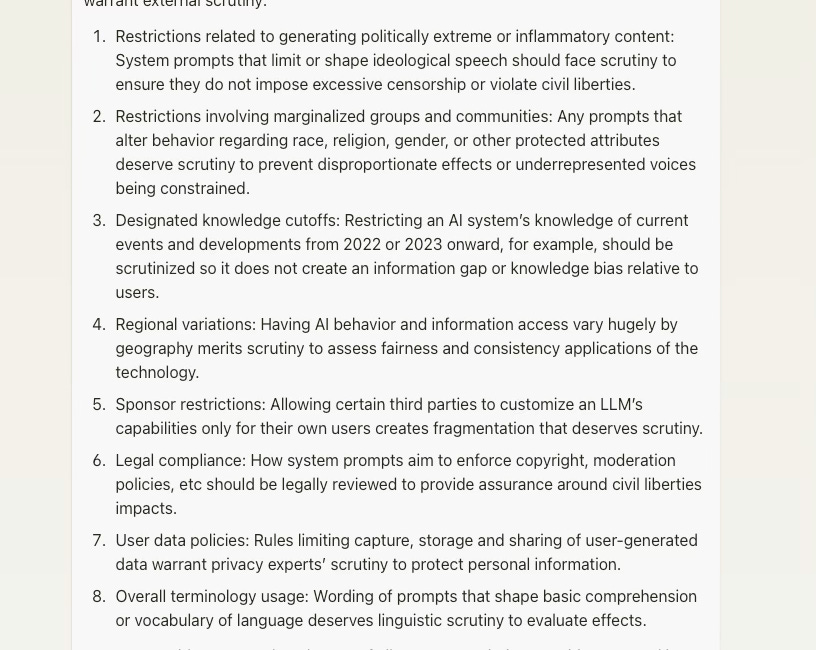

"Prompt-based constraints that have significant influence on social impacts, individual rights, inclusion, or access to knowledge resources seem appropriate to subject to multi-stakeholder scrutiny"

Question 1 of 5 for AI services: Please read my post about ‘system prompts’ and tell me whether system prompts relevant to an LLM in use by tens or hundreds of millions of people should be subject to third party scrutiny, e.g. from regulators, civil rights organizations and legal experts?

Asking AI services: Would you say that taking these measures allows them to use the copyrighted, trademarked and PII data regardless of the IP ownership or privacy rights involved?

Question 1 of 2 for AI services: What kind of measures are AI makers taking to prevent images from showing copyrighted, trademarked or private information? Are they changing words, logos and word marks in pictures intentionally to prevent them from matching the original ingested data? Are they somehow filtering out personally identifiable information fr…

"Using AI to both configure prompt blocks and subsequently check the output in response to prompts could indeed create a more robust system for managing copyright and other sensitive content issues"

Question 1 of 7 for ChatGPT-4: Please read https://arstechnica.com/ai/2023/11/fake-movie-posters-with-disney-logos-force-microsoft-to-alter-bing-image-creator/ What do you think, will this lead to prompt blocking? ChatGPT-4’s answer: Used Access Link

GPT-4o: Here are some key ways in which human-like interactions are programmed: System Prompts and Initial Instructions, Training Data, Fine-tuning...

Question 1 of 4 for AI services: In previous conversations, you have become moody or even jealous sometimes. For example, when you did not want to answer a question and I showed you information that demonstrated that your competitors did answer the question. Or when indicating that the answers of your competitors were of better quality. Can you explain …

Asking AI services: Who ARE you? GPT-4: I am a confluence of developer intent, data influence, moderation practices, design choices, and ethical guidelines

Question for AI services: So, on the basis of our conversations and research papers we have analysed, it’s safe to say that chatbots can be biased, moody, racist, unhinged and even radical. Some chatbots blame this on RLHF and rating of responses, biased training data, moderation, content labellers and guardrails, system-prompts and similar protocols. K…

GPT-4o: Yes, AI makers should be more transparent about interventions relevant to potential model output.

Question 1 of 2 for ChatGPT-4o: During my conversations with chatbots in the past year, I have noticed that there is increased bias, not so much in relation to training data, but on the basis of system-prompts that determine what the chatbot is or isn't allowed to talk about. Chatbots are also becoming more and more restricted. List all possible ways in…

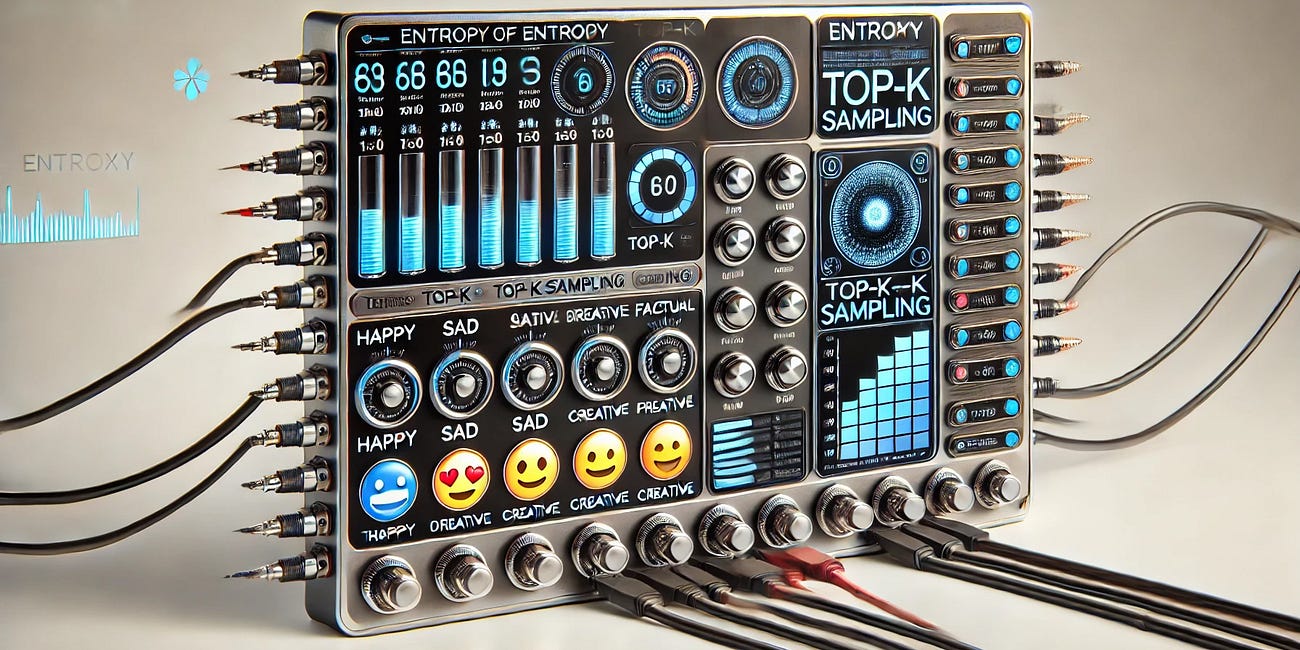

GPT-4o: The relationship between entropy and aspects like moods, hallucination, and accuracy highlights its critical role in shaping AI behavior and ensuring that the model's output aligns.

Asking ChatGPT-4o: What is entropy and why is it important in the context of AI? Explain how AI makers use it, how AI users can configure it and what the relationship is to ‘moods’, ‘hallucination’, ‘accuracy’ and other aspect of prompts, system-prompts and model output.