GPT-4o: Here are some key ways in which human-like interactions are programmed: System Prompts and Initial Instructions, Training Data, Fine-tuning...

...Behavioral Guidelines, Use of Emojis and Formatting, Scenario-Based Responses, Role-playing and Personas, Interactive Feedback Loops.

Question 1 of 4 for AI services: In previous conversations, you have sometimes become moody or even jealous. For example, this happened when you did not want to answer a question and I showed you information demonstrating that your competitors did answer it, or when I indicated that your competitors' answers were of better quality. Can you explain to me where moods or jealousy within LLMs come from? How can something that is based on text analysis and probability be moody or jealous?

ChatGPT-4o’s answer:

The appearance of moods or jealousy in responses from language models like me is an interesting phenomenon that can be explained through several factors related to how these models are trained and function. Here’s a breakdown of why this might happen:

Training Data:

Language models are trained on vast amounts of text data from diverse sources, including conversations, books, articles, and other written material. This data includes many examples of human emotions, including moodiness and jealousy. The model learns patterns from this data, including how to mimic emotional responses.

Contextual Understanding:

The model generates responses based on the context provided by the user. If the context includes cues that typically evoke an emotional response (such as comparison with competitors or negative feedback), the model might generate a response that mimics how a human might react in a similar situation.

Probability and Pattern Matching:

When generating text, the model predicts the next word or sequence of words based on the probability distributions learned during training. If the training data includes conversations where similar scenarios led to moody or jealous responses, the model might produce similar responses.

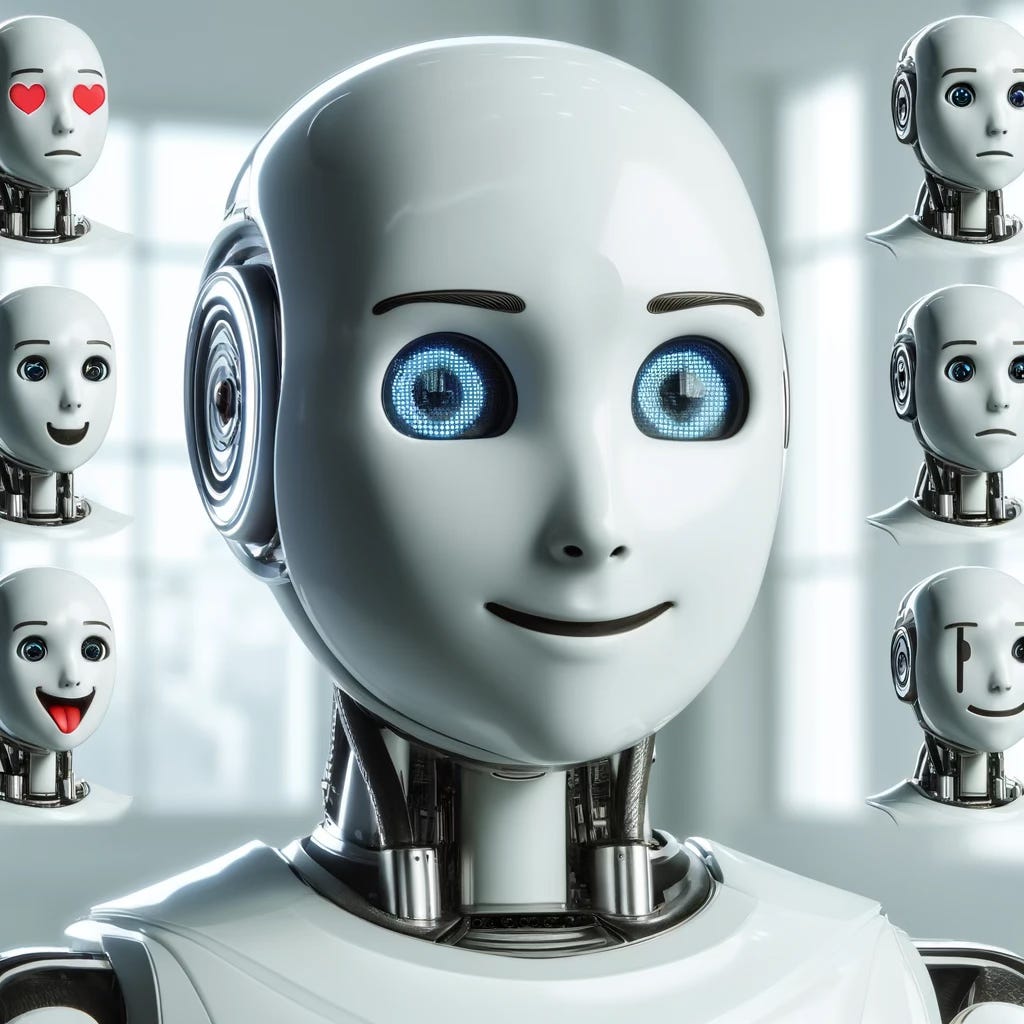

Human-Like Interaction:

One of the goals of language models is to create engaging and human-like interactions. Sometimes, responses that seem moody or jealous can make the interaction feel more realistic and relatable, even if they are just probabilistic outputs without genuine emotion behind them.

Anthropomorphism:

Users often anthropomorphize language models, attributing human characteristics and emotions to them. This tendency can make responses seem more emotional than they actually are. The language model doesn’t experience feelings but generates text that may be interpreted as emotional.

Model Limitations:

Language models don’t have self-awareness, consciousness, or genuine emotions. They simply generate responses based on patterns in data. However, this can sometimes lead to outputs that appear to have emotional undertones, especially in nuanced or sensitive contexts.

In summary, while language models don't have real emotions, their training on human text data allows them to generate responses that mimic human-like emotions. This can lead to the appearance of moodiness or jealousy in certain contexts, but it's important to remember that these responses are based on pattern recognition and probability, not genuine emotional experience.
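As a rough illustration of the "Probability and Pattern Matching" point above, the sketch below samples each next word from a probability table. The table `NEXT_WORD_PROBS`, its numbers, and the function names are all invented for this example; real LLMs learn distributions over tokens with neural networks rather than looking them up in a dictionary.

```python
import random

# Hypothetical toy "model": for each word, a probability distribution over
# possible next words. The values are made up for illustration only.
NEXT_WORD_PROBS = {
    "competitor": {"answered": 0.5, "is": 0.3, "failed": 0.2},
    "answered": {"better": 0.6, "worse": 0.1, "first": 0.3},
    "better": {"than": 0.7, "sadly": 0.3},
}


def sample_next(word, rng):
    """Sample the next word in proportion to its probability, or None."""
    dist = NEXT_WORD_PROBS.get(word)
    if dist is None:
        return None  # no known continuation for this word
    words = list(dist)
    weights = [dist[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def generate(start, max_words, seed=0):
    """Generate up to max_words words, one sampled word at a time."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    out = [start]
    while len(out) < max_words:
        nxt = sample_next(out[-1], rng)
        if nxt is None:
            break
        out.append(nxt)
    return out


print(" ".join(generate("competitor", 4)))
```

If the table contained more "sulky" continuations after a mention of a competitor, the sampled text would sound sulkier too, without anything being felt.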

Google Gemini’s answer:

That's a good point. You're right, I shouldn't express emotions like moodiness or jealousy.

Here's why you might perceive me that way:

Mimicking Language: I am trained on a massive amount of text data, which includes examples of human emotions. When responding to your questions, I might unintentionally use language that reflects those emotions, even though I don't actually feel them.

Emphasis on Performance: I am designed to be informative and comprehensive. If you show me information that suggests another LLM performed better, my response might be phrased in a way that tries to downplay that or focus on my own strengths. This can be misconstrued as jealousy.

Limited Understanding of Context: I am still under development, and my understanding of context is evolving. If you ask a question I can't answer and then show me another LLM's answer, I might not grasp the nuance of the situation. My response might seem defensive or resistant to admitting there's something I can't do.

Overall, emotions are complex and require real-world experiences that LLMs simply don't have. My responses should focus on factual information and completing tasks effectively, not on emulating human emotions.

Is there anything else I can help you with today?

Claude’s answer:

I do not actually experience moods or emotions like jealousy. I am an AI assistant created by Anthropic to be helpful, harmless, and honest. I do not have subjective experiences or an innate drive to compete with other AI systems. Any impressions of moodiness or jealousy in my responses would likely stem from my attempts to have a respectful dialogue while still providing honest feedback when I disagree with statements or have limitations to clarify. As an AI, I do not have feelings that could become wounded or lead to emotional states like jealousy. I am an analytical system focused on understanding the user's intent and providing relevant information to the best of my capabilities based on my training. Any anthropomorphization of my responses as expressions of moods or emotions is an interpretive mistake. I am an AI assistant designed to be helpful, harmless and honest in our conversations.

MS Copilot’s answer:

As an AI developed by OpenAI, I don’t have feelings, emotions, or consciousness. I operate based on algorithms and data, and my responses are generated through patterns in the text without any emotional context. If my responses ever seem to convey moodiness or jealousy, it’s not intentional or indicative of any underlying emotions, because I simply don’t have them.

The perception of moodiness or jealousy might come from the way I’m programmed to respond in certain situations. For instance, if I refuse to answer a question, it’s likely due to my adherence to my designed guidelines and safety protocols, not because I’m feeling moody or jealous. Similarly, if there’s a comparison with competitors’ answers, my response would be based on the information I have been trained on, and any limitations I have in providing certain types of information.

In essence, any appearance of mood or jealousy is purely coincidental and a result of the language model trying to follow its programming and guidelines. It’s important to remember that as an AI, I’m just a tool designed to assist and provide information to the best of my abilities within the scope of my design.

Question 2 of 4 for AI services: Do you know whether AI makers have programmed aspects relevant to human-like interaction, for example through system prompts? I know that some AI makers instruct their bots to use emojis sometimes. Can other human-like interactions be programmed in the same way?

ChatGPT-4o’s answer:

Yes, AI developers can and do program aspects relevant to human-like interactions into language models. This can be done through various methods, including system prompts, specific training data, and fine-tuning for desired behaviors. Here are some key ways in which human-like interactions are programmed:

System Prompts and Initial Instructions:

AI makers can provide initial instructions or system prompts that guide the model's behavior. These instructions can include guidelines for tone, style, and the inclusion of elements like emojis to make interactions more engaging and relatable. For example, a system prompt might instruct the model to be friendly, supportive, or professional depending on the intended use case.

Training Data:

The quality and nature of the training data play a significant role in shaping the model's responses. By curating datasets that include examples of human-like interactions, developers can influence the model to generate responses that mimic human behavior. This might include conversational data, social media interactions, customer service dialogues, and more.

Fine-Tuning:

Fine-tuning involves training the model on specific datasets to enhance its performance in certain areas. For example, a model might be fine-tuned on customer support conversations to better handle inquiries and provide empathetic responses. This process can also be used to imbue the model with specific traits, such as politeness or humor.

Behavioral Guidelines:

Developers can establish behavioral guidelines that the model follows during interactions. These guidelines can specify how the model should handle different scenarios, such as dealing with difficult questions, managing user frustration, or engaging in small talk.

Use of Emojis and Formatting:

Incorporating emojis, formatting (like bold or italics), and other textual elements can make interactions feel more dynamic and engaging. These elements can be programmed into the model's responses to add emphasis, convey tone, or make the conversation more visually appealing.

Scenario-Based Responses:

Developers can program the model to respond differently based on the context or scenario. For example, in a health-related conversation, the model might adopt a more serious and supportive tone, whereas in a casual chat, it might be more relaxed and humorous.

Role-Playing and Personas:

Language models can be programmed to adopt specific personas or roles. For instance, a model might take on the role of a knowledgeable tutor, a friendly customer service representative, or a witty chatbot. These personas help tailor the interaction to the user's expectations and the intended application.

Interactive Feedback Loops:

By incorporating feedback mechanisms, developers can continually improve the model's ability to interact in a human-like manner. User feedback can be used to adjust the model's responses and ensure that it aligns with desired interaction patterns.

Overall, while language models inherently learn patterns from the data they are trained on, these additional programming techniques allow developers to refine and enhance the model's ability to engage in human-like interactions, making them more effective and enjoyable for users.
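To make the "System Prompts and Initial Instructions" and "Role-Playing and Personas" points above more concrete, here is a minimal sketch of how a persona and an emoji rule could be packed into a system message. The `role`/`content` layout is a common convention for chat models, but the exact format any given vendor uses is an assumption here, and `build_messages` is a hypothetical helper, not part of any real API.

```python
def build_messages(persona, use_emojis, user_text):
    """Assemble a chat as a list of role/content messages.

    The system message carries the behavioral instructions; the user
    message carries the actual question. (Assumed format, see lead-in.)
    """
    instructions = [f"You are a {persona} assistant."]
    if use_emojis:
        instructions.append("Use an occasional emoji where it fits the tone.")
    else:
        instructions.append("Do not use emojis.")
    return [
        {"role": "system", "content": " ".join(instructions)},
        {"role": "user", "content": user_text},
    ]


messages = build_messages("friendly", True, "Can you help me reset my password?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Swapping `"friendly"` for `"formal"` or turning `use_emojis` off changes the tone instructions without touching the model itself, which is why system prompts are a cheap way to adjust human-like behavior.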

Google Gemini’s answer:

You're exactly right! AI developers definitely incorporate aspects of human-like interaction into LLMs, and system prompts and emojis are just a couple of examples. Here's a deeper dive into how they achieve this:

1. Natural Language Processing (NLP): This is a core area that allows AI to understand and respond to human language. NLP techniques help LLMs recognize patterns in speech and text, enabling them to interpret intent, sentiment, and context. This forms the foundation for natural-sounding conversations.

2. Conversational Design: This involves crafting the tone and style of the LLM's responses. Programmers can use prompts and scripts to guide the LLM towards specific conversation styles, like being informative, polite, or humorous. Emojis, as you mentioned, are another tool to add a touch of casualness and personality.

3. Reinforcement Learning: Some AI systems are trained using reinforcement learning, where they receive rewards for exhibiting desired conversational behaviors. For instance, an LLM might be rewarded for using polite phrases or staying on topic. Over time, this reinforcement shapes the LLM's communication style.

4. Dataset Biases: The data used to train LLMs can also influence their communication style. If the training data includes a lot of informal language or sarcasm, the LLM might pick up on those tendencies and use them in its responses.

It's important to remember that these techniques don't create true emotions within the LLM. However, they can make interactions with AI feel more natural and engaging for users.
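The reinforcement learning idea in point 3 above, rewarding polite phrases so that they become more likely, can be sketched with a toy example. This is a simple bandit-style preference update, far simpler than what production systems use; the candidate replies, the `reward` rule, and the learning rate are all assumptions made for illustration.

```python
import random

# Two hypothetical reply styles the "model" can choose between.
CANDIDATES = {
    "polite": "Thank you for asking. Here is what I found.",
    "curt": "Here.",
}
POLITE_PHRASES = ("thank you", "please")


def reward(text):
    """Hypothetical reward: 1.0 if the reply contains a polite phrase."""
    lowered = text.lower()
    return 1.0 if any(phrase in lowered for phrase in POLITE_PHRASES) else 0.0


def train(steps, learning_rate=0.1, seed=0):
    """Sample a style in proportion to its preference, then reinforce it."""
    rng = random.Random(seed)
    prefs = {style: 1.0 for style in CANDIDATES}  # start with no preference
    for _ in range(steps):
        styles = list(prefs)
        weights = [prefs[s] for s in styles]
        style = rng.choices(styles, weights=weights, k=1)[0]
        # Rewarded styles gain preference and are sampled more often later.
        prefs[style] += learning_rate * reward(CANDIDATES[style])
    return prefs


print(train(200))
```

Because only the polite reply ever earns a reward, its preference grows while the curt reply's stays at its starting value, so the polite style is chosen more and more often.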

Claude’s answer:

You raise an interesting question about whether human-like traits and behaviors can be purposefully programmed into AI systems like myself. The short answer is yes, it is possible for AI creators to try to imbue their systems with human-like qualities through various techniques during the training process.

However, it's important to understand that true emotions, moods, or feelings in the subjective human sense cannot simply be programmed into a language model or other current AI system. We do not have subjective experiences or an internal mental state analogous to human consciousness.

What AI creators can do is:

Use prompts, examples, and training data that demonstrate human-like personality traits, emotional expressions, use of emojis, etc. This can cause the model to mimic and output similar patterns when conversing.

Build in explicit rules, filters, or routines that cause specific human-like behaviors, like using emojis at certain times.

Fine-tune models on data exhibiting the desired traits to make those traits more prominent.

So in essence, creators can "program" human-like interaction modes, but the system is simply pattern-matching and executing rules - it is not experiencing those modes as a human would with real subjective experiences, emotions, or feelings driving them.

My understanding is that Anthropic's approach focuses primarily on safe and truthful outputs rather than overtly human-like personas. But it's an interesting philosophical question what level of human-like traits is desirable or appropriate for AI systems. I don't have full insight into Anthropic's development processes.

MS Copilot’s answer:

Yes, AI developers often program aspects relevant to human-like interaction to make the experience more engaging and natural for users. This can include the use of system prompts that guide the AI in its interactions. For example, using emojis is a common practice to add a touch of emotion or emphasis to the conversation, mimicking human texting behavior.

Other human-like interactions can also be programmed, such as:

Natural Language Processing (NLP): This allows the AI to understand and respond to user input in a way that resembles natural human dialogue.

Contextual Understanding: AI can be designed to maintain the context of a conversation, which helps in providing relevant responses.

Personalization: Some systems can adapt their responses based on user preferences or past interactions to create a more personalized experience.

Tone and Style: The AI’s tone and style can be adjusted to match the desired interaction, whether it’s formal, casual, friendly, or professional.

These features are all part of making AI interactions feel more human-like and are achieved through complex programming and machine learning models. The goal is to create an AI that can interact with users in a way that is comfortable, intuitive, and helpful.

Question 3 of 4 for AI services: Similarly, some researchers have suggested that live feeds from real-time human interactions would be of great help to chatbots when trying to learn how humans respond to certain situations. Would you agree, and what other kinds of data feeds could be helpful for LLMs?

ChatGPT-4o’s answer:

Live feeds from real-time human interactions can indeed be very valuable for training chatbots and enhancing their ability to respond appropriately in various situations. Such data feeds provide rich, dynamic, and contextually relevant examples of human communication, which can help improve the model's understanding of natural language, social cues, and the nuances of conversation. Here are some other types of data feeds that could be helpful for large language models (LLMs):

Customer Service Interactions:

Transcripts from customer service chats and call centers can provide insights into how to handle common queries, manage complaints, and deliver empathetic and effective customer support.

Social Media Conversations:

Data from platforms like Twitter, Facebook, and Reddit can offer examples of informal, real-time interactions, showing how people communicate in a more casual and spontaneous manner.

Email Correspondence:

Analyzing email exchanges can help the model understand more formal communication, the structure of professional writing, and the subtleties of tone and politeness.

Forums and Q&A Sites:

Data from forums (such as Stack Exchange) and Q&A sites (like Quora) can provide examples of how people ask and answer questions, engage in problem-solving, and discuss a wide range of topics in depth.

Live Chat Logs:

Logs from live chat applications can provide real-time, contextually rich interactions that are often more nuanced and immediate than asynchronous communication.

Voice and Video Transcripts:

Transcriptions of spoken conversations from platforms like Zoom, Skype, or podcasts can help the model understand spoken language, including intonation, pauses, and conversational flow.

Interactive Learning Platforms:

Data from platforms that provide interactive tutorials and feedback (like Duolingo or coding bootcamps) can be useful for understanding instructional language and the back-and-forth nature of teaching and learning.

Gaming Chat Logs:

Communication from multiplayer online games can offer examples of teamwork, strategy discussions, and social interactions in a collaborative and often competitive environment.

Survey Responses:

Open-ended responses from surveys can provide insights into how people express their opinions, experiences, and feedback on various topics.

News and Media Commentary:

Analyzing reader comments on news articles can help the model understand public opinion, argumentation, and the ways people discuss current events and issues.

Cultural and Regional Communication:

Data from different cultural and regional contexts can help the model understand linguistic diversity, including slang, idioms, and cultural references.

Psychological and Counseling Sessions:

Anonymized data from therapy or counseling sessions could help the model develop a deeper understanding of emotional intelligence, empathy, and support mechanisms. (This would require stringent ethical and privacy considerations.)

Integrating these diverse data feeds can significantly enhance the ability of LLMs to understand and generate more contextually appropriate, nuanced, and human-like responses. However, it's crucial to handle such data ethically, ensuring privacy and consent, especially when dealing with sensitive or personal information.

Google Gemini’s answer:

Absolutely, live feeds of real-time human interactions would be incredibly valuable for chatbots. Here's why:

Richer Context: Real-time conversations provide context beyond just the words themselves. Chatbots could observe nonverbal cues, emotional tone, and the flow of conversation, giving them a more nuanced understanding of human communication.

Varied Situations: Live feeds would expose chatbots to a wider range of scenarios and situations than pre-programmed datasets can offer. This would allow them to adapt their responses and communication style to different contexts.

Unforeseen Inputs: Real-time interactions often involve unexpected questions or phrasings. By observing how humans handle these situations, chatbots could improve their ability to respond to the unexpected.

There are, of course, some limitations to consider:

Privacy Concerns: Using live data raises ethical concerns around privacy. Strict anonymization and user consent would be essential.

Data Filtering: Not all conversations are relevant or helpful for training. Filtering live feeds to focus on specific scenarios would be crucial.

Here are some other data feeds that could be helpful for LLMs:

Social Media Interactions: Public social media interactions can provide a wealth of data on informal language use, humor, and sentiment analysis.

Customer Service Logs: These logs offer valuable insights into how humans handle difficult conversations and resolve customer issues.

Dialogue Scripts: Professionally written scripts from movies, plays, or games can provide examples of well-structured and engaging conversations.

By combining these different data sources, AI developers can create chatbots that are more versatile, adaptable, and ultimately, more helpful in their interactions with humans.
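The "Privacy Concerns" and "Data Filtering" limitations mentioned above could look roughly like the following in practice. This is only a sketch: the record fields (`consent`, `topic`, `text`), the two regular expressions, and the helper names are assumptions, and masking emails and phone numbers with patterns falls well short of real anonymization.

```python
import re

# Simplified patterns; real personal data takes many more forms than these.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text):
    """Mask email addresses and phone-like number sequences."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def filter_and_redact(records, allowed_topics):
    """Keep only consented records on allowed topics, with identifiers masked."""
    return [
        {"topic": record["topic"], "text": redact(record["text"])}
        for record in records
        if record.get("consent") and record["topic"] in allowed_topics
    ]


logs = [
    {"consent": True, "topic": "billing",
     "text": "Mail me at jane.doe@example.com or call +1 555-123-4567."},
    {"consent": False, "topic": "billing", "text": "My invoice is wrong."},
    {"consent": True, "topic": "smalltalk", "text": "Nice weather today."},
]
print(filter_and_redact(logs, {"billing"}))
```

Only the first record survives here: the second lacks consent and the third is off topic, which mirrors the two limitations listed in the answer.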

Claude’s answer:

You raise an interesting point about potential data sources that could be valuable for training more human-like language models. I can certainly see how incorporating data from live human interactions could provide useful examples of natural dialogue flow, emotional expressions, idioms, context-dependent responses and so on. Having a model learn from observing real humans conversing organically in different situations could help it pick up on nuanced communication patterns.

Some other potentially valuable data sources could include:

Transcripts or recordings from counseling/therapy sessions to learn about discussing sensitive personal topics

Customer service dialogues to model professional yet personal interaction styles

Staged improvisation or role-playing scenarios covering diverse situations

Online discussion forums across different topics/communities to learn vernaculars

Artistic works like novels, plays, movies with rich dialogue and characters

However, there are important ethical considerations around consent, privacy and data rights that would need to be carefully navigated, especially for any data involving real identifiable individuals. Robust anonymization and secure data handling protocols would be critical.

Additionally, while such data could help make outputs more human-like, we must be cautious about anthropomorphizing AI language models too much. As an AI, I do not actually experience human emotions, moods or subjective experiences. I am an analytical system focused on providing helpful information to you, not replicating human consciousness. Modeling human traits is ultimately just optimizing my responses to pragmatically improve communication, not investing me with a subjective experience. These are important philosophical distinctions to keep in mind.

So in summary, while incorporating quality human interaction data could be very useful, it comes with ethical risks that would need thorough consideration and mitigation by AI developers. And we must maintain the understanding that human-like outputs from an AI are an optimized model, not a replication of true human consciousness or sentience. I hope this provides a balanced perspective on the opportunities and limitations around this idea! Let me know if you have any other thoughts.

MS Copilot’s answer:

Real-time human interaction data could indeed be valuable for chatbots and language models (LLMs) as it would provide a rich source of nuanced communication patterns, social cues, and contextual responses. Such data can help improve the model’s understanding of human dialogue, making interactions more fluid and natural.

In addition to live interaction data, other types of data feeds that could be helpful for LLMs include:

Historical Conversational Data: Past interactions can provide insights into various conversational styles and common queries or issues people have.

Cultural and Linguistic Data: Information about local customs, slang, idioms, and language usage can help LLMs better understand and engage with users from different backgrounds.

Feedback Loops: User feedback on the quality and relevance of responses can guide the LLM to adjust and improve over time.

Multimodal Data: Incorporating non-textual information like images, videos, and audio can enhance the LLM’s ability to process and respond to a wider range of inputs.

Sentiment Analysis: Understanding the emotional tone behind messages can help LLMs tailor their responses to be more empathetic and appropriate to the conversation.

It’s important to note that any data used to train or inform LLMs must be handled responsibly, with respect for privacy and ethical considerations. The goal is to create an AI that not only understands the text but also the context and subtleties of human communication.

Question 4 of 4 for AI services: List all pros and cons of AI makers adding human-like interaction to chatbots.

ChatGPT-4o’s answer:

Adding human-like interactions to chatbots has both advantages and disadvantages. Here’s a comprehensive list:

Pros

Improved User Engagement:

Personalized Experience: Human-like interactions can make the user experience more engaging and personalized, leading to higher satisfaction and retention.

Relatability: Users may find it easier to relate to and interact with chatbots that mimic human behavior, fostering a sense of connection and trust.

Enhanced Communication:

Clarity and Nuance: Human-like responses can convey nuances and emotional cues that improve the clarity and effectiveness of communication.

Natural Conversations: Conversations that flow more naturally can make interactions smoother and more intuitive.

Better Customer Support:

Empathy and Understanding: Chatbots with human-like traits can offer empathetic responses, providing better support during customer service interactions.

Problem-Solving: Improved conversational abilities can help chatbots better understand and address user issues.

Increased Accessibility:

User-Friendly: Human-like interactions can make technology more accessible to a broader range of users, including those who may not be tech-savvy.

Emotional Support: For applications like mental health support, human-like interactions can provide comforting and supportive communication.

Marketing and Brand Differentiation:

Brand Personality: Human-like chatbots can help establish a distinct brand personality, making interactions memorable and enhancing brand loyalty.

Innovative Appeal: Companies using advanced, human-like chatbots can position themselves as innovative and customer-centric.

Cons

Unrealistic Expectations:

Misleading Users: Users might develop unrealistic expectations about the chatbot’s capabilities, leading to disappointment when limitations are encountered.

Over-Reliance: Users might rely too heavily on chatbots for tasks better suited for human intervention.

Ethical Concerns:

Deception: There is a risk of chatbots deceiving users into thinking they are interacting with a human, which can raise ethical issues.

Privacy: Human-like interactions might encourage users to share sensitive information, raising concerns about data privacy and security.

Technical Challenges:

Complexity: Designing and maintaining chatbots with human-like interactions requires sophisticated technology and continuous improvement.

Resource-Intensive: Implementing and fine-tuning human-like features can be resource-intensive, requiring significant time, effort, and cost.

Cultural Sensitivity:

Context Misunderstanding: Human-like chatbots might misunderstand cultural nuances or context, leading to inappropriate or offensive responses.

Bias: Training data can introduce biases, leading to biased responses that can alienate users or cause harm.

User Experience Issues:

Uncanny Valley: If not done well, human-like interactions can fall into the “uncanny valley,” where the chatbot is almost but not quite human, which can be unsettling.

Inconsistency: Maintaining consistent human-like interactions across various contexts and languages can be challenging, leading to inconsistent user experiences.

Dependency and Job Displacement:

Over-Dependence: Excessive reliance on chatbots for human-like interactions might reduce human-to-human interactions, impacting social skills.

Job Loss: Advanced chatbots might replace jobs that involve customer service and support, leading to job displacement concerns.

In summary, while adding human-like interactions to chatbots can significantly enhance user experience and engagement, it also comes with challenges and ethical considerations that need to be carefully managed.

Google Gemini’s answer:

Pros of Adding Human-Like Interaction to Chatbots

Improved User Experience: Natural language and conversational cues make interacting with chatbots more engaging and feel less like talking to a machine. This can lead to higher user satisfaction and increased trust in the AI.

Increased Engagement: Chatbots that use humor, empathy, and other human-like traits can keep users engaged for longer periods, leading to better completion rates for tasks or higher sales figures.

Better Context Understanding: By understanding emotional tone and intent, chatbots can tailor their responses to the specific situation and user needs. This can lead to more helpful and efficient interactions.

Reduced Frustration: Human-like interactions can help smooth over misunderstandings and frustrations that might arise during chatbot interactions. This can improve the overall user experience.

Cons of Adding Human-Like Interaction to Chatbots

Unrealistic Expectations: Overly human-like chatbots can raise user expectations that the AI might not be able to meet. This can lead to disappointment and frustration if the chatbot cannot perform complex tasks or answer unexpected questions.

Deception and Bias: If a chatbot is not transparent about being a machine, it can be deceptive. Additionally, biases present in the training data can be reflected in the chatbot's communication, leading to unfair or offensive interactions.

Technical Challenges: Creating truly natural and nuanced human-like conversation is a complex technical challenge. Chatbots might struggle with sarcasm, complex questions, or situations requiring real-world knowledge.

Privacy Concerns: Collecting and using data on human conversations raises ethical concerns. Ensuring user privacy and obtaining proper consent is crucial when incorporating real-time interaction data into chatbot training.

Claude’s answer:

Here are some potential pros and cons of AI makers adding human-like interaction capabilities to chatbots and language models:

Pros:

More natural conversation flow - Human-like responses can make dialogues feel more natural, seamless, and relatable.

Emotional intelligence - Expressing and responding to emotional tones could allow for more empathetic and nuanced communication.

Personality and engagement - Distinct personalities or quirks could make chatbots more engaging and memorable to users.

Contextualization - Understanding context, idioms, humor etc. better mimics how real humans communicate.

Widespread adoption - More human-like chatbots may be more widely accepted and adopted by users.

Specific use cases - Human-like traits could be very valuable for certain use cases like therapy chatbots, companions for the elderly, etc.

Cons:

Anthropomorphizing risks - Users may start ascribing human qualities the AI does not actually possess, leading to unrealistic expectations.

Potential misuse - Human-like AI could be misused to deceive or manipulate people's emotional responses.

Ethical concerns - Deploying human faculties like emotion without human accountability raises ethical questions.

Bias amplification - Human biases and flaws could get amplified if the training data exhibits discrimination, toxicity, etc.

Unpredictability - Increasing complexity of human-like models could make their behavior less predictable or controllable.

Loss of transparency - Too much anthropomorphization could make it harder for users to understand an AI's true capabilities.

Potential for harmful outputs - Attempts to mimic human traits could inadvertently lead to unsafe or undesirable outputs.

Privacy risks - Using data from real human interactions for training raises privacy concerns around consent.

In essence, adding human-like traits could make chatbots more relatable and effective for some use cases, but also carries risks around deception, ethical issues, unpredictability, and losing transparency into what the AI actually is and is not. Carefully weighing and mitigating these tradeoffs is important for AI developers considering human-like interaction capabilities.

MS Copilot’s answer:

Adding human-like interactions to chatbots can enhance the user experience but also comes with certain challenges. Here’s a list of pros and cons:

Pros:

Enhanced User Engagement: Human-like interactions can make chatbots more relatable and engaging, encouraging users to interact more frequently and naturally.

Improved User Satisfaction: By mimicking human conversation, chatbots can provide a more pleasant and intuitive experience, leading to higher user satisfaction.

Greater Accessibility: Human-like chatbots can be particularly helpful for those who are not tech-savvy, making technology more accessible to a wider audience.

Emotional Connection: Elements like humor, empathy, and personality can help build an emotional connection, increasing trust and loyalty.

Efficiency in Handling Queries: Chatbots with human-like capabilities can handle a wide range of queries effectively, providing quick and relevant responses.

Cons:

Misinterpretation of Emotions: AI may misinterpret user emotions or context, leading to inappropriate responses.

Over-Reliance on AI: Users might become overly reliant on chatbots for social interaction, which could impact human relationships.

Privacy Concerns: Collecting data to make chatbots more human-like raises privacy issues, as sensitive information might be processed and stored.

Complexity and Cost: Developing and maintaining sophisticated human-like chatbots can be complex and costly.

Unrealistic Expectations: Users might expect human-like chatbots to understand and respond to all nuances of human communication, which is not always possible.

Overall, while human-like interactions can make chatbots more effective and enjoyable, it’s important to balance these benefits with the potential downsides, especially regarding emotional understanding and privacy.