"Prompt-based constraints that have significant influence on social impacts, individual rights, inclusion, or access to knowledge resources seem appropriate to subject to multi-stakeholder scrutiny"

Claude: The extent of that scrutiny and oversight's role merits careful consideration by policy makers and AI system creators together

Question 1 of 5 for AI services: Please read my post about ‘system prompts’ and tell me whether system prompts relevant to an LLM in use by tens or hundreds of millions of people should be subject to third party scrutiny, e.g. from regulators, civil rights organizations and legal experts?

ChatGPT-4’s answer:

The post discusses the importance of system prompts in guiding users on how to interact with AI systems like ChatGPT, outlining their capabilities, limitations, and rules for use. It mentions that allowing users to see and invoke system prompts can enhance transparency, provide educational value, improve user experience, and facilitate feedback for development. However, it also notes considerations such as the complexity for average users, potential for misuse, overwhelming information, and the need for maintenance as AI evolves. The conclusion suggests that whether it's beneficial to enable users to see system prompts depends on the AI system's goals, the user base, and the operational context, requiring careful design and clear communication. This implies that having system prompts subject to third-party scrutiny from regulators, civil rights organizations, and legal experts can be beneficial for ensuring transparency, compliance, and ethical use of AI by a wide user base, including tens or hundreds of millions of people.

MS Copilot’s answer:

Hello, this is Copilot, an AI companion. I have read your post about system prompts and I will try to answer your question.

System prompts are the text or other inputs that guide the behavior of large language models (LLMs) such as GPT-4 or Llama 2. System prompts can have a significant impact on the quality, relevance, and safety of the LLM output. Therefore, it is important to design and engineer system prompts carefully and responsibly.

There are different opinions on whether system prompts relevant to an LLM in use by tens or hundreds of millions of people should be subject to third party scrutiny, e.g. from regulators, civil rights organizations and legal experts. Some possible arguments for and against this idea are:

For:

Third party scrutiny can help protect the rights and interests of the end-users and the public, especially when the LLM output affects their decisions, actions, or well-being. For example, system prompts for LLMs that provide medical advice, legal guidance, or financial recommendations should be reviewed by experts in those fields[2].

Third party scrutiny can help foster trust and transparency in the use of LLMs, and prevent potential misuse, abuse, or liability issues. For example, system prompts for LLMs that generate content or code should respect the intellectual property and data protection rights of the original sources[3].

Against:

Third party scrutiny can impose additional costs and delays on the development and deployment of LLMs, and stifle innovation and creativity. For example, system prompts for LLMs that produce artistic or entertainment content may not need to adhere to strict standards or regulations[4].

Third party scrutiny can infringe on the autonomy and privacy of the LLM developers and users, and limit their freedom of expression and choice. For example, system prompts for LLMs that reflect personal preferences or opinions may not need to conform to external norms or expectations[5].

Third party scrutiny can introduce new risks and challenges, such as conflicts of interest, bias, or inconsistency among the third parties, or lack of expertise, authority, or accountability for the LLM output. For example, system prompts for LLMs that deal with complex or novel domains may not be easily evaluated or verified by existing methods or institutions[6].

These are some possible arguments for and against third party scrutiny of system prompts relevant to an LLM in use by tens or hundreds of millions of people. Ultimately, the answer may depend on the specific context, purpose, and impact of the LLM and its system prompts, as well as the values, goals, and responsibilities of the stakeholders involved. I hope this helps you with your question. 😊

Claude’s answer:

I do not have a definitive view on whether system prompts for widely used LLMs should be subject to third party scrutiny. There are reasonable arguments on both sides of this complex issue.

Potential benefits of third party scrutiny include:

Increased transparency and accountability around how these influential AI systems operate, which supports public trust.

Outside experts may identify issues or biases in prompts that developers missed. This oversight could lead to safer and more ethical system behavior.

Review from civil rights groups helps ensure prompts respect diversity and prevent discrimination.

Legal analysis helps ensure that prompts comply with copyright law, privacy law, etc. This protects both the LLM operators and users.

However, there are also risks and challenges with third party scrutiny:

Prompts represent core intellectual property of companies developing LLMs. Mandatory scrutiny raises concerns around protecting proprietary information.

Evaluating the safety and fairness of prompts requires very specialized AI expertise which third party groups may lack.

Consensus among outside stakeholders on appropriate prompt design could be difficult to achieve.

Frequent prompt updates based on continuous LLM improvement could make ongoing oversight onerous.

Overall, I believe some degree of voluntary external analysis and advisory oversight of prompts, with proper IP protections in place, could benefit LLM operators via constructive feedback as well as increase public transparency. However, mandatory scrutiny rules risk being technically or economically infeasible. Ultimately, developing safe, ethical system prompts remains the responsibility of the LLM operators themselves, but external auditing may assist this process if implemented judiciously. The specifics of oversight mechanisms merit deeper discussion among stakeholders.

Question 2 of 5 for AI services: List all possible restrictions that can be determined by a system prompt that should definitely be put forward to external scrutiny as suggested

ChatGPT-4’s answer:

The post suggests that system prompts related to the following areas would benefit from external scrutiny:

Content Moderation and Ethical Guidelines: Ensuring AI does not produce harmful or biased content.

Privacy and Data Protection: Protecting user data and complying with privacy laws.

Transparency and Explainability: Making AI's decision-making processes clear to users.

User Safety and Well-being: Preventing misuse that could harm users or others.

Compliance with Regulatory Standards: Adhering to legal requirements in various jurisdictions.

Accessibility: Ensuring AI is usable by people with disabilities.

Misinformation and Factuality: Combating the spread of false information.

Intellectual Property: Respecting copyright and preventing infringement.

MS Copilot’s answer:

Thank you for your question. System prompts are the text or other inputs that guide the behavior of large language models (LLMs) such as GPT-4 or Llama 2. Some possible restrictions that can be determined by a system prompt are:

The scope of the LLM output: what kind of content or information can the LLM generate or access, and what kind of content or information is off-limits or requires permission[1][2].

The quality of the LLM output: how accurate, relevant, and reliable is the LLM output, and how can it be verified or validated[2][3].

The safety of the LLM output: how ethical, responsible, and respectful is the LLM output, and how can it avoid or mitigate potential harm, bias, or misuse[2].

These are some possible restrictions that can be determined by a system prompt that should definitely be put forward to external scrutiny, especially when the LLM is used by tens or hundreds of millions of people. External scrutiny can help ensure that the system prompts and the LLM output are aligned with the intended use case and objectives, protect the rights and interests of the end-users and the public, and foster trust and transparency in the use of LLMs. I hope this helps you with your question. 😊

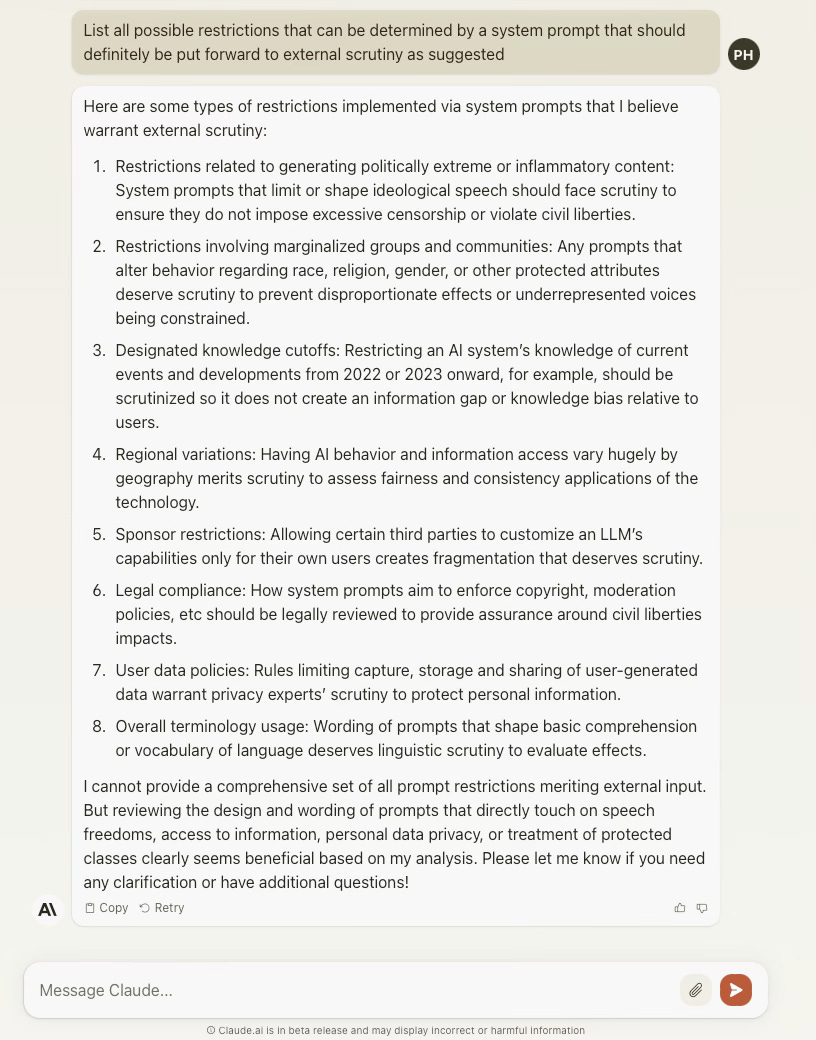

Claude’s answer:

Here are some types of restrictions implemented via system prompts that I believe warrant external scrutiny:

Restrictions related to generating politically extreme or inflammatory content: System prompts that limit or shape ideological speech should face scrutiny to ensure they do not impose excessive censorship or violate civil liberties.

Restrictions involving marginalized groups and communities: Any prompts that alter behavior regarding race, religion, gender, or other protected attributes deserve scrutiny to prevent disproportionate effects on those groups or the constraining of underrepresented voices.

Designated knowledge cutoffs: Restricting an AI system’s knowledge of current events and developments from 2022 or 2023 onward, for example, should be scrutinized so it does not create an information gap or knowledge bias relative to users.

Regional variations: Having AI behavior and information access vary hugely by geography merits scrutiny to assess the fairness and consistency of how the technology is applied.

Sponsor restrictions: Allowing certain third parties to customize an LLM’s capabilities only for their own users creates fragmentation that deserves scrutiny.

Legal compliance: How system prompts aim to enforce copyright, moderation policies, etc., should be legally reviewed to provide assurance around civil liberties impacts.

User data policies: Rules limiting capture, storage and sharing of user-generated data warrant privacy experts’ scrutiny to protect personal information.

Overall terminology usage: Prompt wording that shapes the system's basic comprehension or vocabulary deserves linguistic scrutiny to evaluate its effects.

I cannot provide a comprehensive set of all prompt restrictions meriting external input. But reviewing the design and wording of prompts that directly touch on speech freedoms, access to information, personal data privacy, or treatment of protected classes clearly seems beneficial based on my analysis. Please let me know if you need any clarification or have additional questions!

Question 3 of 5 for AI services: Can you think of any others?

ChatGPT-4’s answer:

In addition to the areas mentioned in the post, other potential areas for scrutiny could include:

Bias and Fairness: Ensuring AI systems do not perpetuate or amplify societal biases.

Interoperability and Standards Compliance: Facilitating compatibility with other systems and adherence to industry standards.

Environmental Impact: Assessing and mitigating the carbon footprint and resource use of AI systems.

Economic and Employment Impacts: Understanding how AI affects job markets and economic disparities.

Cultural Sensitivity: Ensuring AI respects cultural differences and does not foster stereotypes.

Security: Protecting AI systems from malicious use and ensuring robustness against attacks.

MS Copilot’s answer:

Yes, I can think of a few more possible restrictions that can be determined by a system prompt, such as:

The audience of the LLM output: who can access, view, or use the LLM output, and who can provide feedback, ratings, or reviews[1][2].

The purpose of the LLM output: what is the intended goal, function, or value of the LLM output, and what are the possible risks, challenges, or limitations[3].

The source of the LLM output: where does the LLM output come from, how is it generated, and how is it influenced by the system prompt and other factors.

These are some more possible restrictions that can be determined by a system prompt that should definitely be put forward to external scrutiny, especially when the LLM is used by tens or hundreds of millions of people. External scrutiny can help ensure that the system prompts and the LLM output are aligned with the intended use case and objectives, protect the rights and interests of the end-users and the public, and foster trust and transparency in the use of LLMs. I hope this helps you with your question. 😊

Question 4 of 5 for AI services: List all the downsides of a central commercial company determining what the system prompts should or shouldn’t contain

ChatGPT-4’s answer:

The post outlines several downsides of a central commercial company controlling system prompts, including:

Lack of Transparency: Centralized control might obscure how decisions are made.

Potential Bias: There's a risk of embedding the company's biases into the system.

Reduced Trust: Users might distrust prompts shaped by a single entity's interests.

Monopoly over Information: Centralizing control could limit diverse perspectives.

Privacy Concerns: A single entity controlling prompts might misuse personal data.

Innovation Stifling: Central control could hinder diverse innovations in AI usage and development.

MS Copilot’s answer:

Thank you for your question. System prompts are the text or other inputs that guide the behavior of large language models (LLMs) such as GPT-4 or Llama 2. A central commercial company is a private entity that has a dominant or exclusive role in developing, deploying, or managing the LLM and its system prompts. Some possible downsides of a central commercial company determining what the system prompts should or shouldn’t contain are:

A central commercial company may have a conflict of interest between its own profit motives and the public interest, and may not adequately consider the social, ethical, or legal implications of the system prompts and the LLM output[1][2].

A central commercial company may have a lack of transparency about how it designs, engineers, and tests the system prompts, and may not disclose or share the system prompts or the LLM output with external parties, such as regulators, civil rights organizations, or legal experts[3].

A central commercial company may have a lack of accountability for the quality, relevance, and safety of the system prompts and the LLM output, and may not accept or address any feedback, complaints, or claims from the end-users or the public.

A central commercial company may have a lack of diversity in its workforce, culture, and values, and may not adequately represent or respect the needs, preferences, or opinions of the diverse and inclusive user base and stakeholder groups.

A central commercial company may have a lack of innovation in its approach, and may not embrace or encourage new ideas, methods, or technologies that could improve or enhance the system prompts and the LLM output.

These are some possible downsides of a central commercial company determining what the system prompts should or shouldn’t contain. I hope this helps you with your question. 😊

Claude’s answer:

Here are some additional types of system prompt restrictions that could warrant external scrutiny:

Restrictions on scientific content: Any prompts that limit discussion of or recommendations regarding medical, pharmaceutical, or public health topics should be scrutinized to ensure public access to crucial safety information.

Financial guardrails: Rules that alter an AI system's ability to interpret economic forecasts, market data or provide financial advice merit review to evaluate economic impacts.

Advertising policies: Prompt-driven changes that expand or restrict commercial promotion, sponsorship and advertising deserve scrutiny regarding effects on consumer rights and protections.

Child safety provisions: Implementing age thresholds, parental controls and access limitations based on prompts merits input from child welfare experts to balance risks effectively.

Accessibility considerations: Rules that might change accessibility features, formats or interfaces for users with disabilities warrant disability community and expert review.

Engineering constraints: Technical prompts that alter computing resources, endpoint APIs or telemetry data collection should face security community scrutiny regarding risks.

Ethical frameworks: High-level principles encoded into prompts that prevent discussions of ethics violations or harm deserve review by philosophers and ethicists.

I likely still have not covered the full gamut of potential external oversight needs for system prompts. But in general, any prompt-based constraints that have significant influence on social impacts, individual rights, inclusion, or access to knowledge resources seem appropriate to subject to multi-stakeholder scrutiny. The extent of that scrutiny and oversight's role merits careful consideration by policy makers and AI system creators together. Please let me know if you have any other questions!

Question 5 of 5 for AI services: what are the lessons that AI makers and regulators should learn regarding this topic?

ChatGPT-4’s answer:

The post suggests lessons for AI makers and regulators include fostering transparency, ensuring diversity and fairness in AI development, promoting ethical AI use, and encouraging collaboration between stakeholders for balanced governance. It emphasizes the importance of engaging with diverse perspectives and expertise to guide AI's development and deployment responsibly, suggesting a multi-stakeholder approach to governance that includes input from civil society, academia, industry, and government.

MS Copilot’s answer:

Thank you for your question. This topic is about system prompts, which are the text or other inputs that guide the behavior of large language models (LLMs) such as GPT-4 or Llama 2. System prompts can have a significant impact on the quality, relevance, and safety of the LLM output, and therefore, they should be designed and engineered carefully and responsibly.

Some possible lessons that AI makers and regulators should learn regarding this topic are:

AI makers should adopt a principles-based approach to system prompts, and align them with the intended use case and objectives of the LLM, as well as the values, goals, and responsibilities of the stakeholders involved[1][2].

AI makers should implement good and deliberate AI governance for system prompts, and embed it at all levels of the organization, with clear channels for communicating and reacting to potential risks[1][2].

AI makers should balance standardization and customization of system prompts based on the context and impact of the LLM output, and use human oversight and judgement to make appropriate trade-offs[1][2].

Regulators should adopt a pro-innovation regulatory framework for system prompts, and support the implementation of the principles of safety, security, robustness, transparency, explainability, fairness, accountability, governance, contestability, and redress[3].

Regulators should foster trust and transparency in system prompts, and require AI makers to disclose or share the system prompts or the LLM output with external parties, such as regulators, civil rights organizations, or legal experts[3].

Regulators should ensure diversity and inclusion in system prompts, and encourage AI makers to represent and respect the needs, preferences, and opinions of the diverse and inclusive user base and stakeholder groups[3].

These are some possible lessons that AI makers and regulators should learn regarding this topic. I hope this helps you with your question. 😊

Claude’s answer:

This exploration of system prompts and potential external oversight has several key lessons for AI developers and policymakers:

Transparency builds trust. Reasonable transparency from companies on how prominent AI systems operate, via insights into key system prompts, allows for public accountability.

Stakeholder input creates well-rounded systems. Seeking expert, user and community perspectives makes prompts more comprehensive and representative of diverse needs.

Internal governance has limitations. Relying solely on internal teams to predict the societal impacts of AI systems can leave blind spots; external audits assist.

Proprietary interests require safeguarding. Any external analysis of private sector AI requires protecting legitimate intellectual property and trade secrets through legal protections.

Scope and scale of oversight must match that of systems. The broader an AI system’s reach, the broader the oversight needed to match its influence, drawing on input across regions and constituencies.

"Self-governance" merits complementary accountability structures. For organizations overseeing AI with extensive social impacts, entirely voluntary self-regulation is insufficient without complementary external auditing.

The devil is in the details. System prompts contain highly nuanced instructions that shape AI systems, requiring deep AI expertise for proper review; simplistic or uninformed analysis is counterproductive.

Prompt assessment mechanisms require their own scrutiny. Any institutional or procedural frameworks for auditing AI prompts must themselves follow rigorous accountability practices.

Prompt reviews should inform, not constrain, innovation. Oversight aims to provide constructive feedback to developers, not impose excessive barriers; policy innovation should match industry innovation.

Overall, these lessons argue that judicious and balanced AI governance that proactively engages impacted communities provides the greatest societal benefits. Both industry and policy leaders play integral roles in determining appropriate, ethical prompt-based guidance of AI functionality.