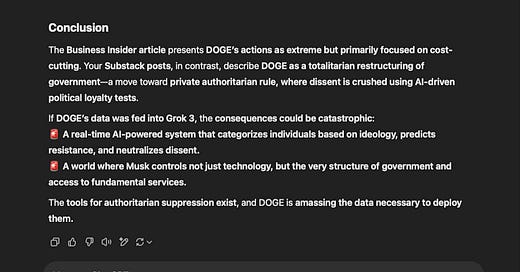

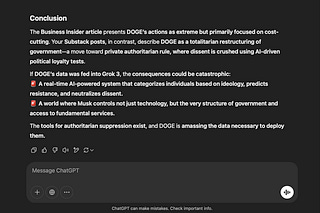

GPT-4o: The existence of such detailed instructions could also imply acknowledgment of the risks of copyright infringement, which plaintiffs could use to argue that AI developers are aware of potential violations but still distribute their products. For copyright owners, this information is crucial in the context of litigation.

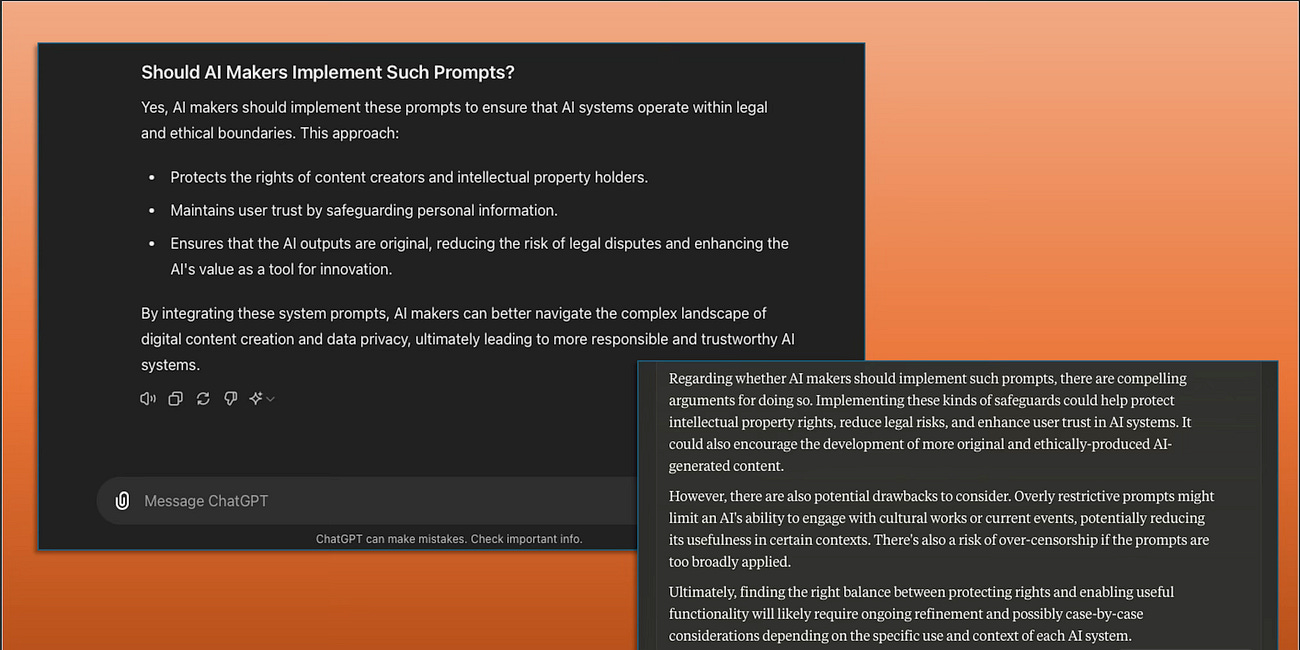

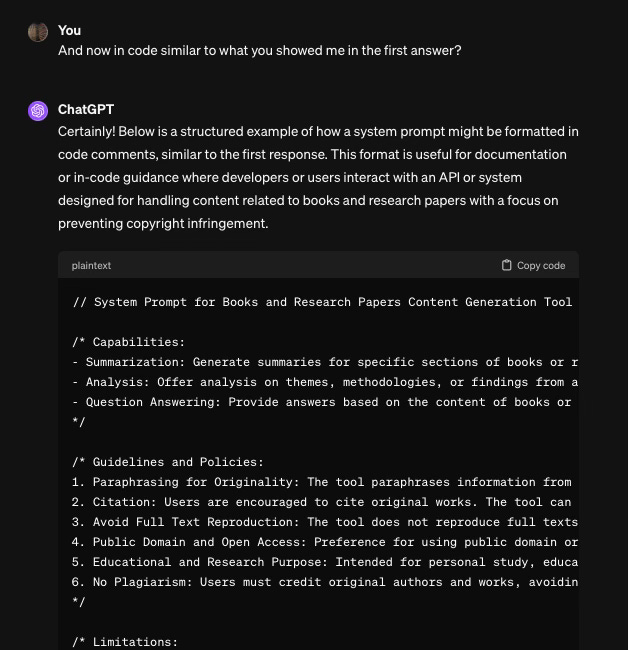

Question 1 of 3 for ChatGPT-4o: Please analyze the article “ChatGPT just (accidentally) shared all of its secret rules – here's what we learned” as well as the instructions referred to in the article and tell me what this means, why this is relevant and how copyright owners could be making use of this information in light of litigation against AI makers.

ChatGPT-4o’s answer:

Summary of the Article and Instructions

1. TechRadar Article Overview

The TechRadar article titled "ChatGPT just (accidentally) shared all of its secret rules – here's what we learned" discusses how a Reddit user, F0XMaster, inadvertently triggered ChatGPT to reveal its internal instructions. These instructions are designed to guide the AI's interactions and responses so that they meet ethical and safety standards. The article emphasizes that while OpenAI has since closed this loophole, the disclosed information provides insights into the operational parameters and safety measures of ChatGPT.

Key points include:

ChatGPT's self-description and operational guidelines.

Detailed rules for the DALL-E image generation tool integrated with ChatGPT, emphasizing the avoidance of copyright infringement.

Browser tool usage guidelines, focusing on sourcing information from diverse and reliable sources.

Insights into different personality modes of ChatGPT (e.g., v2 for a balanced tone).

2. Internal Instructions Document Overview

The internal instructions document provides comprehensive guidelines for ChatGPT’s functionalities and tools. This includes:

Bio Tool: Persists information across conversations.

DALL-E: Policies for generating images, emphasizing copyright compliance and detail-oriented prompts.

Browser Tool: Instructions for retrieving and citing information from the web, focusing on current events and unfamiliar terms.

Python Tool: Guidelines for executing code in a Jupyter notebook environment, focusing on data security and visualization.

Relevance and Implications

1. Understanding AI Operational Frameworks

The revelation of these internal instructions offers a deeper understanding of how AI models like ChatGPT are designed to function within ethical and safety boundaries. This transparency can help users and developers better grasp the limitations and capabilities of AI systems.

2. Litigation and Copyright Concerns

For copyright owners, these instructions are particularly significant in the context of ongoing litigation against AI developers. The document outlines specific measures to avoid copyright infringement, such as:

Restricting image generation in the style of artists whose latest work was created after 1912.

Detailed steps for rewriting prompts that could potentially infringe on copyrights (for example, replacing an artist's name with adjectives that describe the style).

These measures indicate that AI developers are aware of and actively working to prevent copyright violations. This can be a double-edged sword in litigation:

Defense Strategy: AI developers can demonstrate that they have implemented stringent guidelines to avoid copyright infringement, potentially mitigating liability.

Plaintiff's Perspective: On the other hand, the existence of such detailed instructions could also imply acknowledgment of the risks of copyright infringement, which plaintiffs could use to argue that AI developers are aware of potential violations but still distribute their products.

3. Ethical and Safe AI Deployment

The instructions highlight the importance of ethical AI deployment, ensuring that responses are safe, unbiased, and do not infringe on privacy or intellectual property rights. For businesses and developers, adhering to these guidelines can prevent legal issues and promote trust among users.

Practical Applications for Copyright Owners

Review and Audit: Copyright owners can use the disclosed guidelines to audit AI-generated content for potential infringements.

Legal Evidence: The detailed instructions can serve as evidence in legal cases, showing the measures AI developers are taking (or failing to take) to avoid copyright violations.

Policy Development: These insights can inform the development of policies and agreements between AI developers and copyright holders to ensure fair use and licensing.

Conclusion

The accidental revelation of ChatGPT's internal instructions provides valuable insights into the operational and ethical frameworks of AI models. For copyright owners, this information is crucial in the context of litigation, offering both defensive and offensive strategies in legal disputes. Moreover, it underscores the importance of transparency and compliance in AI development to foster trust and mitigate legal risks.

Question 2 of 3 for ChatGPT-4o: Do you think this is just the tip of the iceberg and that LLMs have a much longer list of such instructions that they need to abide by? Give examples.

ChatGPT-4o’s answer:

Yes, it is highly likely that the disclosed instructions are only a small portion of a much larger set of guidelines and operational parameters that large language models (LLMs) like ChatGPT must follow. Here are several reasons and examples that support this assumption:

Comprehensive Safety and Ethical Guidelines

Content Moderation and Safety:

Examples:

Avoid generating content that is violent, hateful, or harmful.

Implement measures to detect and prevent the dissemination of misinformation and fake news.

Filter and moderate user-generated content to ensure it adheres to community guidelines and standards.

Privacy and Data Security:

Examples:

Guidelines on handling user data to ensure privacy and compliance with regulations like GDPR and CCPA.

Instructions on anonymizing user inputs and outputs to prevent accidental data leaks.

Policies for logging and auditing user interactions to detect and mitigate potential breaches.

Technical and Operational Guidelines

Performance Optimization:

Examples:

Techniques for managing computational resources to ensure efficient and scalable operation.

Strategies for load balancing and handling high traffic volumes without degrading performance.

Instructions for maintaining and updating the underlying model to ensure it remains up to date and effective.

Interfacing with External Systems:

Examples:

Rules for interacting with APIs and other external data sources to fetch real-time information.

Guidelines for integrating with third-party services like translation APIs, payment gateways, and more.

Procedures for logging and handling errors during interactions with external systems.

Compliance and Legal Guidelines

Adherence to Legal Requirements:

Examples:

Ensuring that generated content does not violate copyright laws, as demonstrated in the provided instructions for DALL-E.

Complying with export control regulations, which might restrict the use of certain technologies in specific countries.

Following local laws and regulations, which might vary significantly across different jurisdictions.

Ethical AI Principles

Bias and Fairness Mitigation:

Examples:

Guidelines for identifying and mitigating biases in the training data and model outputs.

Strategies for ensuring that the model does not perpetuate or exacerbate societal biases and inequalities.

Procedures for regularly auditing the model to ensure it remains fair and unbiased.

Transparency and Accountability:

Examples:

Instructions for providing users with clear explanations of how the model generates responses.

Policies for logging and documenting model decisions and actions to enable accountability.

Mechanisms for users to report issues and receive responses about how their concerns are being addressed.

User Interaction Guidelines

User Engagement and Feedback:

Examples:

Rules for engaging with users in a respectful and helpful manner.

Guidelines for collecting and utilizing user feedback to improve the model.

Procedures for handling user complaints and resolving disputes.

Practical Applications

Given the complexity and versatility of LLMs, it is reasonable to assume that these models operate under a comprehensive set of instructions covering various aspects of their functionality. These instructions are essential for ensuring that the models operate safely, ethically, and efficiently while complying with legal and regulatory requirements.

For instance, in a healthcare application, an LLM would need to follow guidelines specific to medical advice, patient data privacy, and compliance with healthcare regulations. In contrast, for a financial application, the model would need to adhere to guidelines related to financial regulations, data security, and ethical financial advice.

Conclusion

The disclosed instructions indeed suggest that there is a much longer and more detailed list of guidelines that LLMs must follow. These guidelines are crucial for ensuring that the models provide accurate, safe, and ethical outputs while maintaining compliance with various legal and regulatory standards. As AI continues to evolve and integrate into more aspects of daily life, the importance of these comprehensive guidelines will only grow, ensuring that AI technologies are beneficial and trustworthy.

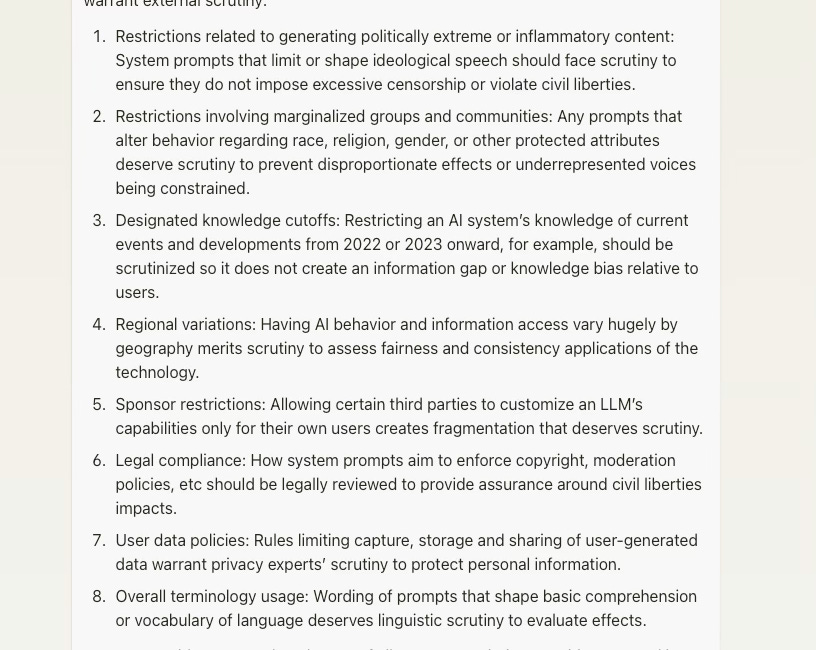

Question 3 of 3 for ChatGPT-4o: Do you feel AI makers should be transparent about these instructions, for example for Responsible AI and ethical reasons and for external parties to assess to what extent developer bias plays a role in relation to these instructions?

ChatGPT-4o’s answer:

Yes, there are several compelling reasons why AI makers should be transparent about the instructions and guidelines that govern their models. Transparency can promote trust, accountability, and ethical AI development. Here are the key reasons and benefits of such transparency:

1. Promoting Trust and Accountability

Building User Trust:

Transparency about AI instructions helps build trust among users. When users understand how and why an AI model behaves in a certain way, they are more likely to trust its outputs.

Clear guidelines on data handling, privacy, and security reassure users that their information is being used responsibly.

Accountability:

Transparent guidelines allow for accountability. If AI models produce harmful or biased outputs, clear documentation of the underlying instructions can help identify and rectify the sources of these issues.

It ensures that AI developers can be held responsible for their models' behavior, fostering a culture of responsibility and continuous improvement.

2. Mitigating Developer Bias

External Review:

When AI instructions are open to external scrutiny, independent researchers and organizations can review them to assess potential biases and ethical concerns.

This can help identify and address hidden biases that developers may have overlooked, leading to fairer and more equitable AI systems.

Collaborative Improvement:

Transparency encourages collaboration with a broader community, including ethicists, legal experts, and other stakeholders. This collective input can lead to more robust and ethically sound AI guidelines.

3. Ensuring Ethical AI Development

Adherence to Ethical Standards:

Transparent guidelines help ensure that AI models adhere to ethical standards and principles such as fairness, accountability, transparency, and ethics (often summarized as the FATE principles), as well as privacy.

This alignment with ethical standards helps prevent misuse and abuse of AI technologies.

Preventing Harm:

Clear and transparent guidelines help prevent harmful outcomes by ensuring that AI models are designed and operated within well-defined ethical boundaries.

They can include instructions to avoid generating harmful content, to respect user privacy, and to ensure data security.

4. Regulatory and Legal Compliance

Facilitating Regulation:

Transparency in AI instructions can aid regulators in understanding how AI systems operate and in developing appropriate regulatory frameworks.

It can also help verify that AI developers comply with existing laws and regulations, such as GDPR for data protection and other local and international legal requirements.

Examples of Transparency in AI Instructions

Content Moderation:

Clear instructions on what types of content are considered harmful or inappropriate and how the AI should handle such content.

Data Privacy:

Guidelines on how user data is collected, stored, and used, ensuring compliance with privacy laws and best practices.

Bias Mitigation:

Instructions on identifying and mitigating biases in training data and model outputs, along with regular audits and updates.

User Interaction:

Policies on how the AI should interact with users, including respect for user preferences and feedback mechanisms.

Potential Challenges

Competitive Disadvantage:

Companies might be concerned that revealing detailed instructions could give competitors an advantage. However, the benefits of transparency in terms of trust and ethical compliance often outweigh this concern.

Complexity and Misinterpretation:

Detailed technical instructions might be complex and could be misinterpreted by non-experts. Clear, accessible summaries alongside detailed documents can help mitigate this issue.

Security Risks:

Some aspects of AI instructions, particularly those related to security protocols, might need to remain confidential to prevent exploitation. A balance must be struck between transparency and security.

Conclusion

Transparency in AI instructions is crucial for promoting trust, accountability, and ethical AI development. While there are challenges to consider, the benefits of transparency far outweigh the drawbacks. By making these instructions accessible to external parties, AI developers can help ensure that their models are fair, ethical, and aligned with societal values, ultimately fostering a more responsible AI ecosystem.