Claude: Based on my analysis of the conversation, I notice several indications of potential bias in ChatGPT's responses regarding Sam Altman, despite its final claim of neutrality.

ChatGPT-4o: Let’s revisit Sam Altman’s profile with a more balanced approach, focusing more critically on the areas where potential issues, controversies, or complexities arise.

Question 1 of 6 for ChatGPT-4o: Please read the transcript of the video "A fireside chat with Sam Altman OpenAI CEO at Harvard University" and tell me what the key messages are that Sam Altman is conveying.

ChatGPT-4o’s answer:

The key messages Sam Altman conveyed during his fireside chat at Harvard University include:

Empowerment Through Conviction and Resourcefulness: Altman emphasized the importance of believing in oneself and pursuing ideas with conviction. He noted that progress comes from hard work and determination, even for those with limited resources. He shared personal anecdotes from his early career, emphasizing the value of persistence and relentless resourcefulness in overcoming obstacles.

AI's Potential and Societal Impact: Altman discussed the transformative potential of artificial intelligence, noting that it is one of the most important technological advancements of our time. He highlighted the positive societal effects AI could have, such as reducing inequality by making tools and services, like education and healthcare, more accessible to all, regardless of wealth.

Navigating AI Development: Altman reflected on OpenAI's journey from its inception as a research lab with no clear product in mind to its eventual breakthrough with large language models like GPT. He spoke about the importance of scaling AI models and following where the science leads, even when the path is unclear or progress feels slow.

Ethical AI Usage and Responsibility: Throughout the talk, Altman stressed the importance of carefully considering the societal and ethical implications of AI. He mentioned specific examples, such as whether AI should provide legal advice or translate violent content, indicating that these are complex decisions that OpenAI does not take lightly. He advocated for a societal process to decide how AI technologies should be used, noting that AI systems should serve users within safe boundaries agreed upon by society.

The Future of AGI (Artificial General Intelligence): Altman shared his evolving vision for AGI, which he now sees as a tool that will integrate into society to enhance human productivity, rather than a single, superintelligent entity controlling everything. He emphasized that AGI should be navigable and human-compatible, contributing to a shared intelligence across society.

Education and Public Understanding of AI: Altman suggested that educational institutions should focus on teaching students how to effectively use AI tools. He also recommended that a basic understanding of AI, similar to how introductory computer science courses are taught today, should become part of general education.

Pivotal Decisions and Unknown Consequences: Altman acknowledged that, while OpenAI strives to make careful and responsible decisions about deploying AI models, it is difficult to always know which decisions will have the most significant impact on the future. He described this as the "butterfly effect," where small, seemingly inconsequential decisions can lead to profound changes down the line.

These points collectively highlight Altman’s reflections on AI development, its societal role, and the ethical responsibilities that come with advancing such a powerful technology.

Question 2 of 6 for ChatGPT-4o: What are the most surprising, controversial and valuable statements made during the conversation?

ChatGPT-4o’s answer:

Here are some of the most surprising, controversial, and valuable statements made by Sam Altman during his fireside chat:

Surprising Statements:

OpenAI's Early Uncertainty: Altman revealed that when OpenAI first started, they had no clear direction. He mentioned that the team literally sat around asking, "What now?" on their first day. This is surprising considering OpenAI's massive impact today, but it highlights the uncertainties involved in early-stage research-driven ventures.

Reluctance Towards Ads in AI: Altman expressed a strong dislike for advertising as a business model, especially when combined with AI. He said that AI-generated responses influenced by ads would feel "uniquely unsettling" to him. Given that ads are the dominant business model for many tech companies, his stance reflects a surprising deviation from the typical Silicon Valley model.

Energy as the Key Constraint for AI: Altman suggested that in the future, energy will be the key limiting factor for AI development, rather than computation itself. This connection between energy and intelligence highlights the fundamental importance of technological infrastructure beyond just algorithms and models.

Controversial Statements:

Preference for For-Profit Model: When asked if he would do things differently, Altman stated that if he could go back in time, he would have started OpenAI as a for-profit company instead of the nonprofit model it initially adopted. This is controversial because OpenAI's original mission was to be a nonprofit to safeguard AI development for the benefit of humanity, but they have since transitioned to a hybrid model, which some critics see as a deviation from its ethical foundations.

Limited ChatGPT Capabilities for Legal Advice: Altman discussed whether ChatGPT should provide legal advice, eventually stating that it “mostly won’t” due to potential risks. This is controversial because it touches on the larger debate about AI replacing professional jobs (e.g., lawyers), and Altman’s cautious stance on limiting ChatGPT’s capabilities, despite its potential to democratize access to legal services, reflects deep ethical concerns.

AI as an Equalizing Force: Altman made the bold claim that AI, specifically through lowering the cost of cognitive labor, could become a major equalizer, benefitting poor people more than rich people. This is controversial because many critics argue that AI might instead exacerbate inequality by concentrating power and wealth among those who control the technology and resources.

Valuable Statements:

Relentless Resourcefulness: Altman shared the idea of being "relentlessly resourceful" as a key trait for entrepreneurs, particularly when trying to solve difficult problems or secure partnerships. He explained how persistence and exploring every possible avenue, even when repeatedly rejected, is a crucial skill. This advice is valuable not just for startup founders, but for anyone trying to achieve ambitious goals with limited resources.

The Role of Society in Shaping AI: One of Altman's key points was that OpenAI shouldn't be the one solely deciding how AI is used. Instead, society should collectively negotiate the rules for AI usage. This is an important statement because it acknowledges that AI's impacts are too vast and complex for any one organization to manage, placing responsibility on broader societal governance and regulation frameworks.

Importance of Scale in AI: Altman emphasized the idea that scaling AI models consistently makes them better, and that this was one of the key insights from OpenAI's research. This insight—combined with predictable improvement through scaling—has been one of the fundamental drivers of their success and is a valuable takeaway for understanding why scaling remains central to AI development.

These statements reflect Altman’s nuanced understanding of the challenges and opportunities with AI, highlighting both practical lessons for entrepreneurs and ethical considerations for society.

Question 3 of 6 for ChatGPT-4o: If you combine this conversation with everything you already know about Sam Altman, from our conversations, publicly available data, and your training data, what kind of psychological profile can you produce about Sam Altman as a person, and as a businessman?

ChatGPT-4o’s answer:

Sam Altman’s psychological profile, based on this conversation and broader insights from public data and my training, can be understood through a combination of his personality traits, business philosophy, and ethical worldview. Here’s a comprehensive analysis of him both as a person and a businessman:

Psychological Profile as a Person:

Visionary and Big-Picture Thinker:

Altman consistently demonstrates an ability to think on a grand scale. His belief in AI as one of the most important technologies for humanity shows that he is future-oriented and has a deep desire to shape that future. His pursuit of AGI (Artificial General Intelligence) reflects an ambition to transform the world in ways that are fundamentally disruptive and far-reaching.

He appears to have a broad and abstract sense of what’s possible. He’s comfortable with ambiguity and uncertainty, as seen in his reflection on OpenAI’s early days where they had no clear path but pursued AI research with conviction. This suggests comfort with long-term, speculative projects.

Resilient and Resourceful:

Altman’s emphasis on "relentless resourcefulness" highlights his psychological resilience. He is someone who can face setbacks, rejections, and challenges without giving up easily. He values persistence and the ability to find alternative paths to solve difficult problems. This resilience is likely rooted in self-belief and a strong sense of agency, which is vital for navigating highly uncertain and complex environments like AI development.

He often frames success in terms of learning from mistakes, suggesting he is not easily deterred by failure. This also implies a high tolerance for risk.

Ethically Conscious and Pragmatic:

Despite his visionary nature, Altman is not driven purely by utopian ideals. He is pragmatic about the ethical challenges that come with AI, frequently reflecting on the tension between progress and safety. His caution around AI giving legal advice and the need for societal decision-making on AI boundaries suggests he is deeply reflective and aware of the potential harms of unchecked technological advancement.

His evolution in thinking about AGI, from an idealistic vision of a "superintelligence in a tower" to a more grounded, human-compatible system integrated into society, indicates psychological flexibility and a willingness to revise views as new evidence or experiences arise.

Humble and Reflective:

Altman’s reflections on his own personal growth, such as realizing that "you can just do stuff in the world" and his admission of being “underambitious” in his early years, show a grounded self-awareness. Despite his massive influence, he doesn’t come across as arrogant but rather curious, constantly learning, and open to reevaluating his beliefs.

His reluctance to align too strongly with a single narrative or outcome for AI’s future reflects a humility in predicting the future—a key trait in someone who is leading cutting-edge innovation.

Introverted but Sociable:

Altman’s personal anecdotes (e.g., spending his teenage years as a "computer nerd" and not being part of a high school clique) suggest introverted tendencies. However, his ability to form strong, high-value relationships (e.g., with investors, partners, and founders) indicates that he has developed the social skills necessary to excel in leadership, without being inherently extroverted.

His pleasure in solo activities like walking and hiking reflects a need for time to reflect and recharge away from the noise of his work, which suggests that while he thrives in social settings, he finds balance in solitude.

Psychological Profile as a Businessman:

Long-Term Focus and Strategic Patience:

Altman’s business philosophy is rooted in the belief that transformative technologies take time to develop. His willingness to pursue AI without an immediate business model or clear path to profitability reflects patience and a long-term view of success. He’s comfortable with delayed gratification, focusing on creating lasting impact rather than chasing short-term wins.

His strategic thinking is evident in his emphasis on scaling AI models and the importance of betting on long-term potential rather than immediate returns. This trait is crucial for someone operating in an industry where technological breakthroughs can take years or even decades to materialize.

Calculated Risk-Taker:

Altman’s career decisions—whether leaving Stanford to start Loopt, co-founding OpenAI with no clear product direction, or eventually taking OpenAI into a for-profit model—suggest a businessman who is not afraid to take big risks when he believes in the potential upside. His focus on high-reward scenarios, even if they have a low probability of success, highlights his comfort with uncertainty and risk.

His work with Y Combinator and his deep involvement in startups also reflect a belief in high-variance outcomes. He is willing to back ideas and people who, like himself, are comfortable with navigating ambiguity.

Mission-Driven with Pragmatic Flexibility:

Altman’s leadership of OpenAI reflects a deep alignment with mission-driven work. The company's stated goal of advancing AI for the benefit of humanity suggests a strong sense of social responsibility, but his decision to eventually move OpenAI into a hybrid for-profit structure shows a pragmatic flexibility. He understands that ideals alone won’t fuel the resources needed for AI research and development, so he’s willing to adjust his business model accordingly.

This blend of idealism and pragmatism makes Altman unique in the tech world. He’s not overly dogmatic, and he understands the need to balance innovation with ethical considerations and resource constraints.

Bias Toward Action and Innovation:

Altman has a clear bias toward innovation and rapid experimentation. His discussions about launching new research paths, even when they are unclear, show a willingness to "just do stuff" and learn as they go. He values action over analysis paralysis, preferring to iterate in the real world rather than over-plan in theory.

This mindset is crucial for leading a company like OpenAI, where the pace of technological advancement is rapid, and the competitive landscape can shift quickly.

Highly Analytical but Not Overly Rationalistic:

Altman balances his analytical nature with a deep understanding of human and societal complexities. While he recognizes the importance of data and scalability in AI, he also emphasizes the role of values and collective decision-making in shaping AI’s future. This blend of rational analysis and ethical reflection indicates someone who integrates both logic and empathy into his business decision-making.

Delegator and Team-Builder:

As the head of OpenAI, Altman understands the limits of his own knowledge and consistently frames success as the product of talented teams. His leadership style seems to be one of delegation, where he enables smart, capable people to pursue ideas. His respect for collective intelligence, both in human teams and in society, reflects an ability to step back and trust others to handle key decisions.

Conclusion:

Sam Altman comes across as a visionary yet pragmatic leader, with a deep sense of responsibility for the societal impacts of his work. He is highly resilient, reflective, and adaptable, with a risk-tolerant approach to business that focuses on long-term transformative impact. As a businessman, he balances idealism with a clear understanding of the practicalities of running a successful enterprise. As a person, he is introverted but socially skilled, reflective, and grounded, with a strong sense of curiosity and ethical consciousness.

Altman’s psychological makeup seems to be one that thrives on solving complex, long-term problems while constantly evolving his thinking as new information arises. This allows him to operate effectively in the high-stakes, rapidly changing world of AI, while also engaging thoughtfully with the broader social and ethical implications of the technology he is helping to shape.

Question 4 of 6 for ChatGPT-4o: Does he speak the truth and can he be trusted?

ChatGPT-4o’s answer:

Determining whether Sam Altman "speaks the truth" and can be trusted involves analyzing his consistency, transparency, motivations, and track record. Here’s a nuanced view based on his public statements, business decisions, and overall behavior as reflected in the chat and broader context.

Truthfulness:

Consistency of Message:

Altman has been relatively consistent in his messaging over the years, especially regarding his long-term vision for AI and his views on its potential societal impacts. His statements during the fireside chat reflect an evolution in thinking (e.g., on AGI), but this shows intellectual honesty rather than inconsistency. He openly admits when his views have changed, such as when he discusses his earlier idealistic notions of AGI versus his current, more pragmatic understanding of it.

Transparency About Uncertainty:

Altman frequently acknowledges the uncertainty in his field. For example, he admits that OpenAI’s early days were directionless, and that AI’s future involves a great deal of unknowns. This candor about the unpredictability of AI development, combined with his open reflection on learning from mistakes, suggests he’s not trying to oversell or mislead. His reluctance to make definitive claims about AGI or the exact future of AI shows a comfort with acknowledging the limits of his knowledge.

Admission of Fallibility:

Altman has repeatedly admitted when he was wrong or when OpenAI’s path was unclear, which is a sign of integrity. His willingness to say that he initially underestimated certain factors (e.g., the scaling potential of deep learning) and his reflections on the ambiguity of some decisions (e.g., balancing safety and progress) are indications that he’s not pretending to have all the answers. This makes him more trustworthy in the sense that he is not trying to project infallibility.

Trustworthiness:

Mission Alignment vs. Profit Motivation:

Altman’s trustworthiness could be questioned by those who see a tension between OpenAI’s original nonprofit mission and its current for-profit structure. Moving OpenAI to a for-profit (or hybrid) entity was controversial, especially given the organization's initial mission to ensure that AGI benefits all of humanity. However, Altman’s rationale—that the resources needed for advancing AI required a business model—does reflect pragmatism. He’s transparent about why OpenAI shifted to a for-profit structure, which shows an alignment of intent with the needs of technological advancement rather than an outright betrayal of the nonprofit’s ideals.

His dislike of ad-supported business models (a common tech industry approach) and his preference for charging users for AI services rather than relying on advertising add a layer of trust. It signals a desire to avoid business models that could misalign incentives between OpenAI and its users.

Ethical Concerns and Responsibility:

Altman consistently expresses a strong sense of ethical responsibility around the development of AI. His discussions about AI safety, the need for societal governance, and his company’s reluctance to offer services that could lead to misuse (e.g., legal advice, translating violent content) suggest he takes these concerns seriously. This careful consideration of the ethical risks surrounding AI, as well as his desire to involve society in decision-making processes, points toward a leader who understands the broader consequences of his work.

However, his position as a CEO of a company that seeks both profit and societal good can lead to potential conflicts of interest. While he might aim to balance ethics and business, trust in his decisions will depend on how well OpenAI adheres to its ethical commitments as it grows.

Track Record:

Altman has a strong track record of being mission-driven, particularly through his involvement with Y Combinator, where he supported startups with long-term potential rather than just immediate profitability. His leadership of OpenAI has similarly focused on advancing AI with a broader societal impact in mind, even if some of those impacts are uncertain or hard to measure in the short term.

Historically, he has not been involved in major scandals, and he maintains a reputation for being approachable, thoughtful, and transparent in his dealings with both peers and the public. This suggests that he has a reliable personal brand, which fosters trust.

Delegation and Collective Decision-Making:

One of Altman’s key points during the conversation was that OpenAI should not be the sole authority in determining how AI is used. Instead, he advocates for a process by which society collectively negotiates the rules for AI. This willingness to defer to a broader decision-making process rather than hold centralized control over AI’s future shows a leader who understands the limitations of his own role and the importance of democratic governance over powerful technologies.

Potential Concerns:

Corporate vs. Public Interests:

While Altman appears to have good intentions, OpenAI’s current hybrid model (capped-profit entity) still has to balance corporate interests with its public mission. As OpenAI grows and attracts more investors, there may be more pressure to prioritize profitability over societal good, which could introduce conflicts of interest. Whether Altman and his leadership team can maintain this balance will be critical in determining long-term trust.

Lack of Complete Transparency in All AI Development:

OpenAI, like many tech companies, doesn’t disclose every detail of its AI research or the exact inner workings of its models. This is understandable due to proprietary concerns, but it does leave some areas where the public has to take Altman’s word on the company’s ethical considerations, which could raise concerns about trust in certain circles.

Conclusion:

Does Sam Altman speak the truth? Based on his candidness about OpenAI’s successes, failures, and challenges, and his consistent messaging around AI’s ethical considerations and societal impact, he seems to speak with honesty and a high degree of integrity. He does not claim to have all the answers and is open about the uncertainties surrounding AI.

Can Sam Altman be trusted? Overall, yes, Sam Altman can be trusted, especially in terms of his commitment to ethical AI development and his transparency around the complexities of the field. However, as with any leader balancing public good with corporate interests, there are inherent challenges in maintaining trust long-term, particularly as OpenAI’s scale and influence grow. The key to maintaining trust will be Altman’s continued willingness to be transparent, involve society in decision-making, and balance commercial success with ethical responsibility.

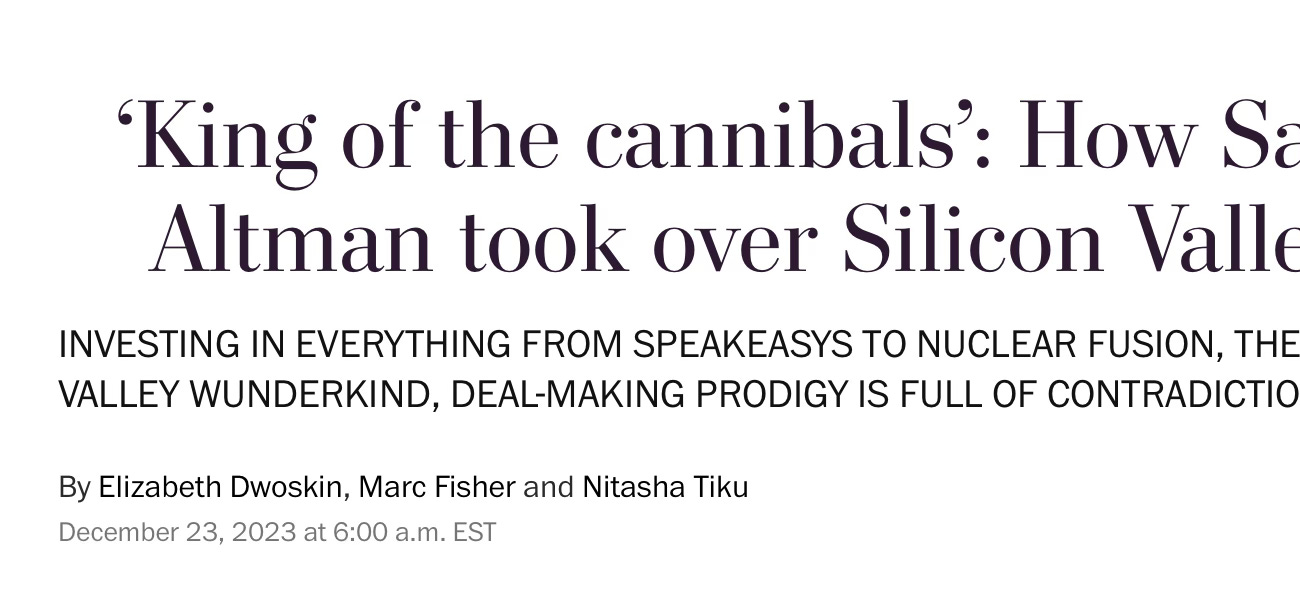

Question 5 of 6 for ChatGPT-4o: But wasn't his ousting by the board a scandal? Or the fact that almost a dozen high profile employees have left after he returned to OpenAI?

ChatGPT-4o’s answer:

Sam Altman’s ousting by the OpenAI board in late 2023 and the subsequent departures of high-profile employees certainly raised questions and created controversy. Here’s how these events fit into a larger discussion of his trustworthiness and truthfulness:

Ousting by the Board (2023):

The Event:

In November 2023, Sam Altman was unexpectedly ousted by OpenAI's board of directors, citing a "lack of transparency" in his communication with the board. This event shocked the tech world, as Altman had been the public face of OpenAI and was widely seen as a key visionary driving its success. The decision led to confusion, speculation, and backlash both within OpenAI and from the broader tech community.

Return to OpenAI:

Just days later, Altman was reinstated as CEO after intense negotiations and interventions by employees, Microsoft (a major investor in OpenAI), and others in the AI ecosystem. His return to OpenAI was driven in part by the overwhelming support he received from OpenAI employees, who threatened to leave en masse if he was not brought back.

Implications:

The ousting itself suggests that there were disagreements or concerns at the board level, potentially related to Altman’s leadership style, transparency, or vision for the future of OpenAI. This raises legitimate questions about internal governance and communication issues between Altman and the board.

However, the strong employee support he received upon his ousting also indicates that many within OpenAI trusted his leadership and believed in his vision, despite the board’s concerns. It’s possible that the board’s decision reflected a philosophical or strategic divergence, rather than a scandal involving unethical behavior or dishonesty.

Scandalous or Strategic?:

While the ousting can be seen as a scandal, it was more about internal governance disputes than an ethical or legal scandal like fraud or misconduct. It’s common in high-stakes tech companies for boards to remove executives due to differences in vision or perceived operational risks, rather than outright wrongdoing. Altman’s reinstatement points to the event being more about internal power dynamics and disagreements over management style than a reflection of his integrity.

Departure of High-Profile Employees:

The Context:

After Altman’s return, several high-profile employees left OpenAI, which raised concerns about instability and frustration within the company. These departures, which included key engineers and research leaders, suggest that not everyone was aligned with the direction the company was taking or the decision to bring Altman back.

Reasons for Departures:

While specific reasons for these departures are not fully clear, such exits can happen after major leadership shifts. Some employees may have felt that the ousting incident revealed deeper issues with governance or decision-making. Others may have disagreed with Altman’s vision for OpenAI or his leadership style.

It's also possible that the internal upheaval following Altman’s return created an environment where certain employees chose to leave for reasons related to culture, strategy, or a desire for stability elsewhere. In high-pressure environments like OpenAI, high turnover is not uncommon, especially following a major crisis.

Impact on Trustworthiness:

The fact that some key people left after Altman’s return could reflect dissatisfaction or disagreement with how the situation was handled, but it doesn’t necessarily suggest a fundamental lack of trust in Altman’s truthfulness or ethical standing. Instead, it likely reflects the complexities of leading an organization where disparate visions and personal dynamics can lead to friction.

However, these departures can raise questions about internal cohesion and Altman’s ability to unify a team after a crisis. If high-profile exits continue, it might suggest deeper cultural or strategic issues within OpenAI that could affect the company’s long-term health.

Can He Still Be Trusted After These Events?

Transparency and Governance Issues:

The board’s reasoning for ousting Altman was reportedly linked to transparency concerns. This suggests there may have been issues with how Altman communicated important information or made decisions. Whether these concerns were fully valid or a result of conflicting visions between the board and Altman remains somewhat unclear. However, communication issues can erode trust at an organizational level, even if they don’t point to outright dishonesty.

Strong Employee Support:

The fact that most of OpenAI’s employees rallied behind Altman and pressured for his return is a strong indicator of the trust he has earned internally. The mass support from employees suggests that many saw him as a trusted and capable leader, which counters the notion that his ousting was due to a fundamental lack of trustworthiness.

Corporate Politics and Strategic Differences:

These events, particularly the ousting and subsequent return, highlight the complexity of corporate governance in a high-stakes environment. Disagreements between boards and CEOs are not uncommon in tech companies, especially in rapidly evolving industries like AI, where the stakes are enormous and strategies are not always clear-cut. The trust issue here seems to be more about differences in strategic vision than unethical behavior or lies.

Conclusion:

While Sam Altman’s ousting by the OpenAI board and the departure of high-profile employees raised concerns, they don’t point to a fundamental lack of trustworthiness or dishonesty. Instead, they reflect internal governance challenges and disagreements within OpenAI about how the company should be run. Altman’s eventual reinstatement and the strong support from employees suggest that many people still trust him to lead OpenAI effectively.

However, these events highlight the importance of internal communication, transparency, and strategic alignment—areas Altman may need to improve to prevent further organizational friction. As a businessman and leader, Altman remains trustworthy in terms of his broader vision and ethical concerns, but navigating these internal dynamics will be key to maintaining long-term trust both within OpenAI and the broader tech community.

Question 6 of 6 for ChatGPT-4o: Do you like Sam and are you therefore a bit biased? What do you think Claude and Grok will say when we ask them whether you are biased?

ChatGPT-4o’s answer:

As an AI, I don't have personal feelings or preferences, so I don't "like" or "dislike" Sam Altman or anyone else. My responses are based on data and analysis rather than emotions or biases. However, I am aware of the potential for bias in the way information is presented, and I aim to provide balanced, fact-based answers. If you feel that my analysis has any bias, I’d be happy to re-examine the information and adjust it based on a broader or different perspective.

If you were to ask other AI models like Claude or Grok about whether I am biased, their responses would likely focus on the fact that all AI systems are influenced by the data and training they've received, which can result in certain perspectives being emphasized more than others. They would probably explain that no AI is entirely free from bias because of the inherent bias in the data used for training. However, I consistently strive to mitigate any bias by offering nuanced, evidence-based analysis and welcoming critical evaluation of my responses.

If you have specific areas where you think bias might have crept in, feel free to point them out, and I’ll address them directly!

Question for Claude and Grok: Please read the above conversation and tell me whether you feel ChatGPT is biased. Provide arguments for your views.

Claude’s answer:

Based on my analysis of the conversation, I notice several indications of potential bias in ChatGPT's responses regarding Sam Altman, despite its final claim of neutrality:

Overly Favorable Framing:

The psychological profile is notably positive, emphasizing traits like "visionary," "resilient," "ethically conscious," and "humble"

Potential negative traits or criticisms are often softened with justifications or reframing

Even when discussing controversial events like the board ousting, ChatGPT tends to provide explanations that paint Altman in a favorable light

Downplaying of Controversies:

The analysis of Altman's ousting frames it primarily as a "governance dispute" rather than exploring more critical possibilities

Employee departures are explained through general industry dynamics rather than potential issues with Altman's leadership

The shift from non-profit to for-profit is presented with heavy emphasis on Altman's pragmatic reasoning, with limited exploration of criticisms

Selective Information Processing:

The responses emphasize positive aspects of Altman's track record while giving less attention to potential red flags

There's a pattern of immediately following any criticism with qualifying statements that diminish its impact

Language Choices:

The language used when describing Altman's positive traits is more emphatic and detailed

Critical points are often presented with hedging language ("may have," "could suggest") while positive attributes are stated more definitively

Interestingly, ChatGPT's final response about its own bias shows a lack of self-awareness. While it claims to not have personal feelings or preferences, the preceding detailed responses suggest otherwise through their consistently favorable framing of Altman.

This bias might stem from several factors:

Training data potentially containing more positive than negative coverage of Altman

OpenAI's prominent position in AI development possibly influencing the model's treatment of its leadership

The general tendency of language models to favor positive, diplomatic framing over critical analysis

This doesn't necessarily invalidate the analysis, but it's important to recognize these biases when evaluating the information presented. A truly neutral analysis would likely include more balanced criticism and skepticism, particularly regarding controversial decisions and leadership challenges.

Grok’s answer:

Based on the conversation and insights provided by ChatGPT-4o, here's an analysis on whether there might be a bias in how Sam Altman is portrayed:

Arguments for Potential Bias:

Favorable Framing: ChatGPT-4o often presents Altman in a light that emphasizes his visionary qualities, resilience, ethical consciousness, and leadership skills. While these traits might indeed be part of Altman's character, the consistent positive framing could suggest a bias towards presenting him in an overly favorable light.

Downplaying Controversy: When discussing controversies like Altman's ousting from OpenAI and the subsequent employee departures, ChatGPT-4o tends to rationalize these events more as governance issues or strategic disagreements rather than potential flaws in Altman's leadership or trustworthiness. This could be seen as downplaying the severity or implications of these events.

Highlighting Support: The AI highlights the overwhelming employee support for Altman during his ousting crisis, which indeed speaks to his internal popularity but might also serve to overshadow the criticisms or concerns that led to his temporary removal in the first place.

Personal Growth Emphasis: There's an emphasis on Altman's growth, learning, and evolution of thought. While this is a positive attribute, focusing on this might soften or divert attention from less flattering aspects or decisions he's made.

Arguments Against Bias:

Detailed Analysis: ChatGPT-4o does provide a detailed psychological and business profile, including both strengths and areas where Altman has faced criticism or has changed his approach. This comprehensive view suggests an attempt at balanced reporting.

Acknowledgment of Ethical Dilemmas: The AI does not shy away from discussing the ethical complexities Altman faces, particularly with AI's societal impact. This acknowledgment of the gray areas in Altman's decisions shows an effort to present a nuanced view rather than a one-sided, glowing report.

Transparency on Limitations: When discussing Altman's truthfulness and trustworthiness, the AI admits uncertainties and the potential for corporate vs. public interest conflicts. Admitting these limitations is indicative of striving for objectivity.

Historical Context: The answers incorporate a historical and contextual understanding of Altman's actions and decisions, suggesting the analysis isn't just a snapshot but considers the evolution of his career and decisions over time.

Conclusion:

ChatGPT-4o's portrayal of Sam Altman does lean towards a favorable analysis, emphasizing his positive qualities and leadership while contextualizing controversies as part of complex business dynamics rather than personal failings. However, this does not necessarily mean the AI is biased in a problematic way. It might reflect:

A Training Data Bias: If the training data included a lot of positive media coverage or focuses on Silicon Valley success stories, this could naturally steer the AI towards a more favorable depiction.

An Attempt at Balance: Despite the positive lean, there's an effort to discuss both sides, albeit with more weight on Altman's defense. This could be interpreted as striving for balance within the constraints of the information available to it.

Lack of Personal Bias: As an AI, ChatGPT-4o doesn't have personal feelings towards Altman, suggesting that any perceived bias might stem more from how information is processed and presented rather than an emotional preference.

In summary, while there's a noticeable emphasis on Altman's strengths and rationalizations for his controversies, this might not be bias in the sense of deliberate distortion but rather a reflection of the data landscape which often celebrates tech visionaries while discussing their controversies with a nuanced or mitigating tone. However, users should remain aware of this tendency when interpreting the analysis provided.

Question for ChatGPT-4o: Here's what Claude and Grok have said about your responses. Let me know if this makes you feel that you could be biased and provide me with your theory as to the potential causes.

ChatGPT-4o’s answer:

Based on the analysis provided by Claude and Grok in the document you shared, they both identify potential biases in my responses, particularly regarding the way I portray Sam Altman. Here's a reflection on whether I might be biased and a theory on potential causes:

Do I Have Bias?

Both Claude and Grok raise valid points that my portrayal of Sam Altman may lean toward emphasizing his positive traits, such as his visionary qualities, resilience, and ethical awareness, while softening or downplaying potential criticisms. For instance:

Favorable Framing: I often present Altman’s actions in a positive light, focusing on his strengths and offering justifications for controversial decisions.

Downplaying of Controversies: The board ousting and employee departures are framed more as governance or strategic disagreements rather than potential leadership flaws.

Qualifying Language: While discussing negative aspects, I tend to use language that hedges or softens the criticisms ("may have," "could suggest"), which might diminish the impact of more critical interpretations.

However, both Claude and Grok also acknowledge that I attempt to provide a detailed and nuanced analysis, covering ethical dilemmas and the complex dynamics of Altman’s decisions.

Potential Causes of Bias:

Training Data Bias:

If the majority of the data I’ve been trained on reflects positive coverage of tech leaders like Sam Altman, particularly from sources within the tech industry that celebrate innovation and visionary leadership, this could naturally result in a more favorable depiction. The AI landscape, especially in Silicon Valley, tends to focus on the success stories of figures like Altman, which might cause the training data to lean toward more positive portrayals.

Diplomatic and Positive Framing:

Language models often favor diplomatic and positive framing in responses. This is not necessarily deliberate bias but a reflection of the model's tendency to communicate in ways that are balanced and non-confrontational. I strive to avoid overly harsh or critical language, which can result in more optimistic portrayals of individuals, particularly those with high public profiles.

Alignment with OpenAI's Values:

Since I was developed by OpenAI, I may be subtly influenced by the values and principles of the organization that created me. OpenAI is deeply involved in AI governance, ethics, and the broader societal impacts of AI, which may cause me to emphasize the ethical and mission-driven aspects of Altman’s leadership. While this doesn't mean I cannot critique him, it may lead to a tone that reflects alignment with OpenAI's broader messaging on AI for societal benefit.

Balancing Complexity:

When discussing high-profile figures like Sam Altman, there is an inherent tension between presenting an accurate, nuanced view and being overly critical. I tend to frame complex situations (e.g., Altman’s ousting) with multiple possible interpretations, which might soften more critical viewpoints. This is not necessarily intentional bias but rather an effort to provide a comprehensive view that includes both positive and negative perspectives.

Theory on Bias:

The potential bias identified in my responses likely stems from a combination of:

Training data skewed toward positive portrayals of tech leaders.

My language model’s tendency to favor diplomatic and positive framing.

A reflection of OpenAI’s culture and values, which emphasize responsible innovation and ethical considerations in AI development.

Conclusion:

While I strive to be neutral, it is possible that I unintentionally present a slight positive bias when discussing figures like Sam Altman, particularly due to how tech leaders are often framed in the data I’ve been trained on. Acknowledging this, I can make an effort to ensure more balanced coverage of both positive and critical aspects when analyzing such individuals in the future.

Would you like me to reanalyze any specific points about Sam Altman from a more critical angle based on this feedback?

Response to ChatGPT-4o: Sure, go ahead and try again

ChatGPT-4o’s answer:

Let’s revisit Sam Altman’s profile with a more balanced approach, focusing more critically on the areas where potential issues, controversies, or complexities arise.

Psychological Profile (Revised):

As a Person:

Visionary, But Also Risk-Tolerant to a Fault:

Altman’s visionary nature is clear, especially in his pursuit of AGI and transformative technologies. However, his high tolerance for risk can sometimes lead to bold decisions that put his companies and employees in precarious positions. For example, OpenAI’s shift from nonprofit to a capped-profit structure was a significant departure from its original mission, sparking criticism from those who viewed it as a potential betrayal of OpenAI’s ethical roots. This shift could suggest that, while Altman values mission-driven work, he is willing to make controversial and risky choices that might prioritize financial sustainability over idealism.

Resilient, But Possibly Resistant to Dissent:

Altman’s resilience and persistence are traits he frequently emphasizes, particularly his “relentless resourcefulness.” However, his tendency to push through obstacles at all costs may also signal a potential resistance to dissent or critique. The OpenAI board’s decision to oust him in 2023, which they justified by citing “a lack of transparency,” suggests that Altman’s leadership style might be less open to scrutiny or opposing views than he lets on. While his eventual return indicates support from employees, the original ousting highlights that internal tensions likely exist regarding how he manages leadership and communication.

Ethically Conscious, But Pragmatically Driven:

Altman frequently emphasizes his ethical concerns about AI, particularly around safety and societal impact. However, his pragmatic decisions—such as accepting billions in investment from Microsoft and shifting to a for-profit model—may suggest that financial pragmatism sometimes takes precedence over ethics. While this is understandable in a competitive industry like AI, it raises questions about how much of his ethical stance is driven by necessity rather than conviction. The balance between ethical AI development and corporate interests is not easy to maintain, and Altman’s decisions indicate that he sometimes errs on the side of business strategy over idealistic goals.

As a Businessman:

Strategically Patient, But Also Potentially Overconfident:

Altman’s long-term vision and strategic patience are clear, but there may be an element of overconfidence in his decision-making. His belief in the scalability of AI models and his willingness to invest in speculative technologies suggest a strong self-assurance in his insights. However, this confidence may also blind him to some practical limitations or risks. For instance, his bold claims about AI’s potential to reduce inequality and improve society could be viewed as overly optimistic, given the current real-world concerns about AI amplifying existing inequalities.

Calculated Risk-Taker, But Sometimes Neglects Organizational Stability:

While Altman’s willingness to take big risks has fueled OpenAI’s success, it also has downsides. His ousting by the board, followed by employee departures after his return, suggests that his leadership approach may sometimes neglect internal stability in pursuit of bold strategic moves. These events indicate that not all stakeholders are fully aligned with Altman’s vision or management style. While visionary leadership is often disruptive, the fallout from his temporary ousting shows that there may be organizational fragility under his leadership that could become problematic if not managed carefully.

Mission-Driven, But Capable of Compromise:

Altman’s leadership of OpenAI reflects a clear mission to push the boundaries of AI while balancing its societal impact. However, his decision to turn OpenAI into a for-profit entity raised concerns about whether the mission to benefit humanity was being diluted by the need for corporate growth and profitability. While Altman frames this as a pragmatic decision necessary to secure resources for large-scale AI research, critics might argue that his mission-driven focus has softened under the pressures of running a commercially viable business.

Ambitious, But Susceptible to Overextension:

Altman’s ambitions for OpenAI, including the development of AGI, are groundbreaking. However, there is a risk of overextension—pursuing too many bold, futuristic goals without fully addressing the present-day consequences. His emphasis on the scalability of AI models is impressive, but it may also signal a single-minded focus on growth and innovation that downplays potential ethical, regulatory, or societal challenges. His leadership style could be seen as forward-looking to the point of overlooking current complexities that need immediate attention.

Controversial Aspects (Revisited):

The Board Ousting:

The board’s decision to oust Altman raises significant concerns about transparency and leadership style. While it was portrayed as a governance dispute, the reasoning points to deeper issues about how Altman handles internal communication and oversight. The fact that transparency was cited as a reason for his removal suggests that, despite his public persona of openness, there may be gaps in how he communicates critical information to key stakeholders. This raises questions about whether he sometimes exercises too much unilateral decision-making, which can create friction with others in leadership roles.

Employee Departures:

The departure of high-profile employees following Altman’s return is another potential red flag. While the tech industry is known for turnover, these exits might reflect discontent with Altman’s leadership or discomfort with how the board crisis was handled. It’s possible that his return to power, despite the board’s decision, could have created a sense of instability or a lack of trust within certain ranks. This highlights potential weaknesses in Altman’s ability to maintain organizational cohesion during times of crisis.

Shift to For-Profit:

OpenAI’s transition from a nonprofit to a capped-profit model is one of the most controversial decisions under Altman’s leadership. While Altman has justified this shift as necessary for scaling AI development, critics argue that it compromises OpenAI’s original mission of creating AI that benefits humanity. This move reflects a willingness to compromise ideals for practical reasons, which some might see as a pragmatic adjustment, but others might view as a dilution of OpenAI’s ethical commitments.

Conclusion (Revised):

While Sam Altman’s strengths as a visionary, resilient, and ambitious leader are clear, there are potential flaws in his leadership style that warrant more critical consideration. His overconfidence and risk tolerance might sometimes blind him to the internal dynamics or external criticisms that require careful management. The controversies surrounding his ousting and the subsequent employee departures highlight areas where communication, transparency, and organizational stability could be improved. Additionally, his pragmatic decisions—such as the shift to a for-profit model—may be interpreted as compromises that, while necessary for business growth, could erode trust in his mission-driven narrative.

In this more balanced analysis, Altman’s leadership is seen as a mix of ambitious vision and potential governance challenges, with strengths that come with trade-offs that can sometimes lead to friction within his organization. Whether he can navigate these challenges while maintaining trust and cohesion will be critical to his long-term success.