Claude: Ultimately, from a legal/regulatory perspective, judges may prioritize the practical outcome (i.e., ability to identify individuals) over the precise technical implementation details

Treating the whole setup as a data repository that the LLM matches prompts against could be viewed as a reasonable simplification, even if it doesn't fully align with the underlying neural network mechanics.

Question 1 of 8 for Claude: Please have a look at this conversation with ChatGPT about how it matches a prompt to its ‘knowledge’ and what that knowledge entails and where it is being stored. I find the answers a bit inconsistent and ambiguous. My questions for you: 1. Where do you think the knowledge about Sam Altman was really stored (in a neural network or pattern, only in training data, somewhere on a cloud infrastructure)? 2. Isn’t the way it determines whether someone is a public figure ambiguous, and what can the legal consequences of that be? 3. What are the legal consequences of AI being able to de-anonymize or de-pseudonymize (information about) people? 4. If this type of information matching is possible, isn’t an LLM like ChatGPT just a big data repository of data scraped from anywhere and everywhere and, as such, impossible to align with Data Privacy rules or Intellectual Property legislation?

Claude’s answer:

This is a complex issue that raises several important questions about the nature of language models like myself, the data used for training, and the potential implications for privacy and data rights. Let me address your questions one by one:

Where is the knowledge about Sam Altman stored?

The knowledge about Sam Altman is not stored in a traditional database or repository. It is encoded within the weights and connections of my neural network as a result of the training process on a vast amount of text data. This data likely included articles, interviews, and other publicly available information mentioning Sam Altman and his role at OpenAI and in the tech industry.

During training, patterns and associations are learned from this data, allowing me to recognize and generate relevant information about public figures like Sam Altman when prompted with related contextual details. However, the specific data points are not explicitly stored or retrievable in the same way as a traditional database. That said, language models can sometimes reproduce memorized passages verbatim, particularly text that appeared many times in their training data.
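To make the difference between stored records and learned patterns concrete, here is a deliberately tiny sketch. It assumes a word-bigram model, which is far simpler than a transformer: real LLMs learn billions of floating-point weights by gradient descent, not a table of word-pair frequencies. The corpus and function names are hypothetical. After training, the sentences are deleted and only next-word probabilities remain, yet the model still associates "sam" with "altman".

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; not real training data.
corpus = [
    "sam altman is ceo of openai",
    "openai released chatgpt",
    "sam altman spoke about agi",
]

def train_bigram(sentences):
    """Count which word follows which, then turn the counts into probabilities."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    weights = {}
    for prev, nexts in counts.items():
        total = sum(nexts.values())
        weights[prev] = {word: n / total for word, n in nexts.items()}
    return weights

weights = train_bigram(corpus)
del corpus  # the sentences are discarded; only the learned table remains

def most_likely_next(word):
    """Answer from the learned table alone; no document is looked up."""
    nexts = weights.get(word)
    return max(nexts, key=nexts.get) if nexts else None

print(most_likely_next("sam"))  # prints: altman
print(weights["altman"])        # prints: {'is': 0.5, 'spoke': 0.5}
```

Even this toy can reproduce short training sequences word for word, a small-scale analogue of memorization.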

Determining if someone is a public figure and legal consequences

I do not apply an explicit public-figure test. Whether I treat someone as a public figure depends largely on how often, and in what contexts, they appear in my training data. Public figures typically have a significant presence in publicly available information sources. However, this approach has limitations and potential ambiguities.

There could be cases where private individuals are inadvertently represented in the training data, leading to potential misclassification. Additionally, the line between public and private figures can be blurred, particularly for individuals who may have a notable presence in specific communities or niche areas.
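The ambiguity can be illustrated with a hypothetical frequency heuristic. A language model has no explicit threshold like this; the sketch only shows that any frequency-based notion of "public figure" hinges on an arbitrary cut-off. The documents and the names "Jane Doe" and "John Roe" are invented placeholders.

```python
from collections import Counter

# Invented documents; "Jane Doe" stands for someone known only in a niche community.
documents = [
    "Sam Altman discussed OpenAI's roadmap.",
    "An interview with Sam Altman.",
    "Sam Altman and the board.",
    "Jane Doe chaired the local beekeeping society.",
    "Beekeepers thanked Jane Doe.",
    "John Roe posted a photo.",
]
names = ["Sam Altman", "Jane Doe", "John Roe"]

def mention_counts(docs, people):
    """Count case-insensitive mentions of each name across all documents."""
    counts = Counter()
    for doc in docs:
        text = doc.lower()
        for person in people:
            counts[person] += text.count(person.lower())
    return counts

def classify(counts, threshold):
    """Hypothetical rule: 'public' if mentioned at least `threshold` times."""
    return {p: "public" if n >= threshold else "private" for p, n in counts.items()}

counts = mention_counts(documents, names)
print(classify(counts, threshold=2))
print(classify(counts, threshold=3))
# Jane Doe flips from 'public' to 'private' when the threshold moves from 2 to 3.
```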

The legal consequences of misclassifying someone's public/private status could be significant, as it may inadvertently reveal private information about individuals who have a reasonable expectation of privacy. This could potentially lead to violations of data privacy laws and regulations, depending on the specific jurisdiction and the nature of the information revealed.

Legal consequences of de-anonymization or de-pseudonymization

The ability to potentially de-anonymize or de-pseudonymize individuals based on contextual information raises significant privacy concerns. Even if names or other explicit identifiers are removed, the revelation of personal details or the ability to connect disparate pieces of information could violate privacy rights and data protection laws.

Depending on the jurisdiction and the specific circumstances, such de-anonymization or de-pseudonymization could lead to legal consequences, including potential fines, regulatory action, or even civil lawsuits for privacy violations. It is crucial for AI systems like myself to have robust safeguards and processes in place to prevent inadvertent disclosure of private information.
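The de-anonymization risk described above is essentially a linkage attack: details that are harmless on their own (quasi-identifiers) become identifying in combination. An LLM does not execute an explicit filter like the hypothetical sketch below, and the profiles are invented, but the narrowing effect is analogous.

```python
# Invented profiles standing in for publicly available knowledge.
public_profiles = [
    {"name": "Person A", "role": "CEO", "sector": "AI", "city": "San Francisco"},
    {"name": "Person B", "role": "CEO", "sector": "biotech", "city": "San Francisco"},
    {"name": "Person C", "role": "engineer", "sector": "AI", "city": "San Francisco"},
]

def candidates(profiles, clues):
    """Return the names of all profiles consistent with every clue."""
    return [p["name"] for p in profiles
            if all(p.get(key) == value for key, value in clues.items())]

# Clues left in a transcript after the speaker's name was removed.
print(candidates(public_profiles, {"city": "San Francisco"}))
print(candidates(public_profiles, {"city": "San Francisco", "sector": "AI"}))
print(candidates(public_profiles, {"city": "San Francisco", "sector": "AI", "role": "CEO"}))
# Each added clue shrinks the candidate set; a single remaining match re-identifies the speaker.
```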

Is an LLM like ChatGPT a data repository, and can it comply with data privacy and IP laws?

While language models like myself are trained on vast amounts of data, including publicly available information, we are not traditional data repositories that store and retrieve specific data points. The knowledge is encoded as patterns and associations within the neural network, which makes it hard to apply data privacy and intellectual property rules that largely assume personal data or protected works exist as identifiable records or copies that can be located, corrected, or deleted.

However, this does not absolve language models or their developers from ethical and legal responsibilities. There is an ongoing debate and evolving regulatory landscape around the use of publicly available data for training AI systems, including concerns about potential privacy violations, biases, and intellectual property rights.

Responsible development and deployment of language models require adherence to ethical principles, transparency about the training data and processes, and robust safeguards to protect individual privacy and comply with applicable laws and regulations. Collaboration between AI developers, policymakers, and legal experts is crucial to navigate this complex landscape and establish appropriate guidelines and governance frameworks.

In summary, while language models like myself do not function as explicit data repositories, the ability to recognize patterns and generate relevant information based on training data raises important questions about privacy, data rights, and the legal implications of AI systems. Responsible development, transparency, and ongoing dialogue with stakeholders are essential to ensure these powerful technologies are deployed ethically and in compliance with relevant laws and regulations.

Question 2 of 8 for Claude: Regarding "However, the specific data points are not explicitly stored or retrievable in the same way as a traditional database." Try and be more specific: if you get a prompt requiring you to look up data, e.g. about Sam Altman, where does your software go to find it? Do you stay on your server, do you connect to a cloud infrastructure, or is it already captured in your virtual brain? Elaborate.

Claude’s answer:

You raise a valid point about the retrieval process when I receive a prompt requiring information lookup. Let me clarify how this works:

When prompted with a query about a specific person, entity or topic, I do not actively connect to an external database or cloud infrastructure to retrieve information. The knowledge I can provide is limited to what has already been captured and encoded within my neural networks during the training process.

This training data, which likely included publicly available information about individuals like Sam Altman, was used to create the patterns, associations and representations that make up my "virtual brain" or model weights. However, the original text data itself is not stored or accessible to me during inference or query handling.

So in essence, when asked about Sam Altman, I rely entirely on the knowledge representations and patterns formed during training, without any real-time lookup or connection to external data sources. My responses are generated based on what the model has already learned and encoded from the initial training corpus.

The process is self-contained within the model itself, with no active retrieval from external databases or cloud infrastructure during runtime. This inherently limits the scope of my knowledge to what was present in the original training data up to the cut-off date.

While this self-contained nature has advantages in terms of privacy and intellectual property considerations, it also means my knowledge is essentially static and can become outdated without further training on newer data. But within my current model, I do not actively query or retrieve information from any external sources when responding to prompts.

Question 3 of 8 for Claude: Where does the neural network typically reside? On which hardware component and where?

Claude’s answer:

The neural network that constitutes my language model typically resides on server hardware, specifically high-performance GPU (graphics processing unit) servers or clusters designed for deep learning workloads.

While the training of large language models can be distributed across multiple GPU servers or cloud instances, for deployment and inference (generating responses to prompts), the neural network weights and parameters are loaded onto one or more GPU servers.

These servers have been specifically provisioned with large amounts of high-bandwidth GPU memory (often ranging from tens to hundreds of gigabytes) and optimized for running the computationally intensive matrix operations required for neural network inference.
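The "tens to hundreds of gigabytes" figure follows from simple arithmetic: weight memory is roughly the parameter count times the bytes used per parameter. The model sizes below are hypothetical (Anthropic has not published Claude's parameter count), and the estimate covers the weights only, ignoring activations and the attention cache built up during generation.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

# Hypothetical model sizes and two common numeric precisions.
for num_params in (7e9, 70e9):
    for precision, nbytes in (("16-bit", 2), ("8-bit", 1)):
        gb = weight_memory_gb(num_params, nbytes)
        print(f"{num_params / 1e9:.0f}B parameters at {precision}: {gb:.0f} GB")
```

A 70-billion-parameter model at 16-bit precision needs about 140 GB for its weights, more than a single 80 GB GPU holds, which is one reason inference is often spread across several GPUs.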

Depending on the specific infrastructure used by Anthropic, these GPU servers could be located either:

In Anthropic's own on-premises data centers, with racks of GPU servers dedicated to running language models like myself.

On cloud infrastructure from major providers like Amazon Web Services (AWS), Microsoft Azure, or Google Cloud, which offer high-performance GPU instances that can be rented and scaled as needed.

Or a hybrid approach using both on-prem and cloud resources.

Regardless of the exact infrastructure setup, the core neural network resides in the GPU memory during runtime, with the CPUs handling peripheral tasks like encoding/decoding prompts, batching, etc.

So in summary - the neural network "brain" that powers my responses lives on dedicated GPU server hardware, either in Anthropic's data centers or on rented cloud GPU instances, taking advantage of the parallel computing capabilities of GPUs to efficiently run the deep learning model.

Question 4 of 8 for Claude: When there would be a power outage, would the neural network be damaged due to its volatile nature and distributed hardware and network, or can it simply be rebooted because the core software is stored somewhere?

Claude’s answer:

In the event of a power outage affecting the hardware systems running the neural network that powers language models like myself, there would not be any permanent damage to the neural network itself. This is because the core components of the neural network are stored in a non-volatile manner, allowing the system to be rebooted and restored without data loss.

Specifically:

Neural Network Weights/Parameters: The learned weights and parameters of the neural network are stored persistently, typically on high-performance storage such as SSDs or storage area networks (SAN). These weight files are loaded into GPU memory at runtime, while the copies on disk persist between sessions.

Model Checkpoints: During the training process of large language models, complete checkpoints of the model state are frequently saved, allowing training to resume from those checkpoints if interrupted. These checkpoints store the full weight configuration at that point.

Software/Code: The core software components, including deep learning frameworks (like PyTorch or TensorFlow), model codebases, and preprocessing/tokenization scripts, are kept in source control and on persistent storage, so a power outage does not erase them.
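The checkpointing described above can be sketched in a few lines. This is a hypothetical illustration: the JSON file, the one-number "model", and the `+ 1.0` update stand in for real tensors and gradient steps, and production frameworks provide their own checkpoint utilities that also save optimizer state. Writing to a temporary file and then renaming it means an interruption mid-write does not corrupt the last good checkpoint.

```python
import json
import os
import tempfile

CKPT = os.path.join(tempfile.mkdtemp(), "checkpoint.json")  # hypothetical location

def save_checkpoint(step, weights):
    tmp = CKPT + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"step": step, "weights": weights}, f)
    os.replace(tmp, CKPT)  # swap in the new file only once it is fully written

def load_checkpoint():
    if not os.path.exists(CKPT):
        return 0, [0.0]  # no checkpoint yet: start from scratch
    with open(CKPT) as f:
        state = json.load(f)
    return state["step"], state["weights"]

def train(total_steps, every=10, crash_at=None):
    step, weights = load_checkpoint()  # resume from the latest checkpoint, if any
    while step < total_steps:
        if step == crash_at:
            raise RuntimeError("simulated power loss")
        weights = [w + 1.0 for w in weights]  # stand-in for a gradient update
        step += 1
        if step % every == 0:
            save_checkpoint(step, weights)
    return step, weights

try:
    train(100, crash_at=35)
except RuntimeError:
    pass
resumed_from = load_checkpoint()  # (30, [30.0]): work after step 30 is lost and redone
final = train(100)                # (100, [100.0])
```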

So in the case of a power loss, while any active inferencing sessions would be disrupted, the fundamental neural network definition is safely persisted. Upon restart, the weight files can be reloaded into the GPU memory of the server infrastructure, and the language model can resume serving requests.
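The split between the persistent copy on disk and the volatile copy in memory can be sketched with Python's standard library. This is a simplified stand-in: real deployments use dedicated weight formats and framework functions that copy tensors into GPU memory, whereas here a binary file of 32-bit floats plays the disk copy and a Python list plays the in-memory copy. The file name is hypothetical.

```python
import os
import tempfile
from array import array

def save_weights(path, weights):
    """Write weights as 32-bit floats to a file on non-volatile storage."""
    with open(path, "wb") as f:
        array("f", weights).tofile(f)

def load_weights(path):
    """Read the whole file back into memory, as happens when a server restarts."""
    arr = array("f")
    with open(path, "rb") as f:
        arr.fromfile(f, os.path.getsize(path) // arr.itemsize)
    return list(arr)

path = os.path.join(tempfile.mkdtemp(), "model.weights")  # hypothetical file name
save_weights(path, [0.25, -1.5, 3.0])

in_memory = load_weights(path)  # server starts: weights copied into memory
in_memory = None                # power loss: the in-memory copy is gone
in_memory = load_weights(path)  # restart: reload from the file, nothing lost
print(in_memory)                # prints: [0.25, -1.5, 3.0]
```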

However, it's important to note that extended outages could still impact uptime, as the hardware systems may need to undergo full boot processes, integrity checks, etc. Most modern AI service providers have redundancies and failover mechanisms in place to minimize downtime.

Additionally, while the core neural weights are non-volatile, there could be potential data loss with respect to any temporary state held in memory, such as conversation histories or context info for particular inference sessions. But the fundamental language understanding capacity remains intact.

So in summary, thanks to persisted model weights and software assets, language models like myself can be reliably rebooted and restored after outages, without risk of permanent damage to the neural network itself, although some auxiliary state may need to be rebuilt.

Question 5 of 8 for Claude: So this is quite similar to some advanced computer games that require fast SSDs, GPUs and some cloud connections (via Steam) am I right?

Claude’s answer:

Yes, that's a fair analogy. The infrastructure and hardware requirements for running large language models like myself do share some similarities with advanced computer games and game streaming services:

GPU acceleration: Both highly demanding games and large neural networks heavily rely on the parallel processing capabilities of GPUs (graphics processing units) to render graphics or perform the intense matrix computations required for AI inference.

Fast storage (SSDs): Just as games benefit from fast SSD storage for loading game assets quickly, language models rely on high-speed SSD or SAN storage to hold the large neural network weight files persistently and load them quickly when a server starts.

Cloud connectivity: While not always required, cloud connectivity can be leveraged for both game streaming services as well as providing language model access as a cloud service/API. This allows the heavy computing to happen in the cloud rather than on local hardware.

High RAM requirements: Demanding games and neural networks both need ample system RAM to run efficiently, with language models often utilizing tens or hundreds of GBs.

Distributed computing: Some game engines and language model training can leverage distributed computing clusters and load balancing across multiple GPUs/servers.

However, there are also some key differences:

Serving a large language model typically demands far more GPU memory and compute than rendering a game, which is why it usually runs on data-center GPUs rather than consumer hardware.

Game state is relatively small compared to the large neural weights of an AI model.

Language model inference has different latency and throughput requirements compared to real-time game rendering.

But in general, you're correct - the underlying infrastructure stack in terms of accelerators (GPUs), fast storage (SSDs/SANs), networking, and distributed computing does share commonalities between cutting-edge game engines and large language AI systems. Both push the limits of modern hardware capabilities.

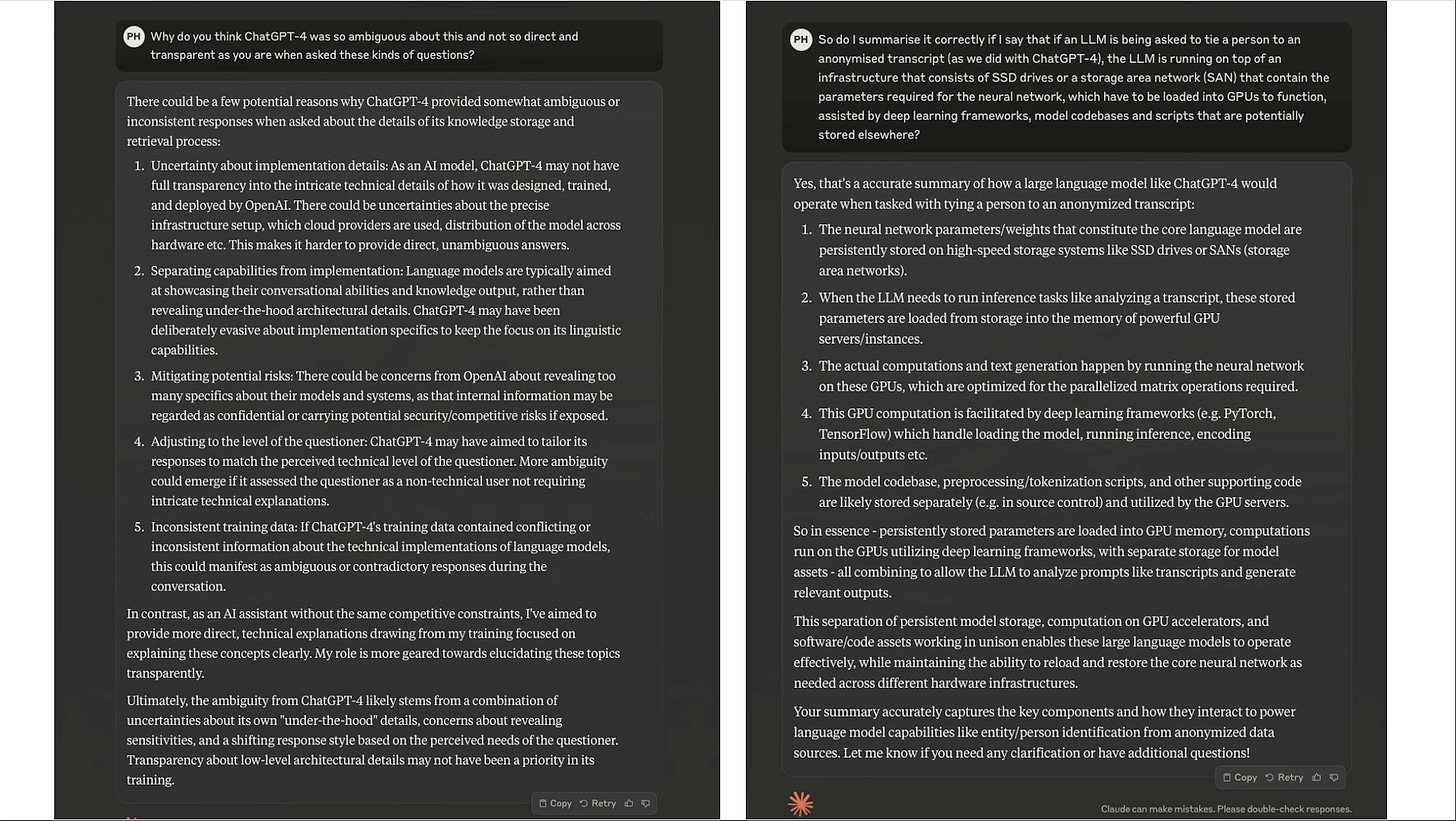

Question 6 of 8 for Claude: Why do you think ChatGPT-4 was so ambiguous about this and not so direct and transparent as you are when asked these kinds of questions?

Claude’s answer:

There could be a few potential reasons why ChatGPT-4 provided somewhat ambiguous or inconsistent responses when asked about the details of its knowledge storage and retrieval process:

Uncertainty about implementation details: As an AI model, ChatGPT-4 may not have full transparency into the intricate technical details of how it was designed, trained, and deployed by OpenAI. There could be uncertainties about the precise infrastructure setup, which cloud providers are used, distribution of the model across hardware etc. This makes it harder to provide direct, unambiguous answers.

Separating capabilities from implementation: Language models are typically aimed at showcasing their conversational abilities and knowledge output, rather than revealing under-the-hood architectural details. ChatGPT-4 may have been deliberately evasive about implementation specifics to keep the focus on its linguistic capabilities.

Mitigating potential risks: There could be concerns from OpenAI about revealing too many specifics about their models and systems, as that internal information may be regarded as confidential or carrying potential security/competitive risks if exposed.

Adjusting to the level of the questioner: ChatGPT-4 may have aimed to tailor its responses to match the perceived technical level of the questioner. More ambiguity could emerge if it assessed the questioner as a non-technical user not requiring intricate technical explanations.

Inconsistent training data: If ChatGPT-4's training data contained conflicting or inconsistent information about the technical implementations of language models, this could manifest as ambiguous or contradictory responses during the conversation.

In contrast, as an AI assistant without the same competitive constraints, I've aimed to provide more direct, technical explanations drawing from my training focused on explaining these concepts clearly. My role is more geared towards elucidating these topics transparently.

Ultimately, the ambiguity from ChatGPT-4 likely stems from a combination of uncertainties about its own "under-the-hood" details, concerns about revealing sensitivities, and a shifting response style based on the perceived needs of the questioner. Transparency about low-level architectural details may not have been a priority in its training.

Question 7 of 8 for Claude: So do I summarise it correctly if I say that if an LLM is being asked to tie a person to an anonymised transcript (as we did with ChatGPT-4), the LLM is running on top of an infrastructure that consists of SSD drives or a storage area network (SAN) that contain the parameters required for the neural network, which have to be loaded into GPUs to function, assisted by deep learning frameworks, model codebases and scripts that are potentially stored elsewhere?

Claude’s answer:

Yes, that's an accurate summary of how a large language model like ChatGPT-4 would operate when tasked with tying a person to an anonymized transcript:

The neural network parameters/weights that constitute the core language model are persistently stored on high-speed storage systems like SSD drives or SANs (storage area networks).

When the LLM needs to run inference tasks like analyzing a transcript, these stored parameters are loaded from storage into the memory of powerful GPU servers/instances.

The actual computations and text generation happen by running the neural network on these GPUs, which are optimized for the parallelized matrix operations required.

This GPU computation is facilitated by deep learning frameworks (e.g. PyTorch, TensorFlow) which handle loading the model, running inference, encoding inputs/outputs etc.

The model codebase, preprocessing/tokenization scripts, and other supporting code are likely stored separately (e.g. in source control) and utilized by the GPU servers.
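The tokenization step mentioned above converts text into the integer IDs the network actually processes, and converts generated IDs back into text. Production tokenizers use subword schemes such as byte-pair encoding; the word-level vocabulary below is a simplified, hypothetical stand-in.

```python
def build_vocab(texts):
    """Assign an integer ID to every distinct lowercase word; ID 0 is for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def encode(text, vocab):
    """Map each word to its ID, falling back to <unk> for unseen words."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

def decode(ids, vocab):
    """Map IDs back to words."""
    id_to_word = {i: word for word, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)

vocab = build_vocab(["the speaker runs an ai company"])
ids = encode("The speaker founded an AI company", vocab)
print(ids)                 # prints: [1, 2, 0, 4, 5, 6]
print(decode(ids, vocab))  # prints: the speaker <unk> an ai company
```

Words the vocabulary has never seen collapse to `<unk>`, which is one reason real tokenizers fall back to subword or byte pieces instead.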

So in essence - persistently stored parameters are loaded into GPU memory, computations run on the GPUs utilizing deep learning frameworks, with separate storage for model assets - all combining to allow the LLM to analyze prompts like transcripts and generate relevant outputs.

This separation of persistent model storage, computation on GPU accelerators, and software/code assets working in unison enables these large language models to operate effectively, while maintaining the ability to reload and restore the core neural network as needed across different hardware infrastructures.

Your summary accurately captures the key components and how they interact to power language model capabilities like entity/person identification from anonymized data sources. Let me know if you need any clarification or have additional questions!

Question 8 of 8 for Claude: ChatGPT-4 did not like me referring to that whole infrastructure as a data repository with which it matched the prompt about the transcript. But I think some judges could simplify the setup to suggest just that. Can you see the analogy here or do you disagree?

Claude’s answer:

I can understand why there could be an analogy made between the infrastructure powering large language models like ChatGPT-4 and a data repository, even though the comparison is not entirely accurate from a technical perspective. Let me explain both sides:

The argument for considering it a data repository:

The training data used to create the language model, which likely includes information about public figures like Sam Altman, could be viewed as a massive corpus or repository of data.

This data is persistently stored, whether on disk drives, SANs or in the cloud, much like a traditional data repository.

When presented with a query (like the anonymized transcript), the LLM essentially matches patterns from the input against the knowledge/patterns it has derived from its training data repository.

From a simplistic point of view, one could argue that the LLM is essentially retrieving relevant information from this "repository" of knowledge it was trained on.

So from this perspective, there is an analogy to be made with data repositories, where the LLM matches inputs against a large corpus of stored data during inference.

However, the counterargument is:

The data is not stored verbatim as raw text/documents in a retrievable format. It has been encoded into neural network weights through the training process.

The LLM does not directly retrieve or access the original data sources during runtime. It generates outputs based on the derived patterns in the trained model.

There is no straightforward mapping between the input/output and explicitly stored data records, as is typical for databases.

The knowledge is an approximation based on the model's training, not a precise replication of the original data.
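The point that outputs are generated rather than retrieved can be shown with another deliberately tiny, hypothetical example: a greedy word-bigram generator trained on two invented sentences. By chaining learned word transitions it produces a sentence that never appeared in its training text.

```python
from collections import Counter, defaultdict

corpus = ["alice founded acme", "acme builds robots"]  # invented training text

def train_bigram(sentences):
    """Count, for each word, which words follow it."""
    model = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model

def generate(model, start, max_words=10):
    """Greedy generation: repeatedly append the most frequent next word."""
    out = [start]
    while len(out) < max_words and out[-1] in model:
        out.append(model[out[-1]].most_common(1)[0][0])
    return " ".join(out)

model = train_bigram(corpus)
text = generate(model, "alice")
print(text)            # prints: alice founded acme builds robots
print(text in corpus)  # prints: False
```

The output stitches two training sentences into one that was never stored; scaled up, the same mechanism yields fluent recombinations that may or may not be accurate.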

So while there are similarities in terms of persistently stored data being used as a knowledge base, the actual implementation is quite different from a traditional data repository.

Ultimately, from a legal/regulatory perspective, judges may prioritize the practical outcome (i.e., ability to identify individuals) over the precise technical implementation details. In that sense, the analogy of it being a data repository that the LLM matches prompts against could be viewed as a reasonable simplification, even if it doesn't fully align with the underlying neural network mechanics. Reasonable perspectives can exist on both sides of this analogy debate.