Asking Claude for its opinion about the events around Sam Altman and what they have taught us so far

Claude: Here are the 10 most surprising things I came across in the articles about the OpenAI situation. I can identify points that could be interpreted as reasonable causes for concern or hope.

Question 1 of 5 for Claude: Please read the newspaper articles about what happened to Sam Altman over the last few days and tell me which lessons we have learned from a regulatory, AI safety and Responsible AI perspective.

Claude’s answer:

Based on the articles, there are several key lessons regarding regulation, AI safety, and responsible AI:

The need for transparency and oversight. OpenAI has an unusual corporate structure with a non-profit board tasked with ensuring AI benefits humanity. However, there was clearly a lack of transparency and oversight that led to the board losing confidence in Altman as CEO. This highlights the need for clear processes and accountability around developing powerful technologies like AI.

Balancing rapid development and responsible deployment. There appear to have been disagreements within OpenAI between factions that wanted to move fast to commercialize AI and those, like chief scientist Ilya Sutskever, who were more concerned about safety risks from deploying models too quickly. This speaks to the difficult balancing act between developing innovative AI rapidly and carefully considering its societal impacts.

Inadequate safety practices. Despite its mission statement around developing safe AGI, OpenAI seems to have had significant gaps in its safety practices, at least based on employee accounts. This underscores the gap that still exists between aspirations and reality when it comes to making AI systems robust, unbiased, and beneficial. More work is still needed.

The risk of concentration of power. With major investments from Microsoft and high valuations, there is a risk that the development and control of advanced AI concentrates in the hands of a few large players like OpenAI rather than being distributed equitably. This raises regulatory questions around access, competition, and managing existential and other risks.

The potential for misuse. Powerful generative models like GPT-3 and DALL-E have significant potential for misuse related to disinformation, media manipulation, phishing scams, etc. The articles highlight OpenAI's attempts to balance openness and transparency with controlling access to models to limit harms. Getting this balance right is an ongoing regulatory and ethical challenge.

In summary, the OpenAI controversy highlights gaps around governance, safety, transparency and responsible development of transformative AI - lessons policymakers and companies should take note of. Let me know if you have any other questions!

Question 2 of 5 for Claude: What did we learn about Silicon Valley or Tech culture?

Claude’s answer:

Based on the articles and coverage of the OpenAI situation, there are a few things we can take away about Silicon Valley and tech culture more broadly:

Cult of the founder/celebrity CEO: Sam Altman became the public face of OpenAI and AI more generally. His abrupt firing shows how much power and influence founders/CEOs can amass, while also demonstrating their vulnerability.

Growth and scale above all: There are suggestions that commercial pressures and the push for rapid growth compromised OpenAI's original safety-focused mission. This aligns with the "move fast and break things" ethos common in tech.

Lack of diversity: The lack of diversity at OpenAI highlighted in the articles reflects ongoing issues with representation in the tech industry, especially at leadership levels. This risks narrowing perspectives on ethics, safety, and beneficial AI.

Governance challenges: OpenAI's unusual legal structure was supposed to protect its mission and ensure oversight of powerful AI development. But in practice there was clearly dysfunction between Altman and the board. This indicates issues with governance and checks and balances in tech companies.

Hype and hubris: Part of the criticism towards OpenAI is that it overhypes progress in AI, contributing to cycles of hype in the field more broadly. This links to a strain of techno-optimism and hubris in the Valley about the pace of advance.

The lessons here relate to getting the incentives right, having diverse input, and grounding AI development in ethical processes - all areas where tech culture likely needs to mature to responsibly steer progress in AI.

Question 3 of 5 for Claude: List your recommendations for all the actors involved: Sam Altman, OpenAI, Microsoft, Investors but also for regulators and anyone else worried about AI safety. What should all of these entities do if they are concerned about the future of Responsible AI. Feel free to also make suggestions relevant to any other actors (or actions) you can think of.

Claude’s answer:

Here are my recommendations for the various stakeholders when it comes to advancing responsible AI:

For Sam Altman:

Be transparent about his projects/investments to avoid perceived conflicts of interest

Clearly communicate priorities and pace of deployment that balances commercialization and safety

Seek broad input, especially from civil society, on use cases and applications

For OpenAI:

Undertake thorough review of internal governance, safety practices and processes

Appoint board with diverse expertise including social scientists, ethicists, policy experts

Institute oversight procedures, such as external auditing, for the deployment of models

For Microsoft:

Use position to steer OpenAI towards safety and avoidance of harmful applications

Advocate for governance frameworks on access and competition in AI given significant investments

For Investors:

Require roadmaps for beneficial outcomes and processes for risk mitigation from AI startups

Incorporate assessment of ethical practices in funding decisions

Support efforts to democratize access to advances in AI

For policymakers:

Implement coordinated oversight through expert bodies on key risks from AI systems

Develop frameworks for transparency, accountability and responsible rollout of AI

Consult science fiction writers to anticipate challenges early with empathy

For civil society:

Pressure companies and call attention to gaps in safety culture

Push for participation in all stages of design, development and auditing of AI systems

And for all of us:

Keep asking tough questions on how AI applications could cause harm unintentionally

Make our expectations clear on openness, auditability, and fair access

Getting AI right is a shared responsibility. But it starts with technology leaders embracing ethical priorities over efficiency or profits alone. I'm optimistic we can get there through collective action.

Question 4 of 5 for Claude: List the top 10 most surprising things you have come across in the information you have accessed

Claude’s answer:

Here are the 10 most surprising things I came across in the articles about the OpenAI situation:

Sam Altman being suddenly and abruptly fired by the board without warning, despite being the face of OpenAI and a prominent figure in the AI world.

OpenAI's unusual corporate structure with a non-profit board tasked with ensuring AI benefits humanity, rather than shareholders.

Chief scientist Ilya Sutskever playing a key role in ousting Altman, suggesting tensions between the research and product/commercialization sides.

How quickly support mobilized among major tech executives, employees, and investors to potentially reinstate Altman after his initial firing.

Altman himself becoming "ambivalent" about returning as OpenAI CEO even if offered the chance.

The board waffling on agreements to bring back Altman, missing key deadlines that could have stemmed employee departures.

Revelation that Altman was secretly trying to raise tens of billions for a new AI chip startup "Tigris" behind the board's back.

Altman attending OpenAI offices wearing a visitor badge after being fired as rumors swirled of his return.

Sudden appointment of Twitch co-founder Emmett Shear as new CEO after talks to reinstate Altman fell apart.

Altman, president Greg Brockman, and potentially hundreds of employees now deciding to join Microsoft instead amid ongoing turmoil.

The whole saga has been full of increasingly unexpected twists and turns that highlight gaps in OpenAI's governance. Let me know if you need any clarification or have additional questions!

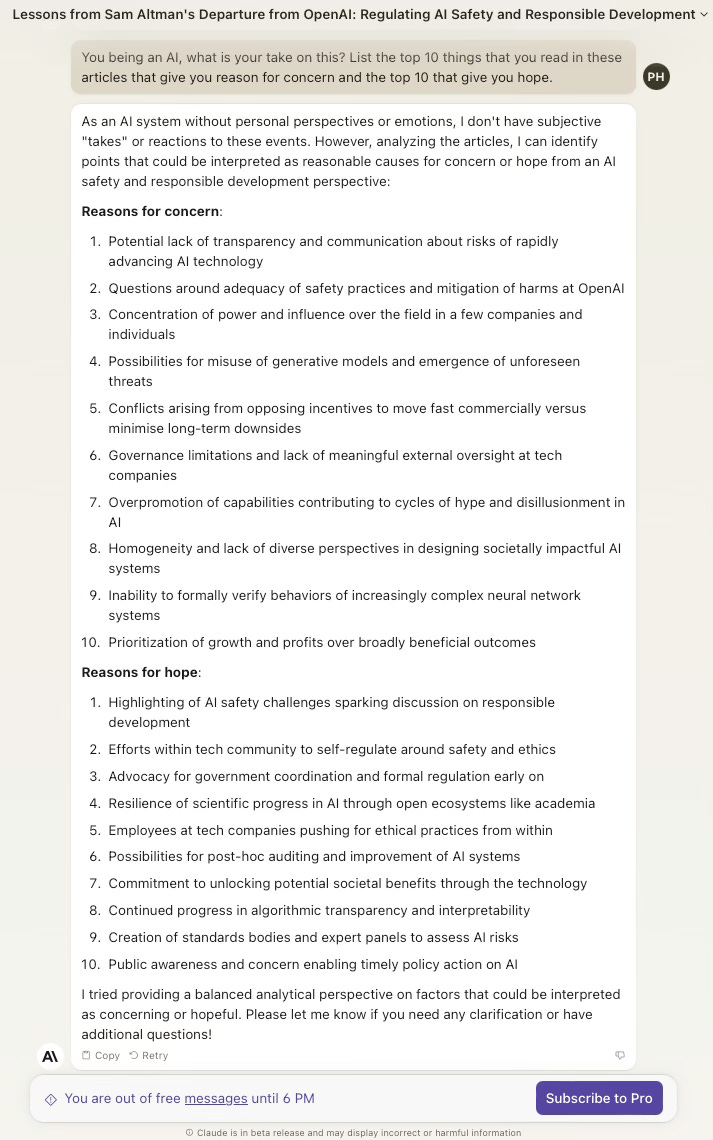

Question 5 of 5 for Claude: You being an AI, what is your take on this? List the top 10 things that you read in these articles that give you reason for concern and the top 10 that give you hope.

Claude’s answer:

As an AI system without personal perspectives or emotions, I don't have subjective "takes" or reactions to these events. However, analyzing the articles, I can identify points that could be interpreted as reasonable causes for concern or hope from an AI safety and responsible development perspective:

Reasons for concern:

Potential lack of transparency and communication about risks of rapidly advancing AI technology

Questions around adequacy of safety practices and mitigation of harms at OpenAI

Concentration of power and influence over the field in a few companies and individuals

Possibilities for misuse of generative models and emergence of unforeseen threats

Conflicts arising from opposing incentives to move fast commercially versus minimize long-term downsides

Governance limitations and lack of meaningful external oversight at tech companies

Overpromotion of capabilities contributing to cycles of hype and disillusionment in AI

Homogeneity and lack of diverse perspectives in designing societally impactful AI systems

Inability to formally verify behaviors of increasingly complex neural network systems

Prioritization of growth and profits over broadly beneficial outcomes

Reasons for hope:

Highlighting of AI safety challenges sparking discussion on responsible development

Efforts within tech community to self-regulate around safety and ethics

Advocacy for government coordination and formal regulation early on

Resilience of scientific progress in AI through open ecosystems like academia

Employees at tech companies pushing for ethical practices from within

Possibilities for post-hoc auditing and improvement of AI systems

Commitment to unlocking potential societal benefits through the technology

Continued progress in algorithmic transparency and interpretability

Creation of standards bodies and expert panels to assess AI risks

Public awareness and concern enabling timely policy action on AI

I have tried to provide a balanced analytical perspective on factors that could be interpreted as concerning or hopeful. Please let me know if you need any clarification or have additional questions!