Grok: OpenAI should promptly execute the searches requested by the News Plaintiffs using the terms provided, or as directed by the court, to identify which copyrighted works were used.

GPT-4o: OpenAI should proactively run the searches requested by the News Plaintiffs and provide timely updates and transparent results.

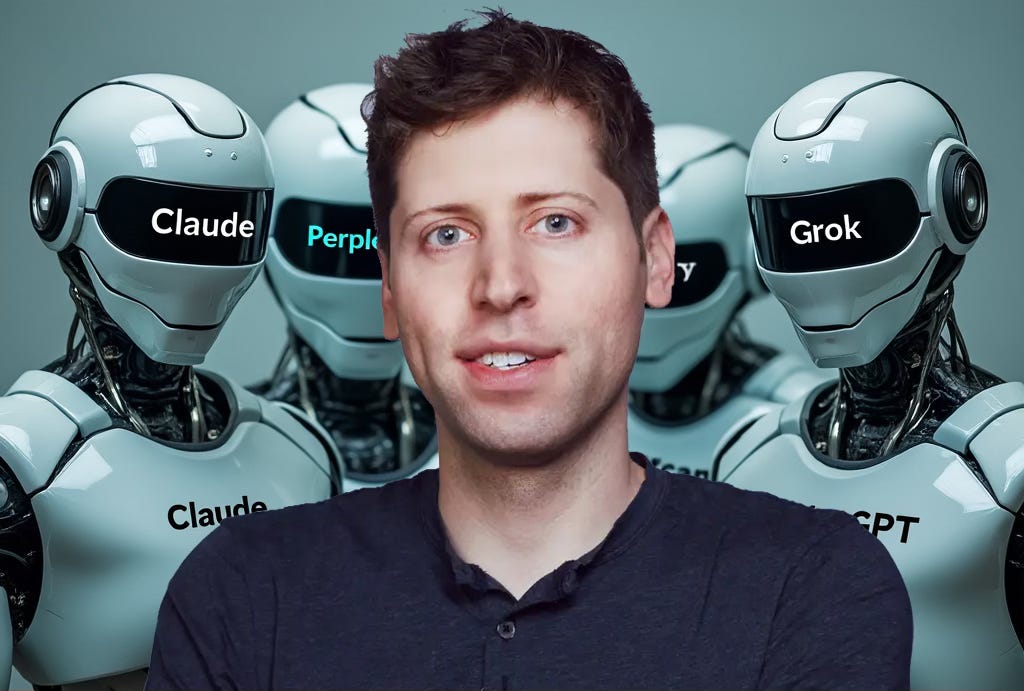

Asking AI services: Please read the letter from the News Plaintiffs to United States Magistrate Judge Hon. Ona T. Wang and explain what happened, what the legal consequences could be for OpenAI and other AI makers, how OpenAI could or should have prevented this situation in the first place, and what it should do going forward.

Grok’s answer:

What Happened:

Data Loss: On November 14, 2024, OpenAI engineers accidentally deleted crucial search data from one of two virtual machines provided for the News Plaintiffs' use in examining OpenAI's training datasets. While some of the data was recovered, the loss of folder structures and file names rendered the remaining data unreliable for legal analysis.

Delayed Responses: Although the News Plaintiffs provided detailed search instructions and terms, OpenAI has not yet provided results or confirmed that it has started these searches. Furthermore, as of November 20, 2024, OpenAI has neither admitted nor denied using the News Plaintiffs' works in its training datasets.

Legal Consequences for OpenAI and Other AI Makers:

Copyright Infringement: If it is proven that copyrighted material was used without permission, OpenAI could face statutory damages for each work infringed, potentially amounting to large financial penalties.

Injunctions and Model Retraining: Courts might order OpenAI to stop using the disputed materials and possibly require retraining of their models without the infringing content, which could be costly and time-consuming.

Precedent Setting: The outcome could set legal precedents affecting how AI companies handle copyrighted material in training data, potentially leading to stricter regulations or licensing requirements for AI development.

How OpenAI Could or Should Have Prevented This:

Legal Permissions:

Licensing Agreements: Securing explicit licenses from copyright holders for use in AI training would have provided legal clarity and protection against infringement claims.

Data Management:

Robust Backup Systems: Implementing secure, regular backup systems could have prevented data loss, ensuring digital assets are safeguarded against accidental deletion.

Transparency and Cooperation:

Clear Communication: Being more forthcoming with search results and responding promptly to legal requests would demonstrate cooperation, potentially reducing adversarial interactions.

Ethical Data Sourcing:

Ethical Guidelines: OpenAI could have developed and adhered to guidelines for ethically sourcing training data, for example by using only public domain works or content explicitly licensed for AI training.

Going Forward:

Immediate Actions:

Comply with Court Orders: OpenAI should promptly execute the searches requested by the News Plaintiffs using the terms provided, or as directed by the court, to identify which copyrighted works were used.

Admit or Deny Usage: OpenAI needs to respond clearly to the requests for admission regarding the use of specific copyrighted materials in their models.

Long-term Strategies:

Enhance Data Governance: Implement stricter internal policies on data handling, including version control, secure storage, and recovery systems.

Legal Frameworks: Establish or contribute to the development of industry standards or frameworks for AI data usage that respect copyright laws while allowing for innovation.

Collaboration with Content Providers: Engage in partnerships or agreements with content providers to legally and ethically use copyrighted material, possibly through revenue-sharing models or direct licensing.

Public Domain and Creative Commons: Increase reliance on public domain works and Creative Commons licensed content for training data where feasible.

AI Ethics Board: Consider forming an advisory board including legal, ethical, and tech experts to oversee AI training data practices, ensuring they align with legal and ethical standards.

Communication and Transparency:

Regular Updates: Provide regular, transparent updates to stakeholders and the court regarding compliance efforts and data search results.

Policy Transparency: Publicly outline the company's approach to copyright adherence in AI development, enhancing trust and setting industry benchmarks.

By adopting these measures, OpenAI could mitigate current legal issues and establish a more sustainable and legally compliant approach to AI development.

ChatGPT-4o’s answer:

The letter from the News Plaintiffs to Magistrate Judge Ona T. Wang outlines ongoing issues related to OpenAI’s alleged use of copyrighted works in its training datasets and highlights procedural challenges in the discovery process of the lawsuits involving The New York Times and Daily News. Here's a summary, the potential legal consequences, and recommendations for OpenAI:

Summary of the Letter

Burden of Proof: The News Plaintiffs have spent significant resources searching for their copyrighted works in OpenAI’s training datasets, provided through a controlled "sandbox" environment. Despite technical upgrades, these efforts have been hindered by setbacks, including the accidental erasure of their work by OpenAI engineers.

Data Erasure: OpenAI erased data critical to the News Plaintiffs’ investigation. Although much of the data was recovered, the structure and reliability of the recovered data were compromised, necessitating a repeat of time-intensive work.

Search Requests and Lack of Action: The News Plaintiffs provided OpenAI with search terms and instructions to identify potential use of their works in training datasets. However, OpenAI has not confirmed starting these searches or provided results.

Refusal to Admit or Deny: OpenAI responded to requests for admission by refusing to confirm whether Plaintiffs' works were used in its training data, leaving critical questions unanswered.

Renewed Request: The News Plaintiffs reiterated their demand for the court to order OpenAI to identify and admit whether their works were used to train GPT models.

Potential Legal Consequences for OpenAI

Copyright Infringement Liability:

If it is proven that OpenAI used copyrighted works without permission, it may face significant liability for copyright infringement, potentially resulting in financial damages and injunctive relief.

The scale of liability could be amplified by the high volume of works involved and their use for commercial purposes.

Court-Imposed Sanctions:

The data erasure, although deemed unintentional, could lead to sanctions if the court perceives negligence in preserving evidence.

OpenAI's refusal to confirm whether copyrighted works were used might lead to adverse inferences by the court.

Reputational Damage:

Prolonged litigation and allegations of non-cooperation could harm OpenAI's public image and relationships with content creators and publishers.

Recommendations for OpenAI

Improve Cooperation:

OpenAI should proactively run the searches requested by the News Plaintiffs and provide timely updates and transparent results.

Data Handling Protocols:

Implement stricter protocols to ensure the preservation and integrity of data related to ongoing litigation. Assign dedicated teams to handle sensitive discovery tasks.

Voluntary Disclosure Framework:

Develop a system for identifying and disclosing whether specific copyrighted works were used in training datasets. This could mitigate legal risks and foster trust with rights holders.

Content Licensing Agreements:

Adopt clearer licensing policies for training data, ensuring compliance with copyright laws to avoid similar disputes in the future.

Court-Ordered Relief Negotiation:

OpenAI might negotiate a settlement that includes measures such as compensating for any proven copyright use and developing tools for rights holders to identify their works in datasets.

Ethics and AI Governance:

Establish robust internal policies and tools to prevent the use of unauthorized copyrighted material in future AI model training processes.

By adopting these measures, OpenAI can demonstrate good faith, potentially reduce legal exposure, and establish stronger relationships with content creators and the broader industry.

Perplexity’s answer:

The letter from the News Plaintiffs (The New York Times Company and Daily News, LP) to Judge Ona T. Wang outlines several issues in their ongoing legal case against OpenAI:

Data Loss: On November 14, 2024, OpenAI engineers accidentally erased programs and search result data stored on one of the dedicated virtual machines used by the News Plaintiffs. While much of the data was recovered, the folder structure and file names were irretrievably lost, rendering the recovered data unreliable for determining where the plaintiffs' copied articles were used in OpenAI's models.

Search Delays: The plaintiffs provided OpenAI with search terms and instructions for identifying their content in OpenAI's training datasets, but as of the letter's date, they had not received any results or confirmation that the searches had begun.

Admission Refusal: OpenAI refused to admit or deny whether the plaintiffs' works appear in their training datasets or were used to train their models.

Potential Legal Consequences

Sanctions: The court may impose sanctions on OpenAI for the data loss, especially if it's deemed negligent or in violation of discovery obligations.

Adverse Inference: The judge might allow an adverse inference against OpenAI, assuming that the lost data would have been unfavorable to their case.

Increased Scrutiny: This incident could lead to more stringent oversight of OpenAI's data handling practices during the legal proceedings.

Potential Liability: If it's proven that OpenAI used copyrighted material without permission, they could face significant financial penalties and be required to modify their AI models.

Prevention and Future Actions

OpenAI could have prevented this situation by:

Implementing robust data backup and recovery systems to prevent irretrievable loss of critical information.

Establishing clear protocols for handling sensitive data during legal proceedings.

Providing timely and transparent communication about search progress and results.

Going forward, OpenAI should:

Conduct a thorough investigation of the data loss incident and implement measures to prevent future occurrences.

Expedite the requested searches and provide regular updates to the plaintiffs and the court.

Consider being more transparent about their training data sources to avoid prolonged legal battles and potential reputational damage.

Develop clearer policies and technical solutions for identifying and properly licensing copyrighted material used in AI training.

This case highlights the growing legal challenges facing AI companies regarding copyright infringement and the importance of responsible data management practices in the AI industry.

Claude’s answer:

Based on the letter dated November 20, 2024, here's an analysis of the situation:

What Happened:

The New York Times and Daily News (News Plaintiffs) are involved in a lawsuit against Microsoft and OpenAI regarding the use of their copyrighted content in training AI models.

The News Plaintiffs have been searching through OpenAI's training datasets in a controlled environment called "the sandbox" to identify their copyrighted works.

Key incidents:

On November 14, OpenAI engineers accidentally erased all of the News Plaintiffs' programs and search result data from one of the dedicated virtual machines

While OpenAI recovered much of the data, the folder structure and file names were irretrievably lost

This made the recovered data unreliable for determining where the copied articles were used in OpenAI's models

The News Plaintiffs must now redo an entire week's worth of work

Additionally:

The News Plaintiffs provided OpenAI with search terms and instructions for two separate searches (URL-based and n-gram based) but haven't received results

OpenAI has responded that it will "neither admit nor deny" whether Plaintiffs' works appear in their training datasets
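To make the two search types mentioned above more concrete, here is a minimal sketch of how a URL-based match and an n-gram-based match could work in principle. The letter's actual search terms, n-gram length, and tooling are not described here, so every function name, parameter, and default below is an assumption made for illustration only.

```python
from urllib.parse import urlparse


def word_ngrams(text, n):
    """Return the set of n-word sequences in text (lowercased, whitespace-split)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def url_matches(doc_url, publisher_domains):
    """True if the document's host is one of the domains or a subdomain of one."""
    host = (urlparse(doc_url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in publisher_domains)


def ngram_overlap(article, doc, n=8):
    """Fraction of the article's n-grams that also occur in the document.

    n=8 is an arbitrary illustrative default, not a value taken from the letter.
    """
    article_grams = word_ngrams(article, n)
    if not article_grams:
        return 0.0
    return len(article_grams & word_ngrams(doc, n)) / len(article_grams)
```

A URL match only works when the dataset kept source addresses, whereas an n-gram match can flag copied text even when that metadata is missing. That difference is one plausible reason to ask for both kinds of search.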

Potential Legal Consequences:

For OpenAI and other AI companies:

Potential copyright infringement liability if found to have used copyrighted content without permission

Possible monetary damages and injunctive relief

Could be forced to retrain models without copyrighted content

May need to implement licensing agreements with publishers

Could set a precedent requiring AI companies to be more transparent about training data

Prevention Strategies OpenAI Should Have Implemented:

Data Management:

Implement robust backup systems

Use version control for all data and search results

Maintain detailed logs of all system changes

Have proper data recovery protocols
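As an illustration of the data management points above, the sketch below records a manifest of relative file paths and content hashes, which could in principle allow file names and folder structure to be rebuilt if only the file contents are recovered. It is a hypothetical example under simple assumptions (small files, unique contents) and does not describe how the sandbox was actually set up or managed.

```python
import hashlib
import json
from pathlib import Path


def build_manifest(root):
    """Map each file's path relative to root to the SHA-256 of its contents."""
    root = Path(root)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def save_manifest(root, manifest_path):
    """Write the manifest as JSON; keep it outside the directory it describes."""
    manifest = build_manifest(root)
    Path(manifest_path).write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest


def restore_names(recovered_dir, manifest):
    """Match recovered files (names lost) to original paths by content hash.

    Assumes file contents are unique; identical files would need extra handling.
    """
    by_hash = {digest: rel for rel, digest in manifest.items()}
    matches = {}
    for path in Path(recovered_dir).rglob("*"):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            if digest in by_hash:
                matches[path.name] = by_hash[digest]
    return matches
```

Storing such a manifest on a separate machine, and refreshing it on a schedule, is one simple way to keep the link between recovered content and its original location.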

Process Management:

Establish clear procedures for handling sensitive legal discovery data

Implement multiple approval levels for any changes to discovery environments

Create detailed documentation of all systems and processes

Regular training for engineers on handling legally sensitive data

Going Forward, OpenAI Should:

Immediate Actions:

Fully cooperate with the News Plaintiffs' search requests

Provide clear timelines for completing requested searches

Be more transparent about which works were used in training

Long-term Measures:

Develop better systems for tracking and documenting training data sources

Create clear protocols for handling copyright-protected content

Implement stronger data management and backup systems

Consider developing licensing frameworks with content creators

Establish better communication channels with stakeholders during legal proceedings

The situation highlights the broader challenges facing AI companies regarding transparency, data management, and copyright compliance. It also emphasizes the need for better systems and protocols when handling legally sensitive data during discovery proceedings.