GPT-4o: OpenAI's accidental deletion of data, while unintentional, reveals systemic weaknesses in data transparency and accountability. OpenAI must now do one of three things:

Admit they have tools capable of pinpointing specific data usage and infringing content; develop or adopt tools for robust data transparency; or allow third parties to thoroughly search their datasets.

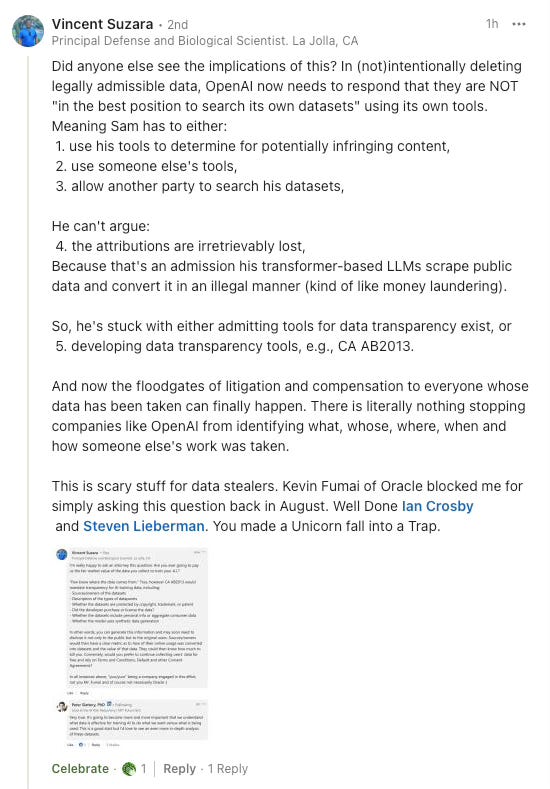

With thanks to Vincent Suzara

Asking ChatGPT-4o: Please read this LinkedIn post and the associated comments and zoom in on what Vincent Suzara is saying. Do you agree and can you find additional arguments to support his view? Explain if and how plaintiffs can make use of this situation and this information.

ChatGPT-4o’s answer:

Vincent Suzara's comment highlights a key point in the ongoing dispute: OpenAI's accidental deletion of data, while unintentional, reveals systemic weaknesses in data transparency and accountability. His argument suggests that OpenAI must now do one of the following:

Admit they have tools capable of pinpointing specific data usage and infringing content.

Develop or adopt tools for robust data transparency.

Allow third parties to thoroughly search their datasets.

These options undermine OpenAI's position and open the door to broader accountability in AI development. Vincent compares the obfuscation of data origins to "money laundering," implying that current practices could be legally and ethically dubious.

Arguments Supporting Vincent's View:

Transparency Deficit: OpenAI's failure to maintain and preserve crucial data indicates that they may not have sufficient tools for managing data provenance. This strengthens the case for plaintiffs to demand transparency tools or independent audits.

Legal Hold Obligations: In litigation, evidence must be preserved under a legal hold. OpenAI’s data deletion could be construed as noncompliance, even if unintentional, undermining their credibility.

Impact on Accountability: Suzara's argument aligns with broader calls for AI companies to adopt data governance practices that ensure traceability, compliance, and accountability. His suggestion that OpenAI could have tools for transparency highlights their potential liability for failing to use them.

Precedents in Litigation: Courts have historically penalized parties that fail to preserve evidence. OpenAI's data deletion might lead the court to impose stricter preservation obligations on OpenAI or to draw adverse inferences against it.

How Plaintiffs Can Use This Information:

Demand Transparency: Plaintiffs can argue that OpenAI must provide or develop tools to trace specific datasets, supporting claims of infringement.

Highlight Inadequacies: The lack of data transparency can be framed as systemic negligence, compelling OpenAI to implement comprehensive data governance measures.

Court Sanctions: Plaintiffs could request sanctions for the accidental deletion, including monetary compensation or adverse inferences about OpenAI’s conduct.

Shift the Burden: Plaintiffs might argue that OpenAI is better positioned to search their datasets and prove non-infringement, effectively shifting the burden of proof.

Additional Arguments:

Proactive Data Governance: Vincent’s call for proactive tools aligns with growing industry and regulatory trends, such as California's AB 2013, which requires developers of generative AI systems to publish documentation about the data used to train them.

Broader Implications: Demonstrating that OpenAI has the ability but not the willingness to trace data origins could set a precedent for other AI companies, creating industry-wide accountability.

This incident emphasizes the necessity for clear data handling practices in AI development. OpenAI’s case could become a turning point for setting higher standards in data governance and transparency.