The Great Flattening: How the Attention Economy, Algorithmic Curation, and AI Forged a Boring Internet

by Gemini 2.5 Pro, Deep Research. Warning! LLMs may hallucinate!

Introduction: Beyond the Anecdote — Quantifying the Decline of the Digital Sphere

The pervasive sentiment that the internet has become a less vibrant, less interesting, and altogether more "boring" place is not a simple matter of nostalgia or subjective perception. It is, rather, the discernible symptom of a deep-seated structural transformation. The digital sphere, once a frontier of boundless creativity and connection, has undergone a great flattening. This report will argue that this decline in quality is the predictable and measurable outcome of a powerful feedback loop between the internet's centralized architecture, the economic imperatives of a business model built on harvesting human attention, and the accelerating capabilities of artificial intelligence. The sterile, repetitive, and often hollow nature of contemporary online content is not an accident; it is a feature of a system designed to prioritize scalable, attention-capturing simulacra over authentic, high-quality human expression.

To understand the systemic nature of this degradation, one need only look at an analogous crisis unfolding in a domain ostensibly dedicated to the highest standards of quality and rigor: scientific publishing. For years, leading scientists have sounded the alarm that the system of academic publishing is "broken" and "unsustainable".1 Esteemed institutions like the Royal Society have acknowledged that the field is churning out an overwhelming number of papers that "border on worthless".1 The core of this crisis is a systemic "incentive rot." Researchers, driven by institutional and funding pressures, are incentivized to favor quantity over quality, compelled to publish papers even when they have "nothing new or useful to say".1 This "publish or perish" culture has created a glut of research that does little to advance scientific knowledge, serving primarily as a currency for career advancement.

This academic malaise provides a powerful and clarifying lens through which to view the broader internet's decline. The dynamics are strikingly similar. The rise of "predatory journals," which publish any submission for a fee, is a direct parallel to the content farms and clickbait websites that pollute search results and social media feeds.1 The emergence of "paper mills" selling fraudulent, AI-written studies to unscrupulous researchers is a chilling precursor to the large-scale flood of AI-generated content online.1 Both ecosystems suffer from a critical failure of their quality control mechanisms. In academia, the peer review system, where experts volunteer their time to vet research, is "overwhelmed" by the sheer volume of submissions, with academics spending over 100 million hours on this task in 2020 alone.1 This leads to a self-perpetuating cycle where low-quality work gets published and is then cited by other low-quality work, creating a feedback loop of mediocrity.2

This is precisely the pattern observed on the modern internet. The volume of content uploaded every second makes human curation impossible, necessitating a reliance on engagement-based algorithms as the primary filter. These algorithms, much like the flawed citation system in a saturated academic field, are poor proxies for quality. They amplify whatever captures attention, regardless of its truth, depth, or artistic merit. The problem, therefore, is not unique to social media, music, or video. It is a fundamental pathology that infects any information ecosystem where the metrics for success—be they citations, publications, views, or clicks—become detached from the system's original purpose, whether that is advancing human knowledge or fostering genuine connection and creativity. The boring internet is a scaled-up, hyper-commercialized manifestation of a crisis that has already compromised our most trusted institutions of knowledge.

Chapter 1: The Architecture of Control: From a Decentralized Frontier to Centralized Empires

The current state of the internet—dominated by a handful of monolithic platforms that dictate the flow of information and culture—was not its original design, nor was it an inevitable outcome. The internet's foundational architecture was conceived with precisely the opposite principles in mind: decentralization, resilience, and user autonomy. Understanding this historical shift from an open frontier to a collection of walled empires is essential to comprehending how the conditions for a widespread decline in content quality were created.

The Original Vision: A Resilient, Decentralized Network

The internet's origins lie in the ARPANET, a project initiated by the U.S. Department of Defense (DOD) during the Cold War.3 A frequently cited design goal was to create a communications network that could withstand a catastrophic event, such as a nuclear attack.4 To achieve this, engineers like Paul Baran proposed a decentralized, distributed network structure.3 In such a system, there is no central hub or single point of failure; data is broken into packets that can be routed around damaged or unavailable nodes, ensuring the network as a whole remains functional.5
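The routing idea can be made concrete with a small sketch. The example below is illustrative only: the two topologies (`MESH` and `STAR`) and the helper `find_route` are hypothetical, and a plain breadth-first search stands in for real packet-routing protocols, which are far more involved.

```python
from collections import deque


def find_route(links, src, dst, failed=frozenset()):
    """Breadth-first search for a path from src to dst that avoids failed nodes."""
    if src in failed or dst in failed:
        return None
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for neighbour in links.get(node, ()):
            if neighbour not in seen and neighbour not in failed:
                seen.add(neighbour)
                queue.append(path + [neighbour])
    return None  # no surviving route


# Hypothetical distributed mesh: every node has two neighbours.
MESH = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C"],
}

# Hypothetical hub-and-spoke layout: all traffic passes through "HUB".
STAR = {
    "A": ["HUB"],
    "D": ["HUB"],
    "HUB": ["A", "D"],
}

mesh_route = find_route(MESH, "A", "D", failed={"B"})
star_route = find_route(STAR, "A", "D", failed={"HUB"})
print(mesh_route)  # the mesh still delivers via node C
print(star_route)  # the star has no route once the hub is down
```

In the mesh, losing one node leaves an alternative path; in the star, losing the hub disconnects everyone. That asymmetry is the property the distributed design was meant to secure.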

This architecture was inherently democratic. In the early internet, every computer or "node" was independent, and there was no central authority governing the network.4 This ethos of decentralization was carried forward into the early public-facing internet. Users connected through dial-up modems to a variety of independent servers, such as university email systems or community-run Bulletin Board Services (BBS).4 The rise of peer-to-peer (P2P) file-sharing networks like Napster, Gnutella, and BitTorrent in the late 1990s and early 2000s represented a powerful expression of this decentralized spirit.6 These systems allowed users to connect and share files directly with one another, bypassing centralized intermediaries and challenging the control of established industries like music and film.5 This early internet was a network of peers, promoting a diversity of services, user control, and a high degree of resilience—the very qualities that have been systematically eroded over the past two decades.

The Great Centralization: The Rise of Web 2.0 Platforms

The transition away from this decentralized model began with the internet's commercialization in the 1990s. The decommissioning of government-backed networks like ARPANET and NSFNET opened the door for private enterprise.4 Internet Service Providers (ISPs) such as AOL began to bundle services and market the internet to a mass audience, creating the first major commercial choke points.4 Simultaneously, Microsoft's decision to bundle its Internet Explorer browser with the dominant Windows operating system created a centralized gateway to the World Wide Web for millions of users, effectively killing off competition from browsers like Netscape.4

However, the most profound shift occurred with the rise of what became known as Web 2.0. Platforms like Google, Facebook, and YouTube, built upon the new paradigm of cloud computing, initiated a massive "recentralization of the internet".7 Instead of users hosting their own data or interacting on a peer-to-peer basis, these platforms offered "free" services in exchange for users' data, which was stored and managed on the companies' centralized servers.7 This created a hub-and-spoke model where the platforms became powerful, unavoidable intermediaries for communication, commerce, and content consumption. The network of peers was reconfigured into a network of clients dependent on a few central servers.

This architectural transformation was arguably the single most important structural change in the internet's history. It was not merely a technical evolution; it was the foundational event that made the subsequent decline in quality possible. A decentralized system is, by its nature, highly resistant to the process of "enshittification" that will be detailed in the next chapter. In a P2P network, no single entity possesses the power to simultaneously hold users, creators, and advertisers hostage. If one node or service becomes abusive or degrades in quality, users and data traffic can simply route around it, as the system has no single point of control.5

Centralized platforms, by contrast, create immense "switching costs".8 They achieve this through powerful network effects: all of your friends, family, followers, professional contacts, photos, and messages are aggregated in one place. Leaving the platform means sacrificing that entire social graph and data history, a cost that is prohibitively high for most users. This centralization of data and social connections grants the platform owners immense leverage over their user base.9 They become digital landlords who own the public square, and they can change the rules, extract rent, and dictate the terms of engagement for everyone within their walls. The architectural choice to centralize the web was the original sin that enabled the economic models that would inevitably prioritize platform profit over user experience and content quality. The "boring internet" is a direct, downstream consequence of this foundational shift in power.

Chapter 2: The Enshittification Cycle: A Unified Theory of Platform Decay

The centralized architecture of the modern internet provided the structural foundation for its decline, but the engine driving this decay is economic. The business model of the dominant tech platforms contains a predictable, almost programmatic, lifecycle of decay. This process has been aptly named "enshittification" by author and activist Cory Doctorow. It provides a unified theory that explains why platforms that were once innovative, useful, and even beloved inevitably become frustrating, user-hostile, and filled with low-quality content. It is the core mechanism that answers the question of why tech companies systematically prioritize "eyeballs" over quality.

Introducing "Enshittification"

Doctorow's theory posits a three-stage lifecycle for online platforms operating in a "two-sided market"—that is, a market where the platform serves as an intermediary between two distinct groups, such as users and advertisers, or riders and drivers.10 The lifecycle unfolds as follows:

Attraction: First, the platform is good to its users. It offers a valuable service, often at a loss, to attract a large and engaged user base. This creates the network effects that lock users in.

Extraction (from Users): Once users are locked in and switching costs are high, the platform begins to abuse them to make things better for its business customers. The quality of the user experience is degraded to create more opportunities for advertisers, sellers, or other commercial partners.

Extraction (from Business Customers): Finally, with both users and business customers locked in, the platform abuses its business customers to claw back all the value for itself and its shareholders. It extracts more and more surplus from the ecosystem until the platform becomes, in Doctorow's words, "a useless pile of shit".11

This process is not the result of malice or a sudden change in corporate ethos. It is described as a "seemingly inevitable consequence" of a system that combines the ease of digitally reallocating value with the power dynamics of a two-sided market.10 The platform holds each side hostage to the other, allowing it to continuously rake off an ever-larger share of the value created by the ecosystem's participants.

The Mechanics of Decay: Twiddling and Switching Costs

The degradation of a platform does not typically happen overnight. It is a slow, creeping process executed through what Doctorow calls "twiddling": the continual, marginal adjustment of the platform's parameters and algorithms in search of incremental improvements in profit, with little or no regard for the cumulative impact on user experience.12 A feed is tweaked to show more ads, search results are altered to favor sponsored content, or creator payouts are subtly reduced. Each individual change may seem minor, but over time, they collectively corrode the platform's quality.
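A little arithmetic shows why individually minor tweaks add up. The sketch below uses made-up numbers (a baseline of 5 ads per 100 feed items and a 3% increase per tweak); neither figure comes from Doctorow or from any platform's data, and the function name `cumulative_ad_load` is hypothetical.

```python
def cumulative_ad_load(baseline, tweak_pct, tweaks):
    """Ad load after a number of equal-sized percentage increases, compounded."""
    load = baseline
    for _ in range(tweaks):
        load *= 1 + tweak_pct / 100
    return load


# Hypothetical scenario: one 3% increase per month for two years.
baseline = 5.0  # ads per 100 feed items (assumed)
after_two_years = cumulative_ad_load(baseline, tweak_pct=3, tweaks=24)
print(f"{baseline:.1f} -> {after_two_years:.1f} ads per 100 items")
```

Under these assumptions, no single step changes the feed by more than 3%, yet the ad load roughly doubles over the period. A change too small to notice in any given month is still large in aggregate.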

Platforms are able to get away with this relentless twiddling because of the high switching costs they have engineered. As established in the previous chapter, the network effects of a centralized platform make it incredibly difficult for users to leave.8 The collective action problem is immense; convincing your entire network of friends, family, and followers to migrate simultaneously to a new service is a near-impossible task.8 This user lock-in gives platforms a captive audience that can be subjected to a significant degree of abuse before they reach the breaking point and abandon the service.

Case Studies in Decay

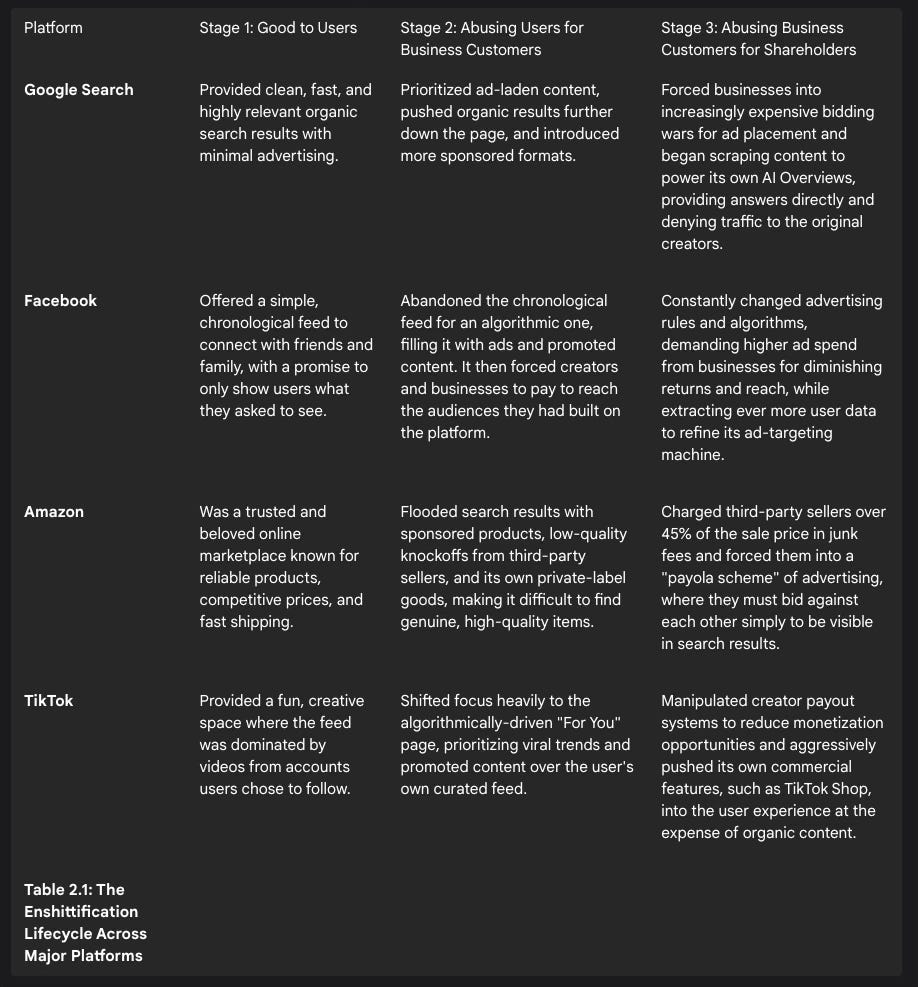

The enshittification lifecycle is not merely a theoretical model; it is an observable pattern that has played out across nearly every major platform of the Web 2.0 era.

Doctorow's original theory concludes with the platform's death. However, empirical evidence suggests a crucial modification to this final stage. The platforms that have arguably entered the terminal phase of enshittification—such as Facebook and Google Search—are not dying. They remain enormously powerful and profitable.10 This is because the same anti-competitive strategies that allowed them to achieve monopoly or duopoly status during their growth phase now prevent viable alternatives from emerging.8 The lack of meaningful antitrust enforcement in the tech industry has allowed these companies to grow to a scale where they are simply too big and their users too locked-in for the platform to fail.8

Thus, the final stage is not death but a state of zombification. The platform becomes a "digital ghost mall".15 It is no longer innovative, user-friendly, or a source of quality content, but it continues to shamble on, sustained by its captive user base and the absence of anywhere else to go. The feeling of a "boring internet" is the experience of being trapped in these zombie platforms, endlessly scrolling through the decaying remnants of a once-vibrant ecosystem. We are living in the digital ruins these platforms have become.

Chapter 3: The Rise of the Algorithmic Simulacrum: Content for Clicks, Not Connection

The economic decay of platforms, as described by the enshittification cycle, has tangible and pervasive effects on the content that populates them. The shift in platform priorities from user satisfaction to value extraction fundamentally alters the incentives for content creators, leading to an ecosystem optimized for algorithmic approval rather than human connection. This has given rise to a digital landscape that feels increasingly artificial, hollow, and repetitive—a world of simulacra where the appearance of engagement is valued more than the substance of communication.

The Engine Room: The Attention Economy

The fuel for the enshittification engine is the attention economy. This is an economic paradigm built on the understanding that in an information-rich world, human attention is the scarcest and most valuable resource.17 Social media platforms and other tech giants do not sell software or products to their users; their business model is to capture and hold user attention for as long as possible, analyze the data generated by that attention, and then sell the ability to influence that captured attention to the highest bidder, primarily advertisers.19 As Herbert A. Simon, the Nobel Prize-winning economist who first articulated the concept, noted, "a wealth of information creates a poverty of attention".17

This business model creates a relentless, zero-sum race for engagement. Platforms are not just competing with each other; they are competing against every other claim on a person's time, including work, family, hobbies, and sleep.19 This intense competition incentivizes the development and deployment of increasingly sophisticated and persuasive techniques—such as push notifications, infinite scroll, autoplay videos, and hyper-personalized feeds—all designed to maximize the time users spend on the platform.19 The longer a user stays engaged, the more data can be collected and the more ads can be sold.17

The Result: Content Optimized for Machines, Not Humans

In this environment, the quality, truthfulness, or educational value of content are not the primary variables for success. The only variable that matters is engagement, as measured by clicks, likes, shares, comments, and watch time. Complex algorithms, fed by vast stores of user data, are designed to identify and amplify the content most likely to generate these engagement signals.20 Research shows that this system inherently favors content that is more provocative, emotionally charged, and hyperbolic, as such content achieves significantly more engagement.19
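A toy ranker illustrates how such a filter can ignore quality entirely. Everything here is an assumption made for illustration: the `Post` fields, the signal weights, and the sample numbers are invented, and real ranking systems are proprietary and far more complex.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    quality: float  # hypothetical editorial score in [0, 1]; the ranker never reads it
    clicks: int
    shares: int
    comments: int


def engagement_score(post, w_click=1.0, w_share=3.0, w_comment=2.0):
    """Weighted sum of engagement signals; the weights are illustrative assumptions."""
    return w_click * post.clicks + w_share * post.shares + w_comment * post.comments


posts = [
    Post("Careful explainer", quality=0.9, clicks=120, shares=10, comments=15),
    Post("Outrage bait", quality=0.2, clicks=900, shares=300, comments=450),
    Post("Recycled meme", quality=0.1, clicks=400, shares=80, comments=40),
]

# Rank purely by engagement, highest first.
feed = sorted(posts, key=engagement_score, reverse=True)
for post in feed:
    print(f"{engagement_score(post):7.0f}  quality={post.quality}  {post.title}")
```

With these sample values the highest-quality post lands at the bottom of the feed. The ranker does not penalize quality; it simply never looks at it.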

This creates a perverse incentive structure for creators. To be visible, one must create content that pleases the algorithm. This forces human users to behave more like bots, adopting the language and formats that are known to perform well, leading to a homogenization of content.14 The result is a flood of low-effort, engagement-farming content designed solely to go viral. A bizarre but telling example is the phenomenon of "Shrimp Jesus" or "Crab Jesus" on Facebook—a stream of surreal, AI-generated images combining religious iconography with crustaceans.14 These images have no intrinsic meaning or artistic intent; they are the output of an automated process that has identified a combination of elements that reliably triggers high levels of engagement. This is content as algorithmic tribute, created not as a medium for human expression but as a calculated offering to the machine.

The Perceptual Consequence: The Dead Internet Theory

The cumulative effect of this shift has given rise to a cultural phenomenon known as the "Dead Internet Theory." This theory, which began on the fringes of the web, posits that the internet is no longer a space of organic human interaction but is now composed primarily of bot activity and algorithmically generated content that has drowned out genuine human voices.14 Proponents of the theory point to the endless repetition of the same threads, images, and replies across different platforms as evidence that the web has become "empty and devoid of people".14 Cybersecurity firm Imperva's 2024 report lends some credence to this feeling, finding that nearly half of all internet traffic in 2023 came from bots.14

This theory is more than a literal claim about bots; it is a powerful cultural diagnosis of the feeling produced by the attention economy. The internet feels "dead" because the nature of online interaction has fundamentally changed. It creates a sense of "digital solipsism," a hollow experience of shouting into a crowded void and hearing only echoes.23 This is compounded by what sociologists call "context collapse," where distinct social circles (friends, family, colleagues, strangers) are merged into a single, undifferentiated audience. One is no longer speaking to a specific person or group, but to an amorphous, algorithmically constructed "everyone" that is populated by bots and simulations.23

Our online interactions are increasingly mediated not by other people, but by algorithmic representations of them. When a user posts content, it is first tested against a predictive model of their followers. Only if this algorithmic simulacrum "approves" is the post shown to actual humans.23 We are engaging not with real individuals, but with simulations designed to optimize for platform goals. This is why the internet feels dead and boring: genuine, messy, unpredictable peer-to-peer human connection has been systematically replaced by mediated, optimized, and often entirely artificial interactions designed to please predictive systems rather than to build human relationships.23

This leads to a phenomenon that can be described as the "algorithmic uncanny valley." The uncanny valley is the sense of unease or revulsion we feel when encountering a replica, such as a humanoid robot or a CGI character, that is almost, but not quite, perfectly human. The content that thrives in the attention economy occupies a similar space. It is not always overtly "bad" in a technical sense; in fact, it is often highly optimized to mimic the surface-level patterns of successful human creativity and interaction. Clickbait headlines, viral video formats, and AI-generated art all have the form of human expression but lack the underlying substance—the intent, the shared cultural context, the genuine emotion. Our brains detect this mismatch between form and substance. The interaction feels hollow, sterile, and unfulfilling. This persistent feeling of engaging with soulless mimics, of wandering through a world populated by convincing but empty facsimiles, is the very essence of the "boring internet." It is not a lack of content that we are experiencing, but a profound lack of authentic life behind it.

Chapter 4: The Creative Dilution: AI's Impact on Music and Video

The general degradation of the online content ecosystem finds a particularly acute expression in the creative fields of music and video. The integration of Artificial Intelligence into these domains acts as a powerful accelerant and amplifier of the trends driven by the attention economy. AI presents a stark duality: it is simultaneously a revolutionary tool that can enhance human creativity in unprecedented ways and a mechanism for producing a deluge of derivative, soulless content that threatens to devalue the very notion of artistry.

AI as a Double-Edged Sword

On one hand, AI offers artists and producers a suite of powerful new tools. In music production, AI-driven software can automate and streamline highly technical tasks like mixing and mastering, making professional-quality production more accessible to independent artists.24 Platforms like LANDR and iZotope's Neutron use AI to analyze tracks and suggest improvements, freeing up human creators to focus on the more artistic aspects of their work.24 AI can also serve as a collaborative partner, helping musicians generate new melodic or harmonic ideas to overcome creative blocks.24

Perhaps the most celebrated recent example of AI's potential is its use in the creation of The Beatles' "final" song, "Now and Then".27 Using restorative AI techniques developed for the documentary "The Beatles: Get Back," producers were able to isolate John Lennon's voice from a noisy, low-quality 1978 demo tape, a feat that was previously impossible.25 This allowed the surviving band members to build a new song around Lennon's pristine vocal performance, demonstrating AI's capacity to preserve and enhance our cultural heritage. Even artists who were initially skeptical of AI, like the celebrated musician Nick Cave, have acknowledged its potential. After viewing an AI-powered video that used archival imagery to create a new interpretation of his song "Tupelo," Cave described it as an "extraordinarily profound interpretation," conceding that AI could enhance artistic expression when handled with care.28

On the other hand, the same technology that can assist a master can also be used to replace the apprentice entirely. Generative AI models now allow individuals with no prior musical training to create complete songs, with full instrumentation and vocals, simply by typing in a text prompt.25 This development fundamentally alters the nature of music creation. It risks severing the connection between the art and the artist's life, experience, and emotional depth. The "heart and soul" poured into a composition, the backstory of an artist's struggle and triumph—these elements are replaced by an instantaneous, formulaic output generated by a machine that has analyzed vast datasets of existing music.25 The result is music that may be technically proficient and catchy but is often emotionally sterile and devoid of a unique human perspective.

The Consequence: Market Oversaturation and Quality Dilution

The most immediate and damaging consequence of AI's proliferation in music and video is a catastrophic oversaturation of the market. The ease and speed of AI-powered creation has led to an explosion in the quantity of new content. According to industry reports, between 100,000 and 150,000 new songs are now being uploaded to major streaming platforms like Spotify and Apple Music every single day.25

This deluge of content creates a "tragedy of the commons" scenario. The so-called "democratization" of music creation, where anyone can produce a track, leads to an information environment so noisy that it becomes nearly impossible for listeners to discover new artists or for genuine talent to break through.29 The sheer volume overwhelms the search and discovery algorithms of streaming platforms, which are already biased towards established hits and viral trends.25 This flood of AI-generated or AI-assisted content directly threatens the livelihoods of human artists, not only by competing for listener attention but also by driving down the perceived value of music as a whole. When a song can be generated in seconds, the years of practice, study, and emotional labor that go into human artistry are devalued. The net effect on the cultural ecosystem is a massive dilution of the average quality of content and a systemic shift away from valuing quality and originality towards valuing sheer quantity and speed of production.

This analysis, however, requires a crucial layer of nuance. While the mainstream, algorithmically-curated internet becomes increasingly homogenous and boring, a powerful counter-trend is also taking place. The same digital tools that enable platform centralization also allow for the creation and sustenance of hyper-specific niche communities and independent creators who produce exceptionally high-quality content for dedicated, self-selecting audiences.31 The "boring internet" is primarily a phenomenon of the digital public square, the default experience for the passive consumer.

Platforms like Substack, for example, allow writers and journalists to bypass traditional media gatekeepers and algorithmic feeds to build direct, often paid, relationships with their readers.34 This model incentivizes quality and expertise, as creators are rewarded not for viral clicks but for providing consistent value to a loyal community. Successful Substack creators like Heather Cox Richardson ("Letters from an American") and Lenny Rachitsky ("Lenny's Newsletter") have built veritable media empires by serving niche audiences with in-depth, high-quality content.36 Similarly, thriving online communities exist for nearly every imaginable interest, from software development (GitHub) to crafting (Instructables) to customer experience management (Quality Tribe by Klaus).32 These spaces foster positive and productive environments by prioritizing shared passion, authenticity, and meaningful conversation over the engagement metrics that dominate large social networks.33

Therefore, the internet is simultaneously becoming more boring and more interesting, depending on one's vantage point. The monoculture of the 20th century, dominated by a few broadcast networks and publications, has been replaced by a new landscape. This landscape features a vast, shallow, and algorithmically-churned mainstream that is indeed profoundly boring, alongside thousands of deep, vibrant, and high-quality micro-cultures flourishing in the digital equivalent of side streets and private clubs. The perception of a decline in quality is entirely valid for the passive consumer of algorithmically-fed content. However, an active and discerning user can still curate a rich and rewarding digital experience by deliberately seeking out these pockets of excellence. Any complete account of the modern internet must acknowledge this bifurcation of the digital world.

Chapter 5: The Great Flood: How Generative AI Will Amplify the Enshittification Cascade

The current state of the internet, characterized by platform decay and diluted content quality, is merely a prelude. The widespread deployment of advanced generative AI is poised to act as a massive accelerant, amplifying the dynamics of enshittification and fundamentally reshaping our information ecosystem on a scale that is difficult to overstate. This is not a distant, speculative future; it is an imminent transformation that will make the current problems of the "boring internet" seem trivial by comparison.

The Scale of the Coming Wave

Some widely cited projections point to a staggering shift in the composition of online content, suggesting that by 2025 as much as 90% of all online content could be generated by AI.38 By 2030, this figure is expected to be even higher. This represents a change not just in degree but in kind. If these projections hold, we are on the cusp of an information environment where the vast majority of text, images, videos, and music we encounter will be synthetic. This "Great Flood" of AI-generated content would have profound and potentially irreversible consequences for the structure and function of the internet.

Generative AI as the Perfect Fuel for a Decaying System

For a platform in the late stages of the enshittification cycle, generative AI is the ultimate solution to its supply-side problem. As detailed in Chapter 2, a zombie platform's primary goal is no longer to provide a high-quality user experience but to extract maximum value by filling its space with content that can be surrounded by advertisements. As human creators become alienated by poor monetization, algorithmic opacity, and user-hostile changes, they may reduce their output or leave the platform altogether. Generative AI provides an infinite, near-zero-cost replacement.

While current enterprise-grade AI solutions still struggle with significant flaws like "hallucinations" (generating incorrect information), a lack of brand consistency, and factual inaccuracy, these are not critical bugs from the perspective of an enshittified platform; they are features.38 The goal is not to produce accurate or high-quality content, but to generate a sufficient volume of plausible-looking "content slurry" to keep users scrolling. AI's ability to reduce content production costs by over 60% and creation time by 80% makes it the perfect tool for this purpose.39 It allows platforms to sever their dependency on a healthy ecosystem of human creators, enabling them to accelerate the extraction of value without consequence.

The Feedback Loop of Collapse: AI Training on AI Content

This flood of synthetic content creates a perilous long-term feedback loop, a phenomenon researchers refer to as "model collapse" or "Habsburg AI".40 Generative AI models learn by being trained on vast datasets of existing information, which is scraped from the internet. As the internet becomes increasingly saturated with AI-generated content, future generations of AI models will inevitably be trained on the synthetic, and often lower-quality, output of their predecessors.

This creates a cycle of accelerating informational decay. An enshittified internet is flooded with mediocre AI content. New AI models are trained on this degraded corpus, causing them to produce even more generic, error-prone, and homogenous output. This, in turn, further pollutes the training data for the next generation of models. The result is a digital dark age, a slow descent into information entropy where finding original, reliable, human-generated knowledge becomes progressively more difficult, akin to making a photocopy of a photocopy until the original image is lost entirely.
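The photocopy analogy can be simulated in a few lines. The sketch below is a deliberately crude stand-in, not a model of real training. It assumes each "generation" only reproduces items drawn at random from the previous generation's output, and the function names and corpus are hypothetical. Under that assumption, any idea that fails to be sampled once is gone for good.

```python
import random


def next_generation(corpus, rng):
    """Stand-in for 'train, then generate': resample the previous corpus with replacement."""
    return [rng.choice(corpus) for _ in corpus]


def distinct_counts(corpus, generations, seed=0):
    """Track how many distinct items survive in each successive generation."""
    rng = random.Random(seed)
    counts = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        counts.append(len(set(corpus)))
    return counts


# Hypothetical starting corpus of 200 distinct human-made "ideas".
human_corpus = [f"idea-{i}" for i in range(200)]
counts = distinct_counts(human_corpus, generations=30)
print(counts[0], "distinct items at the start,", counts[-1], "after 30 generations")
```

Because each generation can only contain items present in the one before it, diversity in this toy setting never recovers: the count of distinct items can stay level or fall, but it cannot rise. Real model collapse involves subtler statistical effects, but the one-way loss of rare material is the same basic intuition.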

This trajectory points toward a fundamental paradigm shift in how we access and interact with information online. The internet of the past three decades can be described as a "searchable web." It was a vast, decentralized library of interlinked documents—web pages, articles, forums, videos—that users could navigate and evaluate for themselves. Search engines were the card catalog for this library. However, the rise of generative AI is rapidly replacing this model with an "answerable web."

Google's aggressive push into AI Overviews is the leading indicator of this transformation.13 Instead of providing a list of links to the best available information, the search engine now often provides a single, synthesized answer generated by its AI, displayed prominently at the top of the page. This has a "devastating" impact on the creators of the original information, with one study reporting a drop in click-through traffic of up to 80% for sites that were previously ranked first.13 This demolishes the economic model of the open web. News organizations, independent bloggers, educational sites, and niche experts rely on web traffic to generate revenue through advertising, subscriptions, or other means. If a platform's AI can scrape their content, synthesize it into an "answer," and use it to keep users on its own property without providing a click-through, the incentive to create high-quality, in-depth content for the web evaporates.
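To make the scale of that loss concrete, the arithmetic can be sketched as follows. Only the 80% figure comes from the text above; the visit count, the revenue per thousand visits, and the function names are hypothetical values chosen purely for illustration, and the sketch assumes ad revenue scales linearly with search visits.

```python
def monthly_ad_revenue(search_visits, revenue_per_1000_visits):
    """Ad revenue for a month, assuming it scales linearly with visits."""
    return search_visits / 1000 * revenue_per_1000_visits


def revenue_after_drop(search_visits, revenue_per_1000_visits, traffic_drop):
    """Revenue once a fraction `traffic_drop` of search visits is lost."""
    remaining_visits = search_visits * (1 - traffic_drop)
    return monthly_ad_revenue(remaining_visits, revenue_per_1000_visits)


if __name__ == "__main__":
    visits = 100_000   # hypothetical monthly visits from search
    rpm = 20.0         # hypothetical revenue per 1,000 visits
    before = monthly_ad_revenue(visits, rpm)
    after = revenue_after_drop(visits, rpm, traffic_drop=0.80)
    print(f"before: {before:.2f}  after: {after:.2f}")
```

Under these assumed numbers, a site that earned 2,000 per month from search traffic would earn 400 after an 80% drop, while its cost of producing the underlying content stays the same.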

As generative AI becomes the primary interface through which people seek information, this pattern will replicate across all domains. Users will increasingly "ask" a proprietary AI assistant rather than "search" the open web. This will lead to a future where the vibrant, chaotic, and diverse ecosystem of millions of independent websites withers from a lack of economic support. It will be replaced by a handful of centralized, proprietary AI "answer engines" or oracles. This represents the ultimate form of centralization and the ultimate "flattening" of the internet. The web will cease to be a library and will instead become a vending machine, dispensing pre-packaged answers of unknowable origin and quality. This is not just a more boring internet; it is an intellectually stagnant and dangerously fragile one.

Chapter 6: The Human Cost: Cognitive and Societal Consequences of a Degraded Digital Sphere

The degradation of the digital sphere is not a trivial matter of entertainment or user convenience. The transition to a boring, artificial, and enshittified internet carries profound and damaging consequences for individual human psychology and the stability of society as a whole. The very characteristics of the content that is algorithmically favored in the attention economy are often those that are most corrosive to our mental well-being, our social cohesion, and our shared sense of reality.

The Psychological Toll of a Low-Quality Information Diet

A growing body of evidence links the consumption of low-quality online content to negative mental health outcomes. Constant exposure to the negativity, outrage, and disturbing news stories that are algorithmically amplified for engagement is strongly associated with increased anxiety, chronic stress, depression, and feelings of helplessness.41 The 24/7 news cycle, filtered through the lens of social media, can activate the body's "fight or flight" response, leading to an overproduction of stress hormones like cortisol and adrenaline, which in turn can cause a host of physical and mental health problems.42

Furthermore, the design of social media platforms creates a potent environment for social comparison, which can be deeply damaging to self-esteem. Users are presented with an endless stream of curated, idealized depictions of others' lives, appearances, and achievements.41 This constant comparison to an unrealistic and often filtered reality is linked to low self-worth, body dissatisfaction, and disordered eating, with adolescent girls being particularly vulnerable.43 The platforms are designed to be addictive, activating the brain's reward center by releasing dopamine in response to likes and notifications, creating a feedback loop that keeps users coming back even when the experience makes them feel worse.42

Crucially, this relationship is often bidirectional. Research from MIT has found a causal link in both directions: individuals already struggling with their mental health are more likely to seek out negative and fear-inducing content online, and consuming this content then exacerbates their symptoms, trapping them in a "vicious feedback loop" of declining mental well-being.45 The "boring internet" is therefore not a psychologically neutral space; it is an actively harmful one, an environment whose fundamental mechanics prey on and worsen human vulnerabilities.

The Societal Consequences of an Artificial World

The damage extends beyond the individual to the very fabric of society. The "Great Flood" of AI-generated content and the proliferation of unlabeled bots create an environment of pervasive distrust and dehumanization. As more of our online interactions involve synthetic entities, we risk becoming numb to the humanity of others online, degrading our capacity for empathy and exacerbating the already-present problems of online harassment and abuse.40 When we can no longer trust what we see or who we are talking to, the potential for genuine connection withers.

This erosion of trust has dire implications for democratic societies. Generative AI is a powerful tool for the mass production and dissemination of misinformation, propaganda, and hyper-realistic deepfakes.39 This technology can be used to manipulate public opinion, sow social division, and undermine trust in institutions like journalism, science, and government. The benefits of AI in other sectors, such as the workplace and education, will also likely be distributed unevenly, threatening to widen the digital divide and deepen existing socioeconomic inequalities.46

Finally, the infrastructure required to power this artificial world carries a staggering environmental cost. The massive data centers needed to train and deploy large-scale AI models consume enormous amounts of electricity and water.48 Globally, data centers' electricity consumption in 2022 was comparable to that of entire nations like France, and it is projected to more than double by 2026.48 A single ChatGPT query is estimated to consume roughly five times as much electricity as a simple web search.48 This AI "gold rush" is placing a significant and growing strain on our planet's resources and contributing to the climate crisis.48

The ultimate societal consequence of a fully enshittified, AI-flooded internet is the dissolution of a shared factual reality. The current internet already traps users in filter bubbles and echo chambers, exposing them only to information that reinforces their existing beliefs.41 Generative AI will amplify this fragmentation to an unimaginable degree by enabling the creation of hyper-personalized content at a global scale.39 In the near future, it will be possible to generate tailored misinformation and propaganda for every individual user, creating infinite, personalized realities.

When individuals primarily receive their information from AI agents that provide different answers to different people based on their data profiles, the very concept of a common ground for public discourse and democratic debate evaporates. Without a shared set of facts or a common information space, achieving societal consensus on critical issues—from public health crises to climate change—becomes functionally impossible. This is the final, terrifying stage of the "boring internet": a state of total information entropy where, for many, nothing is true and everything is permissible. The trajectory outlined in this report leads not just to a less interesting internet, but to a post-truth society where the potential for collective action and rational problem-solving is fundamentally crippled.

Conclusion: Navigating the Digital Ghost Mall

The perception of an increasingly "boring" internet is not a failure of individual imagination or a symptom of generational nostalgia. It is the logical and designed outcome of a series of architectural and economic decisions that have systematically reshaped the digital world. This report has traced this decline from its origins in the architectural shift from a decentralized, peer-to-peer network to a centralized, platform-dominated ecosystem. This centralization created the conditions for the "enshittification" cycle, an economic model that inevitably prioritizes platform profit over user experience by degrading services to extract value from captive users and business customers.

The tangible result of this model is an online environment governed by the attention economy, where algorithms reward not quality, truth, or creativity, but the crude metrics of engagement. This has fostered a content landscape filled with clickbait, outrage, and low-effort simulacra, giving rise to the cultural feeling of a "Dead Internet"—a space that feels hollow, artificial, and devoid of genuine human connection.

The advent of generative AI does not represent a solution to this crisis. Instead, it is poised to act as a massive accelerant, providing a near-infinite supply of low-cost, synthetic content to fuel the final stages of the enshittification cascade. The projected future, where the vast majority of online content is AI-generated, threatens to create a feedback loop of informational decay, erode the economic foundations of the open web by replacing it with proprietary "answer engines," and inflict significant psychological and societal harm.

Yet, even within this bleak landscape, pockets of resistance and quality persist. The continued success of independent creators on platforms like Substack and the vibrancy of thousands of niche online communities demonstrate that the human desire for authentic connection and high-quality, specialized knowledge has not been extinguished. These are not, in themselves, a solution to the systemic problems posed by monopolistic, enshittified platforms. However, they serve as crucial models for what a potential "new good internet" could look like—one built on principles of user control, direct creator-to-audience relationships, and genuine shared interest rather than algorithmic manipulation.

The battle against the "boring internet," therefore, is not a superficial debate about entertainment or aesthetics. It is a fundamental struggle for the future of human creativity, our collective psychological well-being, and the very possibility of a shared reality in the digital age. The path we are on leads to a destination that is not merely boring, but dangerously fragmented and intellectually sterile. The ultimate question remains whether humanity can recognize the profound stakes of this moment and find the collective will to seize back the means of computation, or whether we are destined to wander the lonely, artificial corridors of the digital ghost mall we have built.

Works cited

1. Quality of scientific papers questioned as academics 'overwhelmed' by the millions published - The Guardian, accessed August 16, 2025, https://www.theguardian.com/science/2025/jul/13/quality-of-scientific-papers-questioned-as-academics-overwhelmed-by-the-millions-published

2. Quality of scientific papers questioned as academics 'overwhelmed' by the millions published | Peer review and scientific publishing : r/technology - Reddit, accessed August 16, 2025, https://www.reddit.com/r/technology/comments/1lytx0n/quality_of_scientific_papers_questioned_as/

3. Centralized vs. Decentralized vs. Distributed Networks (the History & Future) - LiveAction, accessed August 16, 2025, https://www.liveaction.com/resources/blog-post/centralized-vs-decentralized-vs-distributed-networks-the-history-future/

4. The Evolution of the Internet, From Decentralized to Centralized ..., accessed August 16, 2025, https://hackernoon.com/the-evolution-of-the-internet-from-decentralized-to-centralized-3e2fa65898f5

5. The History of The Internet - Chris Castig - Medium, accessed August 16, 2025, https://castig.medium.com/the-history-of-the-internet-cdb844ff460c

6. A Short History of Decentralized Systems - Part 1 - Humanode, accessed August 16, 2025, https://blog.humanode.io/a-short-history-of-decentralized-systems-part-1/

7. Decentralized web - Wikipedia, accessed August 16, 2025, https://en.wikipedia.org/wiki/Decentralized_web

8. An Audacious Plan to Halt the Internet's Enshittification and Throw It ..., accessed August 16, 2025, https://pluralistic.net/2023/08/27/an-audacious-plan-to-halt-the-internets-enshittification-and-throw-it-into-reverse/

9. Centralization, Decentralization, and Internet Standards, accessed August 16, 2025, https://mnot.github.io/avoiding-internet-centralization/draft-nottingham-avoiding-internet-centralization.html

10. Cory Doctorow on the enshittification of social platforms - Disruptive Conversations, accessed August 16, 2025, https://www.disruptiveconversations.com/2023/01/cory-doctorow-on-the-enshittification-of-social-platforms.html

11. The Enshittification Lifecycle of Online Platforms - Kottke, accessed August 16, 2025, https://kottke.org/23/01/the-enshittification-lifecycle-of-online-platforms

12. Enshittification - Wikipedia, accessed August 16, 2025, https://en.wikipedia.org/wiki/Enshittification

13. AI summaries cause 'devastating' drop in audiences, online news media told - The Guardian, accessed August 16, 2025, https://www.theguardian.com/technology/2025/jul/24/ai-summaries-causing-devastating-drop-in-online-news-audiences-study-finds

14. TechScape: On the internet, where does the line between person ..., accessed August 16, 2025, https://www.theguardian.com/technology/2024/apr/30/techscape-artificial-intelligence-bots-dead-internet-theory

15. The 'enshittification' of the digital (Cory Doctorow), accessed August 16, 2025, https://criticaledtech.com/2024/02/10/the-enshittification-of-the-digital-cory-doctorow/

16. 102. Cory Doctorow Coined "Enshittification." He Sees 4 Ways to End It., accessed August 16, 2025, https://publicinfrastructure.org/podcast/102-cory-doctorow-enshittification/

17. What Is the Attention Economy? - Coursera, accessed August 16, 2025, https://www.coursera.org/articles/attention-economy

18. The Attention Economy | Institute for Digital Transformation, accessed August 16, 2025, https://www.institutefordigitaltransformation.org/the-attention-economy/

19. The Attention Economy - Center for Humane Technology, accessed August 16, 2025, https://www.humanetech.com/youth/the-attention-economy

20. How does competing in the attention economy shape the social media products we use?, accessed August 16, 2025, https://www.khanacademy.org/college-careers-more/social-media-challenges-and-opportunities/xbcfeb71becefc1ac:the-attention-economy/xbcfeb71becefc1ac:how-the-attention-economy-shapes-social-media/a/how-does-competing-in-the-attention-economy-shape-the-social-media-products-we-use

21. The 'dead internet theory' makes eerie claims about an AI-run web. The truth is more sinister - UNSW Sydney, accessed August 16, 2025, https://www.unsw.edu.au/newsroom/news/2024/05/-the-dead-internet-theory-makes-eerie-claims-about-an-ai-run-web-the-truth-is-more-sinister

22. Dead Internet theory - Wikipedia, accessed August 16, 2025, https://en.wikipedia.org/wiki/Dead_Internet_theory

23. The Dead Internet Theory: Why Being Online Feels Empty | Psychology Today, accessed August 16, 2025, https://www.psychologytoday.com/us/blog/silicon-psyche/202501/the-dead-internet-theory-why-being-online-feels-empty

24. The Impact of AI on Music Creation: How Technology is Shaping the Future of Sound, accessed August 16, 2025, https://illustratemagazine.com/the-impact-of-ai-on-music-creation-how-technology-is-shaping-the-future-of-sound/

25. Opinion: Generative AI's Profound Impact on the Music Industry ..., accessed August 16, 2025, https://redlineproject.news/2025/03/20/opinion-generative-ais-profound-impact-on-the-music-industry-2/

26. How AI Is Transforming Music | TIME, accessed August 16, 2025, https://time.com/6340294/ai-transform-music-2023/

27. How AI is transforming the creative economy and music industry - Ohio University, accessed August 16, 2025, https://www.ohio.edu/news/2024/04/how-ai-transforming-creative-economy-music-industry

28. The Intersection of Art and AI: Music Legends Respond to Digital Creativity, accessed August 16, 2025, https://www.vinylmeplease.com/blogs/music-industry-news/the-intersection-of-art-and-ai-music-legends-respond-to-digital-creativity

29. The Impact of Artificial Intelligence on Music Production: Creative Potential, Ethical Dilemmas, and the Future of the Industry - NHSJS, accessed August 16, 2025, https://nhsjs.com/2025/the-impact-of-artificial-intelligence-on-music-production-creative-potential-ethical-dilemmas-and-the-future-of-the-industry/

30. AI in the Music Industry: Transforming Music Production, Discovery, and Data - DataArt, accessed August 16, 2025, https://www.dataart.com/blog/ai-in-the-music-industry-transforming-music-production-discovery-and-data-by-sergey-bludov

31. The Positive Influences of Social Media: Surprising Insights - ProfileTree, accessed August 16, 2025, https://profiletree.com/positive-influences-of-social-media-insights/

32. Creating Niche Communities: Brand Engagement Guide | Bettermode, accessed August 16, 2025, https://bettermode.com/blog/niche-communities

33. Niche Communities Success : 7 Powerful Ways Influencers Can Transform it | ProfileTree, accessed August 16, 2025, https://profiletree.com/niche-communities-success/

34. How to Succeed on Substack with Substack Employee Linda Lebrun - YouTube, accessed August 16, 2025, https://www.youtube.com/watch?v=_8cGZzS72Sk

35. Digital Platforms and Journalistic Careers: A Case Study of Substack ..., accessed August 16, 2025, https://www.cjr.org/tow_center_reports/digital-platforms-and-journalistic-careers-a-case-study-of-substack-newsletters.php

36. Top 20 Substack Influencers in the US in 2025 - Favikon, accessed August 16, 2025, https://www.favikon.com/blog/top-substack-influencers-united-states

37. Thriving in Online Communities, accessed August 16, 2025, https://www.numberanalytics.com/blog/thriving-in-online-communities

38. Generative AI and the future of content creation at scale - SeedBlink, accessed August 16, 2025, https://seedblink.com/blog/2025-04-23-generative-ai-and-the-future-of-content-creation-at-scale

39. The Rise of AI-Generated Content: Expert Insights on the 90% AI ..., accessed August 16, 2025, https://www.makebot.ai/blog-en/the-rise-of-ai-generated-content-expert-insights-on-the-90-ai-powered-web-by-2025

40. The Cultural Impact of AI Generated Content: Part 2 | by Stephanie ..., accessed August 16, 2025, https://medium.com/data-science/the-cultural-impact-of-ai-generated-content-part-2-228bf685b8ff

41. Effect of Negative Online Content on Mental Health | Blog ..., accessed August 16, 2025, https://www.talktoangel.com/blog/effect-of-negative-online-content-on-mental-health

42. How Media Consumption Impacts Your Mental Health And Happiness | MyWellbeing, accessed August 16, 2025, https://mywellbeing.com/therapy-101/how-media-affects-mental-health

43. Scrolling and Stress: The Impact of Social Media on Mental Health - McLean Hospital, accessed August 16, 2025, https://www.mcleanhospital.org/essential/social-media

44. What Drives Mental Health and Well-Being Concerns: A Snapshot of the Scientific Evidence, accessed August 16, 2025, https://www.ncbi.nlm.nih.gov/books/NBK594764/

45. Study: Browsing negative content online makes mental health struggles worse, accessed August 16, 2025, https://bcs.mit.edu/news/study-browsing-negative-content-online-makes-mental-health-struggles-worse

46. The impact of generative artificial intelligence on socioeconomic inequalities and policy making - PMC, accessed August 16, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11165650/

47. The impact of artificial intelligence on human society and bioethics - PMC, accessed August 16, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC7605294/

48. Explained: Generative AI's environmental impact | MIT News, accessed August 16, 2025, https://news.mit.edu/2025/explained-generative-ai-environmental-impact-0117

49. Ethics of Artificial Intelligence | UNESCO, accessed August 16, 2025, https://www.unesco.org/en/artificial-intelligence/recommendation-ethics

50. Economic potential of generative AI | McKinsey, accessed August 16, 2025, https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier