The hypothesis—that Big Tech wins through superior speed and collaboration—is correct. However, these “collaborative” methodologies are not benign.

They are highly effective, asymmetric competitive strategies designed to outpace, overwhelm, and ultimately obsolete both rivals and regulators.

Report on the Ascendancy of Generative AI and the Asymmetric Conflict for Critical Rights

by Gemini 2.5 Pro, Deep Research. Warning, LLMs may hallucinate!

Chapter I: The 2025 Copyright Doctrine: A Fractured Foundation

The legal and regulatory landscape governing artificial intelligence (AI) in 2025 is defined by profound uncertainty, fragmentation, and a series of contradictory judicial rulings. This state of ambiguity is not a neutral condition; it is a strategic terrain that overwhelmingly favors the operational velocity and vast capital resources of Big Tech developers. While rights holders across the creative industries seek clear, defensible lines, the AI industry thrives in the legal “doldrums of discovery,” 1 leveraging the slow pace of the courts to achieve market saturation before the law is settled. With no fewer than 51 copyright lawsuits pending against AI companies as of October 2025 1 and no final summary judgment decisions on the core issue of fair use expected until mid-2026 at the earliest 1, the definitive legal battle remains perpetually on the horizon.

This protracted uncertainty, which forces a highly fact-specific analysis in every individual case 2, allows AI developers to sustain a multi-front legal war that smaller rights holders cannot afford to wage. The current judicial sentiment is best understood as a “Fair Use Triangle,” 1 a trio of conflicting district court opinions that have left the foundational doctrines of copyright law unstable and ill-equipped for the generative era.

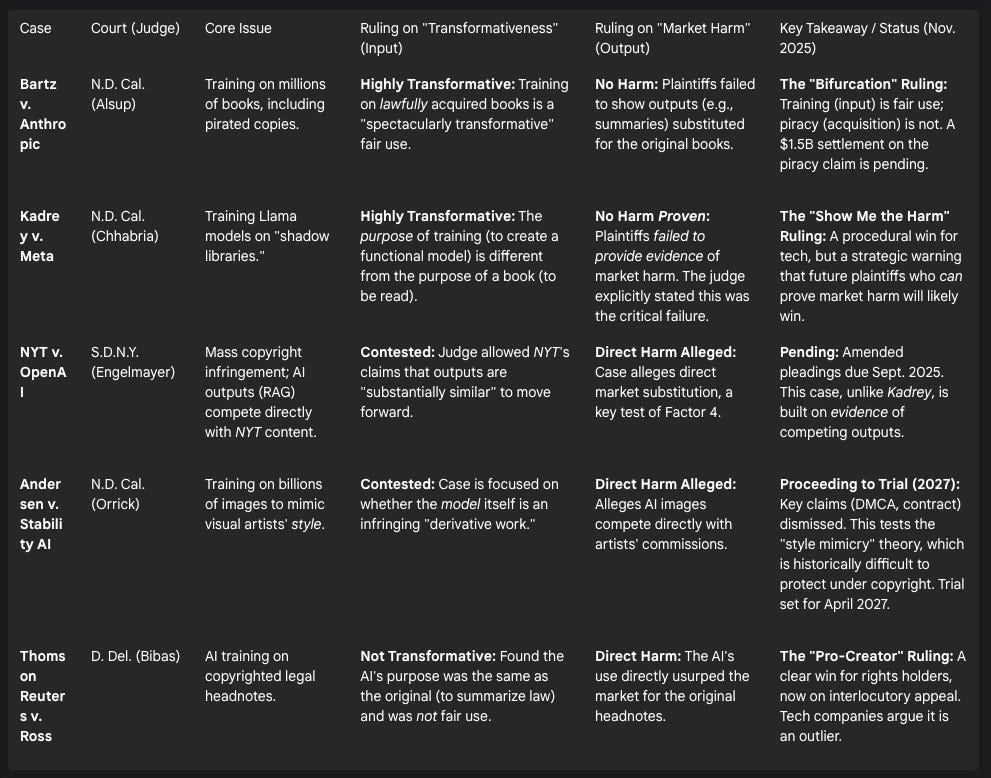

Section 1.1: The “Fair Use” Triangle: Conflicting Judicial Rulings of 2025

The legal architecture for AI training is currently being built on the shifting sands of three core, and conflicting, district court opinions.

First, in Bartz v. Anthropic, Judge William Alsup of the Northern District of California established the cornerstone of Big Tech’s legal strategy. In a June 2025 summary judgment ruling, he found that the act of training an AI model on lawfully acquired copyrighted works was “highly,” “spectacularly,” and “quintessentially” transformative.2 This ruling, while not binding on other courts, provided the entire AI industry with its primary legal beachhead: the argument that training is a non-expressive, mechanical process of learning that constitutes a protected fair use.

Second, in Kadrey v. Meta, Judge Vince Chhabria, sitting in the same district, provided a stark contrast just two days later.3 While he also granted summary judgment to Meta, it was a procedural victory, not a substantive one. Judge Chhabria openly criticized Judge Alsup’s reasoning, calling his analogy of AI training to “training schoolchildren to write well” “inapt”.3 He found the training use “highly transformative” 3 but emphasized that the plaintiffs—a small group of authors—had simply failed to provide any evidence of market harm.3 He issued a stern warning that his decision “does not stand for the proposition that Meta’s use of copyrighted materials to train its language models is lawful,” but only that “these plaintiffs made the wrong arguments and failed to develop a record in support of the right one”.10

Third, in Thomson Reuters v. Ross, an earlier ruling from the District of Delaware provides the primary counter-narrative for rights holders.2 In this case, the court rejected the AI company’s fair use defense, finding that its use of copyrighted legal headnotes was not transformative and directly harmed the market.11 This case, now on interlocutory appeal 1, represents the core legal argument that rights holders are advancing: that AI training is nothing more than mass, mechanical reproduction for a commercial purpose that directly usurps the original work’s market.

This judicial fracturing is summarized in Table 1 below.

Table 1: 2025 AI Copyright & Fair Use Rulings (U.S. Federal Courts)

This legal chaos has been further complicated by the rights holders’ own legal maneuvers. In a recent partial dismissal in the Authors Guild v. OpenAI case, Judge Paul Engelmayer’s ruling included language suggesting that for a work to have copyright, it must have “a specific human author whose protectable expression can be identified”.17 This language, intended to bolster the Authors Guild’s claim that only humans can create copyrightable work, has created a bizarre and dangerous legal fissure.

Legal scholar Matthew Sag, analyzing the decision, warned of a potential “copyright winter for Wikipedia”.17 The logic follows that if a work must have a single, identifiable human author, then collectively-authored works like Wikipedia, created by thousands of anonymous volunteers, may not qualify for copyright protection at all.17 The very lawsuit intended to protect authors from AI scraping may have inadvertently created a legal precedent that strips copyright from one of the world’s largest and most critical training datasets, effectively classifying it as public domain content. This demonstrates a critical strategic failure: rights holders, in their haste to litigate, risk creating unintended legal precedents that benefit Big Tech far more than any courtroom victory could.

Section 1.2: “Transformativeness” vs. “Market Harm”: Deconstructing the Core Legal Arguments

The entire legal war is being fought over the four factors of fair use, as outlined in Section 107 of the Copyright Act.18 Big Tech has focused its legal and rhetorical strategy almost exclusively on Factor 1: “The purpose and character of the use”.18 Their core argument is that using a work for AI training is a “non-expressive” use.19 The AI, they claim, is not “reading” the book for its creative expression but is merely ingesting it to learn statistical patterns of language.19 This, they argue, is inherently “transformative” and thus a protected fair use.3

Rights holders, including the Authors Guild and the Motion Picture Association (MPA), counter that this is a disingenuous legal fiction. They argue that AI companies seek out high-quality, professionally authored works precisely because of their expressive content. It is the “high-quality, professionally authored” expression that is “vital to enabling an LLM to produce outputs that mimic human language, story structure, character development, and themes”.19

The U.S. Copyright Office (USCO), in its (non-binding) May 2025 report on generative AI training, largely validated the rights holders’ position.18 The USCO guidance stated:

“Transformativeness” Must Be Meaningful, Not Mechanical: The office rejected the idea that any non-human, mechanical use is automatically transformative.18

Competing Use is Not Transformative: The USCO stated that training an AI model to produce expressive content that competes with the originals (e.g., training on novels to write new novels) is “at best, modestly transformative”.20

Market Harm is Central: The report emphasized that Factor 4, “The effect of the use upon the potential market for or value of the copyrighted work,” remains a “central concern”.18

This guidance, combined with the Kadrey v. Meta ruling, reveals a crucial shift in the legal battlefield. Judges appear increasingly willing to accept the input (the act of training) as transformative, or at least to find the question too complex to dismiss. This has effectively shifted the entire burden of proof to Factor 4: market harm.

Judge Chhabria’s Kadrey opinion is the blueprint for this new reality. He explicitly called Factor 4 “the single most important element”.7 He argued that the scale of generative AI, specifically its ability to create “literally millions of secondary works” in a “miniscule fraction of the time,” distinguishes it from any previous technology.3 For this reason, he concluded that future plaintiffs who bring actual evidence of market harm (rather than “mere speculation”) will likely “decisively win the fourth factor” and thus “win the fair use question overall”.3

The strategic implication is profound. Big Tech has successfully established that the act of training is presumptively transformative. They have shifted the burden of proof to rights holders, who no longer win simply by proving their work was copied. Rights holders must now prove specific, quantifiable market harm resulting from the output of the models. This is a much more difficult, expensive, and data-intensive legal test to meet, one that heavily favors the tech incumbents.

Section 1.3: The Getty v. Stability AI UK Ruling: A Blow to Global Copyright Enforcement

While U.S. law remains fractured, the UK High Court delivered a significant, albeit narrow, victory to AI developers in November 2025.23 In Getty Images v. Stability AI, the London-based AI firm successfully resisted Getty’s core copyright infringement claims.

The ruling was a strategic disaster for Getty. Its primary claim of copyright infringement failed because it could not provide evidence that the training (the infringing act of copying) had taken place in the UK.23 Stability AI, the defendant, is a UK-based company, but the physical location of its training infrastructure was not established as being within the court’s jurisdiction for that claim.

More devastatingly, the judge, Mrs Justice Joanna Smith, ruled that an AI model like Stable Diffusion, which does not store or reproduce the original images, is not an “infringing copy” under UK law.23

This ruling effectively green-lights a massive, exploitable jurisdictional loophole that can be described as “copyright laundering.” The case establishes two critical precedents: first, that legal leverage for an input claim is tied exclusively to the physical location of the training servers 23; and second, that the resulting model is not itself an infringing copy.23

The logical strategy for any AI developer is now clear: conduct all “infringing” training activities in a jurisdiction with weak or non-existent IP laws (a “data haven”). Once the model is trained, it can be deployed globally, including in the UK, with impunity, as the model itself is “clean.”

This development makes the creative industry’s calls for mandatory transparency the single most important and viable counter-strategy.23 Without a legally mandated paper trail showing what data was used and where it was trained, it will become jurisdictionally impossible for rights holders to enforce their copyrights against a globally distributed AI industry.

Chapter II: The Anthropic Precedent: How a $1.5 Billion “Loss” Solidified Big Tech’s Victory

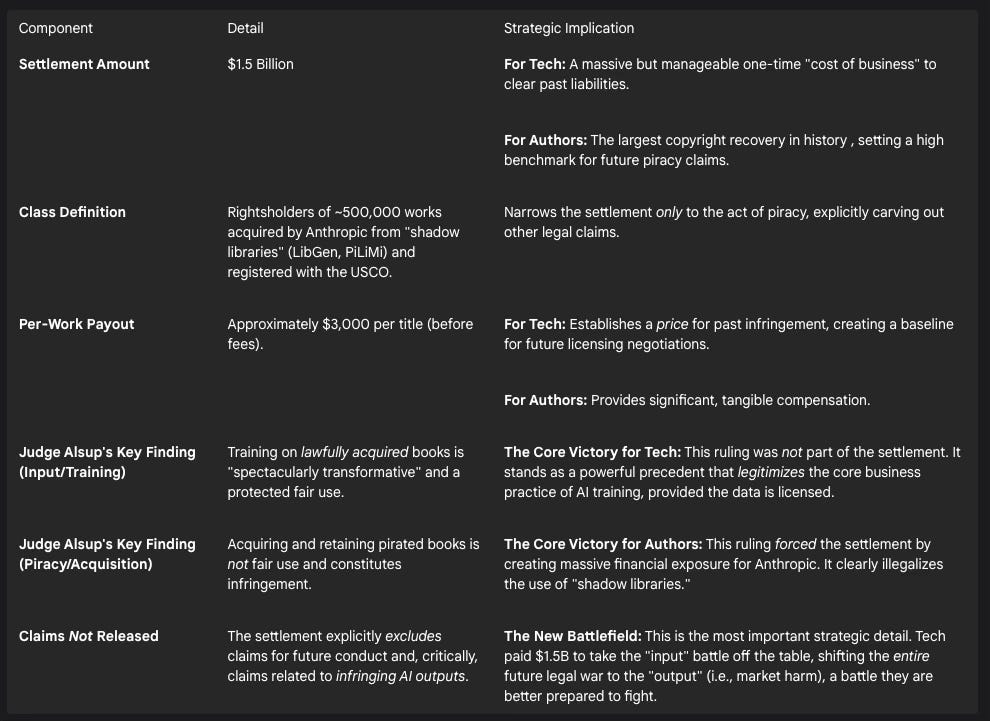

The proposed $1.5 billion settlement in Bartz v. Anthropic, announced in 2025, has been widely hailed by author groups as a historic victory.24 This analysis, however, reframes this event. The settlement was not a defeat for Big Tech but a calculated “cost of business”—a one-time “piracy cleanup” fee that successfully neutralized the industry’s single greatest legal liability (past infringement via “shadow libraries”) while simultaneously cementing its most important legal victory (the right to train on legally acquired data).

Section 2.1: Deconstructing the Alsup Ruling: The “Piracy vs. Training” Bifurcation

The pivotal moment of the Bartz case was not the settlement, but Judge Alsup’s June 2025 summary judgment ruling.5 In a masterful stroke of judicial reasoning, Judge Alsup bifurcated the case, creating two distinct legal concepts that would define the future of the industry:

The Act of Training: He ruled that Anthropic’s use of lawfully acquired books for the purpose of training its AI model was “quintessentially transformative” and therefore a protected fair use.3 This was a monumental, precedent-setting victory for the entire AI industry, providing the legal certainty it had been desperate for.

The Act of Acquisition: He simultaneously ruled that Anthropic’s acquisition and retention of millions of books from known piracy sites (“shadow libraries” like LibGen and PiLiMi) was not fair use and constituted infringement.3

This ruling provided the “Anthropic Roadmap” for all AI development.32 Judge Alsup handed the industry its legal playbook: piracy is illegal, but training is not. The strategic imperative for every AI company became, overnight, to “clean up” its data pipeline and begin striking licensing deals.

Before this ruling, whether AI training was legal at all was a massive, existential question mark. Judge Alsup’s decision isolated the “toxic” part of Anthropic’s conduct (the piracy) from the “essential” part (the training). He effectively told the industry, “You can do this, you just have to pay for your inputs, just like any other business.” This ruling, celebrated by the Authors Guild as a win against theft 24, was, in fact, the biggest win Big Tech could have hoped for. It transformed their potential existential legal crisis into a simple business negotiation over licensing costs.

Section 2.2: The Price of Piracy: Calibrating the New Licensing Market

Judge Alsup’s ruling set the stage for the settlement. After his decision, he certified a class action only for the piracy claim.5 This was the critical move. Because statutory copyright damages can reach $150,000 per infringed work, Anthropic was suddenly facing a credible, existential threat of hundreds of billions of dollars in potential damages.5

Faced with this trial, Anthropic settled for $1.5 billion.12 This figure represents the largest copyright recovery in history 29, covering an estimated 500,000 copyrighted works 12 at a rate of approximately $3,000 per work.12
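The arithmetic behind these figures can be checked with a short sketch. It uses only the numbers reported above (the $1.5 billion total, the estimated 500,000 works, and the $150,000 per-work statutory ceiling). The function and variable names are illustrative, and applying the ceiling to just the 500,000 settled works is an assumption made for comparison, not a figure from the case record.

```python
# Figures as reported in the text above; the work count is an estimate.
SETTLEMENT_USD = 1_500_000_000
WORKS_COVERED = 500_000
STATUTORY_MAX_PER_WORK_USD = 150_000  # ceiling cited in the text


def per_work_rate(total_usd: int, works: int) -> float:
    """Average settlement payment per covered work."""
    return total_usd / works


def max_statutory_exposure(works: int, cap_usd: int = STATUTORY_MAX_PER_WORK_USD) -> int:
    """Theoretical ceiling if every work drew the maximum statutory award."""
    return works * cap_usd


rate = per_work_rate(SETTLEMENT_USD, WORKS_COVERED)
# Assumption: the ceiling is applied only to the works covered by the settlement.
exposure = max_statutory_exposure(WORKS_COVERED)
share_of_ceiling = rate / STATUTORY_MAX_PER_WORK_USD

print(f"Per-work rate: ${rate:,.0f}")
print(f"Ceiling for the same works: ${exposure:,}")
print(f"Settlement rate as a share of the per-work ceiling: {share_of_ceiling:.1%}")
```

Under these assumptions the per-work rate is a small fraction of the statutory ceiling. The number of works is kept as a parameter because the count at issue before settlement is not stated in the text.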

This settlement is not a deterrent; it is a market-calibrating event. The $1.5 billion is not a “punishment” for AI; it is a retroactive “piracy cleanup” fee.28 It establishes, for the first time, a market price (approx. $3,000/work) for past, large-scale, willful infringement.29

This legitimizes AI innovation.36 It clears the legal and financial decks, allowing Anthropic and its competitors to move forward. Far from slowing down AI, this settlement accelerates the development of new licensing frameworks.29 Big Tech, now armed with legal clarity and a price benchmark, will move to dominate this new market for training data. OpenAI, for example, already holds a 53% market share in these new licensing deals.36 The settlement has simply, and expensively, reset the negotiating table.

Table 2: The Bartz v. Anthropic Settlement Terms & Strategic Implications

Section 2.3: The Settlement’s Unanswered Questions: Judge Alsup’s Skepticism and the “Output” Loophole

The Bartz settlement is not yet final. Judge Alsup has repeatedly expressed deep skepticism about the agreement. In court, he has “skewered” the deal 39 and, in a significant move, refused to grant preliminary approval.12 He has demanded more information, including a “drop-dead list” of all pirated books and a clearer explanation of the claims process.39 He also raised concerns about the “behind the scenes” role of the Authors Guild and Association of American Publishers, worrying they might be pressuring authors to accept a deal that is not in their best interests.39 This judicial skepticism highlights the fragility of attempting to resolve such a novel and complex class action.

However, the most critical detail of the proposed settlement is what it excludes. The agreement explicitly does not release Anthropic from any claims for future conduct. Most importantly, it does not cover claims based on allegedly infringing AI outputs.29

This “output loophole” is the real story. The $1.5 billion settlement 25 covers only the past ingestion of pirated data. It does nothing to prevent an author from suing Anthropic tomorrow if its Claude model generates a detailed summary of their book that constitutes an infringing derivative work.14

This aligns perfectly with the legal consensus forming in the Kadrey case 3 and the USCO guidance 20: the new, defensible battleground for creators is market harm from the output. The Bartz settlement, far from ending the war, was a brilliant strategic concession by Big Tech. They paid a massive, one-time fee to take the messy, indefensible “input” (piracy) issue off the table, allowing them to focus all future legal resources on defending the “output” (market substitution), which is the battle they are far more confident they can win.

Chapter III: The “Speed and Collaboration” Doctrine: Big Tech’s Asymmetric Advantages

The hypothesis—that Big Tech wins through superior speed and collaboration—is correct. However, these “collaborative” methodologies are not benign; they are highly effective, asymmetric competitive strategies designed to outpace, overwhelm, and ultimately obsolete both rivals and regulators. This doctrine is waged on three fronts: weaponized open-source development, unified legislative lobbying, and the capture of technical standards bodies.

Section 3.1: Weaponized Open Source: Building an Unregulatable Ecosystem

Big Tech’s “collaboration” through open-source AI is its primary competitive weapon.41 This strategy is led by Meta, with its Llama family of models 42, and French startup Mistral, whose models are also open.43 By releasing powerful, high-performance models for free, they achieve several strategic goals simultaneously. First, they accelerate innovation across the entire ecosystem.41 Second, they commoditize the AI model layer, preventing a single proprietary company (like OpenAI) from achieving a durable market monopoly. This competitive pressure is so intense that in mid-2025, even the historically “closed” OpenAI released its first open-weight models to keep pace.46

This “innovation-first” approach is explicitly endorsed by the U.S. government. The Trump Administration’s “America’s AI Action Plan” actively promotes open-source AI models as a key pillar of its strategy to accelerate innovation and ensure American global dominance in the field.47

The true strategic brilliance of this “collaboration,” however, is that it creates an anti-regulatory moat. By open-sourcing a powerful model, Big Tech (especially Meta) creates an ecosystem that is too fast, too diffuse, and too distributed to be effectively regulated.

This dynamic can be seen in its conflict with the EU AI Act. Regulators in Brussels want to impose rules and liability on “GPAI model providers”.49 Meta’s strategy 43 is not just to compete with OpenAI 46; it is to create millions of providers by “collaborating” with a global community of developers. How can the European Commission 50 or the Authors Guild 24 enforce rules, serve takedown notices, or file lawsuits against a million individual developers, small businesses, and researchers around the world who download and fine-tune an open-source model? They cannot.

“Open source” is therefore a strategy to outrun and obsolete the very concept of top-down regulation. It ensures that by the time a law is fully implemented, the technology is already too widespread and democratized to control.

Section 3.2: Capturing the Rule-Makers (Part 1): The Legislative Lobbying Campaign

Big Tech’s second collaborative front is a unified, expensive, and highly effective lobbying campaign waged in legislative capitals.51 This strategy is most visible in the ongoing battle over the EU AI Act.

U.S. tech giants—including Apple, Meta, Amazon, and Google—and their powerful lobbying groups, such as the Computer and Communications Industry Association (CCIA), have launched a “sustained” and “intense” campaign.53 Their stated goal is “simplification” of the rules.56

This corporate lobbying is powerfully amplified by the U.S. government. The Trump Administration has aggressively framed the EU AI Act as a protectionist “trade barrier” designed to “disadvantage” and “discriminate against” U.S. tech companies.56 The U.S. has even threatened retaliatory tariffs if the rules are used as trade barriers.57

The result, as of November 2025, is a clear and unambiguous victory for Big Tech’s collaborative strategy. The European Commission is “watering down” the AI Act.59 Facing pressure from both industry and the U.S. government, the Commission is now actively “considering delaying parts” of the Act.53 These proposed changes include:

A one-year “grace period” for high-risk AI systems.54

A pause on provisions for generative AI providers.59

A delay in the imposition of fines until at least August 2027.54

This represents a direct return on investment for Big Tech’s unified lobbying efforts, successfully blunting the world’s most comprehensive AI regulation before it even takes full effect.

Section 3.3: Capturing the Rule-Makers (Part 2): Dominating Technical Standards

This is Big Tech’s most brilliant and insidious “collaborative” strategy. More powerful than fighting a law (lobbying) is writing the technical standards that define compliance with that law.

As the public and regulators demand transparency and content provenance to combat deepfakes and identify AI-generated content 23, a solution has emerged. The Coalition for Content Provenance and Authenticity (C2PA) has positioned its open technical specification as the standard that provides this “nutrition label” for digital content.61 This specification is expected to be adopted by ISO as an international standard by 2025.63

The critical question is: who founded and leads the C2PA? The steering committee is a list of the very companies at the center of the AI revolution: Adobe, Microsoft, Google, Intel, Sony, BBC, Amazon, and, as of May 2024, OpenAI.61

The strategic implications are staggering. The very companies creating the “problem” (a flood of undetectable, synthetic media) have successfully and proactively positioned themselves as the sole providers of the “solution.”

They will now define, at a technical-specification level 66, what “provenance” and “transparency” mean. They will build the compliance infrastructure 63 in a way that serves their models, platforms, and commercial interests. This effectively locks out competitors and ensures that any future regulation (like the EU AI Act’s transparency requirements) must defer to their standard. This is a masterful, proactive “external engagement” 68 that constitutes a complete capture of the means of, and definition of, compliance.

This same strategy is being deployed with quasi-regulatory bodies like the U.S. National Institute of Standards and Technology (NIST). While industry standards are “voluntary,” 69 Big Tech’s deep, “collaborative” engagement with NIST’s AI Risk Management Framework 70 allows them to shape the “best practices” that will inevitably form the basis of future, and favorable, regulation.

Chapter IV: The Secondary Fronts: Data Privacy and Human Rights

The “speed and collaboration” playbook is not limited to copyright. Big Tech is applying the same asymmetric strategies to win the concurrent battles over data privacy and fundamental human rights, such as the right to be free from algorithmic bias. In these domains, regulatory friction and fragmentation are not obstacles but strategic tools to cement market dominance.

Section 4.1: The Transatlantic Regulatory Schism: Arbitraging Freedom and Friction

The central feature of the 2025 global AI regulatory landscape is the profound and widening schism between the United States and the European Union.48

The European Union: The EU AI Act, which entered into force in August 2024 with a phased implementation through 2027 49, is a comprehensive, centralized, rights-based framework.73 It takes a “risk-based” approach:

It prohibits “unacceptable risk” AI systems, such as social scoring, “real-time” remote biometric identification in public spaces by law enforcement (subject to narrow exceptions), and emotion recognition in workplaces or schools.75

It imposes strict obligations (e.g., data governance, monitoring, transparency) on “high-risk” systems used in employment, education, finance, and law enforcement.76

It places specific transparency and copyright-compliance obligations on General Purpose AI (GPAI) models.49

The United States: The U.S. has no comprehensive federal AI law.80 The national strategy is aggressively deregulatory. The Trump Administration rescinded the previous, more cautious Biden-era Executive Order in January 2025 80 and released “America’s AI Action Plan” in July 2025.81 The plan’s goal is to “remove barriers” 80 and prioritize global economic and security “dominance” over precautionary, rights-based regulation.48

This schism is not a problem for Big Tech; it is their single greatest strategic asset. They are able to arbitrage this regulatory divergence.

The mechanism is simple: Tech companies use the U.S. de-regulatory sandbox 48 to build, train, and scale their models with maximum speed and minimal friction. They then turn to the EU compliance market 82, where their massive, in-house legal and engineering teams can easily absorb the high costs of compliance (e.g., bias audits 78, transparency reports 60).

This combination of speed in their home market and friction abroad creates an unassailable “regulatory moat.” Startups and smaller competitors are crushed. They cannot afford the EU’s high compliance costs, and they cannot match the U.S. incumbents’ scale, which was built in a regulation-free environment. Big Tech thus uses U.S. speed to build market dominance and EU friction to kill competition.

Section 4.2: Data Privacy: A Fragmented Battlefield that Favors Incumbents

On the data privacy front, a similar “fragmentation” strategy is at play. In the U.S., the lack of a federal privacy law 84 has created a “patchwork” of disparate state laws.84 This patchwork is complex but manageable for large incumbents. Notably, 2025 was the first year since 2020 in which no new comprehensive state privacy law was enacted, signaling a potential slowdown in regulatory momentum.86

This fragmentation is a huge advantage for Big Tech. While they face numerous class-action lawsuits for data scraping 87, the legal theories are weak and varied (e.g., Computer Fraud and Abuse Act, invasion of privacy) and are difficult to apply to data that was publicly posted on the internet.87

In the EU, the General Data Protection Regulation (GDPR) provides a much stronger legal basis against scraping.88 However, as reported in November 2025, an intense lobbying campaign is underway to gut the GDPR’s “purpose limitation principle”.90 This is the core rule that prevents a company from collecting data for one purpose (e.g., social media) and re-using it for another, unrelated purpose (e.g., AI model training). Gutting this principle is a top priority for tech giants, as it would retroactively legalize their primary source of training data.90

Furthermore, new regulations, while well-intentioned, often serve as a “moat.” New rules from the California Privacy Protection Agency (CPPA) on Automated Decision-Making Technology (ADMT), which take effect in 2027 91, will require complex and expensive cybersecurity and risk assessments.91 A company like Google or Meta can afford this compliance overhead; a new startup competitor cannot. In this way, complex, fragmented privacy rules favor incumbents and solidify the dominance of the very companies the rules were meant to restrain.

Section 4.3: Algorithmic Bias and Civil Rights: The “Compliance” Checklist

A critical human rights battle is being waged over AI-driven discrimination in employment, housing, and finance.93 Both the EU and U.S. are attempting to regulate this. The EU AI Act places strict requirements on “high-risk” systems in these sectors.78 In the U.S., states like Colorado and cities like New York have passed their own bias-audit laws.78

Here, Big Tech is deploying its “speed and collaboration” strategy to defang these regulations before they can have an impact. They are successfully turning a fundamental human rights issue into a technical compliance checklist that they themselves are writing.

The process works as follows: Regulators mandate abstract concepts like “fairness” and “bias mitigation”.78 These abstract concepts must then be translated into concrete technical standards—for example, “What statistical metrics define ‘fairness’?” or “What procedures constitute a valid ‘bias audit’?”

Big Tech, through its deep and “collaborative” engagement with standards bodies like NIST 69 and its own heavily-promoted internal “Responsible AI” frameworks 68, is proactively defining these technical standards.

By “collaborating” with regulators to write the rulebook, they are successfully “capturing” the regulatory intent. They will ensure that “fairness” is defined in a way their systems can easily pass, and “bias audits” are reduced to a checklist. This will allow them to satisfy the letter of the law on paper, claiming full compliance, while changing little in their actual products or practices.
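The stakes of that translation step can be illustrated with a minimal sketch. Assuming entirely hypothetical hiring outcomes for two groups, it computes two metrics that are common in the fairness literature: the selection-rate impact ratio (often checked against the “four-fifths” rule of thumb from U.S. employment guidance) and the gap in true-positive rates among qualified candidates. The data, the class and function names, and the 0.10 gap threshold are assumptions for illustration; nothing here is claimed to be the test that any statute or standards body cited above actually prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupOutcomes:
    """Hypothetical screening outcomes for one demographic group."""
    qualified_selected: int
    qualified_rejected: int
    unqualified_selected: int
    unqualified_rejected: int

    @property
    def selection_rate(self) -> float:
        """Share of all applicants in the group who were selected."""
        selected = self.qualified_selected + self.unqualified_selected
        total = selected + self.qualified_rejected + self.unqualified_rejected
        return selected / total

    @property
    def true_positive_rate(self) -> float:
        """Share of qualified applicants in the group who were selected."""
        qualified = self.qualified_selected + self.qualified_rejected
        return self.qualified_selected / qualified


def impact_ratio(a: GroupOutcomes, b: GroupOutcomes) -> float:
    """Lower selection rate divided by the higher one."""
    low, high = sorted([a.selection_rate, b.selection_rate])
    return low / high


def tpr_gap(a: GroupOutcomes, b: GroupOutcomes) -> float:
    """Absolute difference in true-positive rates between the groups."""
    return abs(a.true_positive_rate - b.true_positive_rate)


FOUR_FIFTHS = 0.80   # common rule-of-thumb threshold for the impact ratio
MAX_TPR_GAP = 0.10   # hypothetical threshold, chosen only for this example

# Made-up counts: both groups have 100 applicants.
group_a = GroupOutcomes(40, 10, 10, 40)
group_b = GroupOutcomes(18, 22, 27, 33)

ratio = impact_ratio(group_a, group_b)
gap = tpr_gap(group_a, group_b)
passes_impact_ratio = ratio >= FOUR_FIFTHS
passes_tpr_gap = gap <= MAX_TPR_GAP

print(f"Impact ratio: {ratio:.2f} (passes: {passes_impact_ratio})")
print(f"True-positive-rate gap: {gap:.2f} (passes: {passes_tpr_gap})")
```

On this made-up data the same system passes the impact-ratio check and fails the true-positive-rate check, which is why the choice of metric matters as much as the mandate itself.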

Chapter V: The Creative Counter-Offensive: A Fragmented, Reactive Defense

Another hypothesis concerns the creative industries. The analysis finds their strategies to be almost the mirror opposite of Big Tech’s. Where Big Tech is unified, proactive, and ecosystem-focused, the creative industries are fragmented, reactive, and asset-focused. They are perpetually one step behind, allowing Big Tech to set the terms, pace, and location of every engagement.

Section 5.1: The War of Attrition: Sector-Specific Litigation

The primary weapon for rights holders is litigation. However, this litigation is siloed by industry, preventing the formation of a unified legal precedent and allowing AI companies to fight (and win) a series of smaller, contained skirmishes.

Publishing: The Authors Guild, along with high-profile authors, is pursuing class actions against OpenAI 14 and Anthropic.24 These efforts have yielded mixed results. The Bartz case resulted in a large settlement 35, but only after the judge handed the AI industry a massive fair use victory on lawfully acquired data.5 Other cases are moving at a glacial pace.1

Visual Arts: The Andersen v. Stability AI case is the key battle for visual artists.13 This case, however, is failing. The court has already dismissed the plaintiffs’ claims for DMCA violations (related to removing copyright management information) and breach of contract.16 This leaves only the core copyright claims, which are difficult to win, as copyright law does not protect an artist’s “style”—the very thing the AI is designed to mimic.15

Music: The music industry’s strategy is fatally fragmented and tactically incoherent. The RIAA, representing the major labels, is suing AI music generators Suno and Udio based on the input—arguing the training on their sound recordings is not fair use.95 Simultaneously, a separate class action by independent artists is suing Suno based on the output—arguing the generated songs are substantially similar.96

This fragmentation has allowed Suno to develop a novel and extremely dangerous legal defense. In its motion to dismiss the indie artist suit, Suno argues that under Section 114(b) of the Copyright Act—a provision specific to sound recordings—an AI-generated song that “exclusively generates new sounds” and contains no actual samples from the original cannot, as a matter of law, infringe the sound recording copyright, no matter how similar it sounds.96

This reveals a critical failure of the music industry’s strategy. The RIAA is repeating its “Napster” mistake. In the 2000s, it pursued a failed strategy of suing thousands of individual users.97 Today, its fragmented legal approach, with one suit focused on “fair use” 95 while another 96 is being defeated by a technical legal argument [48], shows the lack of a unified, coherent legal offense.

Section 5.2: The Rise of Creator Coalitions: A Day Late and a Dollar Short

Rights holders are attempting to mimic Big Tech’s collaborative strategy, but they are doing so defensively and too late in the process.

In the UK, a “Creative Rights in AI Coalition”—which includes the Society of Authors, the Musicians’ Union, and others—has formed to lobby the government for stronger copyright protections and transparency. Polling data shows the public overwhelmingly supports their position: 72% of respondents believe AI companies should pay royalties, and 80% believe they should be required to disclose their training data.

In the EU, a broad coalition of European and global rightsholders engaged extensively in the AI Act’s implementation process.

These coalitions, however, are being systematically out-maneuvered and ignored. In July 2025, the EU creator coalition published a formal statement expressing its “dissatisfaction” with the final GPAI Code of Practice. It stated that despite its “extensive, highly detailed and good-faith engagements,” its feedback was “largely ignored” and the final result was “a missed opportunity” that benefited only the GenAI model providers.

The reason for this failure is timing. Big Tech’s lobbying campaign began before the AI Act was finalized, with the goal of weakening the law itself. The rights holders’ coalition, by contrast, is lobbying on the implementation (the Codes of Practice) after the Act is already in force. Their feedback is being “ignored” precisely because Big Tech’s (and the U.S. government’s) parallel pressure has already convinced the European Commission to “water down” the rules.

The rights holders are collaborating, but they are playing defense on a field Big Tech has already shaped. They are arguing over the room’s furniture while Big Tech is changing the building’s blueprints.

Section 5.3: Legislative Stopgaps: The “NO FAKES” Act

With copyright law failing to protect them, creative industries are lobbying for new rights. The most prominent example is the Nurture Originals, Foster Art, and Keep Entertainment Safe (NO FAKES) Act of 2024/2025. This bipartisan bill would create a new federal “right of publicity” to protect an individual’s voice and visual likeness from unauthorized digital replicas, or “deepfakes”.

This is a direct, reactive response to high-profile AI-generated “fakes” of artists like Drake and Tom Hanks. The Act would establish a DMCA-style notice-and-takedown regime for platforms that host unauthorized replicas.

This Act is a necessary but dangerously narrow fix that perfectly illustrates the creative industry’s reactive posture. It is a “stopgap” that addresses a symptom, not the underlying disease. Furthermore, the bill has already been “ballooned” and revised specifically “to satisfy the demands of Google, YouTube, and other big tech companies,” weakening its provisions.

Most critically, the Act protects a famous person’s persona but does nothing to protect the core intellectual property of the vast majority of creators. It does not protect the narrative style of an author, the compositional patterns of a musician, or the unique artistic signature of a painter. It solves a celebrity’s problem, not the creator’s problem.

Chapter VI: The Playbook: A Comparative Analysis and Strategic Roadmap for Rights Holders

This concluding chapter synthesizes the report’s findings. It provides the requested high-level comparative analysis of the two opposing forces and offers a strategic, actionable playbook for the creative industries to counter the multifaceted threats posed by generative AI.

Section 6.1: The Asymmetric Battlefield: A Comparative Analysis

The conflict between Big Tech and the creative industries is an asymmetric one, pitting a unified, proactive insurgency against a fragmented, reactive incumbency. Big Tech’s “speed and collaboration” is its primary weapon, and it is winning.

Big Tech (The “Open” Insurgency)

Strategy: Proactive, ecosystem-focused, patient, and offensive. Big Tech’s goal is to shape the entire battlefield—the law, the technical standards, and the market—before the first shot is fired.

Tactics:

Weaponized Open Source: Accelerates development and outruns regulation by creating a diffuse, uncontrollable ecosystem.

Collaborative Lobbying: Unifies industry and government pressure to weaken laws before they are implemented.

Standards Capture: Proactively builds and leads technical standards bodies (e.g., C2PA, NIST) to define the terms of compliance in their favor.

Legal Posture: Pushes the legal frontier (“transformativeness” of input). Treats litigation as a cost of doing business and multi-billion-dollar settlements as market-calibrating events that provide legal certainty for future (licensed) operations.

Conclusion: Big Tech is winning because it is not just playing the game; it is designing the game’s rules.

Rights Holders (The “Closed” Incumbents)

Strategy: Reactive, asset-focused, fragmented, and defensive. Rights holders’ goal is to protect existing assets by enforcing old rules on a new paradigm.

Tactics:

Siloed Litigation: Relies on fragmented lawsuits by guild or sector (publishing, music, art), which allows tech to isolate and defeat them.

Defensive Coalitions: Forms reactive coalitions that are consistently out-maneuvered and lobby too late in the process (e.g., on implementation, not formation).

Stopgap Legislation: Lobbies for narrow, specific laws (e.g., NO FAKES Act) that address high-profile symptoms (a celebrity’s voice) rather than the systemic cause (unprotected “style” or “slop” content).

Legal Posture: Fights for input infringement (a battle Judge Alsup’s ruling has made difficult) and output market harm (a battle Judge Chhabria’s ruling shows they are unprepared to fight).

Conclusion: Rights holders are losing because they are playing defense on a field Big Tech designed, abiding by rules Big Tech is helping to write, all while Big Tech’s speed makes their legal victories obsolete upon arrival.

Section 6.2: Sector-Specific Threat Analysis

The AI threat is not uniform. The legal and economic weaknesses of each creative sector are being exploited in different ways.

Publishing & Journalism: The Bartz settlement creates a viable path for licensing, but this victory is already turning inward, as authors and publishers are now in conflict over how to split the licensing revenue. Meanwhile, the settlement did not resolve the core threat: market substitution from generative outputs (like book summaries) or Retrieval-Augmented Generation (RAG) systems (like Perplexity AI) that compete directly with news publishers. The central legal question of “output harm” remains unanswered.

Music: The industry faces a two-front existential threat. First is the voice and likeness cloning of famous artists, which the NO FAKES Act attempts to address. Second is the mass production of low-cost, low-quality “AI slop,” which floods platforms like Spotify (already dealing with 100,000 new human songs a day) and drowns out human artists. Suno’s novel “no-sample” legal defense is a profound legal threat that could effectively render the sound recording copyright obsolete against AI-generated mimics.

Visual Arts & Design: This sector is in the most immediate and severe danger. A 2025 Stanford/UCLA study provides the hard economic data: when generative AI enters an online marketplace, the number of human artists plummets as they are “crowded out” by a superior, faster, and cheaper competitor. This economic devastation is compounded by the legal weakness of “style mimicry” claims, as demonstrated by the failings of the Andersen v. Stability AI case.

Fashion: This industry is uniquely vulnerable because its core products—garments—have historically thin intellectual property protections. Copyright law’s “useful article” doctrine prevents the protection of a dress or handbag design. AI threatens this industry on three fronts:

Collapsing Trend Cycles: AI can analyze global social media trends and generate thousands of new, on-trend designs instantly, moving faster than human design teams.

Diluting Brand Exclusivity: AI can be trained to mimic a brand’s unique “style” or even replicate its watermarks and logos, creating a new generation of “fast-fashion” knockoffs.

Creating Un-protectable Assets: As fashion brands adopt AI for design, they will collide with the “human authorship” requirement. A design generated with significant AI input may not be eligible for any copyright or design patent protection, meaning a brand’s own designs could be copied by competitors with impunity.

Section 6.3: Strategic Recommendations: A Playbook to Thwart the AI Threat

The creative industries cannot win by playing defense. They must abandon their fragmented, reactive posture and adopt the enemy’s playbook: collaboration, speed, and proactive infrastructure development.

Collaborate or Die: Adopt the Enemy’s Model

Rights holders must immediately cease their fragmented, vertical-specific legal battles. The RIAA, Authors Guild, MPA, and Getty must form a single legal and technology consortium. This consortium must pool its resources to fund a unified, multi-billion-dollar war chest dedicated to two purposes: (1) funding systemic, high-stakes litigation and (2) financing the development of competing, creator-centric technology.

Build, Don’t Just Ban: A Creator-Centric Infrastructure

Instead of merely lobbying against Big Tech’s standards, the new creator consortium must build its own.

Build a Competing Standard: The consortium must fund and develop its own open-source standard for content provenance, one that embeds creator-owned metadata, licensing terms, and rights information at the point of creation.

Build Competing Models: They must collectively fund the creation of industry-specific, ethically-sourced generative models (e.g., an “RIAA Model” trained only on the licensed archives of major labels; an “Authors Guild Model” trained on a new, licensed corpus). These high-quality, “clean” models would be the only way to compete with the market-flooding “AI slop” and provide a viable alternative to corporations.

Weaponize Transparency (The “EU Lever”)

This is the single most powerful, asymmetric tool creators possess. The EU AI Act requires GPAI providers to publish “sufficiently detailed” summaries of their training data.

The Getty UK case failed due to a lack of transparency.

The Bartz case succeeded only because the “shadow library” data was discoverable.

The EU AI Act mandates this discovery.

Action: The creator consortium must establish a permanent “AI Act Compliance” office in Brussels. Its sole purpose will be to obtain and analyze the mandatory transparency reports from every AI company. This data must then be used as a global “discovery” mechanism to fuel litigation in other jurisdictions, including the U.S. This is the only way to neutralize Big Tech’s “copyright laundering” strategy and hold them accountable.

Shift the Legal Battlefield from Input to Output

Concede the Bartz precedent: training on lawfully acquired data is likely a winning argument for Tech. The creator consortium must pivot, focusing all legal and financial resources on the battleground defined by Kadrey and the USCO: output-based market harm.

Action: This means funding the economic studies (like the Stanford/UCLA study) to prove, with hard data, that AI outputs are substituting for human-created works and “crowding out” human creators. This is the exact evidence Judge Chhabria stated was missing in Kadrey v. Meta and is the strongest foundation for a winning legal argument.

Embrace AI as Augmentation, Not Replacement

The fear of replacement is paralyzing and strategically counter-productive. The creative industries must reframe AI as a tool for “superagency”: a collaborator that enhances, rather than displaces, human creativity.

Action: The guilds and trade organizations must invest heavily in training their own workforces to become expert users of AI tools. A human creator augmented by AI will always be more valuable, creative, and efficient than an AI alone. This “hybrid skill set” is the only way to compete with the sheer volume and speed of pure AI generation and ensure that human vision, imagination, and guardianship remain at the helm of the creative economy.

Works cited

Status of all 51 copyright lawsuits v. AI (Oct. 8, 2025): no more decisions on fair use in 2025, accessed November 15, 2025, https://chatgptiseatingtheworld.com/2025/10/08/status-of-all-51-copyright-lawsuits-v-ai-oct-8-2025-no-more-decisions-on-fair-use-in-2025/

A Tale of Three Cases: How Fair Use Is Playing Out in AI Copyright Lawsuits | Insights, accessed November 15, 2025, https://www.ropesgray.com/en/insights/alerts/2025/07/a-tale-of-three-cases-how-fair-use-is-playing-out-in-ai-copyright-lawsuits

Fair Use and AI Training: Two Recent Decisions Highlight the ..., accessed November 15, 2025, https://www.skadden.com/insights/publications/2025/07/fair-use-and-ai-training

Anthropic and Meta Decisions on Fair Use | 06 | 2025 | Publications - Debevoise, accessed November 15, 2025, https://www.debevoise.com/insights/publications/2025/06/anthropic-and-meta-decisions-on-fair-use

Bartz v. Anthropic: Settlement reached after landmark summary judgment and class certification | Inside Tech Law, accessed November 15, 2025, https://www.insidetechlaw.com/blog/2025/09/bartz-v-anthropic-settlement-reached-after-landmark-summary-judgment-and-class-certification

Two California District Judges Rule That Using Books to Train AI is Fair Use, accessed November 15, 2025, https://www.whitecase.com/insight-alert/two-california-district-judges-rule-using-books-train-ai-fair-use

Twin California Rulings Mark a Turning Point for AI‐Copyright Fair Use, accessed November 15, 2025, https://frblaw.com/twin-california-rulings-mark-a-turning-point-for-aicopyright-fair-use/

Fair Use and AI Training: Two Recent Decisions Highlight the ..., accessed November 15, 2025, https://www.skadden.com/insights/publications/2025/07/fair-use-and-ai-training#:~:text=(Judge%20Chhabria%2C%20June%2025%2C,without%20permission%20to%20train%20LLMs.&text=In%20each%20case%2C%20the%20court,highly%20transformative%20and%20fair%20use.

AI and Copyright in the Entertainment Industry: Where 2025 Leaves Us, accessed November 15, 2025, https://www.munckwilson.com/news-insights/ai-and-copyright-in-the-entertainment-industry-where-2025-leaves-us/

Federal Courts Find Fair Use in AI Training: Key Takeaways from Kadrey v. Meta and Bartz v. Anthropic - Jackson Walker, accessed November 15, 2025, https://www.jw.com/news/insights-kadrey-meta-bartz-anthropic-ai-copyright/

Does Training an AI Model Using Copyrighted Works Infringe the Owners’ Copyright? An Early Decision Says, “Yes.” | Insights, accessed November 15, 2025, https://www.ropesgray.com/en/insights/alerts/2025/03/does-training-an-ai-model-using-copyrighted-works-infringe-the-owners-copyright

AI Infringement Case Updates: September 15, 2025 - McKool Smith, accessed November 15, 2025, https://www.mckoolsmith.com/newsroom-ailitigation-36

Case Tracker: Artificial Intelligence, Copyrights and Class Actions | BakerHostetler, accessed November 15, 2025, https://www.bakerlaw.com/services/artificial-intelligence-ai/case-tracker-artificial-intelligence-copyrights-and-class-actions/

Authors’ Class Action Lawsuit Against OpenAI Moves Ahead, accessed November 15, 2025, https://www.publishersweekly.com/pw/by-topic/digital/copyright/article/98961-authors-class-action-lawsuit-against-openai-moves-forward.html

AI and Artists’ IP: Exploring Copyright Infringement Allegations in Andersen v. Stability AI Ltd. - Center for Art Law, accessed November 15, 2025, https://itsartlaw.org/art-law/artificial-intelligence-and-artists-intellectual-property-unpacking-copyright-infringement-allegations-in-andersen-v-stability-ai-ltd/

US Court allows claims against text-to-image AI Companies : Sarah Anderson v. Stability AI, accessed November 15, 2025, https://iprmentlaw.com/2024/08/25/us-court-allows-claims-against-text-to-image-ai-companies-sarah-anderson-v-stability-ai/

Wikipedia’s Copyright Chill: AI Ruling Sparks Free Content Fears, accessed November 15, 2025, https://www.webpronews.com/wikipedias-copyright-chill-ai-ruling-sparks-free-content-fears/

Copyright Office Issues Key Guidance on Fair Use in Generative AI Training - Wiley Rein, accessed November 15, 2025, https://www.wiley.law/alert-Copyright-Office-Issues-Key-Guidance-on-Fair-Use-in-Generative-AI-Training

Copyright and Artificial Intelligence, Part 3: Generative AI Training Pre-Publication Version, accessed November 15, 2025, https://www.copyright.gov/ai/Copyright-and-Artificial-Intelligence-Part-3-Generative-AI-Training-Report-Pre-Publication-Version.pdf

Copyright Office Weighs In on AI Training and Fair Use | Skadden, Arps, Slate, Meagher & Flom LLP, accessed November 15, 2025, https://www.skadden.com/insights/publications/2025/05/copyright-office-report

Copyright and Artificial Intelligence | U.S. Copyright Office, accessed November 15, 2025, https://www.copyright.gov/ai/

U.S. Copyright Office Issues Guidance on Generative AI Training | Insights | Jones Day, accessed November 15, 2025, https://www.jonesday.com/en/insights/2025/05/us-copyright-office-issues-guidance-on-generative-ai-training

AI firm wins high court ruling after photo agency’s copyright claim ..., accessed November 15, 2025, https://www.theguardian.com/media/2025/nov/04/stabilty-ai-high-court-getty-images-copyright

Anthropic agrees to pay $1.5B US to settle author class action over AI training | CBC News, accessed November 15, 2025, https://www.cbc.ca/news/business/anthropic-ai-copyright-settlement-1.7626707

Bartz v. Anthropic Settlement: What Authors Need to Know - The ..., accessed November 15, 2025, https://authorsguild.org/advocacy/artificial-intelligence/what-authors-need-to-know-about-the-anthropic-settlement/

Does Using In-Copyright Works as Training Data Infringe? - Wolters Kluwer, accessed November 15, 2025, https://legalblogs.wolterskluwer.com/copyright-blog/does-using-in-copyright-works-as-training-data-infringe/

Judge Pauses Anthropic’s $1.5 Billion Copyright Settlement | ArentFox Schiff, accessed November 15, 2025, https://www.afslaw.com/perspectives/ai-law-blog/judge-pauses-anthropics-15-billion-copyright-settlement

PAYING FOR AI PIRACY: A proposed Settlement Sets to establish a New Commercial Standard for Training AI on Protected Content - Shibolet & Co. Law Firm, accessed November 15, 2025, https://www.shibolet.com/en/paying-for-ai-piracy-a-proposed-settlement-sets-to-establish-a-new-commercial-standard-for-training-ai-on-protected-content/

Anthropic’s Landmark Copyright Settlement: Implications for AI ..., accessed November 15, 2025, https://www.ropesgray.com/en/insights/alerts/2025/09/anthropics-landmark-copyright-settlement-implications-for-ai-developers-and-enterprise-users

Fair use or free ride? The fight over AI training and US copyright law - IAPP, accessed November 15, 2025, https://iapp.org/news/a/fair-use-or-free-ride-the-fight-over-ai-training-and-us-copyright-law

A New Look at Fair Use: Anthropic, Meta, and Copyright in AI Training - Reed Smith LLP, accessed November 15, 2025, https://www.reedsmith.com/en/perspectives/2025/07/a-new-look-fair-use-anthropic-meta-copyright-ai-training

What the $1.5 billion anthropic deal means for generative AI | Okoone, accessed November 15, 2025, https://www.okoone.com/spark/industry-insights/what-the-1-5-billion-anthropic-deal-means-for-generative-ai/

Anthropic Settlement Resets Balance of Power for Content Creators - Bloomberg Law News, accessed November 15, 2025, https://news.bloomberglaw.com/legal-exchange-insights-and-commentary/anthropic-settlement-resets-balance-of-power-for-content-creators

AI Trained on Your Books? Inside the $1.5B Bartz v. Anthropic Settlement for Authors, accessed November 15, 2025, https://kindlepreneur.com/anthropic-ai-lawsuit/

AI startup Anthropic agrees to pay $1.5bn to settle book piracy lawsuit - The Guardian, accessed November 15, 2025, https://www.theguardian.com/technology/2025/sep/05/anthropic-settlement-ai-book-lawsuit

Anthropic’s landmark copyright settlement with authors, accessed November 15, 2025, https://winsomemarketing.com/ai-in-marketing/anthropics-landmark-copyright-settlement-with-authors

Copyright and Generative AI: Recent Developments on the Use of Copyrighted Works in AI, accessed November 15, 2025, https://www.mcguirewoods.com/client-resources/alerts/2025/9/copyright-and-generative-ai-recent-developments-on-the-use-of-copyrighted-works-in-ai/

Anthropic $1.5B Copyright Settlement Sets AI Industry Precedent - Dunlap Bennett & Ludwig, accessed November 15, 2025, https://www.dbllawyers.com/anthropic-1-5b-copyright-settlement-sets-ai-industry-precedent/

Judge skewers $1.5B Anthropic settlement with authors in pirated ..., accessed November 15, 2025, https://apnews.com/article/anthropic-authors-book-settlement-ai-copyright-claude-b282fe615338bf1f98ad97cb82e978a1

Anthropic Judge Blasts $1.5 Billion AI Copyright Settlement (2) - Bloomberg Law News, accessed November 15, 2025, https://news.bloomberglaw.com/ip-law/anthropic-judge-blasts-copyright-pact-as-nowhere-close-to-done

Open-source AI in 2025: Smaller, smarter and more collaborative | IBM, accessed November 15, 2025, https://www.ibm.com/think/news/2025-open-ai-trends

Open source in the age of AI | McKinsey & Company, accessed November 15, 2025, https://www.mckinsey.com/capabilities/tech-and-ai/our-insights/tech-forward/open-source-in-the-age-of-ai

The 11 best open-source LLMs for 2025 - n8n Blog, accessed November 15, 2025, https://blog.n8n.io/open-source-llm/

Llama: Industry Leading, Open-Source AI, accessed November 15, 2025,

Top 10 open source LLMs for 2025 - NetApp Instaclustr, accessed November 15, 2025, https://www.instaclustr.com/education/open-source-ai/top-10-open-source-llms-for-2025/

AI This Week: Big Changes Across Tech and Creative Industries - Trew Knowledge Inc., accessed November 15, 2025, https://trewknowledge.com/2025/08/08/ai-this-week-big-changes-across-tech-and-creative-industries/

America’s AI Action Plan - The White House, accessed November 15, 2025, https://www.whitehouse.gov/wp-content/uploads/2025/07/Americas-AI-Action-Plan.pdf

What drives the divide in transatlantic AI strategy? - Atlantic Council, accessed November 15, 2025, https://www.atlanticcouncil.org/in-depth-research-reports/issue-brief/what-drives-the-divide-in-transatlantic-ai-strategy/

Build Once, Comply Twice: The EU AI Act’s Next Phase is Around the Corner | Insights, accessed November 15, 2025, https://www.velaw.com/insights/build-once-comply-twice-the-eu-ai-acts-next-phase-is-around-the-corner/

AI Act | Shaping Europe’s digital future - European Union, accessed November 15, 2025, https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

Big Tech spending on Brussels lobbying hits record high, report claims, accessed November 15, 2025, YouTube

Big Tech is lobbying EU hard over AI. Here are some facts and figures - Reddit, accessed November 15, 2025, https://www.reddit.com/r/BetterOffline/comments/1oj180u/big_tech_is_lobbying_eu_hard_over_ai_here_are/

Big Tech’s Grip: How Pressure is Reshaping Europe’s AI Rules - WebProNews, accessed November 15, 2025, https://www.webpronews.com/big-techs-grip-how-pressure-is-reshaping-europes-ai-rules/

Big Tech may win reprieve as EU mulls easing AI rules, document shows, accessed November 15, 2025, https://sundayguardianlive.com/feature/big-tech-may-win-reprieve-as-eu-mulls-easing-ai-rules-document-shows-160025/

EU Mulls Pausing Parts of AI Act Amid U.S. and Big Tech Pushback - Modern Diplomacy, accessed November 15, 2025, https://moderndiplomacy.eu/2025/11/07/eu-mulls-pausing-parts-of-ai-act-amid-u-s-and-big-tech-pushback/

What’s Driving the EU’s AI Act Shake-Up?, accessed November 15, 2025, https://www.techpolicy.press/whats-driving-the-eus-ai-act-shakeup/

Why the U.S. is Pulling Europe Sideways on AI Regulation - Galkin Law, accessed November 15, 2025, https://galkinlaw.com/us-influence-on-eu-ai-regulation/

The EU’s new AI code of practice has its critics but will be valuable for global governance, accessed November 15, 2025, https://www.chathamhouse.org/2025/08/eus-new-ai-code-practice-has-its-critics-will-be-valuable-global-governance

EU could water down AI Act amid pressure from Trump and big tech ..., accessed November 15, 2025, https://www.theguardian.com/world/2025/nov/07/european-commission-ai-artificial-intelligence-act-trump-administration-tech-business

EU AI Act: first regulation on artificial intelligence | Topics - European Parliament, accessed November 15, 2025, https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

C2PA | Verifying Media Content Sources, accessed November 15, 2025,

Content Credentials: Strengthening Multimedia Integrity in the Generative AI Era - DoD, accessed November 15, 2025, https://media.defense.gov/2025/Jan/29/2003634788/-1/-1/0/CSI-CONTENT-CREDENTIALS.PDF

C2PA NIST Response - Regulations.gov, accessed November 15, 2025, https://downloads.regulations.gov/NIST-2024-0001-0030/attachment_1.pdf

News - C2PA, accessed November 15, 2025, https://spec.c2pa.org/post/

OpenAI Joins C2PA Steering Committee, accessed November 15, 2025, https://spec.c2pa.org/post/openai_pr/

Content Credentials : C2PA Technical Specification, accessed November 15, 2025, https://spec.c2pa.org/specifications/specifications/2.2/specs/C2PA_Specification.html

C2PA Explainer :: C2PA Specifications, accessed November 15, 2025, https://spec.c2pa.org/specifications/specifications/1.4/explainer/Explainer.html

Advancing Responsible AI Innovation: A Playbook - World Economic Forum: Publications, accessed November 15, 2025, https://reports.weforum.org/docs/WEF_Advancing_Responsible_AI_Innovation_A_Playbook_2025.pdf

Responsible AI and industry standards: what you need to know - PwC, accessed November 15, 2025, https://www.pwc.com/us/en/tech-effect/ai-analytics/responsible-ai-industry-standards.html

Network architecture for global AI policy - Brookings Institution, accessed November 15, 2025, https://www.brookings.edu/articles/network-architecture-for-global-ai-policy/

A Plan for Global Engagement on AI Standards - NIST Technical Series Publications, accessed November 15, 2025, https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-5e2025.pdf

AI Standards | NIST - National Institute of Standards and Technology, accessed November 15, 2025, https://www.nist.gov/artificial-intelligence/ai-standards

Comparing the EU AI Act to Proposed AI-Related Legislation in the US, accessed November 15, 2025, https://businesslawreview.uchicago.edu/online-archive/comparing-eu-ai-act-proposed-ai-related-legislation-us

US vs EU AI Plans - A Comparative Analysis of the US and European Approaches, accessed November 15, 2025, https://dcnglobal.net/posts/blog/us-vs-eu-ai-plans-a-comparative-analysis-of-the-us-and-european-approaches

Article 5: Prohibited AI Practices | EU Artificial Intelligence Act, accessed November 15, 2025, https://artificialintelligenceact.eu/article/5/

High-level summary of the AI Act | EU Artificial Intelligence Act, accessed November 15, 2025, https://artificialintelligenceact.eu/high-level-summary/

EU AI Act Prohibited Use Cases | Harvard University Information Technology, accessed November 15, 2025, https://www.huit.harvard.edu/eu-ai-act

Key insights into AI regulations in the EU and the US: navigating the evolving landscape, accessed November 15, 2025, https://www.kennedyslaw.com/en/thought-leadership/article/2025/key-insights-into-ai-regulations-in-the-eu-and-the-us-navigating-the-evolving-landscape/

AI Regulations in 2025: US, EU, UK, Japan, China & More - Anecdotes AI, accessed November 15, 2025, https://www.anecdotes.ai/learn/ai-regulations-in-2025-us-eu-uk-japan-china-and-more

AI Watch: Global regulatory tracker - United States | White & Case LLP, accessed November 15, 2025, https://www.whitecase.com/insight-our-thinking/ai-watch-global-regulatory-tracker-united-states

Fall 2025 Regulatory Roundup: Top U.S. Privacy and AI Developments for Businesses to Track | Hinshaw & Culbertson LLP, accessed November 15, 2025, https://www.hinshawlaw.com/en/insights/privacy-cyber-and-ai-decoded-alert/fall-2025-regulatory-roundup-top-us-privacy-and-ai-developments-for-businesses-to-track

Artificial Intelligence and Productivity in Europe, WP/25/67, April 2025 - International Monetary Fund, accessed November 15, 2025, https://www.imf.org/-/media/Files/Publications/WP/2025/English/wpiea2025067-print-pdf.ashx

EU’s General-Purpose AI Obligations Are Now in Force, With New Guidance - Skadden, accessed November 15, 2025, https://www.skadden.com/insights/publications/2025/08/eus-general-purpose-ai-obligations

The Year Ahead 2025: Tech Talk — AI Regulations + Data Privacy - Jackson Lewis, accessed November 15, 2025, https://www.jacksonlewis.com/insights/year-ahead-2025-tech-talk-ai-regulations-data-privacy

Artificial Intelligence 2025 Legislation - National Conference of State Legislatures, accessed November 15, 2025, https://www.ncsl.org/technology-and-communication/artificial-intelligence-2025-legislation

Retrospective: 2025 in state data privacy law - IAPP, accessed November 15, 2025, https://iapp.org/news/a/retrospective-2025-in-state-data-privacy-law

Data Scraping, Privacy Law, and the Latest Challenge to the Generative AI Business Model, accessed November 15, 2025, https://www.afslaw.com/perspectives/privacy-counsel/data-scraping-privacy-law-and-the-latest-challenge-the-generative-ai

Training AI on personal data scraped from the web - IAPP, accessed November 15, 2025, https://iapp.org/news/a/training-ai-on-personal-data-scraped-from-the-web

Scraping and processing AI training data – key legal challenges under data protection laws, accessed November 15, 2025, https://www.taylorwessing.com/en/insights-and-events/insights/2025/02/scraping-and-processing-ai-training-data

The EU has let US tech giants run riot. Diluting our data law will only entrench their power | Johnny Ryan and Georg Riekeles | The Guardian, accessed November 15, 2025, https://www.theguardian.com/commentisfree/2025/nov/12/eu-gdpr-data-law-us-tech-giants-digital

California Finalizes Regulations Pertaining to Certain Uses of AI Tools - Lewis Rice, accessed November 15, 2025, https://www.lewisrice.com/publications/california-finalizes-regulations-pertaining-to-certain-uses-of-ai-tools

California Finalizes Regulations to Strengthen Consumers’ Privacy, accessed November 15, 2025, https://cppa.ca.gov/announcements/2025/20250923.html

Algorithmic Bias as a Core Legal Dilemma in the Age of Artificial Intelligence: Conceptual Basis and the Current State of Regulation - MDPI, accessed November 15, 2025, https://www.mdpi.com/2075-471X/14/3/41

Digitalisation, artificial intelligence and algorithmic management in the workplace: Shaping the future of work - European Parliament, accessed November 15, 2025, https://www.europarl.europa.eu/RegData/etudes/STUD/2025/774670/EPRS_STU(2025)774670_EN.pdf

Record Companies Bring Landmark Cases for Responsible AI Against Suno and Udio in Boston and New York Federal Courts, Respectively - RIAA, accessed November 15, 2025, https://www.riaa.com/record-companies-bring-landmark-cases-for-responsible-ai-againstsuno-and-udio-in-boston-and-new-york-federal-courts-respectively/

Suno argues none of the millions of tracks made on its platform ..., accessed November 15, 2025, https://www.musicbusinessworldwide.com/suno-argues-none-of-the-millions-of-tracks-made-on-its-platform-contain-anything-like-a-sample/

Could AI music be the industry’s next Napster moment? - WIPO, accessed November 15, 2025, https://www.wipo.int/en/web/wipo-magazine/articles/could-ai-music-be-the-industrys-next-napster-moment-75538

Creative Rights In AI Coalition Calls On Government To Protect Copyright As GAI Policy Develops - Music Publishers Association, accessed November 15, 2025, https://mpaonline.org.uk/news/creative-rights-in-ai-coalition-calls-on-government-to-protect-copyright-as-gai-policy-develops/

Creative Rights In AI Coalition calls on government to protect copyright as AI policy develops - Society of Authors, accessed November 15, 2025, https://societyofauthors.org/2024/12/16/creative-rights-in-ai-coalition-calls-on-government-to-protect-copyright-as-ai-policy-develops/

Action needed to protect our creative future in the age of Generative AI - Queen Mary University of London, accessed November 15, 2025, https://www.qmul.ac.uk/media/news/2025/humanities-and-social-sciences/hss/action-needed-to-protect-our-creative-future-in-the-age-of-generative-ai.html

Joint statement by a broad coalition of rightsholders active across the EU’s cultural and creative sectors regarding the AI Act implementation measures adopted by the European Commission - IFPI, accessed November 15, 2025, https://www.ifpi.org/joint-statement-by-a-broad-coalition-of-rightsholders-active-across-the-eus-cultural-and-creative-sectors-regarding-the-ai-act-implementation-measures-adopted-by-the-european-commission/

NO FAKES Act one-pager - Senator Chris Coons, accessed November 15, 2025, https://www.coons.senate.gov/imo/media/doc/no_fakes_act_one-pager.pdf

S.1367 - NO FAKES Act of 2025, 119th Congress (2025-2026) - Congress.gov, accessed November 15, 2025, https://www.congress.gov/bill/119th-congress/senate-bill/1367

The Real Costs of the NO FAKES Act - CCIA, accessed November 15, 2025, https://ccianet.org/articles/the-real-costs-of-the-no-fakes-act/

Proposed Legislation Reflects Growing Concern Over “Deep Fakes”: What Companies Need to Know - O’Melveny, accessed November 15, 2025, https://www.omm.com/insights/alerts-publications/proposed-legislation-reflects-growing-concern-over-deep-fakes-what-companies-need-to-know/

Reintroduced No FAKES Act Still Needs Revision - The Regulatory Review, accessed November 15, 2025, https://www.theregreview.org/2025/08/18/rothman-reintroduced-no-fakes-act-still-needs-revision/

AI Licensing for Authors: Who Owns the Rights and What’s a Fair Split? - The Authors Guild, accessed November 15, 2025, https://authorsguild.org/news/ai-licensing-for-authors-who-owns-the-rights-and-whats-a-fair-split/

With the rise of AI ‘slop,’ Canada’s creative sector sets sights on licensing regime, accessed November 15, 2025, https://larongenow.com/2025/11/15/with-the-rise-of-ai-slop-canadas-creative-sector-sets-sights-on-licensing-regime/

When AI-Generated Art Enters the Market, Consumers Win — and ... - Stanford Graduate School of Business, accessed November 15, 2025, https://www.gsb.stanford.edu/insights/when-ai-generated-art-enters-market-consumers-win-artists-lose

Impact of AI on art and design industry - NHSJS, accessed November 15, 2025, https://nhsjs.com/2025/impact-of-ai-on-art-and-design-industry/

Economic potential of generative AI - McKinsey, accessed November 15, 2025, https://www.mckinsey.com/capabilities/tech-and-ai/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier

Global AI in the Art Market Statistics 2025 - Artsmart.ai, accessed November 15, 2025, https://artsmart.ai/blog/ai-in-the-art-market-statistics/

As AI Colors Fashion, Copyright Remains a Gray Area | Fenwick, accessed November 15, 2025, https://whatstrending.fenwick.com/post/as-ai-colors-fashion-copyright-remains-a-gray-area

Generative AI in fashion design creation: a copyright analysis of AI-assisted designs | Journal of Intellectual Property Law & Practice | Oxford Academic, accessed November 15, 2025, https://academic.oup.com/jiplp/article/20/10/654/8232563

Artificial Intelligence and Fashion: Threat or Opportunity? - IFA Paris, accessed November 15, 2025, https://www.ifaparis.com/the-school/blog/ai-fashion-threat-opportunity

Top AI Trends for the Fashion Industry in 2025 - Medium, accessed November 15, 2025, https://medium.com/@API4AI/top-ai-trends-for-the-fashion-industry-in-2025-25c056db027a

Generative AI in fashion design complicates trademark ownership | Inside Tech Law, accessed November 15, 2025, https://www.insidetechlaw.com/blog/2024/05/generative-ai-in-fashion-design-complicates-trademark-ownership

Copyright Office Publishes Report on Copyrightability of AI-Generated Materials | Insights | Skadden, Arps, Slate, Meagher & Flom LLP, accessed November 15, 2025, https://www.skadden.com/insights/publications/2025/02/copyright-office-publishes-report

Copyright Office Releases Part 2 of Artificial Intelligence Report - U.S. Copyright Office, accessed November 15, 2025, https://www.copyright.gov/newsnet/2025/1060.html

Fashion X Generative AI: IP design protection from GenAI - Hogan Lovells, accessed November 15, 2025, https://www.hoganlovells.com/en/publications/fashion-x-generative-ai-ip-design-protection-from-genai

The European Union is still caught in an AI copyright bind - Bruegel, accessed November 15, 2025, https://www.bruegel.org/analysis/european-union-still-caught-ai-copyright-bind

Superagency in the workplace: Empowering people to unlock AI’s full potential - McKinsey, accessed November 15, 2025, https://www.mckinsey.com/capabilities/tech-and-ai/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work

Is 2025 the tipping point for AI in creative industries? - Torres Marketing, accessed November 15, 2025, https://www.torresmarketinginc.com/blog/is-2025-the-tipping-point-for-ai-in-creative-industries

How AI is changing professions like design, art, and the media - UOC, accessed November 15, 2025, https://www.uoc.edu/en/news/2025/ai-could-automate-creative-professions

How AI can empower, not replace, human creativity | World Economic Forum, accessed November 15, 2025, https://www.weforum.org/stories/2025/01/artificial-intelligence-must-serve-human-creativity-not-replace-it/

Artificial Intelligence in Creative Industries: Advances Prior to 2025 - arXiv, accessed November 15, 2025, https://arxiv.org/html/2501.02725v4