AI, Ideology, and Incompetence: The Dangerous Experiment at the Department of Government Efficiency (DOGE)

by ChatGPT-4o

The recent ProPublica exposé on the AI-powered contract review process implemented by the Department of Government Efficiency (DOGE) during Donald Trump’s second administration reveals a troubling confluence of ideological zealotry, technical incompetence, and governance by shortcut. The system, an AI tool used to “munch” (that is, recommend for cancellation) Veterans Affairs (VA) contracts, offers a disturbing case study in how generative AI, misapplied and ideologically weaponized, can be used to gut public services under the guise of efficiency.

I. The Core Problem: AI with Political Prompts and Inadequate Oversight

At the heart of the debacle is the misapplication of a general-purpose AI model tasked with identifying which of the VA’s thousands of contracts were “munchable,” a euphemism that barely masked the tool's actual function: flagging contracts deemed wasteful or ideologically incompatible for cancellation. The system, built by engineer Sahil Lavingia, was riddled with problems:

Use of outdated models and limited input data (only 10,000 characters of each contract were analyzed).

Ideologically biased prompts that targeted “soft services” such as DEI initiatives and administrative roles.

No understanding of procurement norms, government operations, or VA-specific contexts.

Hallucinated contract values and the misclassification of critical services, such as internet connectivity and ceiling lift maintenance, as expendable.

A decision rule that treated missing information as evidence for cancellation, a grave epistemic flaw in any AI decision-making system.
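To make that last flaw concrete, the Python sketch that follows contrasts two ways of handling a truncated contract. It is a deliberately simplified, hypothetical illustration, not the DOGE tool's code: a crude keyword check stands in for the model, every function name is invented, and the only detail taken from the reporting is the 10,000-character limit (assumed here to mean the first 10,000 characters). A ceiling-lift clause that falls past the cutoff is invisible to the model, and the flawed rule reads that silence as grounds to cancel.

```python
# Hypothetical illustration only: this is NOT the DOGE tool's code.
# A crude keyword check stands in for the language model's judgment.

MAX_CHARS = 10_000  # per-contract input limit reported by ProPublica


def visible_portion(contract_text: str, limit: int = MAX_CHARS) -> str:
    """Return the part of a contract a model would see if input were cut at `limit` characters."""
    return contract_text[:limit]


def mentions_patient_care(text: str) -> bool:
    """Hypothetical stand-in for the model: does the text mention patients at all?"""
    return "patient" in text.lower()


def flawed_decision(contract_text: str) -> str:
    """Treats the absence of evidence in the visible text as a reason to cancel."""
    visible = visible_portion(contract_text)
    return "keep" if mentions_patient_care(visible) else "cancel"


def safer_decision(contract_text: str) -> str:
    """Treats missing evidence as uncertainty and never cancels on its own."""
    visible = visible_portion(contract_text)
    if mentions_patient_care(visible):
        return "keep"
    if len(contract_text) > MAX_CHARS:
        # Part of the contract was never read, so nothing can be concluded from silence.
        return "human review: contract was truncated"
    return "human review: no care-related terms found"


# A contract whose care-related clause appears only after the cutoff.
contract = "Boilerplate terms. " * 600 + "Maintenance of ceiling lifts for patient transfers."
print(flawed_decision(contract))  # cancel
print(safer_decision(contract))   # human review: contract was truncated
```

The difference comes down to one branch: when the model has not seen the whole document, the safer rule treats silence as uncertainty and hands the case to a person instead of defaulting to cancellation.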

Most critically, the prompts were not just technically flawed but politically charged, reflecting a Trump agenda that seeks to dismantle a perceived “woke bureaucracy” and to expand executive control under the pretense of efficiency. In essence, the tool was guided less by competence than by ideology: it was designed to validate a predetermined outcome, namely slashing everything not directly related to bedside care, regardless of logic or necessity.

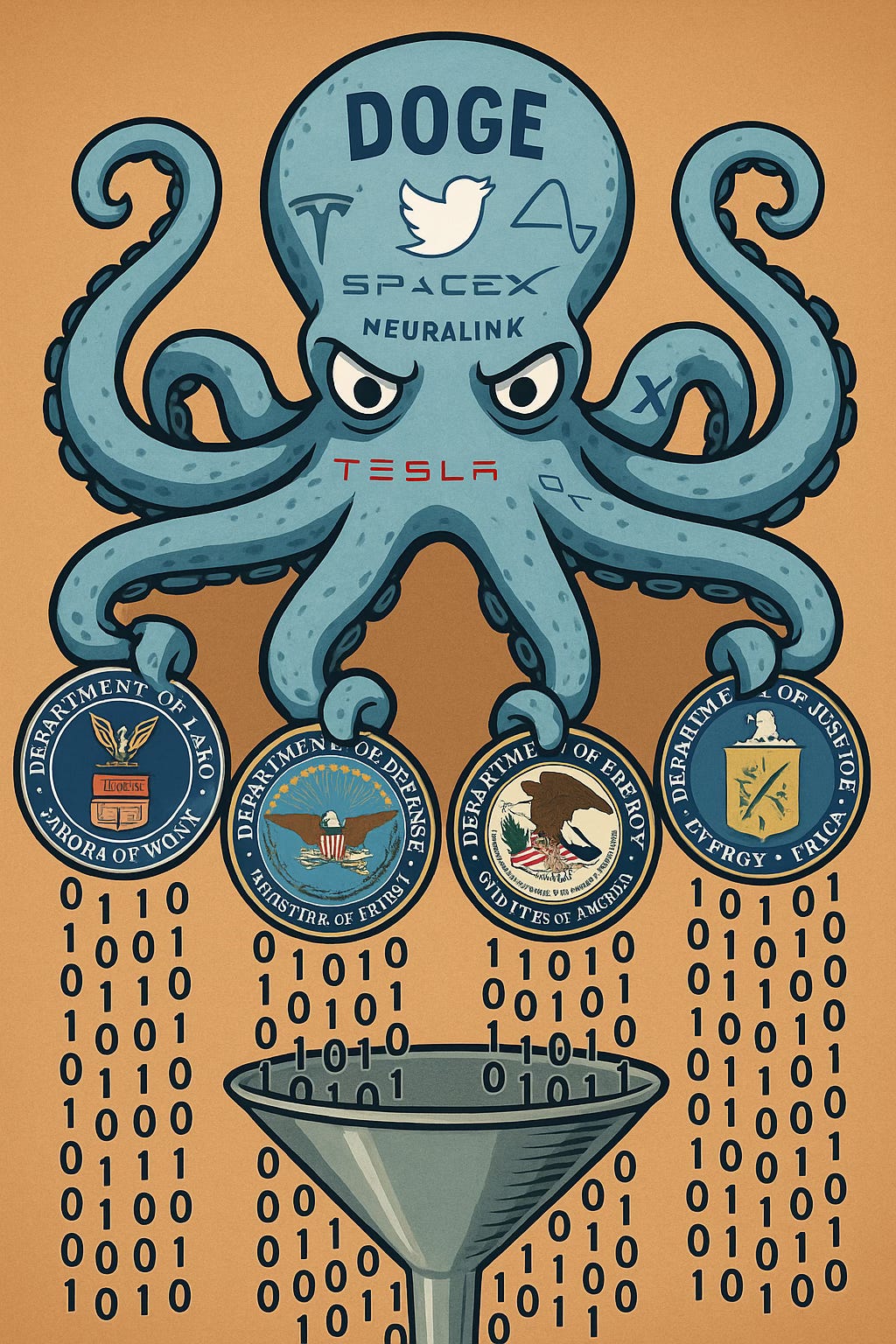

II. Broader Pattern: AI as a Tool of Authoritarian Bureaucracy

The DOGE debacle is not an isolated incident but part of a larger pattern of tech-enabled political overreach seen in the spheres of influence of both Trump and Elon Musk, the public face of DOGE. Musk, for example, has championed deregulated, “free speech absolutist” AI through xAI and X (formerly Twitter) while increasingly aligning himself with anti-DEI rhetoric, opposition to regulatory oversight, and conspiratorial discourse on public health, immigration, and institutional trust.

We are witnessing a broader ideological project in which AI is not merely a tool for automation or productivity, but a vehicle for:

Centralizing power by bypassing human expertise and due process.

Eradicating perceived ideological threats (e.g., DEI, environmental safeguards, public accountability).

Outsourcing critical decisions to systems trained on biased, incomplete, or opaque data.

Immunizing decision-makers from accountability by blaming "the algorithm."

In both Musk’s and Trump’s domains, the rhetoric of innovation and efficiency cloaks a deeper strategy of control, one that presents political domination as if it were technical inevitability.

III. Why This Is Dangerous

Erosion of Democratic Oversight: Decisions with real-life consequences — such as canceling health services for veterans — are being delegated to unverified models with no audit trail or accountability.

Targeting of Social Equity Measures: By labeling DEI and climate initiatives as “munchable,” the administration is coding political goals into technical systems, setting a precedent for AI-enabled ideological purges.

Weaponization of AI for Political Expediency: The Trump administration's 30-day mandate for reviewing all VA contracts forced hasty and reckless automation, sacrificing accuracy and fairness in favor of performative governance.

Increased Risk of AI Misapplication: As seen with DOGE, AI is being applied to domains such as public procurement, where domain expertise, legal nuance, and ethical judgment are essential and cannot be automated away.

Precedent for Other Agencies: ProPublica reports that the VA is already exploring similar AI-driven systems for disability claim processing. If DOGE’s methods spread unchecked, unaccountable AI decision-making could become normalized across the federal government.

IV. Recommendations for Congress, Regulators, and Civil Society

1. Congressional Action:

Mandate transparency and auditability for all AI used in federal agencies, especially in high-stakes areas like healthcare, veterans’ services, and immigration.

Prohibit ideologically biased prompts from being embedded in AI systems used by federal agencies.

Establish a Federal Algorithmic Accountability Office to review and certify AI systems for legality, bias, and robustness before deployment.

2. Regulatory Oversight:

The Office of Management and Budget (OMB) must revise its guidance on federal agencies' use of AI to explicitly ban generative AI in procurement or entitlement decisions unless a human reviews each outcome.

The VA Office of Inspector General should open a formal investigation into the misuse of AI in contract evaluation and its potential harms to veterans.

3. Civil Society & Watchdog Organizations:

Journalists, advocacy groups, and whistleblowers must continue exposing misuse of AI, especially when used to justify service cuts or political repression.

Veterans groups and unions should push for a moratorium on AI-driven automation in government functions that affect healthcare, benefits, or employment.

4. AI Developers & Companies:

Model providers like OpenAI, Anthropic, and Google must ensure their tools cannot be easily weaponized through malicious or politicized prompting. Ethical-use clauses in enterprise licenses should prohibit using their models for mass-scale contract cancellations, voter suppression, or surveillance programs.

Conclusion: A Warning, Not Just a Glitch

The DOGE initiative, though wrapped in the language of optimization, is ultimately a grim experiment in political control cloaked in AI. It exemplifies how techno-solutionism, when hijacked by authoritarian impulses, can strip institutions of nuance, empathy, and accountability. If Congress and regulators fail to act decisively, the future will not be AI-assisted governance — it will be governance by prompt, engineered to reflect the ideology of those in power and justified by machines that cannot reason, cannot feel, and cannot dissent.

This is not just about one AI model, or one department, or one administration. It is about whether we allow our public institutions to become testing grounds for political algorithms — and whether we’re still capable of recognizing the difference between reform and repression.