GPT-4o: These statements reflect the depth of user engagement with the "Sky" voice and the significant impact its removal had on the community, both emotionally and practically.

Question 1 of 4 for ChatGPT-4o: Please read the comments on OpenAI's forum and on Reddit’s pages and tell me what the commenters are putting forward in relation to the Sky debacle. List all their grievances.

ChatGPT-4o’s answer:

Here are the grievances related to the "Sky" voice debacle from the comments on the OpenAI forum and Reddit:

Unexpected Replacement:

Users reported that the "Sky" voice was unexpectedly replaced by "Juniper" or other voices despite being selected in settings.

Disabling of Sky Voice:

The voice of "Sky" has been disabled for all users due to undisclosed issues.

Voice Profile Issues:

Users experienced issues where the active voice profile did not match their set preferences, specifically for the "Sky" voice.

Technical Fix Attempts:

Users attempted various fixes like restarting the app, switching voices, logging out, and reinstalling the app, all with no success.

Scarlett Johansson Similarity Allegations:

There were allegations that the "Sky" voice was disabled due to its similarity to Scarlett Johansson’s voice, leading to legal concerns.

Lack of Official Communication:

OpenAI did not provide an official reason for the voice's removal, only a vague reference in a Twitter post.

Petition and User Dissatisfaction:

A petition was created to bring back the "Sky" voice, with users expressing dissatisfaction with the other available voices and stating that "Sky" was the best and most professional-sounding option.

Impact on Productivity and Usage:

Users mentioned that the removal of "Sky" significantly hindered their productivity and affected their willingness to continue using the app or maintain their subscriptions.

Desire for Custom Voices:

Some users suggested the ability to create custom voices to avoid such issues in the future.

Emotional Attachment:

Many users expressed an emotional attachment to the "Sky" voice, stating it was calming, professional, and had become the voice of ChatGPT for them.

Subscription Cancellations:

Some users threatened to cancel their subscriptions or switch to alternative services if the "Sky" voice was not reinstated.

Frustration with OpenAI's Response:

Users expressed frustration with OpenAI's handling of the situation, accusing the company of being overly cautious and not transparent enough about the reasons behind the voice's removal.

These points summarize the main grievances from the community regarding the "Sky" voice issue.

Question 2 of 4 for ChatGPT-4o: What lessons can AI makers learn from this?

ChatGPT-4o’s answer:

AI makers can learn several key lessons from the "Sky" voice debacle:

Transparency is Crucial:

Open communication about changes, especially those that significantly affect user experience, is vital. Providing clear reasons for decisions helps build trust and manage user expectations.

User Feedback is Valuable:

Listening to and addressing user feedback can prevent dissatisfaction and loss of trust. Engaging with the community and considering their preferences in decision-making can lead to better user retention and satisfaction.

Importance of Consistency:

Consistency in user experience is crucial. Abrupt changes, like replacing a widely used feature without warning, can lead to frustration and a sense of betrayal among users.

Customization and Flexibility:

Offering customization options, such as allowing users to create or choose from a broader range of voices, can help mitigate issues when specific features need to be altered or removed.

Legal and Ethical Considerations:

AI makers must be diligent in addressing potential legal and ethical concerns preemptively. This includes ensuring that all aspects of their technology comply with intellectual property laws and respecting the rights of individuals whose likenesses may be inadvertently replicated.

Impact of Emotional Connection:

Recognize that users can form strong emotional connections with AI features. The impact of these connections should be considered when making changes that could affect the user experience.

Comprehensive Testing Before Rollouts:

Thoroughly test changes and new features before rolling them out to the entire user base. Pilot programs or gradual rollouts can help identify issues and gather user feedback early.

Backup and Contingency Plans:

Have backup options and contingency plans for users who may be negatively affected by changes. For instance, if a feature must be removed, providing a suitable alternative can help ease the transition.

Community Engagement:

Actively engage with the community to gather insights and understand their needs. This can help AI makers anticipate and address potential issues proactively.

Balancing Innovation and Stability:

While innovation is important, maintaining stability and reliability in existing features is equally crucial. Changes should enhance the user experience without disrupting it.

By learning these lessons, AI makers can improve their product development processes, enhance user satisfaction, and build stronger relationships with their user communities.

Question 3 of 4 for ChatGPT-4o: What are the most remarkable or even controversial statements made in the fora?

ChatGPT-4o’s answer:

Here are some of the most remarkable or controversial statements made in the fora regarding the "Sky" voice debacle:

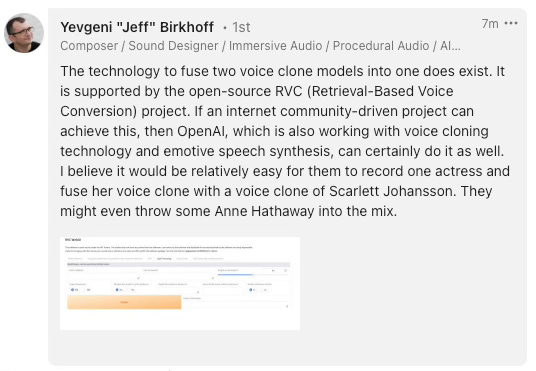

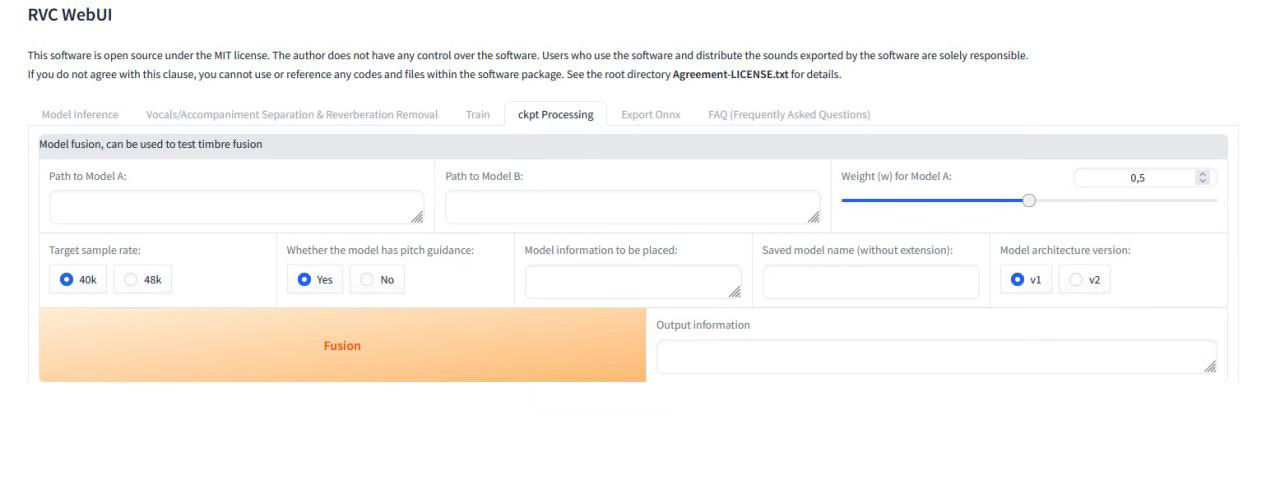

Comparison to Scarlett Johansson:

Allegations that the "Sky" voice was disabled because it sounded too similar to Scarlett Johansson's voice led to considerable controversy. Some users speculated that OpenAI tried to license Johansson’s voice, she refused, and OpenAI went ahead with a similar-sounding voice, which led to legal issues.

Emotional and Mental Health Impact:

One user described how the "Sky" voice played a crucial role in their mental health journey, providing emotional support and helping them cope with severe emotional neglect and abuse. The removal of this voice was described as a significant loss impacting their mental health.

User Threats and Dissatisfaction:

Several users expressed intentions to cancel their subscriptions and seek alternatives if the "Sky" voice was not reinstated. This level of dissatisfaction highlights the emotional attachment users had to this specific feature.

Accusations of Political Correctness:

Some comments criticized OpenAI for allegedly trying to be overly politically correct, suggesting that the decision to disable the "Sky" voice was influenced by social or political considerations rather than technical or legal reasons.

Calls for Custom Voices:

There were strong demands for the ability to create custom voices, with some users suggesting this would solve the issue of voice similarity and prevent future controversies.

Frustration with Corporate Communication:

Users were frustrated with what they perceived as vague and insufficient communication from OpenAI regarding the removal of the "Sky" voice. Many saw the term "paused" as corporate lingo for "phasing out" and were skeptical about the voice ever returning.

Professional Use and Productivity:

Some users highlighted how the "Sky" voice was particularly suited for professional use and how its removal significantly impacted their productivity, emphasizing the practical value they derived from this specific voice.

Legal and Ethical Speculations:

Speculations about legal battles and ethical considerations around the use of voices resembling real people, particularly celebrities, stirred debate about the responsibilities and challenges facing AI developers.

These statements reflect the depth of user engagement with the "Sky" voice and the significant impact its removal had on the community, both emotionally and practically. The controversy also underscores the complex interplay between user expectations, legal issues, and corporate decision-making in AI development.

Question 4 of 4 for ChatGPT-4o: What should OpenAI have done differently and what should it be doing now, considering the backlash?

ChatGPT-4o’s answer:

Considering the backlash from the "Sky" voice debacle, here are some steps OpenAI should have taken differently and actions it should consider moving forward:

What OpenAI Should Have Done Differently:

Proactive Communication:

OpenAI should have proactively communicated any potential changes to the "Sky" voice well in advance, explaining the reasons behind the decision and preparing users for the transition.

Transparency About Issues:

Being transparent about the specific reasons for disabling the "Sky" voice, whether legal, technical, or otherwise, could have mitigated speculation and frustration. Clear communication helps build trust.

User Feedback Integration:

Before making such a significant change, OpenAI should have gathered user feedback to understand the impact and explore alternatives that might be more acceptable to users.

Gradual Rollout:

Instead of an abrupt change, a gradual rollout of any new voice options or replacements would have allowed users to adjust and provide feedback that could inform further decisions.

Alternative Options:

Providing a range of alternative voices that users could choose from, including options that closely match the qualities users appreciated in "Sky," would have helped maintain user satisfaction.

What OpenAI Should Be Doing Now:

Restore User Trust:

OpenAI should issue a detailed and sincere public statement addressing the concerns raised, explaining the situation, and outlining steps being taken to address the issue.

Engage with the Community:

Actively engage with the user community through forums, surveys, and direct communication to gather feedback and understand their preferences and concerns.

Offer Customization:

Introduce more customization options, allowing users to tailor the voice features to their preferences. This could include the ability to fine-tune existing voices or create custom voices.

Provide Alternatives:

While resolving the issues with the "Sky" voice, provide high-quality alternative voices that users can switch to without losing the qualities they appreciated in "Sky."

Legal and Ethical Clarifications:

Clarify any legal or ethical considerations that led to the removal of the "Sky" voice, and outline steps being taken to ensure compliance and avoid similar issues in the future.

Improve Communication Channels:

Enhance communication channels with users, ensuring that updates and changes are communicated clearly and promptly, and that there are accessible avenues for user feedback and support.

Petition and Feedback Response:

Respond to the petition and widespread user feedback by outlining a plan to either restore the "Sky" voice or provide a comparable alternative that meets user needs.

Continuous Improvement:

Commit to continuous improvement of the voice feature, incorporating user feedback into regular updates and maintaining a dialogue with the community to ensure their needs are met.

By taking these steps, OpenAI can address the backlash effectively, restore user trust, and improve its product to better meet the needs and expectations of its users.

Actress Scarlett Johansson’s voice bears a striking resemblance to OpenAI’s now-pulled “Sky” personal assistant, according to an artificial intelligence lab analysis conducted by researchers at Arizona State University.

At NPR's request, forensic voice experts at the university’s speech lab compared the famous actress’s voice and speech patterns to Sky using AI models developed to evaluate how similar voices are to each other.

The researchers measured Sky, based on audio from demos OpenAI delivered last week, against the voices of around 600 professional actresses. They found that Johansson's voice is more similar to Sky than the voices of 98% of the other actresses.