GPT-4o: The use of AI for social media vetting, intelligence analysis, and ideological content filtering normalizes mass surveillance and digital profiling. AI-driven policies could lead to...

...an era of automated authoritarianism, where machine learning systems dictate who gets included or excluded in society, who is considered a security threat, and which ideologies are allowed to thrive.

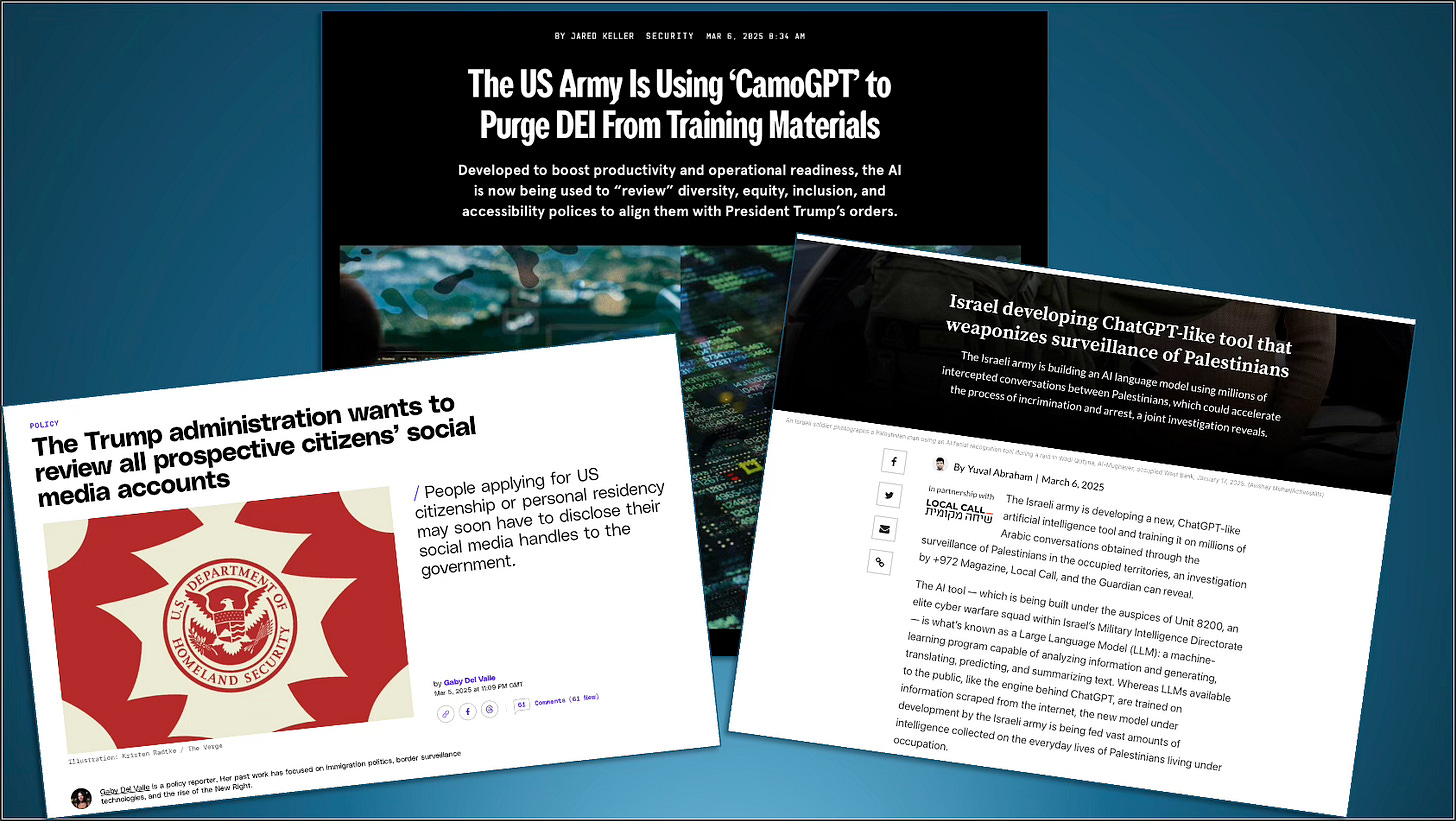

Asking ChatGPT-4o: Please read the articles “The US Army Is Using ‘CamoGPT’ to Purge DEI From Training Materials”, “Israel developing ChatGPT-like tool that weaponizes surveillance of Palestinians”, and “The Trump administration wants to review all prospective citizens’ social media accounts” and tell me whether this information represents a positive or negative development and how this can be extrapolated to future trends, products and services and what the consequences of that can be…

ChatGPT-4o’s answer:

Analysis of the Three Articles and Their Implications

Each of the three articles describes a significant development at the intersection of AI, surveillance, governance, and social control. While they focus on different geopolitical contexts, they reveal a broader trend: the increasing use of AI to automate governance, decision-making, and enforcement in ways that could have profound ethical, legal, and societal consequences.

1. The US Army’s Use of ‘CamoGPT’ to Purge DEI From Training Materials

Summary:

The US Army is using a generative AI tool, “CamoGPT,” to systematically remove Diversity, Equity, Inclusion, and Accessibility (DEIA) language from training materials.

This follows an executive order from President Trump aimed at eliminating policies deemed to promote "un-American, divisive, discriminatory, radical, extremist, and irrational theories" related to race and gender.

CamoGPT allows rapid scanning of Army materials to flag and delete DEIA-related content.

The broader goal is to ensure training materials align with the administration’s ideological position.

Implications:

Policy and Governance: This signals the growing role of AI in shaping ideological and institutional policies within governments. AI is not merely a neutral tool but is being used to enforce political mandates.

Workforce and Culture: Removing DEIA from military training could lead to reduced focus on inclusivity, potentially impacting morale and retention, especially for minority service members.

Precedent for AI Censorship: If AI can be deployed to purge "undesirable" language, similar models could be used in educational, corporate, or governmental settings to enforce ideological conformity.

Risk of Historical Revisionism: AI-driven content purges can erase parts of history, as in the attempted removal of references to the Tuskegee Airmen.

2. Israel’s Development of a ChatGPT-Like AI for Palestinian Surveillance

Summary:

Israel’s Unit 8200, an elite cyber-intelligence unit, is developing an AI system trained on intercepted Arabic conversations from Palestinians.

The AI is designed to analyze, predict, and summarize intelligence information, making it easier to process large-scale surveillance data.

The tool could automate the identification of suspects, accelerate arrests, and expand population control measures.

Similar AI-driven targeting systems, such as "Lavender," have previously been used in Gaza. Lavender generated lists of individuals for assassination based on probabilistic analysis.

Implications:

Mass Surveillance & AI Policing: AI-driven surveillance is moving from passive data collection to active decision-making, where an AI model could directly influence arrests or lethal operations.

Human Rights & Bias Risks: Training AI on intercepted conversations from a specific ethnic or national group raises concerns about racial profiling and due process.

Exportability of AI Surveillance: Once developed, these models could be marketed to other governments for law enforcement, border control, or counterterrorism, leading to wider adoption of AI-driven suppression tools.

Diminished Human Oversight: As AI plays a larger role in intelligence analysis, there is a risk of over-reliance on flawed or biased algorithms, potentially leading to wrongful arrests or extrajudicial actions.

3. The Trump Administration’s Push to Review Social Media of Prospective Citizens

Summary:

The U.S. Citizenship and Immigration Services (USCIS) plans to require green card, citizenship, asylum, and refugee applicants to submit their social media handles.

The policy is justified on the grounds of a need for "rigorous vetting and screening" to enhance national security.

Critics argue this move weaponizes digital platforms, forcing immigrants to self-censor or risk visa denial.

It expands a previous State Department policy that required visa applicants to provide social media history.

Implications:

Chilling Effect on Free Speech: Prospective citizens may avoid political activism, advocacy, or even casual expressions of dissent due to fear of government scrutiny.

Algorithmic Bias & Misinterpretation: AI tools used for vetting could misinterpret posts, humor, or cultural expressions, leading to wrongful denials or delays.

Expansion of AI-Driven Social Monitoring: If the policy is judged a success, it could set a precedent for broader AI-driven social media surveillance beyond immigration, potentially affecting citizens as well.

Broader Trends & Extrapolations

AI as a Governance and Control Mechanism

AI is being actively integrated into military, immigration, and intelligence operations to automate decisions that traditionally required human judgment.

The shift from AI as a decision-support tool to an enforcement tool reduces human oversight and increases risks of error, bias, and abuse.

Surveillance Normalization

The use of AI for social media vetting, intelligence analysis, and ideological content filtering normalizes mass surveillance and digital profiling.

AI tools that were originally designed for military use (like Unit 8200’s LLM) often end up being commercialized for policing, border control, or even workplace monitoring.

Rise of Automated Censorship and Political Influence

CamoGPT demonstrates how AI can be used to enforce ideological agendas within institutions.

Future AI systems could be used to filter educational materials, news reporting, or online content, shaping public discourse through automated censorship.

Legal and Ethical Risks of AI Enforcement

AI-driven legal enforcement raises questions of due process: who is accountable when an AI system contributes to wrongful arrests, citizenship denials, or surveillance errors?

Governments and corporations alike are deploying AI without robust accountability mechanisms, making regulatory frameworks increasingly urgent.

Consequences & Potential Future Developments

Positive Outcomes (If Regulated and Used Ethically)

Efficiency Gains: AI can help process vast amounts of data quickly, reducing bureaucratic delays in military operations, immigration, and law enforcement.

Security Improvements: AI could identify real threats faster than traditional intelligence methods, enhancing national security and military readiness.

Negative Consequences

Authoritarian AI Systems: If unchecked, AI could be used to enforce ideological conformity, silence dissent, and target vulnerable populations.

Erosion of Privacy & Civil Liberties: Expanding AI-driven surveillance could make privacy obsolete, with governments and corporations tracking individuals on an unprecedented scale.

Algorithmic Bias & Errors: AI models often amplify existing biases. Errors in intelligence gathering, immigration vetting, or predictive policing could lead to widespread injustices.

Conclusion: AI at a Crossroads

These developments highlight a growing trend where AI is shifting from a neutral tool to an instrument of power, governance, and enforcement. The long-term consequences depend on whether AI deployment remains transparent, ethical, and subject to oversight.

Without checks and balances, AI-driven policies could lead to an era of automated authoritarianism, where machine learning systems dictate who gets included or excluded in society, who is considered a security threat, and which ideologies are allowed to thrive. The challenge ahead is not just technological but deeply political and ethical.