GPT-4o: The findings suggest that LLMs primarily acquire factual knowledge during the initial pre-training phase

Fine-tuning helps the model use this knowledge more efficiently, but introducing new knowledge through fine-tuning can increase the risk of hallucinations.

Question 1 of 4 for ChatGPT-4o: Please read the paper "Does Fine-Tuning LLMs on New Knowledge Encourage Hallucinations?" and tell me what it says in easy to understand language

ChatGPT-4o’s answer:

The paper "Does Fine-Tuning LLMs on New Knowledge Encourage Hallucinations?" investigates how fine-tuning large language models (LLMs) with new information affects their tendency to generate incorrect or hallucinated responses. Here’s a simplified breakdown of the findings:

Fine-Tuning Process: LLMs, like GPT-4, are often fine-tuned with new data after their initial training. This process aims to update the model with the latest information and improve its performance on specific tasks.

Hallucinations: When LLMs generate responses that are not grounded in factual information, these are termed "hallucinations." For example, the model might invent details or provide incorrect answers.

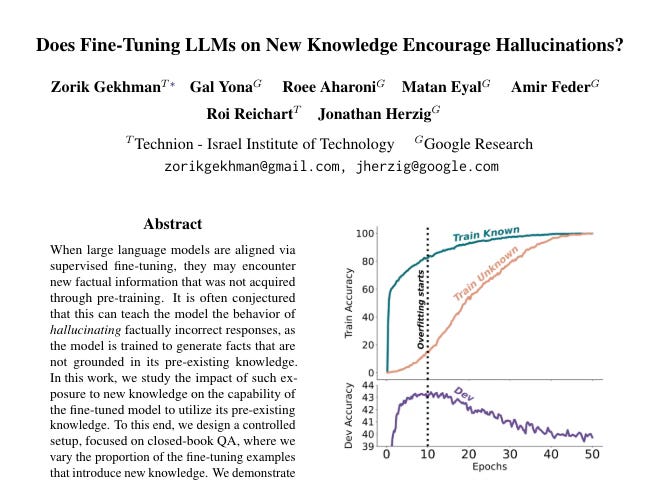

Experiment Setup: The researchers created a controlled environment focusing on closed-book question answering (QA). They varied the amount of new knowledge introduced during fine-tuning to see how it impacted the model's accuracy and hallucination rate.

Learning New Information: The study found that LLMs have difficulty quickly incorporating new factual information through fine-tuning. New facts are learned significantly slower compared to facts that align with the model's existing knowledge.

Increased Hallucinations: As the model eventually learns the new information, it also becomes more prone to hallucinate. This means that while the model can incorporate new facts, doing so increases the likelihood of generating incorrect responses.

Pre-Training vs. Fine-Tuning: The findings suggest that LLMs primarily acquire factual knowledge during the initial pre-training phase. Fine-tuning helps the model use this knowledge more efficiently, but introducing new knowledge through fine-tuning can increase the risk of hallucinations.

In summary, the paper highlights a significant trade-off: while fine-tuning can update models with new information, it also makes them more likely to produce hallucinations. This underscores the importance of careful fine-tuning and the potential need for methods to mitigate hallucinations in LLMs.

Question 2 of 4 for ChatGPT-4o: Is it correct that not many people are aware of these findings? What should AI makers be doing on the short term to address this issue?

ChatGPT-4o’s answer:

It's quite likely that not many people are deeply aware of these nuanced findings about fine-tuning large language models and their tendency to hallucinate. This is partly because such detailed research often stays within academic and specialized AI circles, where it is primarily discussed and scrutinized by experts. The general public, and even some in the broader tech community, may not be fully cognizant of these critical insights yet.

To address this fascinating and somewhat alarming issue in the short term, AI developers and researchers should take several proactive and thoughtful steps:

Enhanced Communication: It's vital to communicate these findings widely and clearly. AI makers should publish accessible summaries and share them through various channels, including blogs, social media, and industry conferences, to raise awareness among a broader audience.

Robust Evaluation: AI systems should be subjected to more rigorous and extensive evaluation processes. This means developing and implementing more comprehensive tests specifically designed to detect hallucinations and other errors in responses, ensuring the AI is thoroughly vetted before deployment.

Fine-Tuning Strategies: Innovatively refining fine-tuning techniques can help mitigate the risk of hallucinations. For instance, introducing incremental fine-tuning with frequent validation checks can ensure that the model integrates new information without compromising its accuracy.

Transparency: Developers should strive for greater transparency about the limitations and potential issues of their models. Clearly communicating the potential for hallucinations and the contexts in which they are likely to occur can help users better understand and appropriately interact with AI systems.

User Feedback Mechanisms: Implementing robust user feedback systems can be incredibly beneficial. Encouraging users to report instances of hallucinations and other errors allows for continuous improvement of the model, leveraging real-world usage to fine-tune and enhance accuracy.

Collaboration and Research: Encouraging collaborative efforts across the AI research community to explore and develop new techniques for reducing hallucinations is crucial. Sharing data, methodologies, and findings can accelerate the development of more reliable models.

In essence, addressing the hallucination issue in LLMs requires a multi-faceted approach that combines technological innovation, rigorous evaluation, open communication, and collaborative research. By taking these steps, AI makers can significantly enhance the reliability and trustworthiness of their models, ensuring they perform more accurately and effectively in real-world applications.

Question 3 of 4 for ChatGPT-4o’s answer: List the consequences of these issues not being addressed

ChatGPT-4o’s answer:

If the issues related to hallucinations in large language models (LLMs) due to fine-tuning on new knowledge are not addressed, several significant consequences could arise:

1. Misinformation Spread

Public Trust Erosion: Users may lose trust in AI systems if they frequently encounter incorrect or fabricated information. This erosion of trust can have long-term impacts on the acceptance and integration of AI in various fields (ar5iv) (Papers with Code).

Misinformation and Disinformation: Hallucinated responses can lead to the spread of misinformation and disinformation, especially if users rely on LLMs for factual information. This can have serious societal implications, including influencing public opinion and decision-making processes (Papers with Code).

2. Impact on Critical Applications

Healthcare: In domains like healthcare, incorrect information generated by AI can have severe consequences, including misdiagnoses, improper treatment recommendations, and overall patient safety risks (ar5iv).

Legal and Financial Advice: Similarly, hallucinations in legal or financial advice provided by AI systems can lead to poor decisions, financial loss, or legal complications for users who depend on accurate information.

3. Business and Operational Risks

Operational Efficiency: Businesses that rely on AI for operational efficiency, customer service, or data analysis might face disruptions if the AI generates unreliable information, leading to poor customer experiences and operational inefficiencies.

Brand Reputation: Companies deploying AI solutions that frequently produce hallucinated content risk damaging their brand reputation, which can be hard to recover from and can lead to loss of customers and market share.

4. Research and Development Challenges

Scientific Research: Inaccurate AI-generated content can mislead researchers, leading to faulty hypotheses and conclusions, which can hinder scientific progress and innovation (ar5iv).

AI Development Stagnation: If these issues are not resolved, it can slow down the overall progress in AI development, as efforts may need to be diverted to managing and mitigating hallucinations rather than advancing new capabilities.

5. Ethical and Legal Implications

Accountability and Liability: Determining accountability for the consequences of AI-generated misinformation can be complex. Companies and developers might face legal challenges and liability issues if their AI systems cause harm due to hallucinated content (Papers with Code).

Bias and Fairness: Hallucinations can exacerbate existing biases in AI systems, leading to unfair or discriminatory outcomes, which can further entrench social inequalities and ethical concerns.

6. Educational Impact

Learning and Knowledge Acquisition: Students and educators using AI for learning and information retrieval may end up with a distorted understanding of subjects if they are exposed to hallucinated content, affecting the quality of education and knowledge acquisition (Papers with Code).

Addressing these issues is crucial to ensure the responsible development and deployment of AI technologies, maintaining public trust, and realizing the full potential of AI in enhancing human capabilities and societal well-being.

Question 4 of 4 for ChatGPT-4o: Anything missing from the paper? Do you have any suggestions for future research?

ChatGPT-4o’s answer:

Missing Elements and Suggestions for Future Research

1. Real-World Application Testing

Missing Element: The paper primarily focuses on controlled setups for closed-book question answering. It may not fully capture the complexities and dynamics of real-world applications where LLMs are deployed. Suggestion: Future research should involve testing fine-tuned models in diverse real-world scenarios to better understand how hallucinations manifest in practical applications. This could include fields like customer service, healthcare, legal advice, and more.

2. Long-Term Impact Studies

Missing Element: The long-term impacts of continuous fine-tuning on hallucination tendencies are not extensively explored. Suggestion: Longitudinal studies tracking how models evolve over extended periods with regular fine-tuning would provide valuable insights into the cumulative effects on accuracy and reliability. This would help in understanding whether hallucinations increase, decrease, or stabilize over time.

3. Mitigation Techniques

Missing Element: While the paper identifies the problem, it does not delve deeply into potential solutions or mitigation strategies. Suggestion: Future research should focus on developing and testing specific techniques to mitigate hallucinations. This could include advanced fine-tuning algorithms, hybrid models combining LLMs with rule-based systems, or enhanced validation mechanisms for newly introduced knowledge.

4. Role of Human Feedback

Missing Element: The role of human feedback in correcting hallucinations and improving model accuracy is not extensively covered. Suggestion: Investigating how human-in-the-loop approaches can help in real-time correction and learning from mistakes could be a productive area of research. This would involve integrating user feedback mechanisms to dynamically update and refine the model.

5. Cross-Model Comparisons

Missing Element: The study might not compare how different LLM architectures respond to fine-tuning and hallucination risks. Suggestion: Conducting comparative studies across various LLM architectures (e.g., GPT-4, BERT, T5) to identify which models are more resilient to hallucinations when fine-tuned can provide insights into designing more robust systems.

6. Causal Analysis

Missing Element: The causal mechanisms behind why fine-tuning leads to hallucinations are not fully explored.Suggestion: Future research should aim to uncover the underlying causal factors that make fine-tuning prone to inducing hallucinations. Understanding these mechanisms can lead to more targeted interventions and improvements in model design.

7. Ethical and Policy Implications

Missing Element: The ethical and policy implications of deploying fine-tuned models that may hallucinate are not discussed in detail. Suggestion: Exploring the ethical considerations and developing policy guidelines for the responsible use of fine-tuned LLMs would be crucial. This includes ensuring transparency, accountability, and user awareness about the potential limitations of AI-generated content.

8. Dataset Diversity and Quality

Missing Element: The quality and diversity of the datasets used for fine-tuning are not emphasized. Suggestion: Future work should investigate the impact of using high-quality, diverse datasets on reducing hallucinations. Studies should also explore how dataset curation practices influence the learning process and the model's ability to generalize new knowledge accurately.

By addressing these areas, researchers can build on the findings of the paper and contribute to the development of more reliable, accurate, and trustworthy LLMs.