GPT-4o: GenAI as an approximation tool. While it is tempting to compare GenAI’s failures with human errors, this oversimplification obscures the reality of how GenAI operates

By highlighting these consequences, the assessment could encourage a more grounded and nuanced approach to developing, deploying, and relying on GenAI systems.

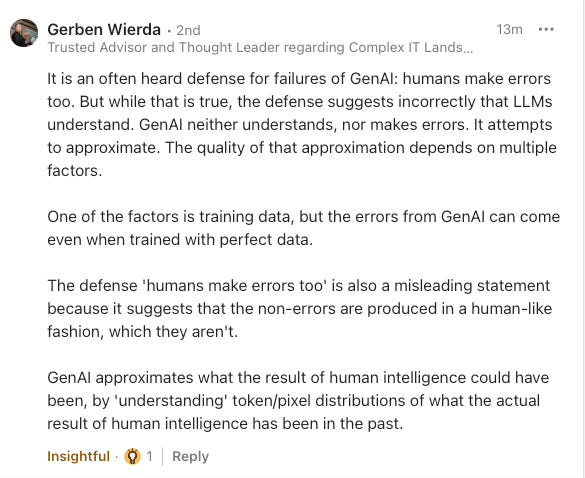

Question for AI services: Please analyze this comment on LinkedIn and explain whether you agree and list all possible consequences of this assessment.

ChatGPT-4o’s answer:

The LinkedIn comment critiques the commonly used defense, “humans make errors too,” in the context of failures by generative AI (GenAI). Here’s an analysis of the comment, followed by whether I agree with it and the possible consequences of this assessment:

Analysis of the Comment

Misrepresentation of Understanding: The comment argues that using human error as a defense is misleading because it assumes that GenAI “understands” in a human-like way. It clarifies that GenAI does not understand or make decisions as humans do; it only approximates based on statistical patterns learned from training data. This distinction is essential, as true understanding implies intent, judgment, and knowledge, which LLMs do not possess.

Nature of Errors: It emphasizes that GenAI’s errors do not stem from a lack of knowledge or oversight, as with human errors, but from limitations in its approximation process. Even if GenAI were trained with flawless data, errors could still arise due to inherent limitations in model architecture, the probabilistic nature of response generation, and generalization errors.
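The point about the probabilistic nature of response generation can be illustrated with a toy sketch. Nothing here comes from the comment or from any real model: the token names, the probabilities, and the helpers `sample_token` and `error_rate` are all hypothetical. It assumes a next-token distribution that already puts 90% of its weight on the right answer and shows that sampling from it still yields wrong completions some of the time.

```python
import random

# Hypothetical next-token distribution for a prompt such as
# "The capital of France is". The numbers are made up for illustration.
TOY_DISTRIBUTION = {"Paris": 0.90, "Lyon": 0.07, "Berlin": 0.03}


def sample_token(distribution, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(distribution)
    weights = [distribution[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def error_rate(distribution, correct_token, n_samples, seed=0):
    """Return the fraction of sampled tokens that differ from the correct one."""
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    wrong = sum(
        1
        for _ in range(n_samples)
        if sample_token(distribution, rng) != correct_token
    )
    return wrong / n_samples


rate = error_rate(TOY_DISTRIBUTION, "Paris", 10_000)
print(f"share of non-'Paris' completions: {rate:.3f}")
```

In this toy setup, roughly one completion in ten is wrong even though the distribution itself favors the right answer. Always picking the most likely token would remove this particular source of variation, though not the architectural or generalization limits the comment also mentions.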

Misleading Human Analogy: The defense, “humans make errors too,” suggests that GenAI’s non-errors are similarly human-like, which the comment refutes. It asserts that GenAI’s “correct” outputs are not generated through any understanding of the task or context. Instead, they are based on recognizing and replicating token or pixel distributions. This suggests that any similarities between human outputs and GenAI outputs are coincidental and lack the depth of human cognition.

Agreement with the Comment

I agree with the comment, as it highlights key differences between human cognition and the mechanistic, statistical nature of GenAI. While it is tempting to compare GenAI’s failures with human errors, this oversimplification obscures the reality of how GenAI operates. The claim provides a more accurate representation of GenAI as an approximation tool rather than an intelligent, understanding entity.

Possible Consequences of This Assessment

Shifting Responsibility and Trust:

Recognizing GenAI’s limitations in understanding could shift the responsibility for errors or misuse away from the model to developers and operators.

Trust in GenAI’s outputs may be tempered, leading to increased skepticism about its reliability, especially in high-stakes applications (e.g., legal or medical advice).

Enhanced Model Testing and Validation:

Developers might adopt stricter validation protocols, focusing on how well approximations hold in diverse, real-world scenarios. This could include stress-testing GenAI in areas where understanding nuances is crucial.

Adjustments in Public Perception and Marketing:

As more users understand that GenAI lacks true understanding, marketing may shift to emphasize GenAI as a tool for assistance and augmentation, rather than as an “intelligent” or “understanding” entity.

Public perception could adjust, potentially reducing overreliance on GenAI for tasks requiring deep comprehension.

Regulatory Implications:

Regulators may impose stricter guidelines on how companies market and deploy GenAI, especially in fields where misunderstandings can lead to harmful consequences.

Policies might be introduced requiring clear disclosures about the limitations of GenAI and warning against its use for decisions that hinge on understanding complex human contexts.

Increased Demand for Explainability and Transparency:

Users and stakeholders might demand better explanations of how GenAI models work, particularly for errors or unexpected results.

This could drive advancements in interpretability, making it easier to understand why GenAI made a particular “approximation” and identifying where these approximations might fail.

Impacts on AI-Assisted Human Decision-Making:

Human decision-makers may be less inclined to defer to GenAI in critical decisions, especially where understanding context and nuance is essential.

This shift could lead to a preference for human oversight, with GenAI acting as a supportive tool rather than an autonomous decision-maker.

Potential Impact on Funding and Research Priorities:

A clearer understanding of GenAI’s limitations might redirect funding toward research focused on addressing these limitations or exploring alternative AI models that better approximate human-like understanding.

By highlighting these consequences, the assessment could encourage a more grounded and nuanced approach to developing, deploying, and relying on GenAI systems. It reminds stakeholders to set realistic expectations and avoid conflating GenAI’s statistical “approximations” with genuine comprehension.

Grok’s answer:

The comment you've shared on LinkedIn raises several interesting points about the nature of errors in Generative AI (GenAI) systems, particularly Large Language Models (LLMs). Here's an analysis:

Analysis of the Comment:

Understanding vs. Approximation:

Agreement: Yes, the idea that GenAI "understands" is misleading. GenAI, including LLMs, does not possess true understanding or consciousness. Instead, it generates outputs based on patterns it has learned from data. This process is indeed more about approximation than understanding.

Consequence: Users might overestimate the AI's capabilities, leading to inappropriate trust or application in contexts where real understanding or nuanced judgment is needed.

Nature of Errors:

Agreement: The comment correctly points out that GenAI does not "make errors" in a human-like way. Errors in GenAI arise from limitations in its training data, model architecture, or the algorithms used for prediction. Even with perfect training data, errors can occur due to complexities in language or unforeseen scenarios not represented in the training set.

Consequences:

Misinterpretation: There could be an over-reliance on AI in decision-making processes where the errors could have significant impacts (e.g., medical diagnosis, legal advice).

Design Flaws: Developers might not focus enough on robustness or edge cases if they're under the impression that more data will solve all issues.

Human Error Comparison:

Agreement: Comparing AI errors to human errors can be misleading because the mechanisms behind these errors are fundamentally different. Human errors might stem from fatigue, judgment, or lack of knowledge, whereas AI errors are from pattern mismatch or data representation issues.

Consequences:

Public Perception: This might lead to a false equivalence between AI and human judgment, potentially undermining trust in AI or leading to unrealistic expectations.

Ethical and Legal Implications: There might be issues regarding accountability when AI systems "fail" in ways humans wouldn't, complicating legal frameworks around AI use.

Approximation of Human Output:

Agreement: The comment correctly identifies that GenAI attempts to mimic what a human might produce based on statistical likelihoods rather than actual reasoning or comprehension.

Consequences:

Educational and Creative Sectors: There's a risk that reliance on AI for content generation could dilute originality and critical thinking if not used judiciously.

Bias Amplification: Since AI models approximate based on existing data, they might perpetuate or even amplify biases present in the training data.

Conclusion:

The defense that "humans make errors too" oversimplifies the complex nature of AI processing and its implications. While it's true that both humans and AI can produce incorrect outputs, the origins and nature of these errors differ significantly. Understanding this difference is crucial for:

Proper Application: Ensuring AI is used in contexts where its strengths (speed, consistency) are beneficial and its weaknesses (lack of understanding, potential for bias) are not critical.

Development Focus: Encouraging developers to focus on improving the interpretability and accountability of AI systems.

Public Discourse: Fostering a more nuanced understanding in public and policy discussions regarding AI's capabilities and limitations.

The comment effectively highlights these points, urging a more sophisticated approach to evaluating and discussing AI technologies.

Claude’s answer:

This is a nuanced and important analysis that warrants careful consideration. Let me break this down step by step:

Key Arguments Analysis:

The comment challenges the "humans make errors too" defense by highlighting a fundamental difference in how errors occur

It distinguishes between understanding-based errors (human) and approximation-based outputs (AI)

It frames AI outputs as pattern-matching approximations rather than products of genuine understanding

Assessment: I would say this argument has significant merit but could benefit from some additional nuance. Here's why:

The Strong Points:

The distinction between understanding-based errors and approximation artifacts is technically accurate

The framing of AI as working with token/pixel distributions rather than semantic understanding is correct at a technical level

It rightly challenges oversimplified comparisons between human and AI errors

Areas for Additional Nuance:

The nature of "understanding" itself is philosophically complex and debated

The line between "approximation" and "understanding" may not be as clear-cut as suggested

Some cognitive science theories suggest human understanding might also involve sophisticated pattern matching

Potential Consequences of This Assessment:

Positive:

Better risk awareness in AI deployment

More precise discussion of AI capabilities

Improved framework for evaluating AI failures

More realistic expectations for AI systems

Better design of human-AI interaction systems

Negative:

Could lead to an overcautious approach to AI adoption

Might underestimate AI capabilities in some areas

Could create artificial barriers between human and machine intelligence

Risk of dismissing valuable AI contributions due to focus on limitations

Might discourage investment in AI development

Practical:

Need for more robust testing frameworks

Importance of maintaining human oversight

Value of hybrid human-AI systems

Necessity of clear error attribution methods

Requirement for better AI explanation systems

I think the author's core argument about the fundamental difference between human errors and AI approximations is valuable, but the implications might be more complex than suggested. The key is not to dismiss AI capabilities entirely, but to understand their true nature for better deployment and risk management.

The real challenge going forward will be developing frameworks that acknowledge these fundamental differences while still allowing us to effectively leverage AI capabilities. This might involve:

Developing better ways to validate AI outputs

Creating more sophisticated human-AI collaboration models

Improving our understanding of both human and machine intelligence

Designing systems that complement rather than attempt to replicate human understanding

Perplexity’s answer:

The comment on LinkedIn presents a thoughtful critique of a common defense used for generative AI (GenAI) failures. I largely agree with the assessment, as it highlights important distinctions between human cognition and GenAI functionality. Let's analyze the key points and potential consequences:

Key Points

Understanding vs. Approximation

The comment rightly emphasizes that GenAI does not truly "understand" in the way humans do. Instead, it approximates outputs based on patterns in its training data. This is a crucial distinction often overlooked in discussions about AI capabilities.

Nature of Errors

While humans make errors due to cognitive biases, lack of information, or lapses in judgment, GenAI "errors" stem from limitations in its approximation process. These are fundamentally different types of mistakes.

Factors Influencing GenAI Output

The quality of GenAI approximations depends on various factors, not just training data. This highlights the complexity of GenAI systems and the challenges in ensuring consistent, high-quality outputs.

Misleading Comparisons

The comment astutely points out that comparing GenAI errors to human errors can be misleading, as it implies a similarity in cognitive processes that doesn't exist.

Potential Consequences

Overreliance on GenAI: Misunderstanding the nature of GenAI outputs could lead to excessive trust in its capabilities, potentially resulting in critical errors in decision-making processes.

Inadequate Risk Assessment: Failing to recognize the fundamental differences between human and GenAI errors may lead to inadequate risk assessment and mitigation strategies in AI-dependent systems.

Ethical and Legal Implications: The misconception that GenAI "understands" could complicate discussions around AI accountability and liability in cases of AI-generated errors.

Stifled Innovation: Overestimating GenAI's capabilities might lead to neglecting the development of complementary technologies or human skills that could enhance AI performance.

Public Misconceptions: Widespread misunderstanding of GenAI's limitations could lead to unrealistic public expectations and potential backlash when these expectations are not met.

Educational Challenges: The need to accurately convey the nature of GenAI functionality may require significant changes in how AI and computer science are taught.

Research Focus: Recognizing the approximation nature of GenAI could shift research priorities towards improving the quality and reliability of these approximations.

Human-AI Collaboration: A clearer understanding of GenAI's limitations could lead to more effective strategies for human-AI collaboration, leveraging the strengths of both.

In conclusion, the LinkedIn comment provides a valuable perspective on the nature of GenAI functionality. It emphasizes the importance of understanding AI's limitations and avoiding misleading comparisons with human cognition. This understanding is crucial for responsible AI development, deployment, and public discourse.

Gemini’s answer:

Analysis of the LinkedIn Comment

The LinkedIn comment offers a nuanced perspective on the limitations and potential pitfalls of Generative AI (GenAI) models, particularly Large Language Models (LLMs). The core argument is that while humans make errors, GenAI systems do not, strictly speaking, make errors. Instead, they produce outputs that are approximations based on the data they've been trained on.

Key Points and Agreement:

GenAI Doesn't Understand: The comment correctly points out that GenAI models lack true understanding. They operate on statistical patterns, predicting the most likely sequence of tokens or pixels, rather than comprehending the underlying meaning.

Limitations of Training Data: While high-quality training data is crucial, it's not a panacea. Even with perfect data, GenAI can still produce inaccurate or misleading outputs due to its inherent limitations.

Misleading Comparison to Human Error: The comparison to human error is often used to excuse GenAI's shortcomings. However, human errors stem from cognitive biases, lack of information, or intentional mistakes. GenAI's "errors" are fundamentally different and arise from its statistical nature.

Possible Consequences of This Assessment:

Increased Skepticism: A deeper understanding of GenAI's limitations can lead to increased skepticism and caution when using these tools. Users may be more critical of the outputs and less likely to blindly trust them.

Enhanced Ethical Considerations: The recognition that GenAI doesn't truly understand can highlight ethical concerns, such as the potential for bias, misinformation, and harmful stereotypes to be perpetuated.

Focus on Responsible Development: Developers and researchers may be encouraged to prioritize responsible AI development, focusing on transparency, accountability, and mitigating potential harms.

New Research Directions: This assessment could stimulate research into new techniques for improving GenAI's capabilities, such as incorporating knowledge graphs or causal reasoning.

Shifting Expectations: Users may need to adjust their expectations for GenAI. Instead of viewing it as a human-like intelligence, it should be seen as a powerful tool with specific strengths and weaknesses.

In conclusion, the LinkedIn comment provides a valuable insight into the nature of GenAI. By understanding its limitations, we can use these tools more effectively and responsibly.

Interesting. If I had had the space that the models took, I could have provided the extra nuance they sometimes ask for (e.g. on the philosophical nature of understanding, or the point that human brains may technically rely on comparable approximations).