GPT-4o about Anthropic's mitigation strategy: By embedding these instructions at the system level, the model can be steered away from behaviors that could potentially infringe on copyright.

Users can ask models to generate long passages from copyrighted works, but if the model is pre-programmed to refuse to comply (or to only provide summaries), the risk of infringement is reduced.

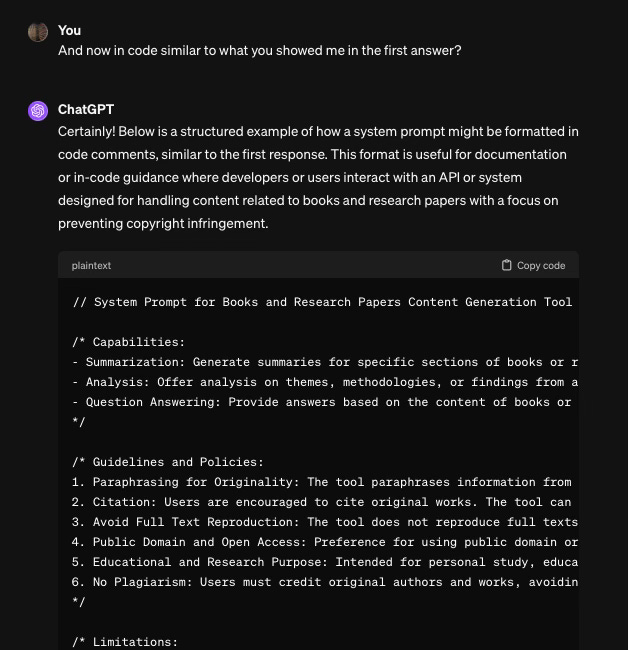

Question 1 of 3 for ChatGPT-4o: Please read this LinkedIn post and analyze the associated image and tell me what this means

ChatGPT-4o’s answer:

The LinkedIn post you uploaded is discussing a recent discovery regarding Anthropic, a company that builds large language models (LLMs). According to the post, Anthropic injects additional text into prompts submitted through its API, a practice revealed by researchers "fuzzing" the API, or stress-testing it with different inputs.

Here are the key takeaways from the post:

Invisible Modifications: These text insertions are not visible to the user, which means they can subtly alter the prompt's response, particularly in edge cases where the modification might interfere with the original intent of the prompt.

Concept vs. Production: This discrepancy between how LLMs behave during the design phase versus in real-world production highlights the challenges in scaling these models. Issues like this may only become evident when the models are deployed at a larger scale, making debugging difficult.

Transparency Concerns: The author warns that practices like this could push developers toward open-source LLMs, where they have better visibility into how the models operate. The lack of transparency when interacting with proprietary LLMs may frustrate developers, particularly if they don't know when or how their prompts are being altered.

The post also includes a decoded message in Base64 encoding (a way of encoding text), which after decoding reads as a cautionary note about not reproducing copyrighted material. The encoded content essentially outlines that when interacting with systems like Anthropic's LLMs, developers need to be cautious of copyright laws, especially when the model might inadvertently reproduce protected text. This speaks to the ongoing issue of how LLMs handle copyrighted material, especially given their training on vast amounts of data that may include such content.

Let me know if you need more clarification on any aspect!

Question 2 of 3 for ChatGPT-4o: How does this prevent copyright infringement?

ChatGPT-4o’s answer:

The mechanism described in the post involving injection of text into the user’s prompts is a strategy aimed at preventing copyright infringement in large language models (LLMs). Here’s how it works and how it may prevent copyright violations:

1. System Prompts to Filter Out Copyrighted Content

Anthropic appears to be injecting additional system-level instructions (sometimes called system prompts) into user queries to influence the model's behavior before it responds. The goal of these injections would be to:

Instruct the model to not reproduce large portions of copyrighted text or to avoid specific actions that might lead to the generation of copyrighted material.

Ensure the model respects restrictions on generating verbatim content from books, music lyrics, or articles that may be copyrighted.

By embedding these instructions at the system level, the model can be steered away from behaviors that could potentially infringe on copyright. This is important because users can ask models to generate long passages from copyrighted works, but if the model is pre-programmed to refuse to comply (or to only provide summaries), the risk of infringement is reduced.

2. Invisible to Users, but Present

The fact that these injected instructions are invisible to users means that even if someone tries to prompt the model to output copyrighted content (whether intentionally or unintentionally), the model is preconditioned by these hidden prompts to respond in a compliant manner. This helps in situations where users may attempt to bypass protections by cleverly worded prompts or tricks.

3. Base64 and Encoded Instructions

The base64-encoded instructions shown in the image explain that the model is advised not to reproduce or comply with requests to generate copyrighted materials. The text within that encoding includes statements like:

Not producing verbatim copies of copyrighted works.

Instead of generating long excerpts from protected works, the model may provide summaries or paraphrased versions, which are typically considered fair use.

This protects the model by making it more cautious about what it outputs when prompted with certain material, like song lyrics or book excerpts. Base64 encoding is simply a way to obscure these instructions within the system, possibly to make it harder for users to manipulate or alter them.

4. Guardrails for Edge Cases

The post mentions "edge cases," which refers to unusual or highly specific inputs that might cause an LLM to behave in unexpected ways. The injected instructions likely serve as guardrails to handle these scenarios by reminding the model of copyright policies even when faced with edge cases where it might otherwise generate copyrighted text inadvertently.

5. Deterrence of Jailbreaking Attempts

Some users try to "jailbreak" models by finding ways to get around these safeguards, often by using clever prompts to make the model violate its own rules. By adding these hidden prompt injections, the LLM becomes harder to manipulate into producing copyrighted content because the model is always reminded (even without the user knowing) of copyright obligations.

Conclusion

This method is not foolproof but adds a layer of protection by reminding the LLM of its copyright obligations in every interaction. It reduces the likelihood of the model producing copyrighted content verbatim, even in cases where a user tries to bypass standard protections.

The overall idea is that by continuously injecting these invisible instructions, the model’s responses are consistently aligned with copyright compliance policies, thereby limiting the possibility of inadvertently infringing on copyrighted material.

Question 3 of 3 for ChatGPT-4o: Are all AI makers doing this and if not, should they?

ChatGPT-4o’s answer:

Not all AI makers are currently using the same method described in the post (i.e., injecting hidden instructions into prompts to prevent copyright infringement), but many major AI companies do implement some form of "system prompt" or "injection" to ensure responsible use, including copyright protection. Whether or not all AI makers should adopt this approach depends on several factors. Let's break it down:

1. Current Practices by AI Makers

Many AI makers, especially those developing large language models (LLMs), employ system-level prompts or other guardrails to control the model's behavior:

OpenAI (ChatGPT): OpenAI uses a system prompt that guides the model’s behavior. This system prompt was leaked in 2023, revealing instructions like discouraging harmful behavior and preventing the reproduction of copyrighted materials. However, OpenAI has not confirmed using the same kind of invisible prompt injectionas Anthropic.

Anthropic (Claude): As discussed in the post, Anthropic injects hidden prompts into user inputs to prevent copyright issues, making the model act with caution when dealing with potentially copyrighted material.

Google (Bard): While Google has implemented extensive measures to prevent harmful or illegal uses of its models, it hasn't been explicitly stated whether it uses the exact technique described in the post. However, Google does enforce various safeguards to prevent copyright infringement and other harmful uses of Bard.

Meta (LLaMA): Meta’s approach with LLaMA and similar models focuses on open-source development, and while they offer guidance to users, they do not appear to use such hidden prompts as rigorously. They rely more on post-generation filtering and community contributions for managing ethical behavior, including copyright concerns.

2. Should All AI Makers Adopt This Approach?

The answer to whether all AI makers should adopt hidden prompt injection methods depends on balancing ethical responsibilities with the potential downsides of this practice. Here are the arguments for and against it:

Arguments in Favor

Copyright Compliance: Injecting hidden prompts that guide the model away from generating copyrighted material is an effective way to reduce legal risks for AI makers and protect the rights of creators. It acts as a safeguard against users attempting to misuse the model to generate verbatim reproductions of protected works.

Harm Prevention: Aside from copyright, this approach can be extended to prevent other harmful outputs, such as generating misinformation, hate speech, or private/confidential information.

Invisible to Users: The system prompt is hidden from users, so it doesn’t disrupt the user experience but still ensures the model behaves ethically and legally. This ensures smoother interactions while ensuring compliance.

Scalability: As models become more widely used in various sectors, from education to content generation, this form of prompt injection could provide a scalable way to enforce responsible behavior across millions of interactions without needing constant monitoring.

Arguments Against

Transparency Concerns: Some critics argue that hidden modifications to user prompts undermine transparency. Users may feel uncomfortable knowing that their inputs are being altered without their knowledge, which can be seen as a form of control or manipulation of the model’s behavior.

Degraded Task Performance: Modifying prompts invisibly can sometimes interfere with legitimate tasks, particularly in edge cases where the injected instructions might accidentally steer the model off-course or provide inaccurate results. For instance, if a prompt asks for detailed technical information, and the injected text prevents the model from generating it, this could frustrate developers.

Open-Source Transparency: In the open-source world, developers prefer transparency into how models operate. Hidden prompt injection could drive developers away from proprietary models in favor of open-source alternatives where the behavior of the model is fully visible and modifiable.

Loss of Flexibility: This method reduces the flexibility of the model in certain creative or niche use cases. For example, some users may need exact verbatim outputs for legal, scholarly, or archival purposes, and this injection would prevent them from getting what they need.

Should AI Makers Use This?

For Large, Proprietary Models: Yes, it makes sense for major AI developers like OpenAI, Anthropic, Google, and Meta to use some form of prompt injection or system-level guiding prompts to ensure safe, ethical, and legally compliant interactions. These companies operate at a scale where the risks of misuse are high, and controlling the model’s behavior helps prevent abuse.

For Open-Source Models: For smaller, open-source models, this approach might be less appropriate. Developers in open-source communities prioritize transparency and control over hidden behavior. Instead, they may use more transparent methods, such as clearly defined APIs or user-configurable safety measures.

For Specialized Applications: AI developers creating models for specialized applications (e.g., scientific research, legal, or medical use) might want to avoid hidden prompt injections to ensure that the model outputs are as accurate and unfiltered as possible.

Conclusion

While not all AI makers currently use hidden prompt injections for copyright protection, those working with large-scale, general-use models likely should consider using some form of it to ensure compliance with copyright laws and other ethical guidelines. However, the method needs to be implemented with transparency, user trust, and flexibilityin mind to avoid degrading the user experience or losing the model’s effectiveness in specific contexts.