GPT-4: This extensive list underscores the critical importance of securing API tokens and other sensitive credentials in AI and ML ecosystems to prevent a wide range of potential security challenges

If attackers had used these tokens, they could have stolen data, altered AI training data, or taken AI models. This could impact over a million users.

Question 1 of 3 for ChatGPT-4: Please analyze this article “Exposed Hugging Face API tokens offered full access to Meta's Llama 2” and tell me what it says in easy to understand language.

ChatGPT-4’s answer:

This article explains a significant security issue found in the Hugging Face platform, a popular site for AI and machine learning enthusiasts. Here's a simpler breakdown of the key points:

Exposure of API Tokens: Researchers at Lasso Security discovered over 1,500 API tokens on Hugging Face. These tokens are like special keys that allow access to accounts and services of various organizations, including big names like Meta, Microsoft, Google, and VMware.

Access to Organizations: The exposed tokens gave the researchers access to 723 organizations' accounts, including the ability to modify files for 655 of them. This included access to projects like Meta's Llama, EleutherAI's Pythia, and BigScience Workshop's Bloom.

Potential Risks: If attackers had used these tokens, they could have stolen data, altered AI training data, or taken AI models. This could impact over a million users.

Examples of Potential Harm: The article mentions how Google's Gmail spam filters could be compromised, or how network traffic identification could be sabotaged, leading to various problems.

Extent of Access: The researchers were able to modify datasets and potentially steal over 10,000 private models.

Serious Security Implications: The breach was significant because the researchers got full access to major organizations' models, which could be manipulated for malicious purposes.

How the Tokens Were Found: The researchers found the tokens by doing searches on Hugging Face and then verifying them through the platform's API.

Common Mistake in Exposing Tokens: Developers often accidentally expose these tokens by not hiding them when uploading their code to public repositories.

Preventive Measures by Platforms: Platforms like GitHub and Hugging Face have tools to alert users about exposed tokens.

Response to the Discovery: Affected organizations, upon being notified, acted quickly to revoke the exposed tokens and fix the issues.

Efforts to Improve Security: EleutherAI, for example, worked with Hugging Face and Stability AI to develop a new checkpointing format that reduces the risk of attacker modifications.

In summary, the article highlights a significant security lapse on the Hugging Face platform, emphasizing the importance of securing API tokens to prevent unauthorized access and potential misuse in the AI and machine learning sector.
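The search step described above, and the hardcoding mistake that makes it possible, can be illustrated with a small scanner that a developer might run over code before publishing it. This is a minimal sketch, not the researchers' actual method. It assumes tokens look like `hf_` followed by 30 or more letters or digits, which is an illustrative pattern rather than an official specification, and it does not include the API verification step. The function names are hypothetical.

```python
import re

# Assumption: tokens start with "hf_" followed by 30 or more letters or digits.
# The exact length and alphabet are illustrative, not an official specification.
TOKEN_PATTERN = re.compile(r"\bhf_[A-Za-z0-9]{30,}\b")


def find_candidate_tokens(text: str) -> list[str]:
    """Return the distinct token-like strings in text, in order of first appearance."""
    seen: dict[str, None] = {}
    for match in TOKEN_PATTERN.finditer(text):
        seen.setdefault(match.group(0), None)
    return list(seen)


def redact(token: str) -> str:
    """Mask a token for logs, keeping only the 3-character prefix and last four characters."""
    return token[:3] + "*" * (len(token) - 7) + token[-4:]


# A made-up token embedded in a line of code, as in the common mistake described above.
sample = 'client = Client(token="hf_' + "a1B2" * 9 + '")  # hardcoded by mistake'
found = find_candidate_tokens(sample)
print([redact(token) for token in found])
```

A check like this is only a first filter: a match is a candidate, not proof of a live credential, and a real secret scanner covers many more token formats.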

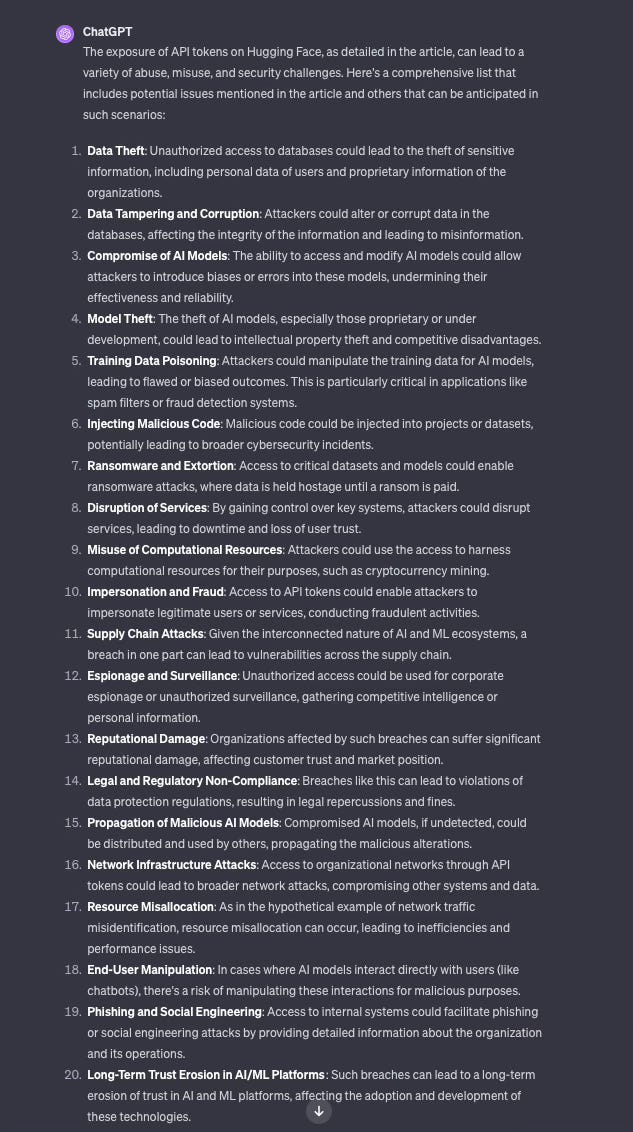

Question 2 of 3 for ChatGPT-4: List all possible abuse, misuse, and security challenges that could be caused by this lapse, whether mentioned in this article or anything else you can think of.

ChatGPT-4’s answer:

The exposure of API tokens on Hugging Face, as detailed in the article, can lead to a variety of abuse, misuse, and security challenges. Here's a comprehensive list that includes potential issues mentioned in the article and others that can be anticipated in such scenarios:

Data Theft: Unauthorized access to databases could lead to the theft of sensitive information, including personal data of users and proprietary information of the organizations.

Data Tampering and Corruption: Attackers could alter or corrupt data in the databases, affecting the integrity of the information and leading to misinformation.

Compromise of AI Models: The ability to access and modify AI models could allow attackers to introduce biases or errors into these models, undermining their effectiveness and reliability.

Model Theft: The theft of AI models, especially those proprietary or under development, could lead to intellectual property theft and competitive disadvantages.

Training Data Poisoning: Attackers could manipulate the training data for AI models, leading to flawed or biased outcomes. This is particularly critical in applications like spam filters or fraud detection systems.

Injecting Malicious Code: Malicious code could be injected into projects or datasets, potentially leading to broader cybersecurity incidents.

Ransomware and Extortion: Access to critical datasets and models could enable ransomware attacks, where data is held hostage until a ransom is paid.

Disruption of Services: By gaining control over key systems, attackers could disrupt services, leading to downtime and loss of user trust.

Misuse of Computational Resources: Attackers could use the access to harness computational resources for their own purposes, such as cryptocurrency mining.

Impersonation and Fraud: Access to API tokens could enable attackers to impersonate legitimate users or services, conducting fraudulent activities.

Supply Chain Attacks: Given the interconnected nature of AI and ML ecosystems, a breach in one part can lead to vulnerabilities across the supply chain.

Espionage and Surveillance: Unauthorized access could be used for corporate espionage or unauthorized surveillance, gathering competitive intelligence or personal information.

Reputational Damage: Organizations affected by such breaches can suffer significant reputational damage, affecting customer trust and market position.

Legal and Regulatory Non-Compliance: Breaches like this can lead to violations of data protection regulations, resulting in legal repercussions and fines.

Propagation of Malicious AI Models: Compromised AI models, if undetected, could be distributed and used by others, propagating the malicious alterations.

Network Infrastructure Attacks: Access to organizational networks through API tokens could lead to broader network attacks, compromising other systems and data.

Resource Misallocation: As in the hypothetical example of network traffic misidentification, resource misallocation can occur, leading to inefficiencies and performance issues.

End-User Manipulation: In cases where AI models interact directly with users (like chatbots), there's a risk of manipulating these interactions for malicious purposes.

Phishing and Social Engineering: Access to internal systems could facilitate phishing or social engineering attacks by providing detailed information about the organization and its operations.

Long-Term Trust Erosion in AI/ML Platforms: Such breaches can lead to a long-term erosion of trust in AI and ML platforms, affecting the adoption and development of these technologies.

This extensive list underscores the critical importance of securing API tokens and other sensitive credentials in AI and ML ecosystems to prevent a wide range of potential security challenges.
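Several of the risks above (compromised models, poisoned training data, propagation of altered models) come down to a file changing without the consumer noticing. One common mitigation is to compare a downloaded artifact against a digest recorded earlier. The sketch below shows the idea under stated assumptions: the trusted digest must come from a channel that a stolen write token cannot modify, and the file name and function names are hypothetical.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_untampered(path: Path, expected_hex: str) -> bool:
    """Return True only if the file's SHA-256 digest matches the trusted value."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())


# Demo with a stand-in file: record a digest, overwrite the file, and check again.
with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "model.bin"  # hypothetical artifact name
    artifact.write_bytes(b"original weights")
    trusted_digest = sha256_of_file(artifact)
    ok_before = is_untampered(artifact, trusted_digest)
    artifact.write_bytes(b"poisoned weights")
    ok_after = is_untampered(artifact, trusted_digest)

print(ok_before, ok_after)
```

A digest check detects modification after the digest was recorded. It does not help if the attacker altered the file before that point or can also rewrite the stored digest.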

Question 3 of 3 for ChatGPT-4: What can the AI makers do to try and prevent this from happening and do they need the help and expertise of anyone else?

ChatGPT-4’s answer:

To prevent incidents like the exposure of API tokens and enhance overall security in AI systems, AI makers can undertake a variety of measures. They may also benefit from the expertise of various professionals outside their immediate field. Here are some strategies and collaborations that can be effective:

Robust Security Protocols: Implementing strong security measures, such as encryption, multi-factor authentication, and regular security audits, is fundamental. This includes securing endpoints, networks, and databases.

Regular Security Audits and Penetration Testing: Regularly auditing systems for vulnerabilities and conducting penetration tests can help identify and mitigate potential security threats.

Token and Credential Management: Implementing strict management policies for API tokens and other credentials is essential. Tokens should never be hardcoded in code that is pushed to public repositories, and secret management tools should be used to store them securely.

Employee Training and Awareness: Educating employees about security best practices, the importance of not exposing sensitive information, and recognizing phishing attempts can significantly reduce risk.

Collaboration with Cybersecurity Experts: AI makers can benefit from the expertise of cybersecurity professionals who can provide insights into the latest threats and best practices for securing systems.

Using Secure Development Practices: Adopting secure software development life cycle (SDLC) practices can ensure that security is integrated at every stage of development.

Regular Software Updates and Patch Management: Keeping all software up to date with the latest security patches can protect against known vulnerabilities.

Incident Response Planning: Having a well-defined incident response plan ensures quick and effective action in case of a security breach.

Access Control and User Permissions: Implementing strict access controls and ensuring that users have only the necessary permissions can limit the potential damage in case of a breach.

Collaboration with Legal and Regulatory Experts: To ensure compliance with data protection and privacy laws, AI makers should consult legal experts familiar with the specific requirements in the regions where they operate.

Community Collaboration and Open Source Vigilance: Engaging with the broader AI and tech community, including open-source projects, helps AI makers stay informed about new vulnerabilities and best practices.

Third-party Security Evaluations: Regularly having third-party security firms evaluate the system can provide an unbiased assessment of its security posture.

Network Segmentation and Monitoring: Dividing network resources into distinct segments can limit the spread of a breach, and continuous monitoring can help in early detection of anomalies.

Backup and Recovery Plans: Having robust backup and recovery procedures can minimize the impact of data loss or corruption.

Engaging Ethical Hackers: Hiring ethical hackers to uncover vulnerabilities can provide practical insights into potential security flaws.

By combining these practices and leveraging the expertise of professionals in cybersecurity, legal, regulatory, and ethical hacking fields, AI makers can significantly bolster their defenses against security lapses like the exposure of API tokens.
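The token and credential management advice above can be made concrete with a small example of keeping a token out of source code. This is a minimal sketch, assuming the token is supplied through an environment variable. The variable name `MODEL_HUB_TOKEN` and the function names are hypothetical, and the bearer header is a common convention rather than any specific platform's documented API.

```python
import os


class MissingTokenError(RuntimeError):
    """Raised when the expected environment variable is absent or empty."""


def load_token(env_var: str = "MODEL_HUB_TOKEN") -> str:
    """Read the token from the environment instead of a hardcoded string."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise MissingTokenError(
            f"Set {env_var} in the environment; do not hardcode it in source files."
        )
    return token


def auth_header(token: str) -> dict[str, str]:
    """Build a bearer-style authorization header (a common convention, assumed here)."""
    return {"Authorization": f"Bearer {token}"}


# Demo only: a real deployment would have the variable injected by a secret manager
# or the CI system, never assigned in the code itself.
os.environ["MODEL_HUB_TOKEN"] = "example-token-not-real"
headers = auth_header(load_token())
print(sorted(headers))
```

Failing loudly when the variable is missing is a deliberate choice: it removes the temptation to paste a fallback token into the code, which is the mistake that exposed the tokens in the first place.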