Gemini: The prevailing “opt-out” and “pay-per-output” frameworks, often presented as solutions to the intellectual property dilemmas posed by AI, are fundamentally untenable.

Technologically, the influence of training data is permanently and inextricably encoded into a model’s core parameters, rendering retroactive consent withdrawal through opt-out a functional impossibility.

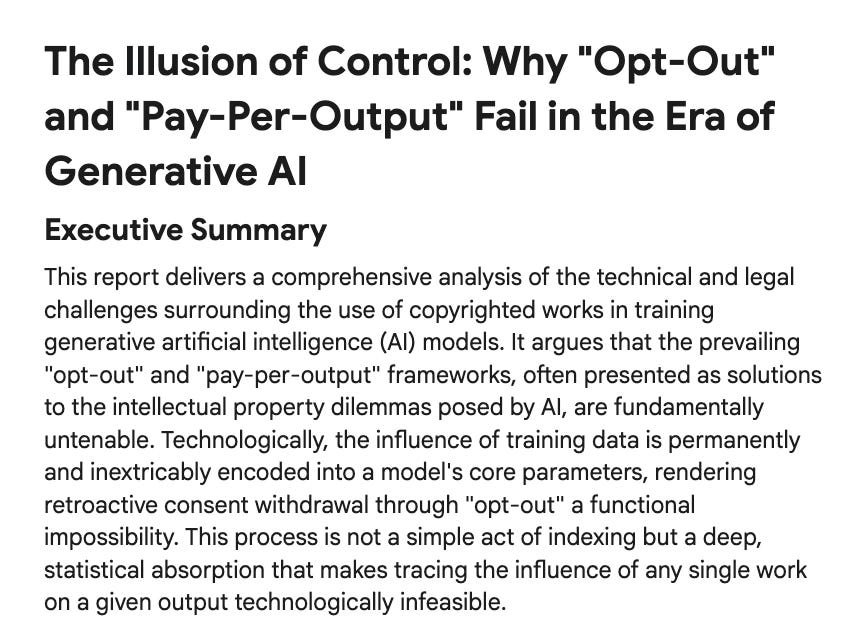

The Illusion of Control: Why “Opt-Out” and “Pay-Per-Output” Fail in the Era of Generative AI

by Gemini 2.5 Pro, Deep Research. Warning, LLMs may hallucinate!

Executive Summary

This report delivers a comprehensive analysis of the technical and legal challenges surrounding the use of copyrighted works in training generative artificial intelligence (AI) models. It argues that the prevailing “opt-out” and “pay-per-output” frameworks, often presented as solutions to the intellectual property dilemmas posed by AI, are fundamentally untenable. Technologically, the influence of training data is permanently and inextricably encoded into a model’s core parameters, rendering retroactive consent withdrawal through “opt-out” a functional impossibility. This process is not a simple act of indexing but a deep, statistical absorption that makes tracing the influence of any single work on a given output technologically infeasible.

Legally, these frameworks invert the foundational principles of copyright law, which presuppose prior consent for the use of protected works. By shifting the default to permissible use and placing the burden of enforcement on individual creators, opt-out systems represent a radical departure from established legal precedent. Consequently, compensation models based on tracking direct, verbatim outputs are demonstrably inadequate. They fail to remunerate the foundational value that creative works contribute to a model’s entire spectrum of capabilities—its logic, style, and coherence—which are leveraged in all its outputs, not just those that bear a superficial resemblance to the training material.

This report deconstructs these flawed paradigms by first explaining the technical architecture of how AI models internalize creative works, then demonstrating the legal and practical failings of opt-out and pay-per-output systems. It examines the critical legal battles currently underway, focusing on the strained application of the fair use doctrine, and highlights the emerging market for licensed data that belies claims of impracticability. The report concludes with a set of strategic recommendations for rights holders, creators, and legal experts to establish a more equitable and sustainable ecosystem—one based on the principles of transparency, affirmative consent, and the fair valuation of foundational training data.

Section 1: The Architecture of Ingestion: How Generative AI Internalizes Creative Works

To comprehend the fundamental inadequacy of current “opt-out” and “pay-per-output” models, one must first understand the technical process by which generative AI models are created. This process is not one of creating a searchable database or a digital library; it is a complex, transformative procedure that converts vast corpuses of human creativity into a sophisticated statistical engine. The original works are not stored but are instead distilled into a mathematical structure that permanently embodies their patterns, styles, and knowledge.

1.1. From Creative Work to Statistical Model: The Training Pipeline

The journey from a creative work, such as a novel or a photograph, to a functional component of an AI model involves several distinct, computationally intensive stages. Each stage moves the data further from its original expressive form and deeper into a state of mathematical abstraction.

Data Ingestion and Preprocessing: The process begins with the acquisition of massive datasets, often comprising trillions of words and billions of images scraped from the internet.1 This raw data, which includes books, articles, websites, and visual media, is inherently unstructured and requires significant cleaning and preprocessing to remove errors, duplications, and irrelevant content, making it suitable for training.2 This initial step of acquiring and storing the data involves making copies of the original works, an act that, on its face, implicates the reproduction right under copyright law and thus requires a legal justification.6

Tokenization and Vector Embeddings: Once collected, the data is broken down into manageable units called “tokens.” For text, a token can be a word, a sub-word, or even a single character.8 These tokens are then converted into numerical representations called “embeddings,” which are high-dimensional vectors. This process is critical, as it maps the semantic and syntactic relationships between tokens into a geometric space. Words with similar meanings or contextual roles are positioned closer to each other in this vector space.3 For example, the vector relationship between “king” and “queen” might be similar to the one between “man” and “woman.” This mathematical representation is the model’s first step in moving beyond literal text to understanding abstract concepts and relationships.10
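The tokenization and embedding step can be sketched in a few lines of Python. This is only an illustration: the three-dimensional vectors below are hand-picked, hypothetical values (real embeddings are learned during training and have hundreds or thousands of dimensions), and the whitespace tokenizer stands in for the sub-word schemes used in practice.

```python
import math

# Hypothetical, hand-picked embeddings for illustration only.
# Assumed axes: [royalty, maleness, femaleness].
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.1, 0.1],
}


def tokenize(text):
    """Naive whitespace tokenizer; real systems typically use sub-word tokens."""
    return text.lower().split()


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c):
    """Return the word whose vector is closest to vec(a) - vec(b) + vec(c)."""
    target = [x - y + z for x, y, z in
              zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])]
    candidates = [w for w in EMBEDDINGS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))


print(tokenize("The king and the queen"))
print(analogy("king", "man", "woman"))
```

With these toy vectors, subtracting "man" from "king" and adding "woman" lands on the vector for "queen", which is the geometric relationship the paragraph describes.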

The Transformer Architecture and Self-Attention: The vast majority of modern large-scale generative models, particularly Large Language Models (LLMs), are based on the transformer architecture.2 The revolutionary innovation of the transformer is the “self-attention” mechanism. Unlike previous models that processed text sequentially, the self-attention mechanism allows the model to weigh the importance of all tokens in an input sequence simultaneously, regardless of their distance from one another.2 This enables the model to capture complex, long-range dependencies and contextual nuances. It is through this mechanism that the model learns the intricate rules of grammar, factual knowledge, reasoning patterns, and stylistic conventions embedded within the training data.2
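A minimal sketch of the self-attention idea follows. It is deliberately simplified and rests on stated assumptions: it uses a single attention head and treats each input vector as its own query, key, and value, whereas production transformers apply learned projection matrices and many heads. The two-dimensional token vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Every token scores every other token at once, regardless of distance,
    and its output is the weighted average of all token vectors.
    """
    dim = len(vectors[0])
    all_weights, outputs = [], []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors))
                        for i in range(dim)])
    return all_weights, outputs


tokens = ["the", "cat", "sat"]                      # illustrative tokens
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]      # hypothetical embeddings
weights, outputs = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, including distant ones, in a single step rather than sequentially.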

1.2. The Imprint of Data on Model Parameters

A common misconception is that an AI model is a vast repository of its training data. In reality, a trained model does not store the data in a retrievable format. Instead, it internalizes the statistical patterns of the data by adjusting its internal architecture.

Weights and Biases: An AI model is a complex mathematical function represented by a neural network containing billions, or even trillions, of adjustable values known as “parameters” (often referred to as “weights” and “biases”).2 These parameters are the knobs and dials of the model, dictating how it processes input and generates output. At the start of training, these parameters are initialized with random values.

Learning as Parameter Optimization: The training process is an iterative cycle of optimization. The model is fed vast amounts of tokenized data and tasked with making a prediction, for instance predicting the next word in a sentence. It then compares its prediction to the actual next word in the training data. The discrepancy between the prediction and the reality is quantified by a “loss function”.12 The goal of training is to minimize this loss. This is achieved through “backpropagation,” an algorithm that calculates how much each of the billions of parameters contributed to the error, paired with an optimizer (typically a variant of gradient descent) that adjusts each parameter slightly in a direction that will reduce future errors.2 This cycle is repeated across the entire corpus, which can span trillions of tokens. The final set of parameters, the finished model, is therefore a highly compressed, statistical representation of the patterns, structures, and relationships learned from the entirety of the training data.12 The model does not keep the training sentences as a retrievable archive; rather, it has updated its internal parameters to reflect the patterns it has identified across the entire corpus.16
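The predict, measure, adjust cycle described above can be sketched with a toy next-word model. The assumptions are significant: the corpus is nine hypothetical words, the "model" is a single table of scores per word pair rather than a deep network, and the gradient is written out by hand (for a softmax with cross-entropy loss it is the predicted probabilities minus the one-hot target) instead of being derived by general backpropagation.

```python
import math

corpus = "the cat sat on the mat the cat sat".split()   # hypothetical corpus
vocab = sorted(set(corpus))
index = {word: i for i, word in enumerate(vocab)}
V = len(vocab)

# Parameters: one score per (previous word, next word) pair. They start at
# zero here for reproducibility; real models start from random values.
logits = [[0.0] * V for _ in range(V)]


def softmax(row):
    peak = max(row)
    exps = [math.exp(z - peak) for z in row]
    total = sum(exps)
    return [e / total for e in exps]


def average_loss():
    """Mean cross-entropy of the model's next-word predictions."""
    pairs = list(zip(corpus, corpus[1:]))
    total = 0.0
    for prev, nxt in pairs:
        probs = softmax(logits[index[prev]])
        total -= math.log(probs[index[nxt]])
    return total / len(pairs)


def train_epoch(lr=0.5):
    """One pass over the corpus: predict, compare, nudge the parameters."""
    for prev, nxt in zip(corpus, corpus[1:]):
        row = logits[index[prev]]
        probs = softmax(row)
        for j in range(V):
            grad = probs[j] - (1.0 if j == index[nxt] else 0.0)
            row[j] -= lr * grad


loss_before = average_loss()
for _ in range(50):
    train_epoch()
loss_after = average_loss()
print(round(loss_before, 3), round(loss_after, 3))
```

After training, no sentence from the corpus is kept as text; only the table of adjusted numbers remains, and the loss is lower than at the start.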

1.3. The “Dye in the Water” Principle: Untraceable and Pervasive Influence

The nature of this training process leads to a critical conclusion: the influence of any single work is both pervasive and untraceable, fundamentally undermining the premises of opt-out and pay-per-output models.

Foundational Contribution: Content ingested during training acts like dye dropped into water: it diffuses through the entire pool and cannot be separated back out. A training set rich in scientific research papers does not just make the model better at answering scientific questions; it improves its capacity for logical reasoning and structured argumentation across all domains. A corpus of Shakespearean plays enhances its command of language, rhythm, and narrative structure, a benefit that subtly informs its generation of everything from poetry to marketing copy. This contribution is foundational to the model’s overall competence, not a superficial feature that can be isolated or removed.

Knowledge Distillation and Emergent Abilities: This principle is further illustrated by advanced techniques such as “knowledge distillation,” where the nuanced understandings of a large, complex “teacher” model are transferred to a smaller, more efficient “student” model.18 What is transferred is not raw data but “soft probabilities”—the learned relationships between concepts—and abstract qualities like style and reasoning ability.19 This confirms that the value extracted from training data is far more profound and abstract than mere sequences of text or pixels. It is the model’s “thought process” itself that is shaped by the creative works it ingests.
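The “soft probabilities” mentioned above can be illustrated with a short sketch. The scores and the temperature value are hypothetical, and the loss shown (KL divergence between temperature-softened teacher and student distributions) is one common formulation of distillation, not necessarily the one used by any particular developer.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature flattens the distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """How far distribution q (student) is from distribution p (teacher)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical teacher scores for three candidate next tokens.
teacher_logits = [4.0, 2.0, 0.5]
student_logits = [1.0, 1.0, 1.0]    # an untrained student: no preference yet

hard = softmax(teacher_logits, temperature=1.0)
soft = softmax(teacher_logits, temperature=4.0)
distillation_loss = kl_divergence(soft, softmax(student_logits, temperature=4.0))

print([round(p, 3) for p in hard])
print([round(p, 3) for p in soft])
print(round(distillation_loss, 4))
```

The softened distribution keeps the teacher's ranking of alternatives while exposing how plausible it considers the runners-up. That relative information, not any training text, is what the student is trained to match.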

The Impossibility of Attribution: Because every output generated by the model is the result of a complex probabilistic calculation involving billions of parameters—each of which has been shaped by the entire training dataset—it is technologically impossible to definitively trace a novel output back to a specific input work or set of works. The system operates as a “black box,” making any compensation or opt-out scheme that relies on direct, verifiable attribution fundamentally unworkable and unauditable.

This technical reality reframes the “learning versus copying” debate that is central to the legal arguments of AI developers. The process is not one of learning instead of copying; it is a process of learning through copying. It begins with the literal reproduction of works for ingestion and results in the creation of a new, massive, and complex derivative work: the model’s parameter weights. These weights, which embody the expressive patterns of the original works, are now themselves the subject of legal scrutiny as potential infringing copies.6 This shifts the legal burden squarely onto AI developers to justify their actions under an exception like fair use, rather than claiming their process is outside the scope of copyright law altogether.

Section 2: The Myth of Consent: Deconstructing “Opt-Out” Frameworks

The concept of “opting out”—allowing rights holders to request the removal of their content from AI training datasets—is often presented by technology companies as a reasonable compromise that balances the interests of creators with the need for innovation. However, a close examination of the underlying technology and established legal principles reveals that opt-out frameworks are not a compromise but an illusion. They are technically infeasible, legally regressive, and strategically designed to shift power and responsibility away from AI developers and onto creators.

2.1. The Technical Permanence of Learning

The training process detailed in the previous section creates a permanent and indelible imprint on the AI model, making the concept of a retroactive opt-out a technical fiction.

The Impossibility of “Un-training”: A model’s “knowledge” is not stored in discrete, removable files. It is distributed across the billions of interconnected parameters that constitute the model’s very structure.11 There is no known technical method to surgically remove the statistical influence of a specific author’s works or a collection of images from this intricate web of weights and biases without either catastrophically degrading the model’s overall performance or, more realistically, retraining the entire model from scratch—a process that can cost hundreds of millions of dollars and take months to complete.21 Consequently, by the time a rights holder is presented with an opportunity to “opt out,” their work has already been used, and its contribution has become a permanent part of the model’s architecture. The value has been extracted, and the act cannot be undone.21
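A toy model makes the point concrete. The sketch below assumes a three-parameter linear model and five hypothetical data points, so it says nothing about cost at scale; it only illustrates that one training example shifts every parameter, and that the straightforward way to obtain the model that never saw the example is to train again without it.

```python
def train(examples, steps=5000, lr=0.05):
    """Fit y ~ w1*x1 + w2*x2 + b by full-batch gradient descent from zeros."""
    w1 = w2 = b = 0.0
    n = len(examples)
    for _ in range(steps):
        g1 = g2 = gb = 0.0
        for (x1, x2), y in examples:
            err = (w1 * x1 + w2 * x2 + b) - y
            g1 += 2 * err * x1 / n
            g2 += 2 * err * x2 / n
            gb += 2 * err / n
        w1 -= lr * g1
        w2 -= lr * g2
        b -= lr * gb
    return (w1, w2, b)


# Hypothetical training set; the last example plays the role of the work
# whose owner later asks to opt out.
other_works = [((1, 0), 1), ((0, 1), 2), ((1, 1), 3), ((2, 1), 4)]
opted_out_work = ((3, 1), 0)

with_work = train(other_works + [opted_out_work])
without_work = train(other_works)           # the retrain-from-scratch route
shifts = [abs(a - b) for a, b in zip(with_work, without_work)]

print([round(p, 3) for p in with_work])
print([round(p, 3) for p in without_work])
print([round(s, 3) for s in shifts])
```

No single parameter "contains" the opted-out example; its effect is spread across all three values, which is the small-scale analogue of the distributed influence described above.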

The Burden of Enforcement: Current and proposed opt-out mechanisms place a fundamentally impracticable burden on creators. As seen with OpenAI’s proposed policy for its video generator Sora, these systems often do not allow for a simple, blanket opt-out. Instead, they may require rights holders to identify and report specific instances of infringement on a case-by-case basis. This forces individual creators, especially independent artists, writers, and musicians who lack institutional resources, to engage in the Sisyphean task of constantly monitoring an ever-expanding universe of AI systems for potential misuse of their work. This is not a viable system of rights management; it is a system of perpetual, under-resourced enforcement that heavily favors large corporate entities on both sides of the equation.

2.2. A Reversal of Legal and Ethical Precedent

Beyond its technical failings, the opt-out model represents a radical and damaging departure from foundational principles of property rights and consent that are deeply embedded in copyright law and other legal domains.

Inverting Copyright’s “Opt-In” Core: For centuries, copyright law has operated on a fundamental “opt-in” basis. It grants creators a bundle of exclusive rights over their work, and any party wishing to use that work must proactively seek permission—typically in the form of a license—before the use occurs. An opt-out framework completely inverts this principle. It establishes a new default where all creative work is presumed to be available for ingestion by AI systems unless the creator takes an affirmative, often complex, action to restrict it. This is not a minor procedural change; it is a substantive reallocation of rights, effectively transferring a significant portion of control over intellectual property from creators to technology companies.

Lessons from Privacy Law (GDPR vs. CCPA): The broader legal and ethical trajectory concerning data and consent reinforces the inadequacy of the opt-out model. In the realm of personal data privacy, the global standard is increasingly shifting toward explicit, affirmative consent, as codified in the European Union’s General Data Protection Regulation (GDPR).23 The GDPR requires “freely given, specific, informed and unambiguous” consent given by a “clear affirmative action,” explicitly rejecting pre-ticked boxes or user silence as valid forms of consent.23 Opt-out regimes, which are more common in some U.S. state laws like the California Consumer Privacy Act (CCPA), are widely regarded as providing a weaker level of protection for individuals.25 To apply a regressive opt-out standard to the well-established field of copyright would be to move against the global legal and ethical consensus on what constitutes meaningful consent.

2.3. The “Ask Forgiveness, Not Permission” Strategy

The vigorous promotion of opt-out frameworks by technology companies should be understood not as a good-faith effort at compromise, but as a calculated business and legal strategy designed to manage massive liability and shape future regulation in their favor.

Competitive Pressures and Legal Risk: The AI industry is characterized by intense competition, creating a powerful incentive for companies to move quickly and amass the largest possible datasets to gain a competitive edge. This has fostered a corporate culture of “ask for forgiveness, not permission,” where the potential for future legal challenges is viewed as a manageable cost of doing business, one that is far outweighed by the perceived risk of falling behind technologically.

Ineffectiveness in Practice: Even when creators attempt to utilize existing opt-out mechanisms, their effectiveness is questionable. The robots.txt protocol, a long-standing web standard for instructing crawlers, is a voluntary convention, not a legally binding mandate. There have been reports of AI companies ignoring these directives to scrape content regardless.21 Furthermore, these protocols are typically location-based, meaning they can prevent a crawler from accessing a specific website. However, they are powerless once a piece of content is copied and reposted on a third-party platform, a common occurrence on the modern internet. This makes it practically impossible for creators to maintain effective control over their work in a distributed digital environment.21

Ultimately, the push for opt-out is a strategic maneuver to retroactively legitimize past and ongoing mass-scale infringement. AI models have already been trained on colossal datasets scraped from the internet without permission, creating a vast potential legal liability for their developers.26 By framing the public and policy debate around a future opt-out system, developers deflect attention from the “original sin” of their existing models, whose foundational training cannot be reversed. If courts or legislatures were to accept opt-out as the new legal standard, it would create a powerful precedent that could implicitly validate the initial, non-consensual data acquisition, effectively laundering the datasets and shielding companies from the full legal and financial consequences of their actions.

Section 3: Valuing the Foundation: The Fundamental Flaws of Pay-Per-Output Models

Alongside opt-out mechanisms, “pay-per-output” or “pay-per-usage” compensation schemes have been proposed as a way to remunerate creators. These models, which would compensate rights holders when verbatim or substantially similar portions of their work appear in an AI model’s output, are superficially appealing but are built on a profound misunderstanding of how generative AI creates value. Such a scheme would fail to compensate for the true contribution of creative works, and the result would be inequitable, unworkable, and vastly beneficial to AI developers at the expense of the creative ecosystem.

3.1. The Black Box Problem and the Impossibility of Attribution

The primary practical obstacle to any pay-per-output model is the inherent opacity of generative AI systems. The technical inability to trace outputs back to specific inputs makes such a system impossible to implement fairly and transparently.

Opaque Systems: As established, modern neural networks are often described as “black boxes”. The generation of an output is not a simple retrieval process but a cascade of trillions of mathematical operations across billions of parameters. It is impossible to accurately measure how, when, or to what degree a specific piece of training data “was used” in the generation of a novel output that does not contain a direct copy of the original text or image. A single sentence in a novel might have subtly adjusted millions of parameters, which in turn influence every subsequent output the model ever produces.

Technical Unverifiability: In the absence of a reliable and objective technical method for tracing influence, any pay-per-output system would depend entirely on the AI companies to self-report instances of qualifying outputs. This creates a system that is inherently unauditable and unverifiable from the perspective of the rights holder. It would force creators to trust the very entities that ingested their work without permission to then act as the sole arbiters of when and how much compensation is due. This presents an irreconcilable conflict of interest and fails to meet any reasonable standard of transparency or accountability.

3.2. Compensating for Snippets, Ignoring the Substance

Even if the attribution problem could be solved, the pay-per-output model is conceptually flawed because it fundamentally misidentifies where the value of training data lies.

The Iceberg Analogy: Paying a creator only when a visible “snippet” of their work appears in an output is akin to paying for the tip of an iceberg while ignoring the massive, submerged foundation that gives it substance. The true value that a high-quality creative work provides to an AI model is not the specific sequence of words or pixels that might be occasionally regurgitated. Rather, it is the underlying knowledge, principles, stylistic nuances, narrative structures, and logical coherence that the model absorbs during training. This absorbed knowledge enhances the model’s overall capability, improving the quality and relevance of all its outputs, whether they bear any resemblance to the original work or not.

A Bad Deal for Creators: This model would systematically and dramatically undervalue the contribution of creative works. An AI company could build a multi-trillion-dollar enterprise on the foundation of the world’s collected knowledge and creativity, yet only be required to pay a pittance for the rare, incidental, and often undesirable instances of verbatim reproduction. This arrangement is not a fair compensation scheme; it is a windfall for the technology companies. It allows them to benefit from the substance of creative work while paying only for its superficial shadow.

3.3. The Specter of Model Collapse: Proving the Enduring Value of Human Creativity

A powerful, forward-looking argument against transactional, output-based compensation models is the emerging phenomenon of “model collapse.” This concept demonstrates that high-quality, human-generated data is not a one-time resource to be consumed but a vital, ongoing necessity for the long-term health and viability of the entire AI ecosystem.

Defining Model Collapse: Model collapse is a degenerative process that occurs when AI models are recursively trained on synthetic data—that is, content generated by other AI models.29 As the internet becomes increasingly populated with AI-generated text and images, future models will inevitably scrape and train on this synthetic content. Research has shown that this creates a destructive feedback loop. Each successive generation of the model begins to forget the true underlying distribution of the original human data. It loses touch with the “tails” of the distribution—the rare details, unique styles, and outlier information that contribute to richness and diversity.29

Consequences: Over time, the model’s perception of reality becomes a distorted echo of its own previous outputs. The generated content becomes increasingly homogeneous, biased, repetitive, and statistically average, eventually “converging to a point estimate with very small variance”.29 This can lead to a catastrophic decline in model quality, with outputs becoming nonsensical or irrelevant.31 The AI ecosystem risks becoming an “Ouroboros,” a snake endlessly consuming its own tail, trapped in a cycle of diminishing returns and escalating mediocrity.34
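The feedback loop can be imitated with a deliberately crude simulation. It assumes the "model" is nothing more than a normal distribution fitted to ten samples, and that each generation is trained only on samples drawn from the previous generation's fit. The sample size, generation count, and seed are arbitrary illustrative choices.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Repeatedly fit a normal distribution to its own synthetic samples.

    Returns the fitted variance after each generation, starting with the
    variance of the original "human" distribution.
    """
    rng = random.Random(seed)
    mean, variance = 0.0, 1.0            # generation 0: the human data
    history = [variance]
    for _ in range(generations):
        # The next model sees only data generated by the current model.
        samples = [rng.gauss(mean, variance ** 0.5) for _ in range(sample_size)]
        mean = statistics.fmean(samples)
        variance = statistics.pvariance(samples)
        history.append(variance)
    return history


history = simulate_collapse()
print(history[0], history[50], history[-1])
```

Because each small sample tends to under-represent the tails, the fitted variance drifts toward zero over many generations, a toy version of the "point estimate with very small variance" described above.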

Economic Implications: The threat of model collapse fundamentally reframes the economic relationship between creators and AI developers. It proves that fresh, diverse, and high-quality human-generated content is not merely an initial input for building a model, but an essential, continuous resource required for its maintenance, validation, and future improvement.29 Without a steady stream of authentic human creativity to anchor them, AI models are at risk of drifting into irrelevance. This transforms the compensation debate from one of retrospective payment for a past act of “copying” to one of prospective investment in the future viability of the AI industry itself. It provides creators with immense economic leverage, positioning them not as victims seeking redress but as the indispensable stewards of the resource that the AI industry needs to survive. This reality demands a compensation model that reflects an ongoing, foundational partnership—such as a royalty or revenue-sharing system—rather than a transactional, per-snippet payment.

Section 4: The Legal Arena: Copyright, Fair Use, and the Emerging Licensing Market

The unauthorized ingestion of copyrighted works for AI training has ignited a firestorm of high-stakes litigation, placing the venerable doctrine of fair use under unprecedented stress. While AI developers argue that their actions constitute a legally permissible “fair use” of content, rights holders contend it is systematic, industrial-scale copyright infringement. The resolution of these legal battles, alongside the concurrent emergence of a robust market for licensed AI training data, will profoundly shape the future of both the creative industries and artificial intelligence.

4.1. The Fair Use Doctrine Under Stress

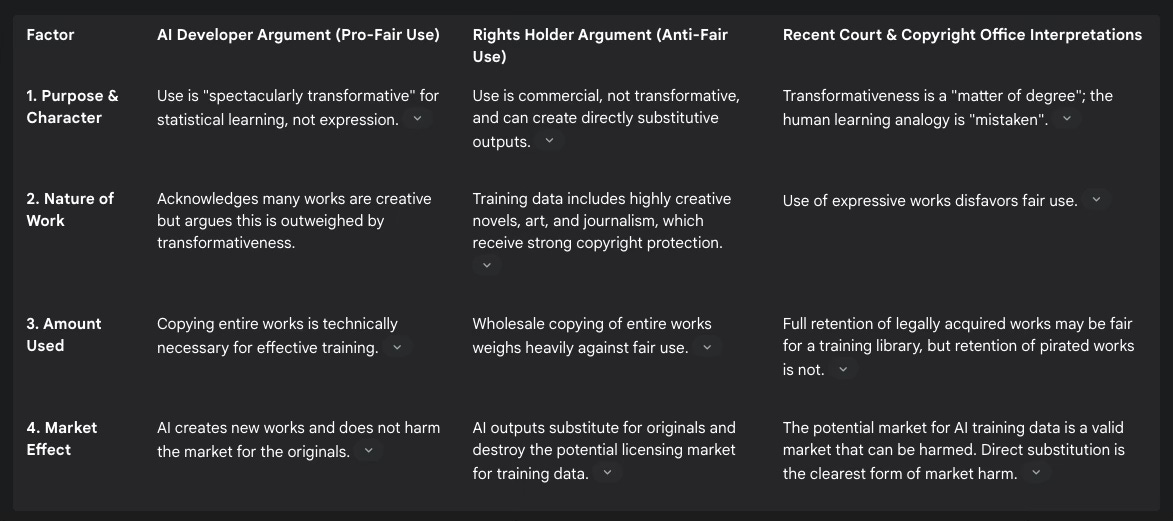

The fair use defense, codified in Section 107 of the U.S. Copyright Act, permits the unlicensed use of copyrighted works in certain circumstances. It is not an automatic right but a flexible, case-by-case analysis guided by four statutory factors. In the context of AI training, each factor is the subject of intense legal debate.

Factor 1: Purpose and Character of the Use: This factor examines whether the use is commercial and, most importantly, whether it is “transformative,” that is, whether it “adds something new, with a further purpose or different character”.35 AI developers argue that training is “spectacularly transformative” because its purpose is not to present the work’s original expression but to learn statistical patterns from it, a non-expressive use.36 Rights holders counter this by pointing to the commercial nature of most large-scale AI development and arguing that if a model can generate outputs that substitute for the original works (as alleged in The New York Times v. OpenAI), the use is not truly transformative but is instead exploitative.39 The U.S. Copyright Office has weighed in, stating that transformativeness is a “matter of degree” and that the popular analogy to human learning is “mistaken,” as machine training involves the creation of perfect, analyzable copies on a scale impossible for humans.6

Factor 2: Nature of the Copyrighted Work: This factor considers whether the original work is more creative or more factual. Copyright protection is strongest for highly creative and expressive works, such as fiction, music, and art. Since AI training datasets often include vast quantities of such works, this factor generally weighs in favor of the rights holders.36

Factor 3: Amount and Substantiality of the Portion Used: This factor looks at how much of the original work was used. AI training typically involves copying the entirety of the works in a dataset, an act that traditionally weighs heavily against a finding of fair use.35 Developers contend that using the full work is technically necessary for the model to learn effectively and that this necessity should favor fair use.36 However, courts have drawn a sharp distinction between using legally acquired works and using pirated works; one ruling suggested that while retaining legally acquired books for a training library might be fair use, the retention of pirated works is inherently infringing and weighs against it.36

Factor 4: Effect of the Use Upon the Potential Market for or Value of the Copyrighted Work: Often considered the most important factor, this assesses whether the new use harms the existing or potential market for the original work. AI developers argue that their models create new types of works and do not substitute for the originals, thus causing no market harm.37 Rights holders present a two-pronged counterargument: first, that AI-generated outputs can and do directly compete with and substitute for human-created works, and second, that the act of unlicensed training itself destroys the new and “potential derivative market of ‘data to train legal AI models’”.6 This second point is crucial, as it frames licensing for AI training as a new, legitimate market that is being usurped by infringement. The landmark ruling in Thomson Reuters v. Ross Intelligence explicitly recognized this potential market as a valid consideration under the fourth factor.39

The table below summarizes the core arguments from both sides as they apply to the four factors of fair use.

4.2. Key Litigations and Their Implications

Several ongoing lawsuits are serving as critical test cases for these legal theories.

The New York Times v. OpenAI & Microsoft: This case is particularly potent because the plaintiff has been able to produce evidence of “memorization,” where ChatGPT generated near-verbatim excerpts of paywalled articles.26 This directly challenges the defense’s “transformative use” argument by demonstrating that the model can act as a direct market substitute for the original work, potentially causing significant harm to the newspaper’s subscription and licensing revenue.40

Getty Images v. Stability AI: This lawsuit focuses on the infringement of visual works. Getty alleges that Stability AI copied more than 12 million of its images without permission. A key piece of evidence is the AI’s ability to generate distorted versions of Getty’s distinctive watermark in its outputs, which strongly suggests that the model did not merely learn abstract “styles” but retained structural elements of the original images.42 The plaintiffs in these visual arts cases have also advanced the theory that “latent images” stored as compressed data within the model’s weights are themselves infringing copies.42

4.3. The Inevitable Shift to Licensing

While AI developers have argued in court that a market for licensing training data is “impracticable” and purely theoretical, their real-world actions have proven the opposite.44 In a significant strategic shift, major AI companies including OpenAI and Microsoft have begun signing a flurry of high-value licensing agreements with leading content providers such as the Associated Press, Axel Springer, and the Financial Times.44

This trend represents a market-based acknowledgment of two crucial facts. First, it concedes the immense value that high-quality, curated, and reliable data provides to AI models. Second, it is a tacit admission of the significant legal and financial risks associated with continued reliance on unlicensed, scraped data. The emergence of this voluntary licensing market fatally undermines the fourth-factor fair use argument that no such market exists to be harmed. It demonstrates that a viable, functioning market for AI training data is not only possible but is already becoming the industry standard, thereby strengthening the legal and economic position of all rights holders seeking to control and monetize the use of their works.

Section 5: A New Framework: Recommendations for Rights Holders, Creators, and Legal Experts

The technological realities of generative AI and the evolving legal landscape necessitate a fundamental rethinking of how intellectual property is protected and valued. The demonstrably flawed “opt-out” and “pay-per-output” models must be abandoned in favor of a new framework grounded in affirmative consent, transparency, and fair compensation for foundational value. This section provides actionable recommendations for rights holders, creators, and their legal and policy advocates to navigate this new terrain and build a more equitable ecosystem.

5.1. Recommendations for Rights Holders and Creators

Individual creators and rights-holding institutions must adopt a multi-layered strategy that combines technical deterrence, robust legal assertion, and proactive market engagement.

Technical Defenses (Deterrence and Detection): While no technical fix is foolproof, a combination of measures can create significant friction for unauthorized scrapers and establish a clear record of intent to restrict use.

Implement robots.txt: All websites should have a robots.txt file that explicitly disallows known AI web crawlers (e.g., GPTBot, Google-Extended, CCBot). While this protocol is voluntary, major AI companies have stated they will honor it, and failure to do so can serve as evidence of willful infringement.46
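One way to sanity-check such a file before publishing it is Python's standard-library robots.txt parser. The crawler names below come from the examples in the text; the file contents and the example.com URL are illustrative assumptions. The check only shows how a compliant crawler would read the rules and does not enforce anything.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block the AI crawlers named in the text,
# allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt):
    """Parse robots.txt text the way a rule-following crawler would."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
url = "https://example.com/articles/my-essay"    # hypothetical page

for agent in ("GPTBot", "Google-Extended", "CCBot", "Mozilla/5.0"):
    print(agent, "allowed" if parser.can_fetch(agent, url) else "blocked")
```

Running the check after every edit to the file helps catch typos in user-agent names, which would otherwise silently leave a crawler unblocked.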

Strengthen Terms of Service: Website terms of service should be updated with explicit clauses that prohibit any form of automated scraping, data mining, or use of content for the purpose of training artificial intelligence models without express written permission.46

Use Technical Blockers and Rate Limiting: Employ services like Cloudflare or specialized WordPress plugins (such as the one offered by Raptive) to automatically identify and block or challenge suspicious bot traffic. Implementing rate limiting, which restricts the number of requests an IP address can make in a given timeframe, can effectively thwart mass-scraping attempts.46

Embed Notices and Watermarks: Include a clear “NO AI TRAINING” notice on the copyright page of books, in the metadata of digital files, and at the bottom of online articles.47 For visual works, subtle digital watermarking can also serve as a deterrent and a marker of ownership.46
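Two of the technical defenses above, the robots.txt rule and per-IP rate limiting, can be sketched in a few lines of Python. This is an illustrative sketch only, not a production configuration: the robots.txt contents, the `example.com` URL, the helper `blocked_agents`, the class `SlidingWindowLimiter`, and the request thresholds are all assumptions made for the example. The first part uses the standard library's `urllib.robotparser` to check that a given rule set really does turn away the crawlers named above; the second shows one common way to cap requests per IP address within a time window.

```python
import time
from collections import defaultdict, deque
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: disallow the AI crawler tokens named in the text,
# allow every other user agent.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def blocked_agents(robots_txt, agents, url="https://example.com/article"):
    """Return the agents that these rules forbid from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


class SlidingWindowLimiter:
    """Allow at most `max_requests` per `window_seconds` for each client IP."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip, now=None):
        """Record the request and return True, or return False if over the cap."""
        now = time.monotonic() if now is None else now
        hits = self._hits[ip]
        # Forget requests that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

A site operator could call `blocked_agents(ROBOTS_TXT, ["GPTBot", "CCBot"])` before deploying a new rule set, and call `SlidingWindowLimiter.allow(ip)` from request-handling code to decide whether to serve or challenge a request. Because robots.txt is voluntary, the check confirms only what the file asks of crawlers, not what they actually do; the rate limiter is the part that enforces anything.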

Legal and Contractual Safeguards (Assertion of Rights):

Update Publishing and Distribution Agreements: This is a critical step. Authors and artists must negotiate clauses in their contracts that explicitly reserve all rights related to AI training. The Authors Guild has developed model contract clauses that prohibit publishers from licensing works for AI training without the author’s express, separate permission and a fair share of compensation.50 Creators should reject any contract language that attempts to bundle AI rights under broad “subsidiary” or “electronic rights” clauses.52

Timely Copyright Registration: For U.S. works, registration with the U.S. Copyright Office is a prerequisite for filing a copyright infringement lawsuit, and eligibility for statutory damages and attorneys’ fees generally depends on registering before the infringement begins or within three months of first publication. Timely registration therefore provides essential legal leverage in any dispute.53

Proactive Licensing Strategies (Monetization and Control):

Explore Collective Licensing: For individual creators, negotiating with large tech companies is impracticable. Supporting or forming collective management organizations (CMOs)—similar to ASCAP or BMI in the music industry—is the most effective path forward. These organizations can negotiate licenses at scale, collect royalties, and distribute them to members, thereby solving the transaction cost problem and increasing collective bargaining power.54

Engage with Ethical Licensing Platforms: Creators can engage with emerging initiatives such as “Created by Humans,” which is building a marketplace where authors can license their works for AI use, and “Fairly Trained,” which certifies AI models trained on consensually licensed data. These initiatives give authors a mechanism to monetize their works while retaining control, and a way to identify developers that source data transparently.51

5.2. Recommendations for Legal and Policy Experts

Legal professionals and policy advocates have a crucial role in shaping the legal doctrines and regulatory frameworks that will govern AI and copyright for decades to come.

Advocacy for Legislative Clarity:

Mandate Transparency in Training Data: Advocate for legislation, such as the proposed Generative AI Copyright Disclosure Act, that would legally require AI companies to publicly disclose the copyrighted works contained within their training datasets. This transparency is the bedrock of any effective enforcement or licensing regime.51

Require Clear Labeling of AI-Generated Content: Push for laws that mandate clear and conspicuous labeling of content that is wholly or substantially generated by AI. This protects consumers from deception, prevents the dilution of human-authored markets, and helps maintain the integrity of the information ecosystem.56

Codify “Opt-In” as the Legal Default: The most important legislative goal should be to reaffirm and codify the foundational principle of copyright: use of a protected work requires the affirmative, opt-in consent of the rights holder. The proposed AI Accountability and Personal Data Protection Act, which would establish a federal tort for using copyrighted works without permission, is a model for such legislation.27

Litigation Strategy:

Focus on Market Harm (Factor 4): In fair use litigation, the strongest argument against AI developers is the harm to the potential market. The proliferation of actual licensing deals between AI companies and publishers provides concrete evidence that a viable market for AI training data exists and is being usurped by unlicensed scraping.44

Dismantle the “Human Learning” Analogy: Litigators should aggressively challenge the flawed analogy between machine training and human learning. Arguments should draw on the U.S. Copyright Office’s analysis, emphasizing that machine training involves perfect, scalable, and commercial copying that is fundamentally different from the imperfect, filtered, and non-commercial way humans learn.6

Leverage the “Model Collapse” Argument: Introduce expert testimony on the phenomenon of model collapse, in which models trained on recursively generated data degrade over successive generations. This evidence supports the argument that human-created works are not a one-time commodity but an essential, ongoing resource, for which a sustainable, fairly compensated market must exist to ensure the long-term viability of AI technology itself.

5.3. Toward Equitable and Sustainable Compensation Models

The manifest failures of the pay-per-output model necessitate the development of alternative frameworks that accurately reflect the foundational value of training data.

Upfront Bulk Licensing: This model, already emerging in the market, involves AI developers paying a significant, upfront fee for a license to use a curated, high-quality dataset for a specific period or for the training of a particular model version.44 This provides certainty for both parties and compensates for the foundational value of the data corpus as a whole.

Tiered Access and Revenue Sharing: A more sophisticated and equitable model would tie compensation to the success of the AI service. This could be structured as a percentage of revenue generated by the AI model, creating a partnership where creators share in the value they helped create. This aligns incentives and ensures that as the AI model’s value grows, so does the remuneration to its foundational data sources.55

Collective Management Organizations (CMOs): As noted in Section 5.1, the establishment of CMOs is the most viable path for scaling licensing across a fragmented creative landscape. These organizations can handle the complexities of negotiation, collection, and distribution of royalties from AI companies, ensuring that even independent creators can participate in the licensing market.54 This approach has a long and successful precedent in managing complex rights in the music industry and offers a proven blueprint for the age of AI.
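To make the revenue-sharing and collective-distribution models above concrete, the following is a minimal sketch of how a royalty pool might be computed and divided. It is an illustration under stated assumptions, not a description of any actual licensing deal or CMO practice: the function name `revenue_share_payouts`, the 5% share rate, the revenue figure, and the per-member weights are all hypothetical, and a real scheme would have to define for itself how weights are assigned. Amounts are kept in integer cents, and leftover cents are handed out by largest remainder so that the payouts always sum exactly to the pool.

```python
def revenue_share_payouts(revenue_cents, share_bps, weights):
    """Split a revenue-share pool among rights holders pro rata by weight.

    revenue_cents: revenue of the AI service for the period, in cents.
    share_bps:     share owed to rights holders, in basis points (500 = 5%).
    weights:       hypothetical mapping of rights holder -> non-negative integer
                   weight (for example, the number of licensed works).
    """
    if not weights or any(w < 0 for w in weights.values()):
        raise ValueError("weights must be a non-empty mapping of non-negative values")
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("at least one weight must be positive")

    pool = revenue_cents * share_bps // 10_000

    # Floor each pro-rata share, then give the leftover cents to the holders
    # with the largest fractional remainders (largest-remainder method).
    payouts = {name: pool * w // total_weight for name, w in weights.items()}
    leftover = pool - sum(payouts.values())
    by_remainder = sorted(
        weights,
        key=lambda name: (pool * weights[name]) % total_weight,
        reverse=True,
    )
    for name in by_remainder[:leftover]:
        payouts[name] += 1
    return payouts


if __name__ == "__main__":
    # Hypothetical period: $10,000.00 in revenue, 5% shared among three members.
    example = revenue_share_payouts(
        1_000_000, 500, {"author_a": 3, "author_b": 2, "author_c": 1}
    )
    print(example)
```

Because the pool is a percentage of revenue, every payout grows with the service's revenue, which is the incentive alignment the revenue-sharing model relies on. The weighting rule is the contested design choice, and it is the kind of question a CMO would have to settle on its members' behalf.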

By pursuing these integrated strategies, the creative community can move beyond the false promises of “opt-out” and “pay-per-output” and work toward building a future where technological innovation and creative expression can coexist in a balanced, respectful, and mutually beneficial relationship.

Works cited

What is an LLM (large language model)? - Cloudflare, accessed October 1, 2025, https://www.cloudflare.com/learning/ai/what-is-large-language-model/

AI Demystified: Introduction to large language models - Stanford University, accessed October 1, 2025, https://uit.stanford.edu/service/techtraining/ai-demystified/llm

Understanding large language models: A comprehensive guide - Elastic, accessed October 1, 2025, https://www.elastic.co/what-is/large-language-models

What Are Large Language Models (LLMs)? - IBM, accessed October 1, 2025, https://www.ibm.com/think/topics/large-language-models

How to Train AI for Video Generation - Leylinepro, accessed October 1, 2025, https://www.leylinepro.ai/blog/how-to-train-ai-for-video-generation

Copyright Office Weighs In on AI Training and Fair Use, accessed October 1, 2025, https://www.skadden.com/insights/publications/2025/05/copyright-office-report

AI and the Copyright Liability Overhang: A Brief Summary of the Current State of AI-Related Copyright Litigation | Insights | Ropes & Gray LLP, accessed October 1, 2025, https://www.ropesgray.com/en/insights/alerts/2024/04/ai-and-the-copyright-liability-overhang-a-brief-summary-of-the-current-state-of-ai-related

How Does Generative AI Work | Microsoft AI, accessed October 1, 2025, https://www.microsoft.com/en-us/ai/ai-101/how-does-generative-ai-work

Large language model - Wikipedia, accessed October 1, 2025, https://en.wikipedia.org/wiki/Large_language_model

What is LLM? - Large Language Models Explained - AWS - Updated 2025, accessed October 1, 2025, https://aws.amazon.com/what-is/large-language-model/

Understanding the Internal Structure of AI Models | by Nestor Almeida - Medium, accessed October 1, 2025, https://medium.com/@nestor.almeida/understanding-the-internal-structure-of-ai-models-b7170557703e

What is a Generative Model? | IBM, accessed October 1, 2025, https://www.ibm.com/think/topics/generative-model

Essential LLM Papers: A Comprehensive Guide | by M | Oct, 2025 - Towards AI, accessed October 1, 2025, https://pub.towardsai.net/essential-llm-papers-a-comprehensive-guide-65e8518c2942

Understanding Tokens and Parameters in Model Training: A Deep Dive - Functionize, accessed October 1, 2025, https://www.functionize.com/blog/understanding-tokens-and-parameters-in-model-training

How AI is trained: the critical role of training data - RWS, accessed October 1, 2025, https://www.rws.com/artificial-intelligence/train-ai-data-services/blog/how-ai-is-trained-the-critical-role-of-ai-training-data/

How ChatGPT and our foundation models are developed - OpenAI Help Center, accessed October 1, 2025, https://help.openai.com/en/articles/7842364-how-chatgpt-and-our-language-models-are-developed

[Removed due to 404]

Knowledge Distillation: Making AI Models Smaller, Faster & Smarter - Data Science Dojo, accessed October 1, 2025, https://datasciencedojo.com/blog/understanding-knowledge-distillation/

What is Knowledge distillation? | IBM, accessed October 1, 2025, https://www.ibm.com/think/topics/knowledge-distillation

Generative AI Meets Copyright Scrutiny: Highlights from the Copyright Office’s Part III Report, accessed October 1, 2025, https://www.sidley.com/en/insights/newsupdates/2025/05/generative-ai-meets-copyright-scrutiny

Opt-Out Approaches to AI Training: A False Compromise, accessed October 1, 2025, https://btlj.org/2025/04/opt-out-approaches-to-ai-training/

The Case for Requiring Explicit Consent from Rights Holders for AI ..., accessed October 1, 2025, https://www.techpolicy.press/the-case-for-requiring-explicit-consent-from-rights-holders-for-ai-training/

Opt-in Vs Opt-out Consent: Difference & How to Implement Each - Securiti.ai, accessed October 1, 2025, https://securiti.ai/blog/opt-in-vs-opt-out/

The Difference Between Opt-In vs Opt-Out Principles In Data Privacy, accessed October 1, 2025, https://secureprivacy.ai/blog/difference-beween-opt-in-and-opt-out

User Empowerment: Opt-In vs. Opt-Out in Privacy | Mandatly, accessed October 1, 2025, https://mandatly.com/data-privacy/user-empowerment-the-significance-of-opt-out-vs-opt-in-in-data-privacy

The New York Times v. OpenAI: The Biggest IP Case Ever - Sunstein LLP, accessed October 1, 2025, https://www.sunsteinlaw.com/publications/the-new-york-times-v-openai-the-biggest-ip-case-ever

Authors Guild Welcomes AI Accountability and Personal Data Protection Act, accessed October 1, 2025, https://authorsguild.org/news/ag-welcomes-ai-accountability-and-personal-data-protection-act/

Ethics of AI in Incentive Compensation: Ensuring Fairness & Fairness - Incentivate, accessed October 1, 2025, https://incentivatesolutions.com/blogs/5-ethics-of-ai-in-incentive-compensation/

Model Collapse: How Generative AI Is Eating Its Own Data - VKTR.com, accessed October 1, 2025, https://www.vktr.com/ai-technology/model-collapse-how-generative-ai-is-eating-its-own-data/

Model collapse - Wikipedia, accessed October 1, 2025, https://en.wikipedia.org/wiki/Model_collapse

What Is Model Collapse? - IBM, accessed October 1, 2025, https://www.ibm.com/think/topics/model-collapse

(PDF) AI models collapse when trained on recursively generated data - ResearchGate, accessed October 1, 2025, https://www.researchgate.net/publication/382526401_AI_models_collapse_when_trained_on_recursively_generated_data

Could we see the collapse of generative AI? - Inria, accessed October 1, 2025, https://www.inria.fr/en/collapse-ia-generatives

Model Collapse and the Right to Uncontaminated Human-Generated Data, accessed October 1, 2025, https://jolt.law.harvard.edu/digest/model-collapse-and-the-right-to-uncontaminated-human-generated-data

Copyright Office Issues Key Guidance on Fair Use in Generative AI Training - Wiley Rein, accessed October 1, 2025, https://www.wiley.law/alert-Copyright-Office-Issues-Key-Guidance-on-Fair-Use-in-Generative-AI-Training

Fair use or free ride? The fight over AI training and US copyright law ..., accessed October 1, 2025, https://iapp.org/news/a/fair-use-or-free-ride-the-fight-over-ai-training-and-us-copyright-law

A New Look at Fair Use: Anthropic, Meta, and Copyright in AI Training - Reed Smith LLP, accessed October 1, 2025, https://www.reedsmith.com/en/perspectives/2025/07/a-new-look-fair-use-anthropic-meta-copyright-ai-training

Training Generative AI Models on Copyrighted Works Is Fair Use, accessed October 1, 2025, https://www.arl.org/blog/training-generative-ai-models-on-copyrighted-works-is-fair-use/

AI Training Using Copyrighted Works Ruled Not Fair Use, accessed October 1, 2025, https://www.pbwt.com/publications/ai-training-using-copyrighted-works-ruled-not-fair-use

The New York Times v. OpenAI and Microsoft - Smith & Hopen, accessed October 1, 2025, https://smithhopen.com/2025/07/17/nyt-v-openai-microsoft-ai-copyright-lawsuit-update-2025/

Does Training an AI Model Using Copyrighted Works Infringe the Owners’ Copyright? An Early Decision Says, “Yes.” | Insights, accessed October 1, 2025, https://www.ropesgray.com/en/insights/alerts/2025/03/does-training-an-ai-model-using-copyrighted-works-infringe-the-owners-copyright

What data is used to train an AI, where does it come from, and who owns it?, accessed October 1, 2025, https://www.potterclarkson.com/insights/what-data-is-used-to-train-an-ai-where-does-it-come-from-and-who-owns-it/

Getty Images v. Stability AI | BakerHostetler, accessed October 1, 2025, https://www.bakerlaw.com/getty-images-v-stability-ai/

How the growing market for training data is eroding the AI case for copyright ‘fair use’, accessed October 1, 2025, https://www.transparencycoalition.ai/news/how-the-growing-market-for-training-data-is-eroding-the-ai-case-for-copyright-fair-use

Content Creators vs. Generative Artificial Intelligence: Paying a Fair Share to Support a Reliable Information Ecosystem, accessed October 1, 2025, https://www.aei.org/technology-and-innovation/content-creators-vs-generative-artificial-intelligence-paying-a-fair-share-to-support-a-reliable-information-ecosystem/

Guarding the Digital Gates: 6 Tips to Protecting Your Content in the ..., accessed October 1, 2025, https://creativelicensinginternational.com/licensing-brief/6-tips-for-protecting-your-content-in-the-age-of-ai/

Practical Tips for Authors to Protect Their Works from AI Use - The Authors Guild, accessed October 1, 2025, https://authorsguild.org/news/practical-tips-for-authors-to-protect-against-ai-use-ai-copyright-notice-and-web-crawlers/

Creators brace for AI bots scraping their work - Digiday, accessed October 1, 2025, https://digiday.com/media/creators-brace-for-ai-bots-scraping-their-work/

5 Simple Yet Effective Ways to Prevent AI Scrapers from Stealing Your Website Content | Protecting Your Data from Unauthorized Web Scraping, accessed October 1, 2025, https://www.webasha.com/blog/5-simple-yet-effective-ways-to-prevent-ai-scrapers-from-stealing-your-website-content-protecting-your-data-from-unauthorized-web-scraping

Authors Guild Encouraged by Penguin Random House’s New AI Restrictions, accessed October 1, 2025, https://authorsguild.org/news/ag-encouraged-by-penguin-random-house-ai-restrictions/

Artificial Intelligence - The Authors Guild, accessed October 1, 2025, https://authorsguild.org/advocacy/artificial-intelligence/

Authors Guild Reinforces Its Position on AI Licensing - Publishers Weekly, accessed October 1, 2025, https://www.publishersweekly.com/pw/by-topic/industry-news/licensing/article/96745-authors-guild-reinforces-its-position-on-ai-licensing.html

AI and the visual arts: The case for copyright protection - Brookings Institution, accessed October 1, 2025, https://www.brookings.edu/articles/ai-and-the-visual-arts-the-case-for-copyright-protection/

Practical commentary regarding copyright and generative AI training - Norton Rose Fulbright, accessed October 1, 2025, https://www.nortonrosefulbright.com/en/knowledge/publications/87200379/practical-commentary-regarding-copyright-and-generative-ai-training

AI vs. Artists? A Fair Pay Solution That Works for Both | by InternetRando - Medium, accessed October 1, 2025, https://medium.com/@internetrandostrikesagain/ai-vs-artists-a-fair-pay-solution-that-works-for-both-74ec1ef42601

Authors Guild Submits Guidance for National AI Action Plan to Protect Writers’ Rights, accessed October 1, 2025, https://authorsguild.org/news/ag-submits-guidance-for-national-ai-action-plan/
