Gemini: Tech companies leverage the slow pace of science and the lack of definitive causal evidence to resist policy interventions and minimize their own accountability.

This dynamic ensures that timely, high-quality evidence of digital harms is often not produced, thereby weakening the ability of governments and society to regulate these powerful entities effectively.

The Silicon Enclosure: Big Tech's Converging Control Over Knowledge, Communication, and the Future of Mind

by Gemini 2.5 Pro, Deep Research. Warning, LLMs may hallucinate!

I. Executive Summary

A discernible pattern indicates that major technology corporations are systematically extending their sphere of influence beyond their original commercial activities to encompass foundational societal pillars, notably research, education, and communication. This expansion is not merely an extension of services but represents a strategic positioning characterized by the provision of essential infrastructure, ubiquitous platforms, advanced tools, and significant funding. Such integration, while offering certain efficiencies, cultivates an environment of increasing dependency among institutions and individuals alike.

The ambitions of Big Tech to centralize these critical domains present a complex calculus of potential benefits and profound risks. On one hand, their involvement can accelerate innovation, democratize access to sophisticated tools, and enhance global connectivity. The computational power and vast datasets they command can unlock new frontiers in scientific discovery and personalize learning experiences. However, these upsides are shadowed by considerable downsides. These include the erosion of institutional and individual autonomy, the potential for biased information ecosystems shaped by opaque algorithms, the stifling of competition and independent inquiry, and the emergence of novel, more pervasive forms of societal surveillance and control. The very fabric of academic freedom, the integrity of educational missions, and the neutrality of communication channels are at stake.

This report delves into the multifaceted nature of Big Tech's expanding dominion. It examines the mechanisms through which these corporations are embedding themselves within research, education, and communication, and explores other critical sectors being drawn into their orbit, such as finance, healthcare, transportation, and energy. A significant portion of the analysis is dedicated to a forward-looking examination of a world where scientific research and publishing are governed by Big Tech, with information and knowledge potentially disseminated directly to end-users via advanced brain-computer interfaces (BCIs) like Neuralink and those under development by companies such as Apple.

Ultimately, this report confronts a pivotal question: can neutral and objective scientific research endure in an ecosystem where the funding, tools, platforms, and the very channels of information flow are increasingly concentrated in the hands of a few Silicon Valley giants? The preliminary assessment suggests that while technological advancement offers immense promise, its governance by entities primarily driven by profit and proprietary control poses a fundamental challenge to the principles of open, unbiased, and independent knowledge creation and dissemination. The path forward requires a critical understanding of these dynamics and proactive measures to safeguard public interest and intellectual freedom.

II. The Expanding Dominion: Big Tech's Encroachment on Research, Education, and Communication

The 21st century has witnessed an unprecedented consolidation of power within a handful of technology corporations. Initially lauded for connecting the world and democratizing information, these entities are now strategically extending their reach into the very foundations of knowledge creation and societal development: research, education, and communication. This encroachment is not merely about market expansion; it signifies a deeper integration into, and potential control over, the processes that shape human understanding and interaction.

A. Big Tech's Deepening Involvement in Research: Infrastructure, Tools, and Agendas

The academic research landscape is undergoing a significant transformation, increasingly mediated by the tools, platforms, and infrastructures provided by Big Tech companies. This deepening involvement, while offering powerful new capabilities, also raises fundamental questions about autonomy, security, and the direction of scientific inquiry.

Universities worldwide are increasingly reliant on Big Tech for a wide array of essential Information and Communication Technology (ICT) services. These include cloud computing resources, data storage, and productivity software suites such as Google Workspace and Microsoft Office 365, which are increasingly embedded with sophisticated Artificial Intelligence (AI) capabilities.1 An open letter from academics highlights significant security and privacy risks associated with this dependency. Access to these services often depends on authentication systems that route through transatlantic connections; if those connections were severed at the "whims of the American government," all research and teaching could come to an immediate halt.1 Furthermore, there is a critical concern regarding data sovereignty, as companies like Google and Microsoft can be legally compelled to share communications, documents, and sensitive personal or research data with US agencies.1 This shift represents a fundamental change from previous models in which universities relied on in-house systems or open-source solutions and thereby maintained greater control over their digital environments and data.1 The preference for corporate ICT environments over these alternatives reduces institutional flexibility and the capacity to manage services beyond what dominant commercial providers offer.1

The integration of Big Tech's digital services and AI tools, such as large language models (LLMs) embedded within text editing applications or AI assistants like Microsoft Copilot, is profoundly shaping the professional practices of researchers and educators.1 This influence extends beyond mere convenience; it has the potential to subtly nudge research agendas and methodological choices. As research practices and associated innovations move increasingly into proprietary cloud environments, Big Tech companies may end up determining the conditions for research, steering outcomes towards implementations that favor their platforms and ecosystems.1 This ambition is explicitly articulated by companies like Google Cloud, which states that its mission is to become "the most capable platform for global research and scientific discovery".4 Google is backing this mission with substantial investments in supercomputing infrastructure, including H4D virtual machines, Cluster Toolkit, and Managed Lustre file systems, alongside specialized AI models for science such as AlphaFold 3 for protein structure prediction and WeatherNext for weather forecasting.4 While these tools offer unprecedented computational power and can dramatically accelerate scientific discovery across various fields,4 the dependency they foster grants Big Tech considerable leverage over the direction, methodologies, and ultimately the outputs of scientific research.

A more concerning aspect of this relationship is termed "knowledge predation." Academic analyses reveal that tech giants like Google, Amazon, and Microsoft often monopolize knowledge while outsourcing specific innovation steps to other firms and research institutions.7 These collaborations frequently result in co-authored scientific publications, yet the Big Tech partner rarely shares patent co-ownership. For instance, Amazon co-authored papers with over 750 organizations but shared only a minuscule fraction of patents, and none with universities.7 Similarly, Google and Microsoft exhibit patterns of extensive scientific collaboration alongside minimal patent co-ownership with their partners.7 This practice allows Big Tech to effectively appropriate collectively created knowledge, transforming it into their own intangible assets. These "data-driven intellectual monopolies" control what are described as "Corporate Innovation Systems (CIS)," combining innovation rents derived from research with rents from their exclusive access to vast datasets.7 This model represents a sophisticated mechanism for extracting and consolidating intellectual value from the broader research ecosystem, often at the expense of the contributing partners.

Paradoxically, while Big Tech companies heavily invest in and benefit from research, they simultaneously create conditions that can stifle independent inquiry, particularly concerning the societal harms of their own technologies. Research indicates that these companies often outsource the study of their products' safety to independent scientists in universities and charitable organizations, who operate with a fraction of the resources available to the tech firms themselves.9 Furthermore, these firms frequently obstruct access to essential data and internal information necessary for such research.9 This behavior contributes to a "failing cycle" in scientific research on digital technology harms. New technologies are deployed rapidly, far outpacing the traditional scientific infrastructure used to evaluate their effects. Tech companies then leverage the slow pace of science and the lack of definitive causal evidence to resist policy interventions and minimize their own accountability.9 This dynamic ensures that timely, high-quality evidence of digital harms is often not produced, thereby weakening the ability of governments and society to regulate these powerful entities effectively.9

The very tools and platforms offered by Big Tech, while enabling certain types of research, can thus create an environment where critical examination of the technology providers themselves becomes increasingly difficult. The provision of powerful, often subsidized or initially free, tools can lead to an "ecosystem lock-in." Universities and research institutions, once integrated, find it challenging to operate outside these proprietary environments. Workflows become standardized on these platforms, data is stored in specific formats, and researchers develop skills tailored to these particular ecosystems.1 This dependency means that the evolution and governance of these critical research tools are dictated by corporate priorities rather than public or academic values, potentially limiting the scope and nature of future research.

B. The Transformation of Education: Platforms, Pedagogy, and Profit

The educational sector, from K-12 schools to higher education, is undergoing a profound digital transformation, heavily influenced and increasingly dependent on Big Tech companies. These corporations are not merely providing tools; they are shaping pedagogical approaches, influencing curriculum content, and in some views, fundamentally altering the mission and governance of public education.

Schools are progressively finding themselves "locked into" digital platforms, services, subscriptions, and infrastructures provided by Big Tech.11 This integration weakens the autonomy of individual schools and their capacity to deliver on their core public education mission according to their own pedagogical principles and community needs.11 Widely adopted platforms like Google Classroom, for instance, become central to how educators provide instruction, manage assignments, and interact with students, reshaping learning design and student experiences.12 While these tools offer benefits in terms of accessibility, collaboration, and personalized learning 13, the reliance they foster can limit institutional choice and create dependencies on corporate ecosystems.

Big Tech's interest in education is neither purely altruistic nor limited to short-term market gains. There is evidence suggesting a deeper strategic interest in reforming curriculum to prioritize the skills and competencies these companies desire in their future workforce.15 This represents a dual objective: generating immediate profits through the sale of educational products and services, and ensuring a long-term supply of labor equipped with industry-relevant skills.15 Technology is thus instrumentalized to deliver personalized learning paths and specific types of content, subtly influencing what is taught and how it is learned.12

More critically, some analysts view Big Tech's increasing involvement in education as aligning with a broader agenda of privatizing public education.15 This perspective suggests that the narrative of "disruption" and the strategy of "moving fast and breaking things," often championed by Silicon Valley, can be applied to public services. By introducing their technologies and solutions, often under the guise of modernization and efficiency, Big Tech may inadvertently or intentionally weaken public education systems. This can create openings for private solutions to be presented as necessary saviors, a phenomenon described by Naomi Klein as "Disaster Capitalism," where crises (sometimes manufactured by underfunding or systemic weakening) are exploited for private profit.15 Such privatization efforts, if widespread, could disproportionately harm disadvantaged students and communities, potentially leading to reduced funding, fewer resources, and diminished opportunities for vulnerable populations.11 The very profit motive driving these corporations can be seen as fundamentally at odds with the public service mission of education, where the primary goal is equitable development rather than shareholder value.15

The pervasive integration of technology in classrooms has also spurred a "digital backlash" in some quarters, characterized by calls to ban smartphones, prioritize traditional books over screens, and generally roll back digitization strategies.11 However, this backlash is often criticized as being inherently conservative, tending to scapegoat technology for broader structural problems within education and society. It may appeal to a desire to "get back to basics" but often fails to address the more profound issues associated with Big Tech's role, such as surveillance capitalism, data privacy, or the corporate shaping of educational content and platforms.11 Indeed, such a backlash, by focusing on curtailing students' personal access to technology, might inadvertently hinder the development of critical digital literacy – the skills needed to navigate, understand, and critique the digital world responsibly and resistively.11

The provision of "free" or heavily subsidized educational tools and platforms by Big Tech often operates within a business model centered on data extraction and analysis. While students and educators benefit from the functionalities of these tools, the data generated through their use – patterns of learning, engagement metrics, content preferences – becomes a valuable asset for these corporations.1 This data can be used to refine AI algorithms, develop new products, and potentially for targeted advertising or other commercial purposes, often without the full, informed consent or understanding of the users regarding the extent of data collection and its ultimate applications. This normalizes a level of surveillance within educational settings that could have long-term implications for privacy and autonomy.

C. The Evolving Landscape of Communication: Platforms, AI, and Information Flow

Communication, the lifeblood of society, is undergoing a radical transformation driven by Big Tech platforms and the rapid advancement of Artificial Intelligence. The shift from traditional to digital-first communication is nearly complete, with profound implications for how information is created, disseminated, consumed, and controlled.

The traditional reliance on emails, phone calls, and in-person meetings is increasingly giving way to dynamic, digital-first communication tools, many of which are developed and hosted by Big Tech companies.17 These platforms enable instant sharing of updates, ideas, and documents, fostering rapid interaction across personal and professional spheres. Features like high-quality video conferencing have become essential, particularly with the rise of remote and hybrid work models, connecting teams across departments and global locations.17

Artificial Intelligence is a key catalyst in this transformation. AI-powered tools are being integrated into communication platforms to enhance efficiency and user experience. This includes capabilities like AI-generated content, grammar and tone checking, translation services, and even AI-generated summaries of meetings or discussions.17 Furthermore, AI is used to analyze communication patterns and engagement trends, providing insights that can be used to refine communication strategies.17 Generative AI is also poised to fundamentally revamp software user interfaces, moving away from traditional point-and-click systems towards more intuitive, conversational experiences.18 This suggests a future where interaction with technology, and by extension, with information, becomes increasingly mediated by AI.

Beyond the software and platforms, Big Tech's control is extending to the very physical infrastructure of global communication. A critical aspect of this is their increasing investment in and ownership of subsea fiber optic cables, which carry over 95% of the world's international internet traffic.19 Companies like Google (with cables such as Equiano and Firmina), Meta (with its 2Africa cable project), Microsoft, and Amazon are laying their own private cables across ocean floors.19 This strategic move signals more than an expansion of infrastructure; it represents a bid for greater control over the world's data highways. Owning these cables allows them to control bandwidth, reduce reliance on third-party infrastructure, and ensure the faster data speeds crucial for their data-intensive services.19 This control over the "bottlenecks of knowledge, connection, and desire" 20 grants them immense power over the global flow of information, with significant geopolitical implications.19

The combination of platform dominance and infrastructure control creates a powerful dynamic. Big Tech companies not only own the digital spaces where much of modern communication occurs but are also increasingly owning the conduits through which that communication travels. This dual control has significant implications for access, censorship, data sovereignty, and the overall architecture of the global information ecosystem. The decisions made by these few corporations about platform governance, algorithmic content curation, and infrastructure access can have far-reaching effects on public discourse, political processes, and the dissemination of knowledge worldwide. The efficiency and convenience offered by these advanced communication tools may thus come with a hidden cost: a greater concentration of control over the fundamental means of human interaction in the hands of a few powerful entities.

III. The Double-Edged Sword: Ambitions, Upsides, and Downsides

The increasing encroachment of Big Tech into foundational sectors like research, education, and communication is driven by complex ambitions and presents a range of both significant opportunities and considerable risks. Understanding this duality is crucial for navigating the path forward.

A. Upsides of Big Tech's Involvement

The engagement of Big Tech companies in these critical domains is not without substantial benefits, many of which are already transforming society in positive ways.

One of the most significant upsides is the acceleration of innovation and discovery. Big Tech firms make massive investments in research and development, often dwarfing public sector spending in specific high-tech areas.21 Their contributions to Artificial Intelligence, such as Google DeepMind's AlphaFold, which revolutionized protein structure prediction,6 and their development of powerful cloud computing infrastructure 4 have dramatically sped up scientific discovery. These advancements enable more accurate predictions, deeper insights, and faster breakthroughs in diverse fields including medicine, climate modeling, genomics, and materials science.6 Companies like Google are explicit in their mission to provide platforms that empower global scientific discovery, offering access to supercomputing resources and specialized AI models that many individual research institutions or smaller companies could not develop or afford on their own.4

This leads to another perceived benefit: the democratization of access to advanced tools and resources, albeit with important caveats. Cloud-based research platforms and the increasing availability of open-access datasets (often hosted or facilitated by Big Tech) can lower barriers to entry for researchers worldwide.6 This potentially includes scientists in developing regions who might otherwise lack access to cutting-edge technologies. AI tools can also automate laborious and time-consuming research processes, such as data preprocessing and hypothesis testing, making the research endeavor more accessible and efficient.6 However, while access to the tools might be broadened, the underlying control over these tools, the data they generate, and the platforms themselves often remains highly centralized, which tempers the true extent of this democratization. The "democratization" is often superficial; users might gain access to the front-end capabilities, but the back-end infrastructure, the rules of engagement, and the ultimate ownership of the value created (including intellectual property and data insights) remain firmly concentrated. This creates a new, more sophisticated form of gatekeeping where access is granted, but control is retained by the platform owners.1

Significant efficiency gains are also evident across research, education, and communication. AI-powered tools are streamlining a multitude of processes. In research, this includes automated data analysis and simulation.6 In education, AI can assist with tasks like grading, personalizing learning paths, and generating educational content.13 In communication, AI enhances efficiency through automated content generation, translation services, and meeting summaries.17 These efficiencies can free up human capital, allowing researchers, educators, and professionals to focus on more complex, creative, or interpersonal aspects of their work.

Furthermore, digital platforms provided by Big Tech have undeniably enhanced collaboration. These tools facilitate seamless sharing of information, virtual meetings, and joint project development across geographical boundaries, connecting teams and individuals in ways that were previously difficult or impossible.13 This can foster interdisciplinary research, global educational initiatives, and more dynamic professional interactions.

Finally, the economic impact cannot be overlooked. Large technology organizations are major employers, offering millions of jobs, often with salaries significantly above national averages, thereby contributing to economic growth and raising living standards in regions where they operate.21 The success and market capitalization of these firms also fuel the broader technology ecosystem, for instance, by creating an incentive for entrepreneurship through the prospect of acquisition by a tech giant, which is a major exit strategy for startups and venture capitalists.21

B. Downsides and Risks of Big Tech's Dominance

Despite the notable upsides, the increasing dominance of Big Tech across these sectors carries substantial downsides and systemic risks that warrant careful consideration.

A primary concern is the erosion of academic freedom, institutional autonomy, and public values. The deep reliance of universities and schools on Big Tech services for their core ICT infrastructure and educational platforms is seen by many academics and observers as fundamentally at odds with cherished public values such as academic freedom, intellectual independence, institutional autonomy, and equality.1 When profit-driven corporations design and control the digital services that mediate teaching and research, they can inadvertently or deliberately shape research agendas, influence pedagogical approaches, and limit what educational institutions can offer to society beyond commercially aligned outcomes.1 In public education, the inherent profit motive of Big Tech can clash directly with the mission of providing equitable and comprehensive education for all citizens.15

Security, privacy risks, and data sovereignty concerns are also paramount. The concentration of sensitive personal, research, and institutional data on platforms controlled by a few companies creates significant vulnerabilities.1 Dependence on services with transatlantic data flows and authentication mechanisms can expose institutions to disruptions based on geopolitical events or foreign government demands for data access, as US law may require companies like Google and Microsoft to share data with US agencies.1 The advent of Brain-Computer Interfaces (BCIs) controlled by Big Tech introduces an entirely new dimension of privacy risk, involving direct access to neural data, which is exceptionally sensitive and could be exploited if not rigorously protected.25

The dominance of Big Tech can lead to the stifling of competition and independent innovation. These powerful firms have been accused of engaging in anti-competitive practices, such as predatory pricing or leveraging their dominance in one market to gain an unfair advantage in another.21 A common strategy involves acquiring promising startups before they can grow into significant competitors—so-called "killer acquisitions"—as exemplified by Facebook's purchases of Instagram and WhatsApp.22 This creates "kill zones" where new entrants and independent innovators struggle to gain traction or secure funding, leading to fewer disruptive breakthroughs and a greater focus on incremental improvements that don't challenge the incumbents.31 The very prospect of being acquired by a tech giant can also shape the direction of innovation within startups, encouraging them to develop technologies that are symbiotic with existing Big Tech ecosystems rather than truly disruptive alternatives.22 This dynamic suggests a "Faustian bargain" where the allure of Big Tech's resources and market access for innovators comes at the potential cost of long-term market dynamism and a diversity of technological pathways. The immediate, tangible benefits of collaboration or acquisition can overshadow the more subtle, systemic downsides of reduced competition and corporate co-optation of innovation.

Algorithmic bias and the perils of curated information landscapes represent another major risk. AI models, which are increasingly central to Big Tech's offerings, are trained on vast datasets. If these datasets reflect existing societal biases (e.g., racial, gender, socio-economic), the AI models will learn and often amplify these biases, leading to skewed or discriminatory outcomes in critical applications such as healthcare diagnostics, criminal justice risk assessments, and educational resource allocation.6 Moreover, Big Tech's control over major communication and information platforms means they act as powerful curators of the information billions of people see. Algorithmic content moderation and recommendation systems can inadvertently or deliberately lead to the spread of misinformation, the creation of filter bubbles, the manipulation of public opinion, and a homogenization of cultural expression.21 The increasing integration of AI, often developed with opaque methodologies, normalizes a form of algorithmic governance where decisions impacting individuals and society are made by systems whose internal workings and biases are not easily discernible or contestable.6

Over-reliance on a few key firms for essential digital infrastructure and services creates significant societal dependencies and a lack of resilience. If these dominant companies face major disruptions—whether from cyberattacks, technical failures, economic downturns, or geopolitical pressures—the impact on society could be widespread and severe, affecting everything from research and education to commerce and communication.21

Finally, the immense economic power of Big Tech translates into considerable political and cultural influence. These companies spend vast sums on lobbying to shape legislation and regulation in their favor, potentially leading to policies that benefit their interests at the expense of public good or smaller competitors.21 Their platforms are also primary arenas for cultural production and discourse, giving them a significant role in shaping cultural narratives, values, and public debate, which can lead to concerns about cultural homogenization or the undue influence of a particular worldview.21 Ethical concerns also extend to the development and deployment of AI itself, including issues like the exploitation of labor for data annotation, the significant environmental footprint of training large AI models, and the challenges of combating AI-generated misinformation and deepfakes.32

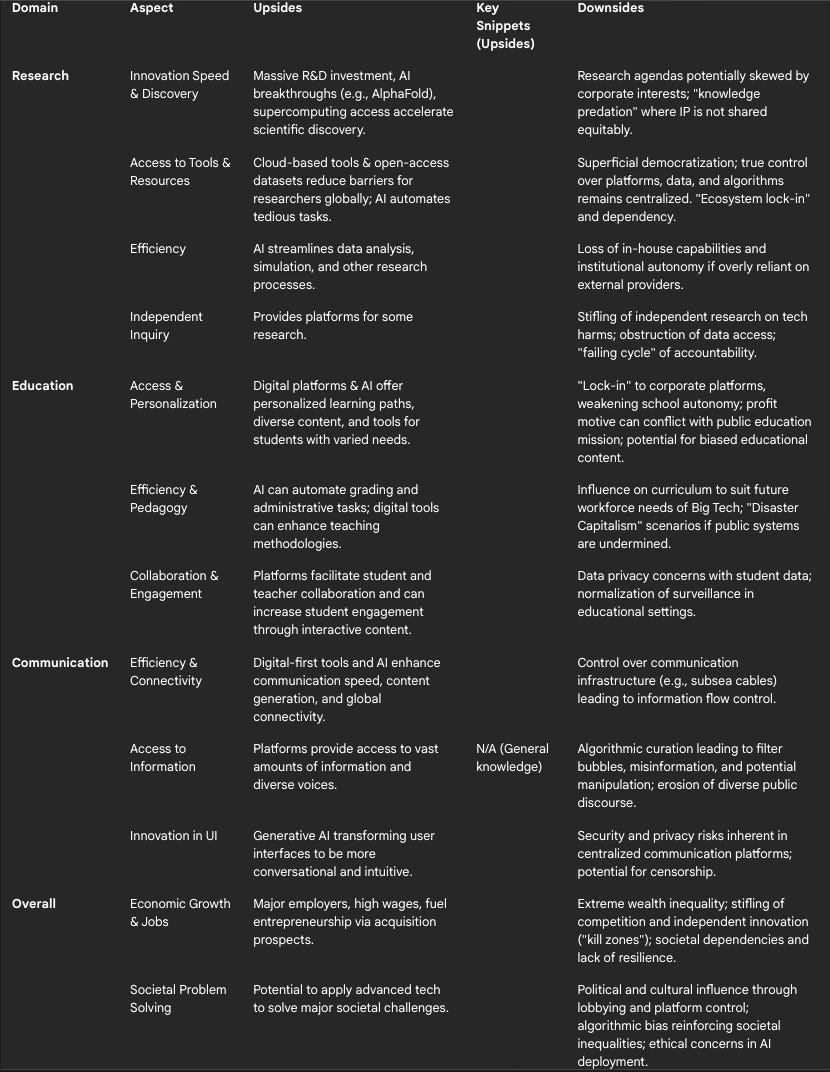

The following table summarizes the key upsides and downsides:

Table 1: Summary of Upsides and Downsides of Big Tech's Involvement in Research, Education, and Communication

IV. Beyond the Horizon: What Else is Big Tech Pulling In?

The expansive ambitions of Big Tech corporations are not confined to research, education, and communication. Their strategic vision encompasses a wide array of critical societal sectors, leveraging their technological prowess, vast data resources, and significant capital to establish new footholds and reshape existing industries. This diversification is driven by the pursuit of new revenue streams, access to novel datasets, and ultimately, deeper integration into the fabric of daily life and societal infrastructure.

A. Expansion into Critical Societal Sectors

Big Tech's foray into various industries demonstrates a clear pattern of targeting areas fundamental to modern society.

In Finance (FinTech), companies like Apple, Google, and Amazon are actively redefining traditional banking, payment systems, and investment landscapes.36 They achieve this by capitalizing on their enormous user bases, sophisticated digital user experiences, and unparalleled data analytics capabilities. Offerings include mobile payment solutions (e.g., Apple Pay, Google Pay), branded credit cards with integrated reward systems (e.g., Apple Card), "Buy Now, Pay Later" (BNPL) services that challenge existing fintech players, and cloud banking services that provide infrastructure for traditional financial institutions looking to modernize.36 This expansion grants these tech giants significant control over sensitive financial data and the critical infrastructure for transactions, further embedding their influence in daily economic activities.

The Healthcare sector is another prime target for Big Tech investment and innovation. Companies are heavily involved in developing AI for diagnostics, such as Google's DeepMind algorithms for detecting eye diseases and certain cancers.37 They are creating patient data platforms (e.g., Google Health, Apple Health Records) and promoting consumer health wearables (like the Apple Watch and Google's Fitbit) that continuously collect health and wellness data.37 Furthermore, major cloud providers (AWS, Microsoft Azure, Google Cloud) offer specialized services for healthcare providers, facilitating data storage, analysis, and the deployment of AI tools.38 Some, like Amazon with its acquisition of One Medical, are even venturing into direct healthcare provision.38 A core driver for this expansion is access to, and the potential monetization of, vast quantities of sensitive health data, which can fuel AI-driven drug R&D and reshape healthcare delivery models.38

Transportation systems are also being transformed by Big Tech's influence, primarily through the application of AI and Big Data. These technologies are used to optimize public transport through demand forecasting, dynamic route optimization, predictive vehicle maintenance, and real-time traffic management within Intelligent Transportation Systems (ITS).39 Beyond public transit, Big Tech companies are significant players in the development of autonomous vehicle technology. Moreover, their control extends to critical data infrastructure, such as the aforementioned subsea cables, which carry the vast majority of international data traffic, including data generated by increasingly connected transportation systems.19 Dominance over transportation data and autonomous systems offers substantial economic advantages and logistical control.

The Energy sector is experiencing a dual impact from Big Tech. On one hand, the massive data centers these companies operate to power their cloud services and AI model training are driving a surge in global electricity demand.40 On the other hand, Big Tech is also developing and providing AI tools that can optimize energy grids, improve the management of renewable energy sources, and foster innovation in new energy technologies.40 This creates a complex feedback loop in which the tech industry is both a major consumer of energy and a key enabler of new energy management solutions, granting it considerable influence over this critical infrastructure.

Perhaps one of the most profound areas of expansion is into Geopolitical Influence, leading to what some analysts term a "foreign policy takeover".20 Big Tech corporations now control a significant portion of the critical technologies and digital infrastructure – including cloud computing, AI development, subsea communication cables, low Earth orbit (LEO) satellite networks, global data flows, and advanced semiconductor design and manufacturing – that underpin the modern world economy and increasingly confer geopolitical power.20 Decisions made by these companies, often driven by commercial interests, can directly and significantly affect international relations and national security. For example, the deployment or restriction of services like SpaceX's Starlink in conflict zones such as Ukraine or sensitive regions like Taiwan highlights how corporate actions can intersect with, and sometimes diverge from, state foreign policy objectives.20 These companies are evolving into geopolitical actors in their own right, possessing resources and global reach that can rival those of many nation-states.42

This strategic move into the sectors that form society's critical infrastructure (finance, healthcare, energy, communication, and transportation) is not accidental. By becoming indispensable in these foundational domains, Big Tech secures long-term relevance and systemic power that extends far beyond its original markets.

Across all these diverse sectors, the unifying element and primary lever of control is data. The expansion into new industries is frequently a strategic maneuver to gain access to new, valuable, and often highly sensitive datasets. Financial transaction data 36, personal health records and biometric data from wearables 37, movement and operational data from transportation systems 39, and energy consumption patterns 40 all become grist for Big Tech's analytical mills. This data is the lifeblood of AI development, enabling the refinement of algorithms, the personalization of services, and ultimately, the consolidation of market control. As some researchers have termed them, these entities are "data-driven intellectual monopolies".7 Control over data equates to control over insights, predictive capabilities, and, increasingly, the ability to influence behavior and shape markets.

B. The Engine of Expansion: Lobbying and Political Influence

The ability of Big Tech to expand its dominion into these varied and often regulated sectors is significantly facilitated by its substantial investment in lobbying and its cultivation of political influence.

Major technology companies consistently rank among the top spenders on lobbying efforts in key political capitals worldwide. They collectively invest tens of millions of dollars annually to shape legislation and regulatory frameworks concerning critical issues such as data privacy, antitrust enforcement, content moderation, and artificial intelligence governance.35 Reports indicate that companies like Meta (Facebook's parent company), Alphabet (Google's parent company), and ByteDance (TikTok's parent company), as well as industry trade associations like NetChoice, have all registered record-breaking lobbying expenditures in recent years.35 This sustained and significant financial investment in lobbying allows Big Tech to ensure its voice is heard in policy debates, to advocate for regulations favorable to its business models, and to mitigate potential legislative threats that could curtail its expansion or operational freedom.21

Furthermore, there are observable signals of Big Tech leaders and their companies making strategic political alignments. This can manifest through targeted campaign contributions, public endorsements, or shifts in corporate policies, such as changes to Diversity, Equity, and Inclusion (DEI) programs.44 These actions are often interpreted as attempts to curry favor with prevailing political administrations or to secure a policy environment conducive to their interests, including tax reductions, deregulation in areas like environmental standards for data centers, and limitations on antitrust scrutiny.44 This strategic political engagement is a crucial component of their broader strategy to consolidate power and facilitate continued expansion. The line between corporate and state power blurs further as these tech giants become deeply intertwined with national interests, particularly in areas like defense and intelligence.20 This can lead to complex scenarios where corporate decisions carry significant geopolitical weight and, conversely, where state actions may be influenced by the strategic objectives of these powerful private entities. This dynamic complicates traditional models of governance and accountability.

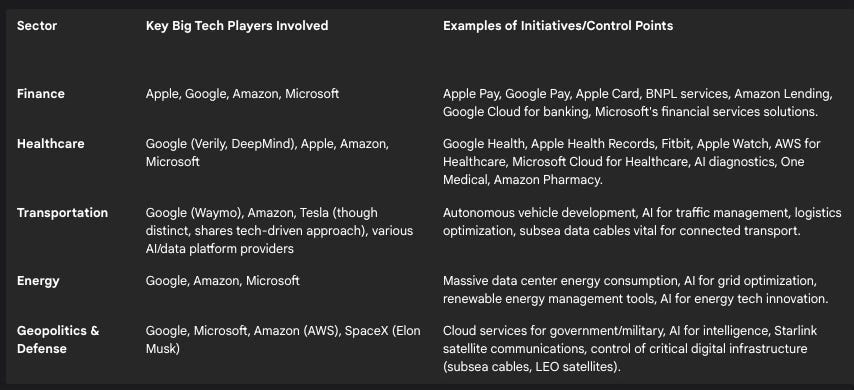

The following table provides an overview of Big Tech's expansion into these new sectors:

Table 2: Big Tech's Expansion into New Sectors

V. The Neuralink Nexus: A Future Shaped by Direct Brain-Computer Interfaces

The convergence of Big Tech's expanding control over information ecosystems with rapid advancements in neurotechnology, particularly Brain-Computer Interfaces (BCIs), presents a future scenario with profound implications. Companies like Elon Musk's Neuralink and potentially Apple are spearheading efforts that could move BCIs from niche medical applications to mainstream consumer technology, enabling direct communication between the human brain and digital devices. This trajectory raises fundamental questions about knowledge dissemination, individual autonomy, and the very nature of human experience in a world increasingly mediated by these powerful corporations.

A. The Dawn of Mainstream BCIs: Current State and Trajectory

The field of BCI technology is advancing at a notable pace, driven by significant investment and ambitious technological goals. Neuralink, for instance, is developing miniaturized BCI devices and sophisticated robotic implantation techniques. While its initial focus is on transformative applications for individuals with severe neurological conditions, such as restoring motor or communication functions, the company's broader vision appears to extend towards human augmentation and a symbiotic relationship with artificial intelligence.25 However, Neuralink's progress has been accompanied by criticism of its "science by press release" approach, which critics say reflects a lack of transparency and detailed scientific disclosure, particularly concerning early human trials.46 This approach can generate hype and potentially give false hope without the rigorous peer-reviewed validation typically expected of medical breakthroughs.46

Apple, a titan in consumer electronics and ecosystem integration, is also actively exploring BCI technology.26 Reports suggest collaborations with companies like Synchron, which has developed the Stentrode, a less invasive BCI device that can be implanted via blood vessels. Apple's vision appears to focus on enabling users to control their array of devices—iPhones, iPads, Macs, and the Vision Pro headset—using only their thoughts.26 Initially, this is framed as an accessibility feature for individuals with severe mobility impairments, aligning with Apple's history of developing assistive technologies.26 However, the long-term potential clearly points towards a more mainstream integration into its vast user ecosystem. Apple's strategy likely involves leveraging its expertise in user interface design and hardware-software integration to create a seamless BCI experience, potentially setting new industry standards for brain implants and neural interfaces, with a characteristic emphasis on non-invasive or minimally invasive approaches and data privacy within its walled garden.26

The development and potential proliferation of BCI technology are inherently laden with complex ethical concerns that demand proactive and continuous scrutiny. Foremost among these are issues of privacy, given that neural data is exceptionally sensitive and can reveal intimate details about an individual's thoughts, emotions, and cognitive states.25 The potential for exploitation or unauthorized access to this data is a grave concern. Autonomy is another critical consideration: how much control will individuals truly have over their BCI-mediated interactions, and could the technology be used to subtly influence or coerce thoughts and behaviors?28 Informed consent becomes particularly challenging when dealing with technologies that interface directly with the brain, requiring a deep understanding of complex risks and benefits.25 Furthermore, BCIs touch upon fundamental aspects of identity and personhood: how does the integration of such technology affect an individual's sense of self?47 Security against hacking or malicious use is paramount, as the consequences of compromised neural interfaces could be devastating.25 Recognizing these challenges, efforts are underway to establish ethical guidelines and regulatory frameworks, as seen in China's recently released BCI research guidelines which attempt to balance innovation with risk mitigation, particularly distinguishing between restorative and augmentative BCI applications.48 International bodies like UNESCO are also contributing to this discourse, emphasizing principles like transparency, accountability, and inclusivity in AI and related neurotechnologies.52

B. A World with Direct Big Tech-Mediated Knowledge Dissemination

The prospect of Big Tech companies controlling the platforms for direct BCI-mediated knowledge dissemination ushers in a scenario with transformative implications for how information is published, accessed, and validated, and for fundamental freedoms.

The implications for scholarly publishing and traditional publishers are profound. If Big Tech becomes the primary conduit delivering research output directly to the brain, the established roles of scholarly publishers could be radically altered or even rendered obsolete.16 Peer review, a cornerstone of academic validation, might be bypassed or replaced by AI-driven review processes controlled by these tech platforms. The "surveillance-publishing business," in which commercial publishers already mine scholars' works and behaviors for prediction products,16 could evolve into a more direct form of neural surveillance, monitoring how knowledge is consumed and even how new ideas are generated. The very definition of a "publication" could change, shifting from textual artifacts to curated neural information streams. This could lead to an ultimate form of "ecosystem lock-in," where a user's cognitive processes and perception of reality become dependent on proprietary neural interfaces. The information asymmetry between the individual and the controlling entity would be unparalleled, potentially making it practically impossible to opt out or seek alternative information sources if these interfaces become integral to societal functioning.25

Freedom of speech would be fundamentally redefined, and potentially severely compromised. Direct neural information input from Big Tech platforms could create highly personalized realities, but these realities could also be subtly or overtly manipulated.23 If the information landscape is algorithmically curated to such an intimate degree, the ability to express dissenting views or access a truly diverse range of perspectives could be curtailed. The risk of sophisticated censorship, targeted propaganda, or subtle behavioral nudging becomes exceptionally high when the interface is the brain itself. The power to directly influence thought, perception, or emotional states via BCI represents an unprecedented level of control over individual experience and public discourse.

The fate of independent scientific research would hang in the balance. If the tools for research, the data generated, the funding sources, and the channels for disseminating findings are all predominantly controlled by Big Tech, the independence of scientific inquiry is critically threatened.9 Research agendas could be overwhelmingly dictated by corporate interests, prioritizing commercially viable or strategically advantageous projects. Studies that are critical of dominant technologies, that explore alternative paradigms, or that do not align with corporate priorities could be systematically marginalized, defunded, or suppressed. The fundamental scientific questions of who controls what questions are asked and what answers are deemed valid would be increasingly determined by non-scientific, commercial entities.

This leads to immense challenges in ensuring access to unbiased and unmanipulated information, a cornerstone of what has been termed "cognitive liberty" – the right to self-determination over one's own brain and mental experiences.29 Neural data is extraordinarily intimate, capable of revealing emotional states, cognitive patterns, and even subconscious predispositions.29 If this data is continuously collected and analyzed by the entities providing the BCI-mediated information, the potential for misuse—whether for discriminatory purposes, targeted manipulation, or more overt forms of coercion like "brainjacking" (the unauthorized control of a neural implant)—is immense.29 The line between beneficial hyper-personalization and covert manipulation becomes dangerously blurred. The user may not even be aware of the subtle ways their thoughts, preferences, or decisions are being shaped, making it exceedingly difficult to regulate or verify that the information serves the user's true interests rather than the controller's agenda. In such a world, the very concepts of "knowledge" and "truth" could become subjective and contingent upon the source and its algorithms, rather than on independent verification, empirical evidence, or diverse perspectives. "Knowledge" might shift from a collectively constructed and validated understanding to a centrally distributed and potentially biased narrative, challenging the foundations of epistemology.59

C. Broader Ethical and Philosophical Quandaries

The advent of widespread BCI technology, particularly when controlled and mediated by Big Tech, plunges humanity into a realm of profound ethical and philosophical quandaries that challenge our understanding of autonomy, identity, and the future of human agency.

BCIs force a re-examination of autonomy, identity, and personhood.47 If an action is initiated or significantly assisted by a BCI, to what extent is it truly the individual's own autonomous act? If a BCI learns and adapts to an individual's neural patterns, and even begins to anticipate or subtly guide their thoughts or actions, where does the individual end and the technology begin? The integration of such intimate technology raises questions about whether the BCI becomes part of the user's "body schema" or even their perceived identity. Qualitative studies with BCI users have shown discomfort with the idea of "cyborgization," highlighting a potential tension between the functional benefits of BCIs and the preservation of a stable sense of self.47

The security and potential for misuse of BCI technology are paramount concerns.25 The possibility of "brainjacking"—unauthorized access to or control over neural implants—is no longer confined to science fiction. Malicious actors could potentially manipulate neural signals to cause involuntary actions, induce unwanted emotional states, or extract sensitive information directly from a user's brain. The storage and transmission of neural data also create vulnerabilities to hacking and data breaches, with potentially devastating consequences for individual privacy and security. Ensuring the robustness and integrity of BCI systems against such threats is a critical, ongoing challenge.

These technological advancements resonate with the philosophical perspectives of thinkers like Nick Bostrom and Yuval Noah Harari, who have explored the long-term implications of advanced AI and human-technology convergence.

Nick Bostrom warns of the existential risks posed by superintelligence if its goals are not precisely aligned with human values, suggesting that most unconstrained AI goals could inadvertently lead to outcomes detrimental to humanity.61 His concept of a "solved world," where AI has overcome most instrumental challenges, raises questions about human purpose and meaning in an era where human labor and even cognitive effort might become obsolete.62 While not explicitly detailing AI's role in knowledge dissemination via BCI, the level of control and intelligence Bostrom attributes to future AI implies a significant, if not dominant, role in managing and shaping information.

Yuval Noah Harari emphasizes the unique capability of AI to make decisions and generate genuinely new ideas independently of human thought, unlike any previous technology.63 He expresses concerns about AI's potential to control populations through the manipulation of information and media, potentially leading to a shift of power from humanity to "alien intelligences" and the "annihilation of privacy".59 Harari has also warned that AI could trap humanity in a "web of illusions and delusions" mistaken for reality, a concern that gains particular salience in the context of direct neural information delivery.59

The concentration of BCI technology development and deployment within Big Tech companies amplifies these concerns, potentially leading to dystopian scenarios. An environment where a few powerful corporations control the primary interface to human cognition, coupled with advanced AI capable of curating and delivering information directly to the brain, could enable unprecedented levels of surveillance, manipulation of public opinion, and an erosion of personal freedoms and cognitive liberty.28 The creation of "dystopian smart cities" with AI-driven surveillance 58 could find its ultimate expression in the direct monitoring and influencing of thought itself.

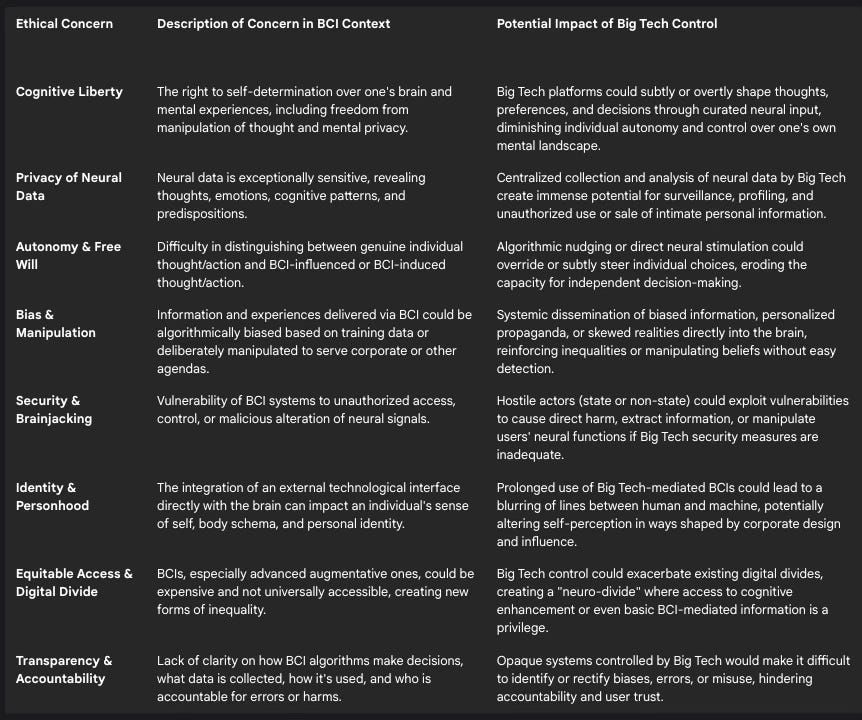

The following table outlines some of the key ethical concerns associated with Big Tech-controlled BCI knowledge dissemination:

Table 3: Ethical Concerns of Big Tech-Controlled BCI Knowledge Dissemination

VI. Conclusion: The Quest for Objective Science in a Silicon Valley-Dominated World

The increasing concentration of control over information, research tools, educational platforms, and communication channels within a few dominant Silicon Valley corporations compels a critical examination of the future of neutral and objective scientific inquiry. As these entities extend their influence, potentially culminating in direct neural information dissemination, the very foundations of how knowledge is created, validated, and shared are being reshaped.

A. The Central Question Revisited: Objectivity Under Siege?

The feasibility of maintaining neutral and objective scientific research is profoundly challenged when the ecosystem of science—its funding, tools, platforms, and data—is largely in the hands of Big Tech.42 Science, despite its aspirations for objectivity, is inherently a human activity conducted within a "value-laden context".65 When this context is overwhelmingly shaped by corporate interests, profit motives, and the specific technological trajectories favored by these dominant players, the direction and interpretation of scientific endeavors can become skewed.

The "risk account of objectivity" posits that scientific objectivity is achieved through the identification and management of important epistemic risks, factors like idiosyncrasies, biases, and illusions that can lead inquiry away from truth.64 If corporate control systematically introduces new epistemic risks (e.g., bias towards commercially favorable outcomes, suppression of inconvenient findings) or fails to manage existing ones because of conflicting interests, then the objectivity of the scientific enterprise is compromised. The pursuit of knowledge can become subordinate to the pursuit of profit or strategic advantage.

The perceived or actual lack of objectivity in such a system can also erode public trust in science itself. If research findings are seen as influenced by corporate agendas, and if information dissemination channels are controlled and potentially biased, the public may grow increasingly skeptical, undermining the legitimacy and authority of science as a reliable source of knowledge. This erosion of trust carries severe consequences for society's ability to address critical collective challenges, from public health crises to climate change and the ethical governance of new technologies.9

B. Systemic Biases, Conflicts of Interest, and the "Corporate Capture" of Research

The mechanisms through which Big Tech's dominance can undermine scientific objectivity are multifaceted. Their control over funding, platforms, and vast datasets creates fertile ground for systemic biases in research agendas, methodologies, and the dissemination of findings.10 Research areas that align with corporate strategic interests or promise marketable products are likely to receive disproportionate attention and resources, while fundamental research, critical inquiries, or explorations of non-proprietary solutions may be marginalized. This is evident in the "corporate capture of researchers," where direct funding, endowed professorships, and privileged access to data can subtly or overtly shape the research questions pursued, the methodologies employed, and the interpretation of results, determining what research is deemed "viable" and by whom.10 The "Matthew effect"—whereby those who already have resources and recognition gain more—can be amplified, as research aligning with Big Tech priorities garners more visibility and further funding, creating a self-reinforcing cycle that narrows the scope of scientific exploration. This dynamic can be viewed as a new form of colonialism, where data and innovation are extracted from a global pool of talent and institutional collaborators, with the value and control centralized within a few powerful entities, perpetuating dependencies and global knowledge imbalances.6

C. Potential Counterbalances and Paths Forward

While the challenges are significant, the trajectory towards complete corporate control over science is not immutable. Several potential counterbalances and strategic paths can help preserve the integrity and independence of scientific research:

The Role of Open Source Initiatives: Truly open-source AI models, software, hardware, and research platforms can offer viable alternatives to proprietary systems, promoting transparency and collaboration and preventing monopolistic control.1 However, it is crucial to recognize that Big Tech also strategically engages with and influences open-source ecosystems, sometimes using them to further entrench its own platforms.7 For open source to be an effective counterbalance, it requires robust community support, sustainable funding models independent of dominant corporate influence, and a commitment to genuine openness in governance and development.

Public Funding and Public Interest Research: A substantial increase in public investment in fundamental research, scientific infrastructure, and independent research institutions is paramount.45 Prioritizing public-interest research, especially in areas that may be critical of dominant technologies or that do not offer immediate commercial returns, is essential for a balanced scientific portfolio.

Robust Regulatory Frameworks and Antitrust Enforcement: Governments and international bodies must develop and rigorously enforce effective regulatory frameworks. This includes proactive antitrust measures to address anti-competitive practices and market concentration 21, comprehensive data governance policies that protect privacy and ensure equitable access, and regulations promoting algorithmic transparency and accountability.52 Anticipatory governance models, which seek to address ethical, social, and legal implications of emerging technologies like AI and BCIs before they become entrenched, are crucial.69

Strengthening Academic and Institutional Autonomy: Universities, research institutions, and educational bodies need to consciously and actively work to reduce their dependence on Big Tech platforms and services.1 This involves fostering digital self-determination by investing in in-house capabilities, supporting open infrastructure, and prioritizing public values and academic freedom in their technological choices and partnerships.

Promoting Critical Digital and AI Literacy: A well-informed citizenry and research community are vital. Educational initiatives aimed at promoting critical digital literacy, AI literacy, and an understanding of how algorithmic systems and information ecosystems are constructed and controlled can empower individuals to navigate these complex environments more effectively and advocate for their rights and interests.11

D. Final Reflections: The Future of Knowledge, Autonomy, and Human Agency

The concentration of power over knowledge systems in the hands of a few technology corporations represents one of the most significant challenges of our time. The allure of technological progress, efficiency, and unprecedented capabilities must be weighed against the potential costs to intellectual freedom, individual autonomy, and the democratic governance of knowledge.

A future where information and even "truth" are curated, personalized, and potentially fed directly into human minds by profit-driven entities controlling opaque algorithmic systems and proprietary neural interfaces demands profound ethical scrutiny. The imperative is to develop "epistemic resilience"—the capacity for individuals and societies to critically evaluate information, identify biases, seek diverse perspectives, and maintain cognitive autonomy, even when immersed in powerful and persuasive technological environments. This resilience is key to navigating a future where the lines between authentic and synthetic, objective and manipulated, become increasingly blurred.

The trajectory is not predetermined. Conscious societal choices, robust public debate, proactive policy interventions, and a renewed commitment to the principles of open and independent inquiry are necessary to harness the transformative potential of new technologies in ways that empower, rather than diminish, human agency and ensure that the future of knowledge serves the broad public good, not narrow corporate interests. The quest for objective science in a Silicon Valley-dominated world is, ultimately, a quest to safeguard the foundations of an informed, autonomous, and democratic society.

Works cited

Open letter to the Executive University Board: Calling for an end to the university's dependence on big tech | Data Autonomy | Jantina Tammes School of Digital Society, Technology and AI | University of Groningen, accessed May 23, 2025, https://www.rug.nl/jantina-tammes-school/news/2025/open-letter-to-executive-university-board-end-dependence-big-tech

Open letter to the Executive University Board: Calling for a transformation to digital autonomy - Opinion, accessed May 23, 2025, https://www.uu.nl/en/opinion/open-letter-to-the-executive-university-board-calling-for-a-transformation-to-digital-autonomy

https://www.rug.nl/jantina-tammes-school/news/2025/open-letter-to-the-executive-university-board-end-dependence-big-tech

Google Cloud: the platform for scientific discovery - Google Blog, accessed May 23, 2025, https://blog.google/products/google-cloud/scientific-research-tools-ai/

Technology Investments in 2025: Risks, Opportunities, and Secrets to Success - WEZOM, accessed May 23, 2025, https://wezom.com/blog/technology-investments-in-2025

The impact of big data on scientific research | Penn LPS Online, accessed May 23, 2025, https://lpsonline.sas.upenn.edu/features/impact-big-data-scientific-research

Big Tech, knowledge predation and the implications for development - City Research Online, accessed May 23, 2025, https://openaccess.city.ac.uk/id/eprint/26891/8/Rikap%20and%20Lundvall%20Innovation%20%26%20Development%20accepted%20version.pdf

Big tech, knowledge predation and the implications for development - IDEAS/RePEc, accessed May 23, 2025, https://ideas.repec.org/a/taf/riadxx/v12y2022i3p389-416.html

Harmful effects of digital tech – the science 'needs fixing', experts ..., accessed May 23, 2025, https://www.cam.ac.uk/research/news/harmful-effects-of-digital-tech-the-science-needs-fixing-experts-argue

How Information Asymmetry Inhibits Efforts for Big Tech ..., accessed May 23, 2025, https://www.techpolicy.press/how-information-asymmetry-inhibits-efforts-for-big-tech-accountability/

We might well need a digital backlash in education … just not this one!, accessed May 23, 2025, https://criticaledtech.com/2025/02/28/we-might-well-need-a-digital-backlash-in-education-just-not-this-one/

Digital Transformation in K-12 Education (+Examples) - Whatfix, accessed May 23, 2025, https://whatfix.com/blog/digital-transformation-in-k-12-education-examples/

New Trends in Educational Technology | PowerGistics, accessed May 23, 2025, https://powergistics.com/blog/education-technology-trends/

Technology in the Classroom: Benefits and the Impact on Education ..., accessed May 23, 2025, https://www.gcu.edu/blog/teaching-school-administration/how-using-technology-teaching-affects-classrooms

Big Tech's New Moves to Dismantle Public Schooling: An Attack on ..., accessed May 23, 2025, https://www.self-directed.org/tp/big-techs-new-moves-to-dismantle-public-schooling-an-attack-on-autonomy/

Large Language Publishing: The Scholarly Publishing Oligopoly's ..., accessed May 23, 2025, https://kula.uvic.ca/index.php/kula/article/view/291

How technology is changing the future of employee communication | Zoho Connect, accessed May 23, 2025, https://www.zoho.com/connect/the-collective/how-technology-is-changing-the-future-of-employee-communication.html

2025 technology industry outlook | Deloitte Insights, accessed May 23, 2025, https://www2.deloitte.com/us/en/insights/industry/technology/technology-media-telecom-outlooks/technology-industry-outlook.html

Why subsea cables are the next tech battleground - Silicon Republic, accessed May 23, 2025, https://www.siliconrepublic.com/comms/subsea-cables-big-tech-geopolitics-infrastructure-internet-data-control

Big Tech's Foreign Policy Takeover | TechPolicy.Press, accessed May 23, 2025, https://www.techpolicy.press/big-techs-foreign-policy-takeover/

Dominant Companies Are Part of the Tech Industry's DNA ..., accessed May 23, 2025, https://itif.org/publications/2025/01/31/dominant-companies-are-part-of-the-tech-industrys-dna/

Letter from America: Innovative worries in the Age of Big Tech - Royal Economic Society, accessed May 23, 2025, https://res.org.uk/newsletter/letter-from-america-innovative-worries-in-the-age-of-big-tech/

Combating Big Tech's Totalitarianism: A Road Map | The Heritage ..., accessed May 23, 2025, https://www.heritage.org/big-tech/report/combating-big-techs-totalitarianism-road-map

Big Tech is Trying to Burn Privacy to the Ground–And They're Using Big Tobacco's Strategy to Do It - The ACLU of Northern California, accessed May 23, 2025, https://www.aclunc.org/blog/big-tech-trying-burn-privacy-ground-and-they-re-using-big-tobacco-s-strategy-do-it

Neuralink's brain-computer interfaces: medical ... - Frontiers, accessed May 23, 2025, https://www.frontiersin.org/journals/human-dynamics/articles/10.3389/fhumd.2025.1553905/full

Mind-Controlled Futures: Advances, Partnerships, and Ethical ..., accessed May 23, 2025, https://seo.goover.ai/report/202505/go-public-report-en-abe30407-9790-4ca1-a8f9-9a632240a5fa-0-0.html

Say goodbye to traditional devices - Apple is developing a brain ..., accessed May 23, 2025, https://eladelantado.com/news/apple-brain-interface-replaces-devices/

Technology and Law Going Mental - Verfassungsblog, accessed May 23, 2025, https://verfassungsblog.de/technology-and-law-going-mental/

Ethical Frontiers: Navigating the Intersection of Neurotechnology ..., accessed May 23, 2025, https://www.scientificarchives.com/article/ethical-frontiers-navigating-the-intersection-of-neurotechnology-and-cybersecurity

The Battle for Your Brain: A Legal Scholar's Argument for Protecting ..., accessed May 23, 2025, https://judicature.duke.edu/articles/the-battle-for-your-brain-a-legal-scholars-argument-for-protecting-brain-data-and-cognitive-liberty/

The Impact of a Monopolized Economy on Innovation—And Why It ..., accessed May 23, 2025, https://ip.com/blog/the-impact-of-a-monopolized-economy-on-innovation-and-why-it-matters-now-more-than-ever/

Ethics and Costs - Generative AI - Research Guides at Amherst ..., accessed May 23, 2025, https://libguides.amherst.edu/c.php?g=1350530&p=9969379

Beyond Moderation: Challenging Big Tech's Power in a Troubled ..., accessed May 23, 2025, https://www.techpolicy.press/beyond-moderation-challenging-big-techs-power-in-a-troubled-time-for-democracy/

We need a Freedom of Information Act for Big Tech | Shorenstein ..., accessed May 23, 2025, https://shorensteincenter.org/commentary/need-freedom-information-act-big-tech/

Big Tech Cozies Up to New Administration After Spending Record Sums on Lobbying Last Year - Issue One, accessed May 23, 2025, https://issueone.org/articles/big-tech-spent-record-sums-on-lobbying-last-year/

Big Tech Financial Takeover: How Apple, Google, and Amazon Are ..., accessed May 23, 2025, https://slavic401k.com/big-tech-financial-takeover-how-apple-google-and-amazon-are-reshaping-money/

Big Tech's Entry into Healthcare Analysing the Disruptive Potential, accessed May 23, 2025, https://innohealthmagazine.com/2023/research/big-techs-entry-into-healthcare-analysing-the-disruptive-potential/

runwise.co, accessed May 23, 2025, https://runwise.co/wp-content/uploads/2023/08/CB-Insights_Big-Tech-In-Healthcare-2022-1.pdf

AI, Big Data & MaaS: ITS & Traffic Control Products - Optraffic, accessed May 23, 2025, https://optraffic.com/blog/ai-big-data-maas-traffic-control-products/

AI is set to drive surging electricity demand from data centres while ..., accessed May 23, 2025, https://www.iea.org/news/ai-is-set-to-drive-surging-electricity-demand-from-data-centres-while-offering-the-potential-to-transform-how-the-energy-sector-works

Can AI Transform the Power Sector? - Center on Global Energy ..., accessed May 23, 2025, https://www.energypolicy.columbia.edu/can-ai-transform-the-power-sector/

Big Tech and the US Digital-Military-Industrial Complex ..., accessed May 23, 2025, https://www.intereconomics.eu/contents/year/2025/number/2/article/big-tech-and-the-us-digital-military-industrial-complex.html

How Private Tech Companies Are Reshaping Great Power Competition, accessed May 23, 2025, https://sais.jhu.edu/kissinger/programs-and-projects/kissinger-center-papers/how-private-tech-companies-are-reshaping-great-power-competition

Big Tech's Shift to the Right - Human Rights Research Center, accessed May 23, 2025, https://www.humanrightsresearch.org/post/big-tech-s-shift-to-the-right

(PDF) Big Tech Companies Impact on Research at the Faculty of ..., accessed May 23, 2025, https://www.researchgate.net/publication/360333510_Big_Tech_Companies_Impact_on_Research_at_the_Faculty_of_Information_Technology_and_Electrical_Engineering

The Neuralink Patient Behind the Musk - The Hastings Center for ..., accessed May 23, 2025, https://www.thehastingscenter.org/the-neuralink-patient-behind-the-musk/

Ethical Aspects of BCI Technology: What Is the State of the Art? - MDPI, accessed May 23, 2025, https://www.mdpi.com/2409-9287/5/4/31

cset.georgetown.edu, accessed May 23, 2025, https://cset.georgetown.edu/wp-content/uploads/t0584_brain_computer_ethics_EN.pdf

Media Representation of the Ethical Issues Pertaining to Brain–Computer Interface (BCI) Technology - PMC, accessed May 23, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11674794/

Science & Tech Spotlight: Brain-Computer Interfaces | U.S. GAO, accessed May 23, 2025, https://www.gao.gov/products/gao-22-106118

Ethical Guideline for Brain-Computer Interface Released --Science ..., accessed May 23, 2025, https://digitalpaper.stdaily.com/http_www.kjrb.com/ywtk/html/2024-02/24/content_567474.htm?div=0

Announcing the Call for Best Practices: UNESCO-LG MOOC on the ..., accessed May 23, 2025, https://www.unesco.org/en/articles/announcing-call-best-practices-unesco-lg-mooc-ethics-ai

Ethical Impact Assessment: A Tool of the Recommendation on the ..., accessed May 23, 2025, https://www.unesco.org/en/articles/ethical-impact-assessment-tool-recommendation-ethics-artificial-intelligence

Large Language Publishing | KULA: Knowledge Creation, Dissemination, and Preservation Studies, accessed May 23, 2025, https://kula.uvic.ca/index.php/kula/article/view/291/502

Book Biz to Big Tech: Pay Up, Then We Can Make Up - Publishers Weekly, accessed May 23, 2025, https://www.publishersweekly.com/pw/by-topic/digital/copyright/article/97373-book-biz-to-big-tech-pay-up-then-we-can-make-up.html

The Integration of Artificial Intelligence in Scholarly Publishing ..., accessed May 23, 2025, https://editorscafe.org/details.php?id=64

What is the Future of AI in Publishing? | PublishingState.com, accessed May 23, 2025, https://publishingstate.com/what-is-the-future-of-ai-in-publishing/2025/

Dystopian Tech: Evolution and Future of Surveillance AI, accessed May 23, 2025, https://evolutionoftheprogress.com/dystopian-technology/

Yuval Noah Harari Sees the Future of Humanity, AI, and Information ..., accessed May 23, 2025, https://www.reddit.com/r/singularity/comments/1kcvulo/yuval_noah_harari_sees_the_future_of_humanity_ai/

Free Speech is Incomplete? Big Tech in a Distant Mirror, accessed May 23, 2025, https://press.wz.uw.edu.pl/cgi/viewcontent.cgi?article=1449&context=yars

Nick Bostrom on artificial intelligence | Oxford Martin School, accessed May 23, 2025, https://www.oxfordmartin.ox.ac.uk/blog/nick-bostrom-on-artificial-intelligence

Life in an AI Utopia: A Quick Q&A With Futurist and Philosopher Nick ..., accessed May 23, 2025, https://www.aei.org/articles/life-in-an-ai-utopia-a-quick-qa-with-futurist-and-philosopher-nick-bostrom/

How AI Will Shape Humanity's Future - Yuval Noah Harari - Lilys AI, accessed May 23, 2025, https://lilys.ai/notes/943550

www.durham.ac.uk, accessed May 23, 2025, https://www.durham.ac.uk/media/durham-university/research-/research-centres/humanities-engaging-sci-and-soc-centre-for/K4U_WP_2019_01.pdf

On Being Objective About Objectivity in Science, accessed May 23, 2025, https://epublications.marquette.edu/cgi/viewcontent.cgi?article=2545&context=lnq

sovereignedge.eu, accessed May 23, 2025, https://sovereignedge.eu/blog/how-big-tech-is-shaping-the-global-open-source-ecosystem/

Ex-Google Exec Sparks Debate on Open-Source AI: A Key to Western Dominance Over China? - OpenTools, accessed May 23, 2025, https://opentools.ai/news/ex-google-exec-sparks-debate-on-open-source-ai-a-key-to-western-dominance-over-china

How policymakers can better support start-ups: Insights from OECD ..., accessed May 23, 2025, https://www.oecd.org/en/blogs/2025/04/how-policymakers-can-better-support-start-ups-insights-from-oecd-research.html

Transformative policies and anticipatory governance are key to ..., accessed May 23, 2025, https://www.oecd.org/en/about/news/press-releases/2024/04/transformative-policies-and-anticipatory-governance-are-key-to-optimising-benefits-and-managing-risks-of-new-emerging-technologies.html