Please read the article “Wiley Leans into AI. The Community Should Lean with Them.” and list all the important lessons for AI makers, AI users (of any kind), and regulators.

ChatGPT-4o’s answer:

Here are the key lessons from "Wiley Leans into AI. The Community Should Lean with Them" for AI makers, AI users, and regulators:

For AI Makers:

Ethical Standards and Attribution: Establishing frameworks for attribution in AI models, especially at the output stage, ensures that content originators receive proper credit, even in complex, large models.

Content-Specific Training: Differentiating licensing for foundational AI models, custom fine-tuning, and Retrieval-Augmented Generation (RAG) is essential. RAG, which combines external retrieval with language generation, is particularly suited to scientific and scholarly use because that domain demands high accuracy.

Collaborative Development: Engaging publishers and authors in licensing arrangements fosters responsible AI use, allowing fair compensation and safeguarding intellectual property. AI makers are encouraged to adopt structured licensing rather than rely on fair use claims.

Next-Gen Detection Software: Developing tools to detect AI-driven plagiarism and unethical usage is essential for maintaining research integrity.

For AI Users (Researchers, Authors, Publishers):

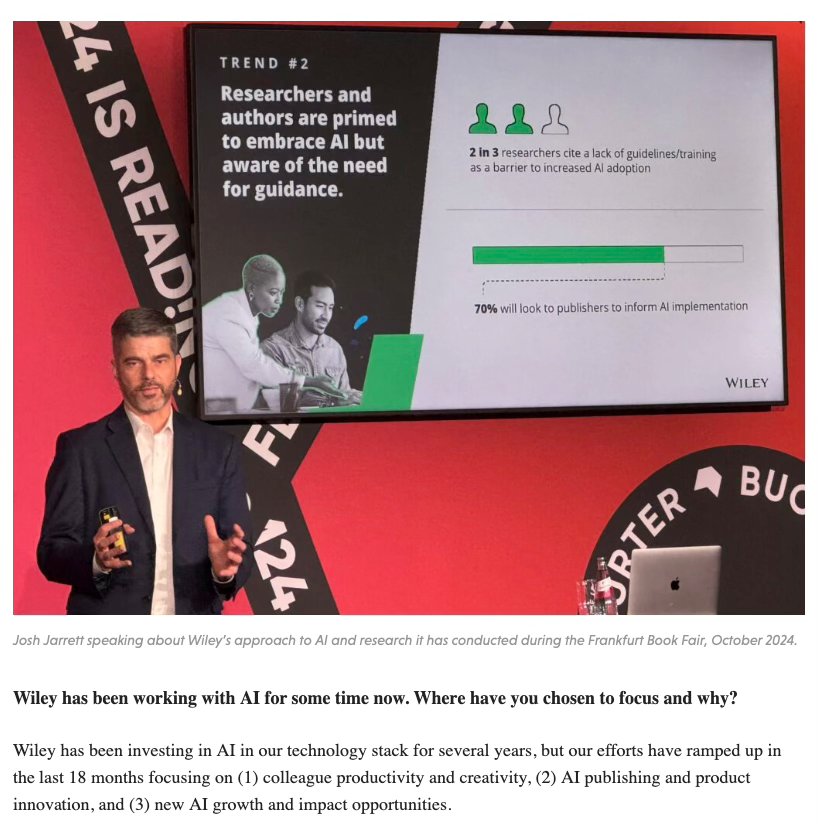

Clear Guidelines and Training: AI use requires guidelines that balance its benefits against ethical concerns, especially in peer-reviewed research. The study cited in the article found that 70% of researchers expect publishers to provide guidance on responsible AI use.

Responsible AI Use in Research: Researchers and authors are advised to transparently disclose when AI assists with data analysis, hypothesis generation, or drafting to maintain integrity and reproducibility.

Transparency in AI Usage: Clearly disclosing AI use in the research process, such as help with data analysis or manuscript drafting, supports academic integrity and lets others accurately assess and build on AI-assisted work.

Peer Review Evolution: Peer review processes must adapt to evaluate AI-assisted research. This may include reviewer training and new roles to ensure AI-driven research upholds scholarly rigor.

For Regulators:

Collaborative Policy Development: Effective AI regulation will require collaboration across publishers, institutions, and AI makers to create an ecosystem aligned with transparency, reproducibility, and open science values.

Incorporating AI Disclosures: Guidelines mandating AI disclosure help maintain research transparency and credibility, enabling the academic community to monitor and understand AI's role in generating research insights.

Ethics in Data Use: Policymakers should address data privacy, algorithmic bias, and ethical AI usage to ensure AI tools enhance rather than replace the intellectual rigor of research.

These lessons emphasize a balanced approach to AI development and usage, highlighting collaboration, responsible use, and evolving regulatory practices to ensure AI serves scholarly goals responsibly.

Claude’s answer:

Here are the key lessons from the article for different stakeholders:

For AI Makers:

High-quality, authorized training data is crucial; there's a growing legitimate marketplace for licensing content

Different AI approaches have different use cases:

Large Language Models (LLMs) for general language understanding

Fine-tuned models for specialized domain knowledge

Retrieval-Augmented Generation (RAG) for accuracy and authoritative responses

Attribution remains a technical challenge, especially at the training stage, because information is diffused across billions of parameters

Fair use claims may not hold up when there's a robust licensing marketplace

For AI Users (Researchers/Authors):

Clear guidelines are needed: 70% of authors look to publishers for guidance on responsible AI use

Common questions need addressing:

Acceptable uses of AI for language refinement

Boundaries for data analysis

Appropriate use for generating hypotheses

Identifying research gaps

There's a growing divide between early adopters and skeptics that needs bridging

Transparency is crucial: users should disclose when and how AI tools were used in their work

For Regulators:

The line between human-authored and machine-created content is blurring, requiring evolving regulatory frameworks

New standards are needed for:

AI disclosures in research

Peer review processes for AI-assisted work

Data privacy protections

Addressing algorithmic bias

Copyright protection in the AI era

Cross-industry collaboration between publishers, institutions, and regulatory bodies is essential

Clear frameworks are needed for:

Content licensing

Attribution standards

Ethical use guidelines

Protecting intellectual property rights

General Lessons for All Stakeholders:

Proactive engagement is better than waiting to be shaped by AI developments

Society benefits when AI models are trained on high-quality, authoritative content

Transparency and trust are fundamental principles that must be maintained

The ecosystem needs to balance innovation with protection of rights and maintaining research integrity

Common standards and guidelines are essential for responsible AI development and use

The focus should be on using AI to enhance, not replace, human intellectual work

Grok’s answer:

Here are the important lessons for AI makers, users, and regulators based on the article “Wiley Leans into AI. The Community Should Lean with Them.”:

For AI Makers:

Ethical Development: AI development should include mechanisms to ensure ethical use, such as preventing plagiarism or misconduct. This involves creating technologies that can detect unethical use of AI in scholarly work.

Licensing and Copyright: Engage with publishers and copyright holders through structured licensing agreements rather than relying on fair use. This helps preserve the integrity of copyright and ensures that authors and publishers are fairly compensated.

Collaboration with Publishers: Work closely with scholarly publishers to understand and meet the needs of the academic community, especially in terms of providing accurate, authoritative content for AI training.

Transparency in Training: Address how AI models handle content attribution, especially given that information in a trained model is diffused across its parameters rather than compartmentalized.

For AI Users (Researchers, Authors, etc.):

Guidance on AI Use: Seek out and follow publishers' guidelines on how AI can be used ethically and effectively in research, from hypothesis generation to manuscript editing.

Training and Education: Look for training or guidance offered by publishers on AI tools, understanding their ethical boundaries and practical applications in research.

Disclosure of AI Use: When AI is used in research, disclosure should be clear and detailed to maintain transparency and allow for proper peer review and reproducibility.

Peer Review Evolution: Understand that the role of AI in research might necessitate changes in peer review processes, possibly requiring new skills or even roles to evaluate AI-assisted research effectively.

For Regulators:

Policy Development: Develop policies that address the evolving use of AI in scholarly publishing, focusing on ethical standards, copyright, and data privacy.

Support for Licensing Frameworks: Encourage and support the creation of licensing frameworks that protect copyright while allowing for AI development, ensuring a balance between innovation and rights protection.

Promote Transparency: Advocate for transparency in how AI is used in research, including how data is sourced and how AI contributions are disclosed in published works.

Collaborative Efforts: Facilitate collaboration among publishers, AI developers, and academic institutions to create standards and practices that uphold the integrity of scholarly work while integrating AI.

Addressing Bias and Privacy: Ensure regulations consider the implications of AI in terms of algorithmic bias and data privacy, aiming to protect individuals and maintain the credibility of research outcomes.

These lessons highlight the need for a collaborative approach among all stakeholders to ensure that AI's integration into scholarly publishing is beneficial and ethical, and that it maintains the quality of, and trust in, academic work.