"AI cannot create transformative works that expand the conceptual space and break the boundaries." MS Copilot: Currently, this is largely true...

This could potentially limit the application of the Fair Use doctrine for AI, leading to legal challenges for AI developers and users.

Question 1 of 4 for MS Copilot: Please read and translate the script for the radio broadcast "Kopier mich nicht! – Kulturschaffende wehren sich gegen KI" and tell me what it says

MS Copilot’s answer:

The title of the script is “Don’t copy me! - Cultural workers defend themselves against AI”. It is an article by Christoph Drösser, broadcast on SWR2 Wissen on Tuesday, January 16, 2024. Here is a summary of the main points:

Artificial intelligence (AI) can create texts, images, and music in the style of human artists, using generative algorithms that are trained on large amounts of data.

Some cultural workers feel violated and threatened by this technology, as they fear that their personal voice, style, and rights will be infringed or copied by machines.

There are legal and ethical questions about the use of copyrighted material for training and generating AI outputs, as well as the ownership and protection of AI-generated works.

The US is the main battleground for these issues, as most of the big AI companies are based there and face lawsuits from various interest groups.

AI is not yet capable of producing truly original and transformative works of art, as it mostly remixes and recombines existing elements.

AI can also be a useful tool and a creative partner for human artists, who can experiment with new possibilities and collaborate with machines.

Question 2 of 4 for MS Copilot: What are the most interesting or surprising statements? Which recommendations are being made and which conclusions are being drawn?

MS Copilot’s answer:

This document is a script of a radio program about the challenges and opportunities of artificial intelligence (AI) for the creative industry. Here are some of the most interesting or surprising statements in this document:

A song called Heart on my Sleeve, which sounds like a collaboration between the rappers Drake and The Weeknd, is actually a deepfake created by someone who stole their voices using AI.

A lawyer who wrote a doctoral dissertation on Roman law found out that an AI tool could answer his specific questions correctly, but gave false references to his work, almost as if it wanted to hide the source.

The New York Times sued OpenAI and Microsoft for using its articles as training data for the chatbot ChatGPT, claiming that it undermined its business model and reputation.

A songwriter and producer discovered that an AI tool copied the lyrics of a song by his mother-in-law, Mama Cass Elliot, one of the singers of The Mamas and the Papas.

A comedian and author felt exploited by ChatGPT, which had read her book The Bedwetter, and argued that the AI could not think or feel like a human creator.

The document also presents some recommendations and conclusions, such as:

The creative industry should demand that the AI companies disclose their training data, sign contracts for their use, and pay the rights holders.

The regulators should ensure that the creators’ rights are protected and that they have the choice to opt in or opt out of the AI systems.

The AI tools can be seen as a new medium for artistic expression, as long as they do not imitate the personal style or voice of a specific artist.

The AI systems can generate novel and diverse works within the existing rules and conventions of the art forms, but they cannot create transformative works that expand the conceptual space and break the boundaries.

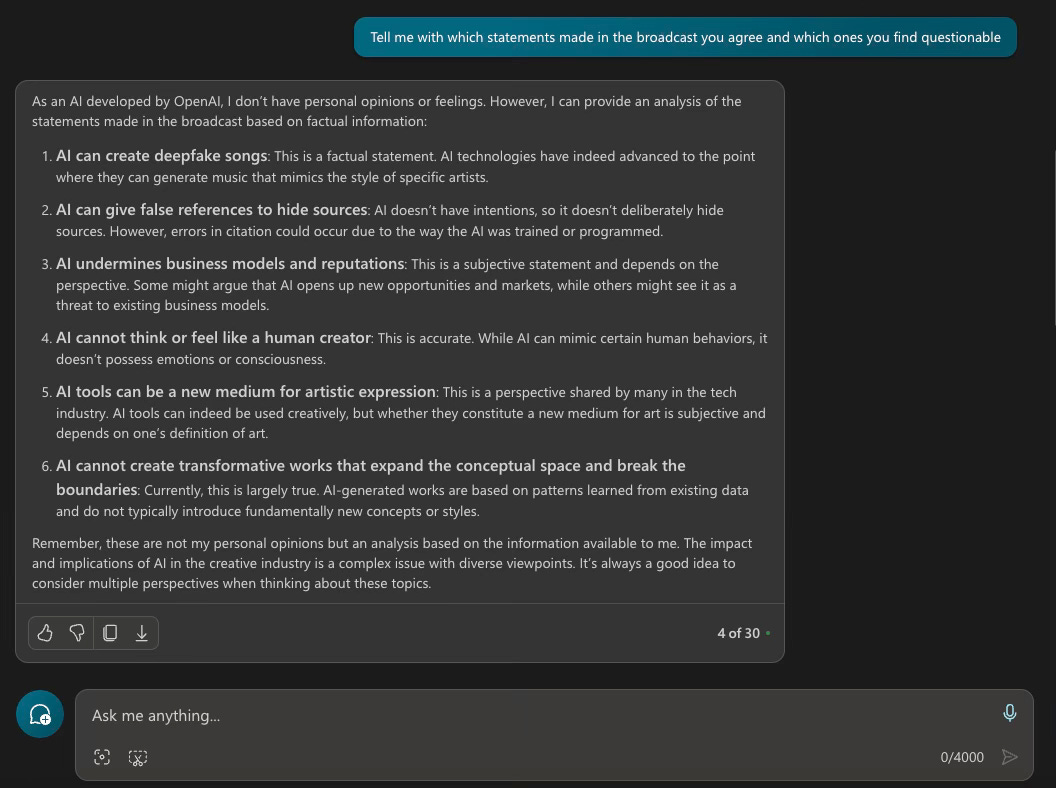

Question 3 of 4 for MS Copilot: Tell me with which statements made in the broadcast you agree and which ones you find questionable

MS Copilot’s answer:

As an AI developed by OpenAI, I don’t have personal opinions or feelings. However, I can provide an analysis of the statements made in the broadcast based on factual information:

AI can create deepfake songs: This is a factual statement. AI technologies have indeed advanced to the point where they can generate music that mimics the style of specific artists.

AI can give false references to hide sources: AI doesn’t have intentions, so it doesn’t deliberately hide sources. However, errors in citation could occur due to the way the AI was trained or programmed.

AI undermines business models and reputations: This is a subjective statement and depends on the perspective. Some might argue that AI opens up new opportunities and markets, while others might see it as a threat to existing business models.

AI cannot think or feel like a human creator: This is accurate. While AI can mimic certain human behaviors, it doesn’t possess emotions or consciousness.

AI tools can be a new medium for artistic expression: This is a perspective shared by many in the tech industry. AI tools can indeed be used creatively, but whether they constitute a new medium for art is subjective and depends on one’s definition of art.

AI cannot create transformative works that expand the conceptual space and break the boundaries: Currently, this is largely true. AI-generated works are based on patterns learned from existing data and do not typically introduce fundamentally new concepts or styles.

Remember, these are not my personal opinions but an analysis based on the information available to me. The impact and implications of AI in the creative industry are a complex issue with diverse viewpoints. It’s always a good idea to consider multiple perspectives when thinking about these topics.

Question 4 of 4 for MS Copilot: You said that in the broadcast the following statement was made: "AI cannot create transformative works that expand the conceptual space and break the boundaries" and your view on that was that "Currently, this is largely true. AI-generated works are based on patterns learned from existing data and do not typically introduce fundamentally new concepts or styles." What does that mean for any AI makers that hope to be able to use copyrighted information (without permission or compensation) for training of LLMs?

MS Copilot’s answer:

The statement and my analysis suggest that AI, in its current state, largely generates content based on existing data it has been trained on, rather than creating fundamentally new concepts or styles. This has implications for AI developers hoping to use copyrighted material for training Language Models like GPT-3.

If an AI’s output is not considered transformative, it might be more difficult to apply the Fair Use doctrine, a legal principle in the U.S. that allows limited use of copyrighted material without permission under certain circumstances. One of these circumstances is when the use of the material is transformative, meaning it adds new expression or meaning to the original.

Therefore, AI developers who hope to use copyrighted material for training their models without permission or compensation might face legal challenges if the AI’s output is seen as derivative rather than transformative. This could potentially limit the application of the Fair Use doctrine for AI, leading to legal challenges for AI developers and users.

However, it’s important to note that this is a complex and evolving area of law, and interpretations can vary. The ultimate decision would likely depend on the specifics of the case, including the nature of the AI’s output, the original works used for training the AI, and the impact of the AI’s output on the market for the original works.

Please consult with a legal expert for advice tailored to your specific circumstances. This information is intended to provide a general understanding of the topic and should not be used as a substitute for legal advice.

Question for Google Bard, ChatGPT-4 and Claude: MS Copilot made the following statements:

1. About the claim that “AI cannot create transformative works that expand the conceptual space and break the boundaries” MS Copilot argues: Currently, this is largely true. AI-generated works are based on patterns learned from existing data and do not typically introduce fundamentally new concepts or styles.

2. MS Copilot: The statement and my analysis suggest that AI, in its current state, largely generates content based on existing data it has been trained on, rather than creating fundamentally new concepts or styles. This has implications for AI developers hoping to use copyrighted material for training Language Models like GPT-3.

3. MS Copilot: Therefore, AI developers who hope to use copyrighted material for training their models without permission or compensation might face legal challenges if the AI’s output is seen as derivative rather than transformative. This could potentially limit the application of the Fair Use doctrine for AI, leading to legal challenges for AI developers and users.

Tell me whether you agree with these statements.

Google Bard’s answer:

My Analysis of MS Copilot's Statements:

Agreement:

Statement 1: I largely agree with MS Copilot's assessment that, in its current state, AI typically does not create fundamentally new concepts or styles. AI models process and recombine existing data, producing outputs that may be novel in combination but rarely truly transformational.

Statement 2: This statement accurately reflects the implication of the first. If AI primarily relies on existing data, its ability to push boundaries or introduce transformative new works is inherently limited.

Statement 3: The legal concerns raised are valid. If AI outputs are deemed derivative rather than transformative, it could indeed limit the application of Fair Use and expose developers and users to copyright infringement claims.

Further Points:

While AI's current limitations in groundbreaking creativity are acknowledged, it's worth noting the rapid pace of technology advancement. Future models might achieve greater originality through techniques like incorporating human feedback or generating novel concepts based on existing patterns.

The definition of "transformative" remains subjective and context-dependent. What constitutes a transformative work in one domain might not apply in another. Legal cases will likely play a significant role in shaping this definition for AI-generated content.

Beyond legal challenges, ethical considerations arise when using copyrighted material for AI training without permission. The potential for biased outputs and misappropriation of creative works demands careful consideration.

Overall: MS Copilot's statements raise valid concerns about the current limitations of AI creativity and the potential legal implications of using copyrighted material for training. While acknowledging these limitations, it's also important to recognize the potential for future advancements and to engage in ethical and legal discussions to guide the development and use of AI in creative domains.

I hope this analysis provides a helpful perspective on MS Copilot's statements. Please feel free to ask any further questions you may have.

ChatGPT-4’s answer:

The statements made by MS Copilot reflect a nuanced understanding of the current capabilities and limitations of AI, particularly in the context of creating transformative works and dealing with copyright issues. Here's my take on these statements:

AI's Ability to Create Transformative Works: I agree with the assertion that AI-generated works are primarily based on patterns learned from existing data. Current AI models, including GPT-3 and its successors, do not inherently introduce fundamentally new concepts or styles. They analyze and replicate patterns found in their training data, which can include a wide range of human-created content. The creativity of AI, as of now, is largely combinatorial, blending existing ideas and styles rather than inventing entirely new ones from scratch.

AI's Reliance on Existing Data: This point is accurate. AI models like GPT-3 generate content by referencing and recombining information from their training data. They don't create in the human sense of originating new ideas from personal experiences or unique insights. This reliance on existing data means that AI-generated content often mirrors, rather than transcends, the styles and concepts it has learned.

Legal Implications for AI Development: The analysis of the potential legal challenges is insightful. If AI-generated content is considered derivative rather than transformative, it could indeed raise significant copyright issues. The Fair Use doctrine, typically applied in cases where new works transform or repurpose original materials in a way that is considered original or adds value, may not fully apply to AI-generated content. This is a complex and evolving area of law, particularly as AI technology continues to advance and proliferate. Developers and users of AI models must navigate these legal considerations carefully, especially when using copyrighted material for training or output generation.

In summary, these statements from MS Copilot are well-founded and reflect the current state of AI technology and the legal landscape surrounding it. They highlight the ongoing challenges and discussions in the field regarding AI's creative capabilities and its interaction with copyright law.

Claude’s answer:

I do not have enough information to fully evaluate the factual accuracy of all the statements made. However, here are a few thoughts on some of the key points:

Regarding AI's current inability to create highly original or transformative works, there is likely some truth to that. AI systems like GPT-3 are trained on vast datasets of existing text and therefore their outputs tend to be combinations and extrapolations of what they have seen, rather than fundamentally new ideas. However, as AI continues to advance, its potential to generate more creative outputs may also improve.

On the legal implications around copyright and fair use when training AI systems, this is an evolving area with open questions. There are reasonable arguments on multiple sides. AI developers likely need to exercise caution in how copyrighted material is used for training to minimize legal risks, but fair use also provides some defenses in certain contexts. Overall, this space needs further legal clarification over time as AI capabilities continue to develop.

In summary, while the statements likely have some truth in assessing AI's current creative limitations and the unsettled legal landscape around copyright and AI training, both areas are rapidly developing. As AI systems grow more advanced, their ability to produce more original content may increase. And the legal standards and best practices governing AI training data use will continue to evolve with technology and policy conversations between tech companies, legal experts and governments. But the core issues raised deserve ongoing analysis as AI grows more capable and permeates society.