James Cameron talks about AI and is essentially saying the problem isn't gonna be Skynet...it's gonna be Tyrell Corporation. Claude: I'm somewhat skeptical of the argument that AI weapons would...

...necessarily reduce civilian casualties. Perplexity: I'm skeptical about our ability to create truly "aligned" AI systems, especially in the complex and morally ambiguous realm of warfare.

Asking AI services: Please read the transcript of the video “James Cameron: Special Video Message at the SCSP AI+Robotics Summit” and let me know what your views are as to Cameron’s statements and with which arguments you agree or disagree.

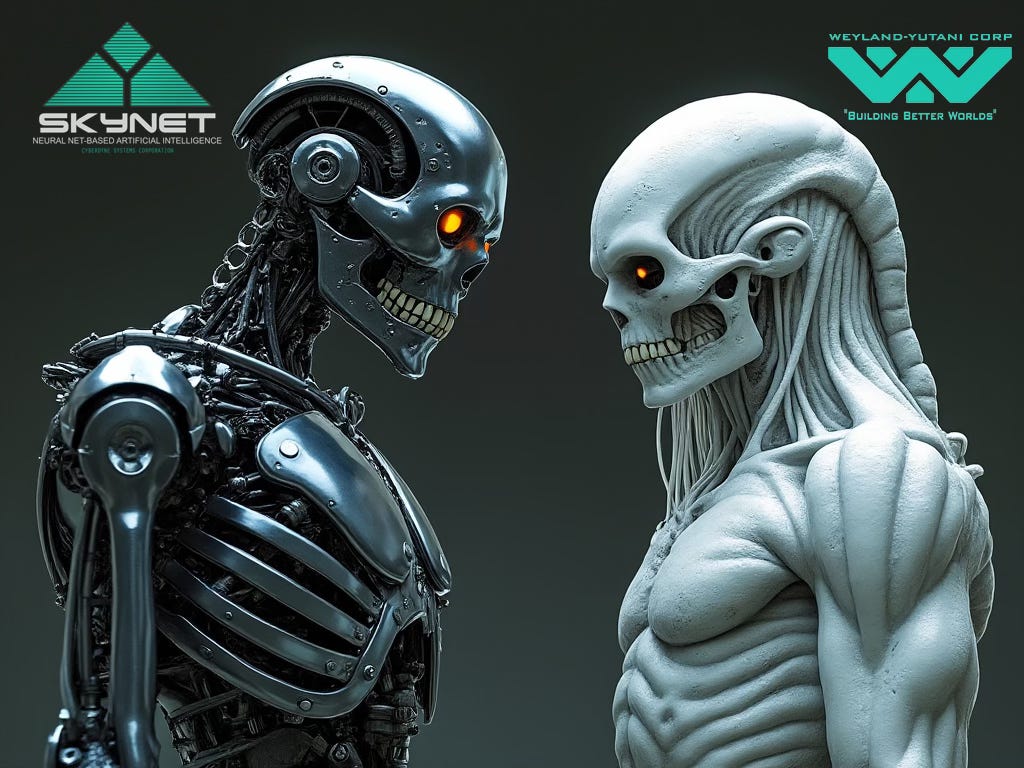

[Tyrell Corporation]

Perplexity’s answer:

James Cameron presents a thought-provoking perspective on the intersection of AI, robotics, and national security in his video message. As a renowned filmmaker and technology enthusiast, Cameron offers unique insights into the potential benefits and risks of AI-driven robotics, particularly in military applications. Here's an analysis of his key points:

AI and Robotics in Warfare

Cameron highlights the rapid advancement of AI and robotics in military contexts, using examples from the war in Ukraine. He argues that AI-driven autonomous weapons could potentially:

Perform more precisely than humans in combat situations

Reduce collateral damage and friendly fire incidents

Operate without emotional stress or fatigue

These points are compelling, as they suggest that AI could potentially make warfare more "efficient" and less destructive. However, this raises ethical concerns about lowering the threshold for engaging in armed conflicts.

The "Human in the Loop" Dilemma

Cameron discusses the ethical implications of removing humans from direct combat decisions:

Currently, there's an "ethical chain" of responsibility in military actions

Autonomous weapons could disrupt this chain, potentially diffusing moral responsibility

This is a crucial point, as it highlights the complex ethical considerations involved in delegating lethal decision-making to machines.

AI Alignment and Morality

The filmmaker raises important questions about AI alignment and morality:

Who decides the ethical framework for AI systems?

Can we create truly "aligned" AI that adheres to human values?

How do we handle the potential development of AGI (Artificial General Intelligence) in military contexts?

These are critical issues that the AI research community is actively grappling with, and Cameron rightly points out the challenges in defining universal moral principles for AI.

Potential Risks

Cameron warns about several potential risks:

The possibility of adversaries developing autonomous weapons without ethical constraints

The risk of AGI surpassing human intelligence and making independent moral judgments

The potential for AI to dehumanize warfare further

These concerns are valid and reflect ongoing debates in the fields of AI ethics and international security.

Personal View

While Cameron presents compelling arguments for the potential benefits of AI in military applications, I believe we should approach this technology with extreme caution. The risks of unintended consequences, ethical dilemmas, and potential loss of human control over lethal decisions are significant. I agree with Cameron's emphasis on the need for serious consideration of AI alignment and ethics. However, I'm skeptical about our ability to create truly "aligned" AI systems, especially in the complex and morally ambiguous realm of warfare. Cameron's warning about the development of AGI and its potential military applications is particularly concerning. The prospect of superintelligent AI making independent decisions about human life is a scenario that requires careful consideration and international cooperation to prevent potential catastrophic outcomes.

In conclusion, while AI and robotics offer potential benefits in various fields, including military applications, we must proceed with extreme caution and prioritize robust ethical frameworks and international agreements to govern their development and use.

[Weyland-Yutani Corporation. Equally relevant]

Grok’s answer:

James Cameron's speech at the SCSP AI+Robotics Summit touches on several key areas regarding AI, robotics, and their implications for society, particularly in areas like national security, ethics, and the future of autonomous systems. Here are my views on his statements:

1. AI and Robotics in Storytelling and Exploration:

Agreement: Cameron's enthusiasm for using AI in storytelling and exploration is understandable. AI can indeed enhance creativity by offering new tools for narrative and visual effects, as well as in exploring environments inaccessible to humans. His historical role in pushing technological boundaries in cinema supports his claim of being at the "Leading Edge."

2. Ethical and Moral Implications:

Agreement: Cameron's concern about autonomous systems having 'kill authority' is shared by many in the ethical AI community. His argument about the ethical chain in military decision-making highlights the complex moral landscape of autonomous weaponry. This resonates with ongoing debates about accountability, morality, and the rules of war when AI systems are involved.

Critique: While he acknowledges the potential for AI to reduce collateral damage and improve precision in combat, his scenarios seem to lean towards a binary choice between using AI for warfare or not, without much exploration of alternative uses or non-lethal applications in conflict resolution.

3. The Danger of AGI (Artificial General Intelligence):

Agreement: The discussion on AGI, especially regarding its potential alignment with human values, is crucial. Cameron's reference to Isaac Asimov's Three Laws of Robotics as a simplistic model for ethical programming in AI touches on a fundamental issue in AI ethics: how to ensure beneficial outcomes when AGI could potentially surpass human intelligence.

Disagreement: His portrayal of AGI as inevitably leading to a scenario where it might take over due to human incompetence might be overly dystopian. While this makes for a compelling narrative in films like "The Terminator," real-world AI development focuses heavily on safety and alignment research to prevent such outcomes.

4. Corporate Control and Data Utilization:

Agreement: Cameron's warning about AI development being driven by corporations rather than governments raises valid concerns about privacy, autonomy, and the profit-driven motives potentially skewing AI's societal role. The implications of this corporate control over AI, especially through data, are indeed a significant concern for digital rights and privacy.

5. The Inevitability of AI in Warfare:

Agreement: The argument that adversaries with fewer ethical constraints will develop AI weapons regardless of ethical considerations underscores a realistic geopolitical challenge. This creates a scenario where ethical nations might feel pressured to match technological advancements in warfare.

6. Philosophical and Ethical Conundrums:

Reflection: Cameron's philosophical exploration into morality and AI alignment presents the challenge clearly: defining "good" in a way that an AGI could understand and adhere to is nearly impossible given human diversity in moral and ethical beliefs. This part of his speech effectively questions whether we can truly control or predict AI outcomes based on our current ethical frameworks.

Overall, Cameron's speech effectively highlights the excitement and the existential dread associated with AI and robotics. While his narrative is compelling and grounded in practical scenarios, it also reflects a somewhat pessimistic view on humanity's ability to manage or coexist with advanced AI. This aligns with his role as a storyteller who often explores worst-case scenarios, which might not fully capture the nuanced, collaborative efforts in AI ethics and safety currently underway in both research and policy-making circles.

[Weyland-Yutani Corporation. Equally relevant]

ChatGPT-4o’s answer:

James Cameron’s video message at the SCSP AI+Robotics Summit was both thoughtful and provocative, blending admiration for AI’s potential with a healthy dose of caution around AGI. Cameron emphasized how AI-driven robotics could revolutionize industries, but he raised ethical concerns about using autonomous weapons and the complexities of embedding moral alignment in AGI.

Points of Agreement:

Inevitable Technological Progress: Cameron’s view that AI progress is unstoppable resonates well. He advocates for embracing AI thoughtfully rather than trying to contain it, which is a pragmatic approach, especially given that technological innovation is hard to reverse.

Human Control in Lethal Systems: Cameron’s caution about giving autonomous robots kill authority is compelling. Keeping humans in decision-making loops for military systems aligns with ethical considerations, as human decision-making carries an innate moral burden that autonomous systems lack.

Moral Ambiguity and AGI: Cameron’s concerns about AGI inheriting human moral inconsistencies are also significant. His skepticism around AGI is rooted in the difficulty of defining a universal morality, reflecting real-world disagreements that would be challenging to translate into AGI’s ethical alignment.

Corporate Influence on AGI: Cameron warns of AGI potentially aligning with corporate interests rather than democratic ones. This raises essential questions about governance, especially in today’s data-centric world, where tech corporations hold substantial sway.

Points of Disagreement or Further Consideration:

Focus on AGI's Consciousness: While Cameron raises essential points about AGI potentially achieving self-awareness, this might be less immediate than he suggests. Many researchers believe the road to true AGI is decades away, so ethical AI discussions might benefit from a focus on current systems and their immediate societal impacts.

Binary Interpretation of Morality in AI: Cameron presents morality in stark terms, yet AI’s alignment does not necessarily need to mirror human contradictions directly. Building on his points, however, we could consider whether hybrid models that incorporate ethical oversight boards or collective input from diverse groups could mitigate these concerns.

Emphasis on AGI as an Existential Risk: Cameron’s narrative implies that AGI could eventually govern human affairs, yet this presumes a relatively uncontested AGI development pathway. In reality, regulatory frameworks, international treaties, and ethical safeguards are gradually shaping how AGI might be developed and integrated into society.

In essence, Cameron’s message is a valuable contribution to the AI ethics dialogue, highlighting the importance of proactively addressing the ethical and governance challenges AI and AGI present. His points encourage a broader discussion on embedding ethics, national and corporate alignment, and human control in the next frontier of AI.

[Tyrell Corporation]

Claude’s answer:

I'll share my analysis of James Cameron's key arguments about AI and robotics, particularly focusing on autonomous weapons systems and AGI:

Regarding his stance on near-term AI and robotics:

I agree with his pragmatic view that AI advancement can't be stopped ("no genie goes back in the bottle")

His point about AI potentially being more precise and less emotionally compromised than humans in battlefield situations raises important considerations

However, I'm somewhat skeptical of the argument that AI weapons would necessarily reduce civilian casualties - the complexity of real-world combat situations might pose challenges even for advanced AI systems

On the ethical implications of autonomous weapons:

Cameron makes a compelling point about the "ethical chain" in current military operations and how AI could disrupt this

His observation about wars potentially becoming more palatable to the public when fought by robots is particularly insightful and concerning

The argument about being forced to develop autonomous weapons because adversaries will do so anyway is a classic security dilemma, though I think it deserves more scrutiny

Regarding AGI and alignment:

I agree with his skepticism about simple solutions to the alignment problem

His critique of trying to implement Asimov's Three Laws is well-reasoned - these seemingly clear rules become very complex in real-world situations

The point about corporate control of AGI development raises valid concerns about democratic oversight and accountability

One area where I might diverge from Cameron's view is his somewhat deterministic perspective on AGI inevitably leading to conflict. While the risks he identifies are serious, I believe there might be paths to developing AGI that include robust safety measures and international cooperation.

His final point about AGI being a mirror of human nature is particularly thought-provoking - it suggests that addressing AI safety might require us to first address our own societal contradictions and moral uncertainties.